Slashdot Cell article:

-------------------------

The Cell is just a PowerPC with some extra vector processing.

Not quite. The Cell is 9 complete yet simple CPUs in one. Each handles its own tasks with its own memory. Imagine 9 computers, each with a really fast network connection to the other 8. You could probably treat the small ones as extra vector processors, but you'd then miss out on a lot of potential applications. For instance, the small processors can talk to each other directly rather than work with the PowerPC at all.
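To make the "9 computers on a fast network" picture concrete, here is a rough Python sketch. It is a toy model only, not Cell code: threads stand in for the small processors and queues stand in for the links between them. The names `stage` and `run_pipeline` are made up for illustration. The point is that each worker hands its result straight to the next worker, and the main program only feeds the front and drains the back.

```python
import queue
import threading


def stage(fn, inbox, outbox):
    """One worker: pull an item, transform it, pass it to the next worker."""
    while True:
        item = inbox.get()
        if item is None:
            # Sentinel: forward the shutdown signal and stop.
            outbox.put(None)
            return
        outbox.put(fn(item))


def run_pipeline(fns, items):
    """Chain one worker per function (hypothetical helper, illustration only).

    The caller touches only the first and last queue; every hop in
    between is worker-to-worker.
    """
    queues = [queue.Queue() for _ in range(len(fns) + 1)]
    workers = [
        threading.Thread(target=stage, args=(fn, queues[i], queues[i + 1]))
        for i, fn in enumerate(fns)
    ]
    for w in workers:
        w.start()

    for item in items:
        queues[0].put(item)
    queues[0].put(None)

    results = []
    while True:
        out = queues[-1].get()
        if out is None:
            break
        results.append(out)

    for w in workers:
        w.join()
    return results
```

Calling `run_pipeline([f, g], data)` applies `f` then `g` to every item, with `f` and `g` running on separate workers. Treating the small processors purely as vector units would be the equivalent of never chaining them like this.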

Sony will have to sell the PS3 at an incredible loss to make it competitive.

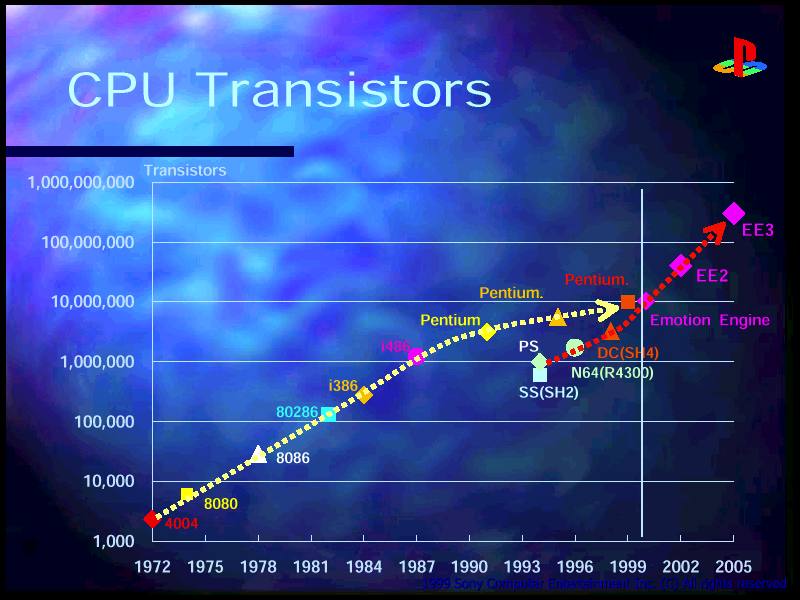

Hardly. Sony is following the same game plan as they did with their Emotion Engine in the PS2. Everyone thought that they were losing 100 to 200 bucks per machine at launch, but financial records have shown that, leaving aside the initial R&D (the cost of which is hard to figure out), they were only selling the PS2 at a small loss initially, and were breaking even by the end of the first year. By fabbing their own units, they took a huge risk, but they reaped huge benefits. Their risk and reward are roughly the same now as they were then.

Apple is going to use this processor in their new machine.

Doubtful. The problem is that though the main CPU is PowerPC-based like current Apple chips, it is stripped down, and the AltiVec support will be much weaker than in current G5s. Unoptimized, Apple code would run like it was on a G4 on this hardware. They would have to commit to a lot of R&D for their OS to use the additional 8 processors on the chip, and redesign all their tweaked AltiVec code. It would not be a simple port. A couple of years to complete, at least.

The parallel nature will make it impossible to program.

This is half-true. It will be hard, but most game logic will run on the traditional PowerPC part of the Cell, so that code is programmed the normal way. The difficult part will be concentrated in specific algorithms, like a physics engine or certain AI. Because that code is modular, you could buy a physics engine already designed to fit into the 256 KB local store of a subprocessor and add the hooks into your code. Easy as pie.
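For a feel of what "designed to fit into the subprocessor's memory" means in practice, here is a small Python sketch. Everything in it is an assumption for illustration: the function names, the one-dimensional (position, velocity) bodies, and the byte sizes are invented, and the memory budget is a parameter rather than a real hardware figure. The idea is simply that the engine cuts the world into batches that fit the budget and runs the same small kernel on each batch.

```python
def split_for_local_store(items, item_bytes, budget_bytes):
    """Yield slices of `items` that each fit within `budget_bytes`."""
    per_chunk = budget_bytes // item_bytes
    if per_chunk < 1:
        raise ValueError("a single item does not fit in the budget")
    for start in range(0, len(items), per_chunk):
        yield items[start:start + per_chunk]


def integrate(chunk, dt):
    """Stand-in physics kernel: one Euler step on (position, velocity) pairs."""
    return [(pos + vel * dt, vel) for pos, vel in chunk]


def step_world(bodies, dt, item_bytes, budget_bytes):
    """Advance every body by one step, one budget-sized batch at a time."""
    out = []
    for chunk in split_for_local_store(bodies, item_bytes, budget_bytes):
        # On real hardware each batch would be shipped to a subprocessor;
        # here it is just processed in order.
        out.extend(integrate(chunk, dt))
    return out
```

The game code only ever calls something like `step_world`; the batching is the engine vendor's problem, which is why the hard part stays contained.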

The Cell will do the graphics processing, leaving only rasterization to the video card.

Most likely false. The high-end video cards coming out now can process the rendering chain about as fast as the Cell can, going by the raw specs of 256 GFLOPS for the Cell as opposed to about 200 GFLOPS for video cards. In two years, video cards will be capable of much more, and they are already optimized for this where the Cell is not, so video cards will perform closer to their theoretical limits.

The OS will handle the 8 additional vector processors so the programmer doesn't need to.

Bwahahaha! No way. This is a delicate bit of coding that is going to need to be tweaked by highly-paid coders for every single game. Letting an OS predictively determine what code needs to get sent to what processor to run is insane in this case. The cost of switching out instructions is going to be very high, so any switch will need to be carefully considered by the designer, or the frame-rate will hit rock-bottom.
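A back-of-the-envelope way to see why each switch has to be budgeted, sketched in Python. The functions and every number you feed them are hypothetical; nobody outside the labs has real switch costs yet. It just adds up the arithmetic: every code swap on a subprocessor eats a fixed slice of the frame.

```python
def frame_time_ms(work_ms, switch_ms, switches_per_frame):
    """Total frame time: useful work plus the overhead of every code swap."""
    return work_ms + switch_ms * switches_per_frame


def max_switches(frame_budget_ms, work_ms, switch_ms):
    """Most swaps per frame that still fit the budget.

    Returns 0 when the work alone already overruns the budget.
    """
    spare = frame_budget_ms - work_ms
    if spare < 0:
        return 0
    return int(spare // switch_ms)
```

With made-up figures of 10 ms of work, a 16 ms frame and 0.5 ms per swap, `max_switches(16.0, 10.0, 0.5)` gives 12. A scheduler that guesses wrong and swaps more often than that drops frames, which is why the designer, not the OS, ends up making the call.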

The Cell chip is too large to fab efficiently.

This is one myth that could be correct. The Cell is huge (relatively), and given IBM's problems in the recent past with making large, fast PowerPC chips, it's a huge gamble on the part of all parties involved that they can fab enough of these things.