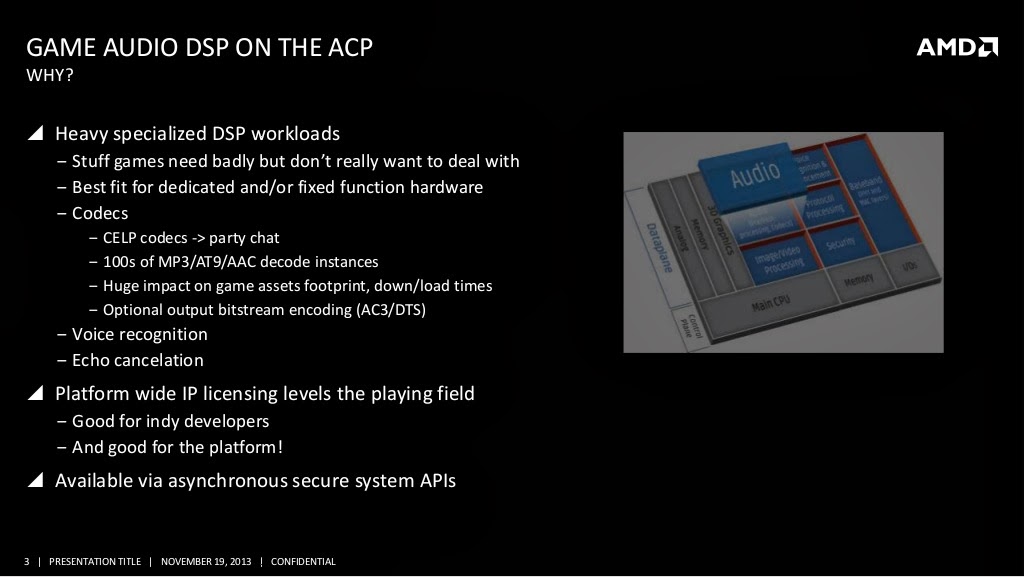

TrueAudio is not the same thing as what is implemented in the consoles, in terms of who can access it and the mix of resources and IP. The shared base idea is that there is a DSP block, but AMD's TrueAudio seems like a vanilla implementation that exists in the absence of a specific problem it is meant to solve.

The presentation containing the slide you attached had a follow-up slide about the (significant) drawbacks of running audio on the ACP, some of which differ from the drawbacks TrueAudio has.

Slide 4 of the following:

- Exotic hardware and dev environment
  - Closed to games
  - Closed to middleware
  - Platform specific
- Asynchronous interface
  - Can't have sequential interleaving of DSP back and forth between CPU and ACP w/o latency buildup
  - But ultimately, we want the DSP pipeline to be data driven (by artists who know nothing about this)
  - Modularity
- Slow clock rate @ 800MHz, very limited SIMD and no FP support
  - Tough sell against Jaguar for many DSP algorithms
  - Very tight local memory shared by multiple DSP cores
- Already pretty busy with codec loads and system tasks
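To make the slide's "latency buildup" point concrete, here is a toy model. It assumes, hypothetically, that every hand-off across the asynchronous CPU/ACP interface costs one audio frame, and it uses an assumed 256-sample frame at 48 kHz. Neither number comes from the slide, and the names `crossings` and `added_latency_ms` are illustrative only.

```python
# Toy model (hypothetical): each hand-off between processors over the async
# CPU<->ACP interface is assumed to return its result one audio frame later.
FRAME_SAMPLES = 256      # assumed frame size, not from the slide
SAMPLE_RATE_HZ = 48_000  # assumed output sample rate


def crossings(stages):
    """Count hand-offs between processors in an ordered list of stage locations."""
    return sum(1 for a, b in zip(stages, stages[1:]) if a != b)


def added_latency_ms(stages, frames_per_crossing=1):
    """Latency added by hand-offs, assuming a fixed number of frames per crossing."""
    frame_ms = 1000.0 * FRAME_SAMPLES / SAMPLE_RATE_HZ
    return crossings(stages) * frames_per_crossing * frame_ms


# The same five DSP stages, ordered two different ways.
interleaved = ["cpu", "acp", "cpu", "acp", "cpu"]
grouped = ["cpu", "cpu", "cpu", "acp", "acp"]

print(crossings(interleaved), round(added_latency_ms(interleaved), 2))  # 4 21.33
print(crossings(grouped), round(added_latency_ms(grouped), 2))          # 1 5.33
```

Under a fixed per-hop cost, added latency scales with the number of processor transitions rather than the number of stages. A data-driven chain that artists reorder freely can therefore silently pick up extra frames, while grouping the offloaded stages keeps it to a single hop.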

Unlike TrueAudio, the ACP is more closed off and dedicated to Sony's system services.

Even in this constrained case, using the ACP for audio injects complexity, since work has to be transferred to it and another interface needs to be managed.

The PC environment frequently has a vast reservoir of CPU power, whereas on the console even Jaguar is often enough to beat the ACP.

The ACP is hidden behind a secure API (per your slide), which, according to the presentation that used this slide deck, added a frame of audio latency. On top of that, the ACP dedicates much of its limited resources to codec work and system services.

The payoff for all these restrictions is that the ACP serves a purpose for Sony, so it gets used.

If a DPU is added to an SoC and its output is essentially fed directly into the graphics pipeline, the GPU domain is very accommodating and can readily envelop a custom microprocessor or controller inside its borders.