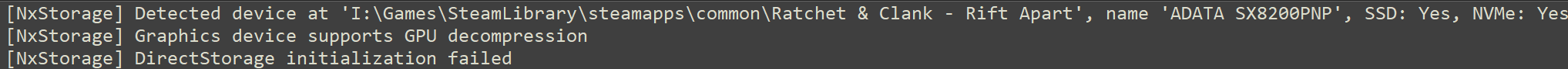

Maybe a stupid question, but if you delete the DirectStorage DLLs, aren't the DLLs from System32 then being used instead?

The other thing might be that if DirectStorage GPU decompression is used, the GPU has more to do. That could have a negative impact when your CPU is mostly idle anyway (after all, the PS5 CPU isn't that great) and your SSD already provides enough read speed. The old way might simply have had more to load, but since bandwidth was not the problem here and the CPU has enough headroom, GPU decompression is just extra work for the GPU.
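To make that reasoning a bit more concrete, here is a rough toy model in Python. It is only a sketch: the function name and all the millisecond values are made up for illustration, not measurements, and it assumes a frame simply takes as long as the slower of the CPU and GPU, with decompression time added on top of whichever side does it. Real GPUs can overlap some of this work with rendering, so treat it as a worst case.

```python
def frame_time_ms(gpu_render_ms, cpu_game_ms, decompress_ms, on_gpu):
    """Toy model: the frame takes as long as the busier processor.

    All inputs are hypothetical per-frame costs in milliseconds.
    Decompression cost is added to the GPU if on_gpu is True,
    otherwise to the CPU.
    """
    gpu = gpu_render_ms + (decompress_ms if on_gpu else 0.0)
    cpu = cpu_game_ms + (0.0 if on_gpu else decompress_ms)
    return max(gpu, cpu)


# Hypothetical GPU-bound scene: GPU needs 16 ms, CPU only 8 ms,
# decompression costs 2 ms wherever it runs.
cpu_path = frame_time_ms(16.0, 8.0, 2.0, on_gpu=False)
gpu_path = frame_time_ms(16.0, 8.0, 2.0, on_gpu=True)

print(f"CPU decompression: {cpu_path} ms per frame")
print(f"GPU decompression: {gpu_path} ms per frame")
```

With these made-up numbers the CPU path hides the decompression cost in idle CPU time, while the GPU path makes the already-busy GPU slower. In a CPU-bound scene the result would flip.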
