Ratchet & Clank technical analysis *spawn

- Thread starter Inuhanyou

davis.anthony

Veteran

What surprises me most about this patch is the confirmation that AMD GPUs still don't have RT support. It'd be understandable if the game only supported RT on the GPUs that are best at it, which are Nvidia's, but it supports RT on Intel Arc GPUs and runs fine there, so something is amiss here.

To be fair, architecturally Arc is much better at RT than RDNA2/3.

I think there's a fairly reliable way to check for this. I wrote earlier, in this post here, about how BypassIO makes disk activity invisible when reading DirectStorage data. Sure enough, that's exactly what happens when those DLLs are present in the game's folder: no disk activity shows up except for non-DS data. When I remove those files, all of those disk accesses become plainly visible. (This of course assumes that removing these DLLs actually removes DirectStorage support from the game entirely. It's possible an earlier version of DS without GPU decompression sits in the Windows system folders and the game just defaults to that. Dunno.)

This happens solely because I have BypassIO enabled. If I disable that in Windows' registry then those disk accesses remain visible both with and without those DLLs.

Silent_Buddha

Legend

Uh, why was NXG brought into the DF thread again? Isn't there another thread for that?

Regards,

SB

Panino Manino

Newcomer

There are people talking about a "cap" that may be causing the PC to lose to the PS5 in loading speed.

Isn't this difference caused by the PS5's I/O? That thing is very powerful.

FSR loses again.

This is another case where I ask: where is AMD? This game was originally made for their hardware and runs beautifully there, but now it arrives on PC with things not working on their GPUs? No RT? How is this possible? And the FSR version included is not the current one.

Shouldn't and couldn't AMD do more to prevent these recurrent embarrassments?

davis.anthony

Veteran

There are people talking about a "cap" that may be causing the PC to lose to the PS5 in loading speed.

Isn't this difference caused by the PS5's I/O? That thing is very powerful.

DirectStorage tests have shown it can do over 30GB/s of throughput, so well above what the PS5 can manage.

A cap makes sense given the variability of storage speeds available on PC, as it would allow a consistent gameplay experience regardless of drive speed.

The 1 second slower loading might not even be I/O related. It could be the time it takes for the CPU or GPU to initialise something that has higher latency than the same task on PS5, thus requiring a slight delay in loading to compensate.

What surprises me most about this patch is the confirmation that AMD GPUs still don't have RT support. It'd be understandable if the game only supported RT on the GPUs that are best at it, which are Nvidia's, but it supports RT on Intel Arc GPUs and runs fine there, so something is amiss here.

To be fair, it's not that AMD GPUs don't support RT in this game currently. There's simply a bug that crashes the game when dynamic resolution is used with RT on, so it's disabled for the time being.

DirectStorage tests have shown it can do over 30GB/s of throughput, so well above what the PS5 can manage.

A cap makes sense given the variability of storage speeds available on PC, as it would allow a consistent gameplay experience regardless of drive speed.

The 1 second slower loading might not even be I/O related. It could be the time it takes for the CPU or GPU to initialise something that has higher latency than the same task on PS5, thus requiring a slight delay in loading to compensate.

I think the cap makes more sense as a way to ensure there will be enough bandwidth while not needlessly dropping GPU performance further during decompression.

I'mDudditz!

Newcomer

There are people talking about a "cap" that may be causing the PC to lose to the PS5 in loading speed.

Isn't this difference caused by the PS5's I/O? That thing is very powerful.

It really has proven to be exceptional and represents a paradigm shift in how games will be created in the future.

Flappy Pannus

Veteran

Who are you trying to convince with these flawed arguments? You were flat out wrong about DLAA, which you've just glossed over. You haven't even attempted to address Flappy's point about the big performance drop-off if not resetting the PC between resolution changes (here's more info on the impact that can have btw). A

Yeah, people need to be aware that there's currently an issue with changing settings and not reloading the game. During my testing of DirectStorage on/off I also tested whether it had an effect on an HDD, and before that I was tweaking some graphics settings like ray tracing on/off. When I loaded into a save, I was getting massive, constant hard stutters. I was about to post "Welp, it looks like DS actually hugely benefits latency on slower storage!" until I decided to re-test by relaunching the game. Perfectly smooth.

I do note he claims the PS5 is also running at a higher average resolution; however, this ignores the higher performance penalty (with correspondingly higher image quality) of DLSS vs IGTI. Ultimately, if the 2070 is outputting as good or better image quality thanks to DLSS despite a lower internal resolution, then that's what matters from the end-user perspective. It's doing so by leveraging an architectural advantage that it holds over the PS5, which I would certainly consider fair in a game that was literally built from the ground up to take full advantage of the PS5 architecture and then shoehorned onto the PC.

I've noticed this in his videos - DLSS might as well not exist in terms of it actually being analyzed. It's fine to mention what relative raster performance is between the two platforms, but there's almost never any actual analysis of image quality by examining one of the most prominent features brought to PC gaming in the past decade. For videos that center around comparing the efficiencies of two different architectures, not examining the efficiency of a particular architectural feature of one is...odd.

Panino Manino

Newcomer

DirectStorage tests have shown it can do over 30GB/s of throughput, so well above what the PS5 can manage.

A cap makes sense given the variability of storage speeds available on PC, as it would allow a consistent gameplay experience regardless of drive speed.

The 1 second slower loading might not even be I/O related. It could be the time it takes for the CPU or GPU to initialise something that has higher latency than the same task on PS5, thus requiring a slight delay in loading to compensate.

That's what I mean when I say I think the PS5's I/O chip is making the difference: it's not a cap, and it's not any limitation on transfer speed on the PC. It's just that the PS5's I/O can handle the transferred data faster than current PCs. So there's nothing wrong or in need of improvement; we're just seeing what the best PC can do right now.

Flappy Pannus

Veteran

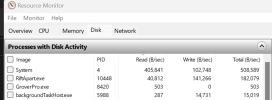

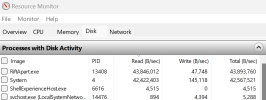

I think there's a fairly reliable way to check for this. I wrote earlier, in this post here, about how BypassIO makes disk activity invisible when reading DirectStorage data. Sure enough, that's exactly what happens when those DLLs are present in the game's folder: no disk activity shows up except for non-DS data. When I remove those files, all of those disk accesses become plainly visible.

This happens solely because I have BypassIO enabled. If I disable that in Windows' registry then those disk accesses remain visible both with and without those DLLs.

With DirectStorage DLLs loaded:

Without DS DLLs, same scene:

That's what I mean when I think the IO chip on the PS5 making the difference, it's not a cap...

How do you know it's not a cap? What evidence points to the limitation being processing, or IO transaction rate, or something specific to the PS5's IO chain, rather than a minimum time limit imposed in the game to, say, ensure a decent transition animation regardless of drive speed so that the game always looks nice in play?

Do the results so far show CPU, GPU, RAM bandwidth, or anything else being saturated during load?
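To make the "minimum time limit" idea concrete, here's a minimal sketch of how such a floor would behave. This is purely an assumption for illustration: nothing in the thread confirms the game works this way, and the function names and the timings in the usage note are made up.

```python
def transition_time(load_seconds: float, min_seconds: float) -> float:
    """Time spent in a transition if the game enforces a minimum duration.

    Hypothetical model: the transition lasts as long as the load takes,
    but never less than the floor.
    """
    return max(load_seconds, min_seconds)


def looks_capped(measured_seconds, tolerance=0.05):
    """True if all measured times fall within `tolerance` seconds of each other.

    Identical times across very different hardware would hint at a floor
    rather than a hardware bottleneck.
    """
    return max(measured_seconds) - min(measured_seconds) <= tolerance
```

With a floor of 1.0 s, raw loads of 0.3 s, 0.6 s and 0.9 s would all come out as 1.0 s, so `looks_capped` would return `True`. If faster drives or CPUs did shorten the measured time, that would argue against a cap.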

With DirectStorage DLLs loaded:

View attachment 9300

Without DS DLLs, same scene:

View attachment 9302

Thanks, this somewhat supports it but it's more revealing if you open the window below that one. The one labeled 'Disk Activity' rather than 'Processes with Disk Activity'.

If you revisit my screenshots then you'll note that the first screenshot only shows the executable and some DLLs being accessed while the latter screenshot is filled with more typical game data files like textures/models/etc.

Flappy Pannus

Veteran

Thanks, this somewhat supports it but it's more revealing if you open the window below that one. The one labeled 'Disk Activity' rather than 'Processes with Disk Activity'.

If you revisit my screenshots then you'll note that the first screenshot only shows the executable and some DLLs being accessed while the latter screenshot is filled with more typical game data files like textures/models/etc.

With DS:

Without:

Thanks again, that's the very same behavior that I see and suggests that DirectStorage is genuinely being disabled.

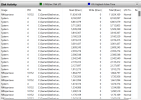

That's actually very interesting, and I stand corrected on the "PCIe 3.0 being enough" argument, at least in that portal segment. It seems to exceed the read speed of PCIe 3.0 on several occasions, although notably it's nowhere near PCIe 4.0 limits. This could certainly impact Turing-class GPUs during that sequence and result in some pretty heavy frame drops. That said, this sequence is far from representative of the game's average gameplay, where I doubt PCIe 3.0 would prove to be a limiting factor. So this is quite a good example of why using that sequence to judge the average performance of Turing GPUs in this game, especially using 1% lows, is a pretty terrible idea.
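For reference, here's a rough sketch of the theoretical PCIe ceilings being compared. It uses the published per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0, both with 128b/130b encoding). The function name is made up for illustration, and since the post doesn't say whether the x4 SSD link or the x16 GPU link is meant, both are shown. Real-world throughput lands somewhat below these figures because of protocol overhead.

```python
# Per-lane transfer rates in GT/s; both generations use 128b/130b encoding.
GT_PER_S = {"3.0": 8.0, "4.0": 16.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_gb_per_s(gen: str, lanes: int) -> float:
    """Theoretical one-way PCIe bandwidth in GB/s, before protocol overhead."""
    bits_per_second = GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes  # Gbit/s
    return bits_per_second / 8  # bits to bytes


for gen in ("3.0", "4.0"):
    for lanes in (4, 16):
        print(f"PCIe {gen} x{lanes}: {pcie_gb_per_s(gen, lanes):.2f} GB/s")
```

This gives roughly 3.94 GB/s (3.0 x4), 15.75 GB/s (3.0 x16), 7.88 GB/s (4.0 x4) and 31.51 GB/s (4.0 x16) as upper bounds to compare the observed figures against.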

"People don't seem to have grasped the storage situation with Ratchet and Clank..."

" I think the thing people should consider is that there's more to data access speed than just the drive that the game is stored on..."

In other words, all of these DirectStorage drive speed benchmarks are really a moot point when considering the application of I/O in actual games.

Fantastic insights from DF here.

I'm responding to this one here to keep the R&C technical discussion in one place.

So what Rich says here is obviously correct. But what I want to know is where you think the bottleneck on PC lies?

The DS benchmarks are not "drive speed" benchmarks. They are end to end data throughput benchmarks. They measure drive speed, decompression speed, and indirectly, bus capacity.

What they don't really measure is CPU limits because those benchmarks are very light on the CPU.

That's why I initially suspected the CPU might be the bottleneck on PC. But we've now seen, thanks to Alex, that the CPU makes no difference beyond a base performance level. Neither does GPU performance (i.e. decompression speed), RAM speed, PCIe width, etc.

So where do you think the bottleneck is? What component of the PS5 IO chain do you specifically think is faster than what the PC is able to deliver? Personally I have no idea now. All the major components seem to have been ruled out, but I'm not convinced yet that it's a cap imposed in the game engine. If it were - it could have been done a lot better.
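One way to frame the bottleneck question is that end-to-end throughput can't exceed the slowest stage in the chain. Here's a minimal sketch of that reasoning. The stage names and GB/s values in the usage note are placeholders invented for illustration, not measurements from the game or from any benchmark, and the helper itself is hypothetical.

```python
from typing import Dict, Tuple


def bottleneck(stages_gb_per_s: Dict[str, float]) -> Tuple[str, float]:
    """Return the slowest stage and its throughput.

    Simplified model: stages run as a pipeline, so sustained end-to-end
    throughput is bounded by the minimum across stages.
    """
    name = min(stages_gb_per_s, key=stages_gb_per_s.get)
    return name, stages_gb_per_s[name]
```

For example, `bottleneck({"drive_read": 7.0, "gpu_decompress": 12.0, "pcie_bus": 31.5})` returns `("drive_read", 7.0)`. The puzzle in this thread is that varying each of these stages on PC doesn't seem to move the load time, which is what you'd expect if the limit sits somewhere this simple model doesn't cover (per-request latency, engine-side work, or a deliberate floor).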

That's actually very interesting, and I stand corrected on the "PCIe 3.0 being enough" argument, at least in that portal segment. It seems to exceed the read speed of PCIe 3.0 on several occasions, although notably it's nowhere near PCIe 4.0 limits. This could certainly impact Turing-class GPUs during that sequence and result in some pretty heavy frame drops. That said, this sequence is far from representative of the game's average gameplay, where I doubt PCIe 3.0 would prove to be a limiting factor. So this is quite a good example of why using that sequence to judge the average performance of Turing GPUs in this game, especially using 1% lows, is a pretty terrible idea.

Those figures are so high it's like the GPU is constantly swapping data across PCIe, or decompressing textures (from GDeflate), sending some back to DDR, then reading them back to render.

2 ~ 11 GB/s *transmit* from the GPU across PCIe (that's what GPU PCIE Tx means, right?)??

Could something be b0rked with the counters for DS? Wat is going on.
