PlayStation 4 (codename Orbis) technical hardware investigation (news and rumours)

- Thread starter Love_In_Rio

- Status

- Not open for further replies.

(((interference)))

Veteran

Is it true that GDDR5 is much higher latency than DDR3? Could that be an issue for Orbis games? (especially since it doesn't have low latency memory pools like ESRAM to compensate)

There's been some discussion about this in other threads, and the conclusion was that there isn't a significant difference.

Eh? Do you have a link to Sony’s Vita customization? I heard they tweaked the bandwidth and cache, but I haven’t heard anything about the media engine.

EDIT: I googled a bit. Can't find any official claim for "Vita Media Engine 2". Care to reveal more?

Found more Vita audio info.

http://www.develop-online.net/features/1513/Heard-About-PS-Vita

So what’s under the hood that you can talk about? What is it, in fact, capable of?

There’s two sides to the audio processing on PS Vita: the ARM processing and the codec engine processing.

The ARM side processing is also where the main game is processed, so it has been very important to keep CPU use to a minimum here.

The codec engine is where the serious audio processing happens – mixing, resampling, DSP processing, and such like. I can’t go into too much detail regarding the hardware, but suffice to say, it’s very powerful.

Developers are not allowed direct access to the codec engine, as with the PSP’s ‘Media Engine’. As such, we had to ensure that the design of Next-Gen Synth took this into consideration.

We needed to allow the synth to be configurable – synth DSP module routing and bus routing, for example – and we made sure that we’d include the high-priority DSP effects as standard, as it wouldn’t be possible for developers to write their own on the codec engine.

This is another major reason why we needed feedback from middleware developers at an early stage.

It’s probably worth noting that developers can just write their own synth on the ARM processors if they wish.

However, NGS’s use of the codec engine allows for far more processing to be available for game (graphics and AI) use.

Will it deliver PS3 quality audio? In terms of fidelity and scope?

It really depends what PS3 games with which you want to compare the audio capabilities of PS Vita and NGS. But the goal was to allow our own WWS PS3 titles to work on PS Vita with minimum changes.

In terms of power, games process hundreds of audio channels, as well as processing high quality reverbs, etc.

We consider that a "game voice" would have resampling, volume changing, filter and some kind of codec decoding all being active.

So when I say that NGS can process hundreds of audio channels, I mean real "game voice" audio channels.

The main audio difference is obviously that it's a portable unit with stereo output, rather than full-fat 7.1. But in terms of fidelity, I'd say it's up with that of PS3, and in terms of scope, I think that the synth design is actually more flexible, allowing for wider opportunities for creativity than that of PS3 in the longer term.

Of course, PS3 is a home console. It plugs into the mains and has a fan to keep it from overheating! PS Vita is a portable, battery powered unit and as such, you have to be sensible when making comparisons between the two.

I see it like this: if you used the whole PS3 to do something amazing with audio (whatever that would be), then yes, PS3 delivers more.

But if you consider what resources are normally available for game audio on a home console (memory, CPU use, DSPs), then without a doubt, PS Vita can deliver to that level.
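To make the interview's definition of a "game voice" a bit more concrete (codec decoding, resampling, a filter and a volume change all active at once), here is a minimal Python sketch of one such voice being rendered and mixed. None of this is NGS API: the function names, the raw 16-bit PCM "codec", the linear-interpolation resampler and the one-pole low-pass filter are all stand-ins chosen for illustration.

```python
from typing import List, Sequence


def decode_pcm16(raw: bytes) -> List[float]:
    """Toy 'codec' stage: little-endian signed 16-bit PCM -> floats in [-1, 1)."""
    return [
        int.from_bytes(raw[i:i + 2], "little", signed=True) / 32768.0
        for i in range(0, len(raw) - 1, 2)
    ]


def resample_linear(samples: Sequence[float], ratio: float) -> List[float]:
    """Resample by linear interpolation; ratio = input_rate / output_rate."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    if not samples:
        return []
    out: List[float] = []
    last = len(samples) - 1
    pos = 0.0
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = samples[min(i + 1, last)]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        pos += ratio
    return out


def one_pole_lowpass(samples: Sequence[float], alpha: float) -> List[float]:
    """y[n] = y[n-1] + alpha * (x[n] - y[n-1]); alpha = 1.0 passes the input through."""
    out: List[float] = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def apply_volume(samples: Sequence[float], gain: float) -> List[float]:
    """Scale every sample by a constant gain."""
    return [s * gain for s in samples]


def render_voice(raw: bytes, ratio: float, alpha: float, gain: float) -> List[float]:
    """One hypothetical 'game voice': decode -> resample -> filter -> volume."""
    decoded = decode_pcm16(raw)
    resampled = resample_linear(decoded, ratio)
    filtered = one_pole_lowpass(resampled, alpha)
    return apply_volume(filtered, gain)


def mix(voices: Sequence[Sequence[float]]) -> List[float]:
    """Sum voices sample by sample (up to the shortest one) and clamp to [-1, 1]."""
    if not voices:
        return []
    length = min(len(v) for v in voices)
    return [max(-1.0, min(1.0, sum(v[i] for v in voices))) for i in range(length)]
```

"Hundreds of channels" then means running something like `render_voice` hundreds of times per audio block before `mix`, which is the kind of load the interview says the codec engine takes off the ARM cores.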

PS3's Multistream audio SDK:

http://www.gamasutra.com/view/feature/132208/nextgen_audio_squareoff_.php?print=1

Jonathan Lanier: Several. First, the fact that the PS3 has HDMI 8-channel PCM outputs means that we could play all our audio in 5.1/7.1 on an HDMI system with no recompression, which sounds completely awesome. Second, for those without HDMI who must use bitstream audio, we had the ability to support DTS, which is very high fidelity.

Third, we are guaranteed that each PS3 has a hard drive, so we could dynamically cache important sounds and streams to the hard drive to guarantee full performance, even without requiring an installation. Fourth, the Blu-ray disc storage was immense, which meant that we did not have to reduce the sampling rate of our streaming audio or overcompress it, and we never ran out of space even given the massive amount of dialog in Uncharted (in multiple languages, no less).

Fifth, the power of the Cell meant that we had a lot of power to do as much audio codec and DSP as we needed to. Since all audio is synthesized in software on the PS3 with the Cell processor, there's really no limit to what can be done.

About how many simultaneous streams were used, and what techniques were used for transitions in the music?

JL: Up to 12 simultaneous streams were supported, of which 6 could be multichannel (stereo or 6-channel). Two multichannel streams were used for interactive music, each of which was 3-track stereo (i.e. 6-channel). A few additional multichannel streams were used for streaming 4-channel background sound effects. The remainder were used for streaming mono dialog and sound effects. All the streams were dynamically cached to the PS3's internal hard drive, which guaranteed smooth playback.

The music transitions were based on game events, such as changing tasks and/or completing tasks, as well as entering or exiting combat. Also, within a piece of music, we could dynamically mix the three stereo tracks in an interactive stream to change the music intensity based on the excitement level of the gameplay.
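As a rough sketch of the two ideas in Lanier's answer, the stream limits and the intensity-driven mix of three stereo tracks, the Python below checks a stream set against the quoted numbers and blends three stems by an excitement level. The 12/6 limits come from the interview; the gain curve in `stem_gains` is purely an assumption, since the interview doesn't say how the mix was actually computed, and all names are hypothetical.

```python
from typing import List, Sequence, Tuple

Frame = Tuple[float, float]  # one stereo sample: (left, right)

MAX_STREAMS = 12       # "Up to 12 simultaneous streams were supported"
MAX_MULTICHANNEL = 6   # "of which 6 could be multichannel"


def fits_stream_budget(channel_counts: Sequence[int]) -> bool:
    """True if the streams (given as channels per stream) fit the quoted limits."""
    multichannel = sum(1 for c in channel_counts if c > 1)
    return len(channel_counts) <= MAX_STREAMS and multichannel <= MAX_MULTICHANNEL


def stem_gains(intensity: float) -> List[float]:
    """Assumed mapping from excitement level in [0, 1] to three stem gains.

    The base stem always plays; the second fades in over the lower half of
    the range and the third over the upper half.
    """
    x = max(0.0, min(1.0, intensity))
    second = max(0.0, min(1.0, 2.0 * x))
    third = max(0.0, min(1.0, 2.0 * x - 1.0))
    return [1.0, second, third]


def mix_stems(stems: Sequence[Sequence[Frame]], gains: Sequence[float]) -> List[Frame]:
    """Weighted sum of stereo stems, frame by frame, up to the shortest stem."""
    if not stems:
        return []
    length = min(len(s) for s in stems)
    mixed: List[Frame] = []
    for i in range(length):
        left = sum(g * s[i][0] for s, g in zip(stems, gains))
        right = sum(g * s[i][1] for s, g in zip(stems, gains))
        mixed.append((left, right))
    return mixed
```

For example, two 6-channel music streams, three 4-channel ambience streams and seven mono streams come to 12 streams with 5 multichannel, which `fits_stream_budget` accepts.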

They both sound more interesting than AMD's integrated HD audio processor. Its highlights seem to be Dolby TrueHD and DTS-HD Master Audio, plus discrete multi-point audio output. The latter doesn't sound like a common use case.

Deleted member 7537

Guest

PS4 related?

http://www.theregister.co.uk/2013/05/01/amd_huma/

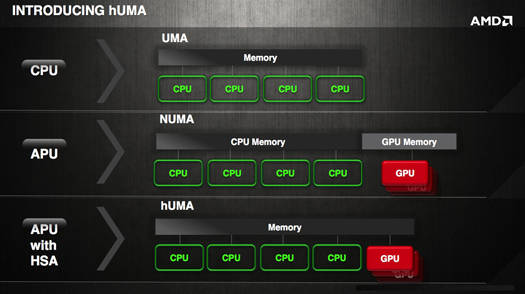

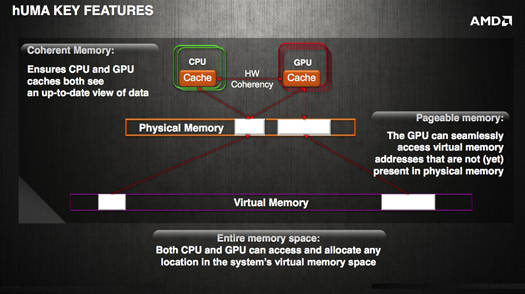

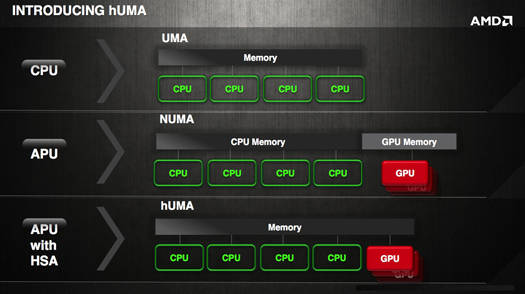

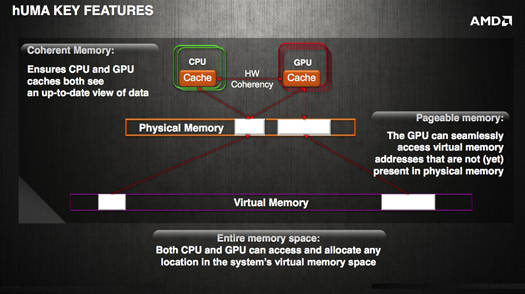

In fact, hUMAfication already appears to be on the way – and not necessarily where you might have first expected. AMD is supplying a custom processor for Sony's upcoming PlayStation 4

EDIT:

From Arstechnica forum (Blacken00100)

So, a couple of random things I've learned:

-The memory structure is unified, but weird; it's not like the GPU can just grab arbitrary memory like some people were thinking (rather, it can, but it's slow). They're incorporating another type of shader that can basically read from a ring buffer (supplied in a streaming fashion by the CPU) and write to an output buffer. I don't have all the details, but it seems interesting.

AMD seem to be looking for a more general solution where the CPU and GPU have full cache coherency anytime, anywhere.

I think Cerny's team came up with a different solution. The ring buffers and command queues may be coherent but the GPU can grab other data from the memory at "full speed" in a non-coherent fashion. The processors "just" synchronize their work with some concurrency control mechanism(s).
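The arrangement described above (the CPU streams data into a ring buffer, a shader reads from it and writes to an output buffer) can be sketched as a single-producer, single-consumer ring in Python. This is only an illustration of the data structure, not how Orbis actually does it: the class, its method names and the "doubling" consumer are made up, and real hardware would also need the coherency or synchronization that this single-threaded toy sidesteps.

```python
from typing import Any, Iterable, List, Optional


class RingBuffer:
    """Fixed-size single-producer / single-consumer ring buffer."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._slots: List[Any] = [None] * capacity
        self._capacity = capacity
        self._write = 0  # total items pushed; only the producer advances it
        self._read = 0   # total items popped; only the consumer advances it

    def __len__(self) -> int:
        return self._write - self._read

    def push(self, item: Any) -> bool:
        """Producer side: returns False (and stores nothing) when the ring is full."""
        if len(self) == self._capacity:
            return False
        self._slots[self._write % self._capacity] = item
        self._write += 1
        return True

    def pop(self) -> Optional[Any]:
        """Consumer side: returns None when the ring is empty."""
        if len(self) == 0:
            return None
        item = self._slots[self._read % self._capacity]
        self._read += 1
        return item


def stream_through(items: Iterable[int], capacity: int) -> List[int]:
    """Push items through a small ring; the 'shader' stand-in doubles each one."""
    ring = RingBuffer(capacity)
    pending = list(items)
    output: List[int] = []
    sent = 0
    while sent < len(pending) or len(ring) > 0:
        # Producer ("CPU"): keep feeding until the ring is full.
        while sent < len(pending) and ring.push(pending[sent]):
            sent += 1
        # Consumer (stand-in for the shader): drain and write to the output buffer.
        while len(ring) > 0:
            output.append(ring.pop() * 2)
    return output
```

The point of the structure is that only the two indices need to be shared between producer and consumer, which fits the guess that the ring buffers and command queues are coherent while bulk data is fetched non-coherently.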

It could be different, but I could see the PS4, AMD's next APU and the Xbox mostly using the same solution. In the latter two cases, though, it would not be exposed, though people working on the drivers and the driver/API (on the Xbox) could benefit from the feature.

If D. Kanter is right, Intel's latest APUs have a coherent view of the memory space, though it is not exposed in DirectX. Kanter hints that it should benefit the driver development teams.

I guess that's why Tim Lottes went gaga when Sony said developers will have low-level access. Then again, how low does Sony really want to let them go?

It is interesting that on the audio front, they may take the opposite direction.

Sony may use Vita's approach for audio processing. The Vita audio stack is designed to simplify porting vis-a-vis PS3. Developers don't have direct access to the audio processor (unlike PS3 SPUs). They provide a synth layer/module to enable routing, mixing, decoding and synthesizing streams. Base hardware is Wolfson Micro WM1803E. Can't find any info on the chip.

It is possible that the PS4 audio processor lies outside the APU.

They're possibly the same, but I can see areas where the consoles could have a less capable solution.

Giving the GPU the ability to page fault might not be as necessary for consoles, which can have their software environment start out with a default pinning of the memory outside of system reserved memory.

With low-level access, a game's streaming engines could be tasked with making sure everything needed is in memory.

The AMD slides also hint at probe filters and cache directories to maintain efficiency. This could be present for Orbis. The comparatively limited coherent bandwidth in Onion and Onion+ may mean Orbis uses a less capable version.

Or maybe MS's longer-term relationship with AMD allowed them to contract exclusive use of AMD's more forward-looking designs. And Sony basically went, "Pfft, we are a hardware company that has pretty decent knowledge of heterogeneous design (Cell)". So Sony just worked with AMD to come up with a custom alternative design to facilitate some of the desired features that current AMD APUs lack but that are planned for future processors.

That Sony received a patent on context switching encourages my belief that Sony spent time engineering workarounds.

Love_In_Rio

Veteran

This would have been quite dumb of Sony, and Dave Baumann already said the custom console APUs would leverage the 2013 designs.

True, but MS and/or Sony patents seem to describe some features that fall under AMD's bullet points for the 2014 roadmap (context switching, preemption and QoS). I think both APUs will extend beyond AMD's 2013 design, with MS making use of some of AMD's planned implementations while Sony makes use of alternative implementations that are a product of Sony's and AMD's collaboration.

I highly doubt that MS didn't try to restrain AMD in some fashion, just as I highly doubt that Sony didn't mandate that Cell couldn't show up in other consoles.

Gradthrawn

Veteran

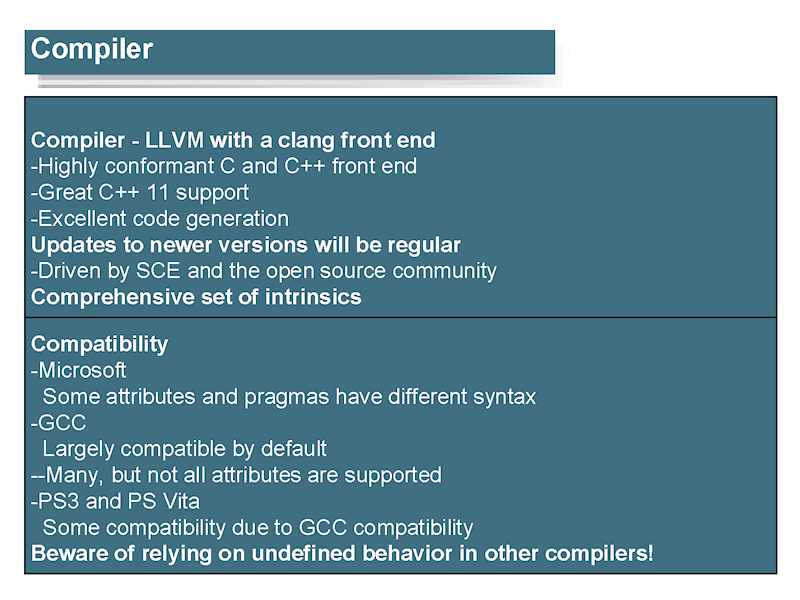

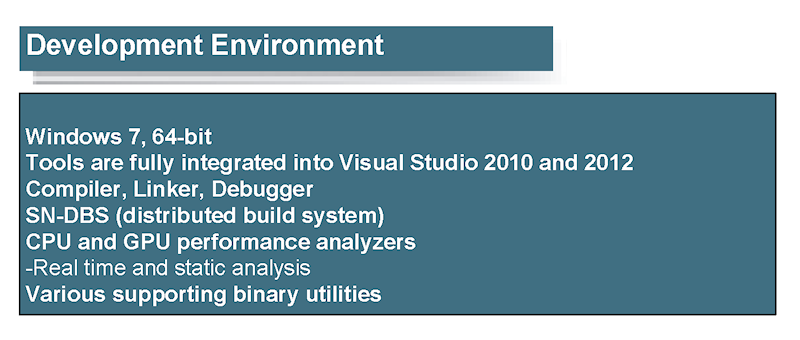

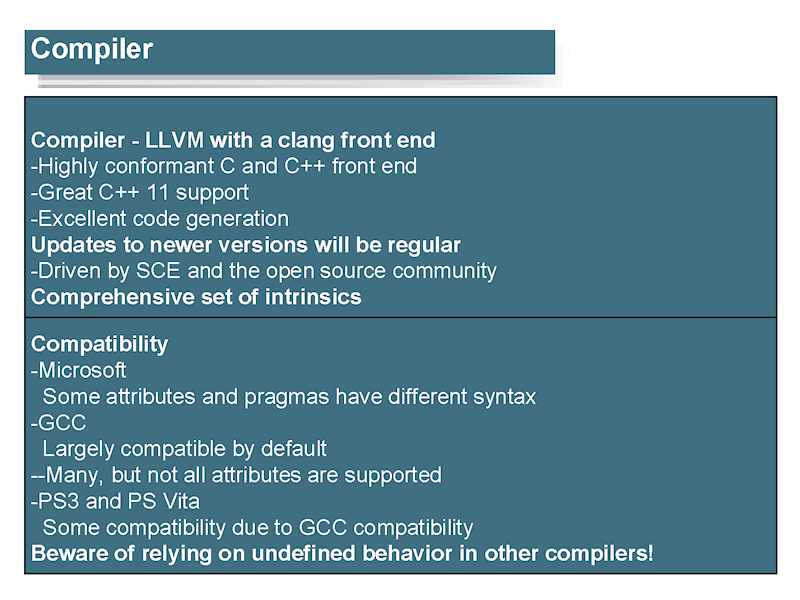

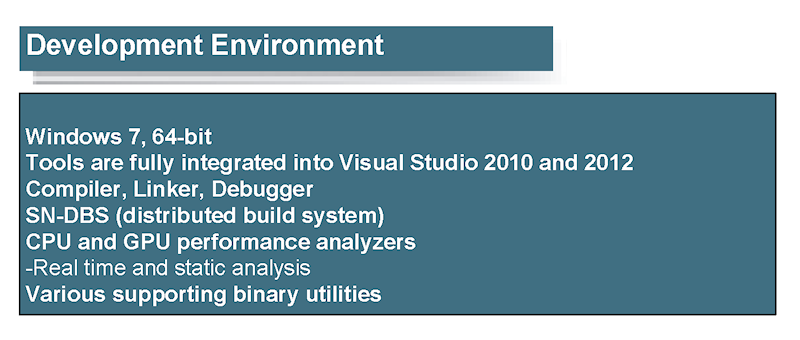

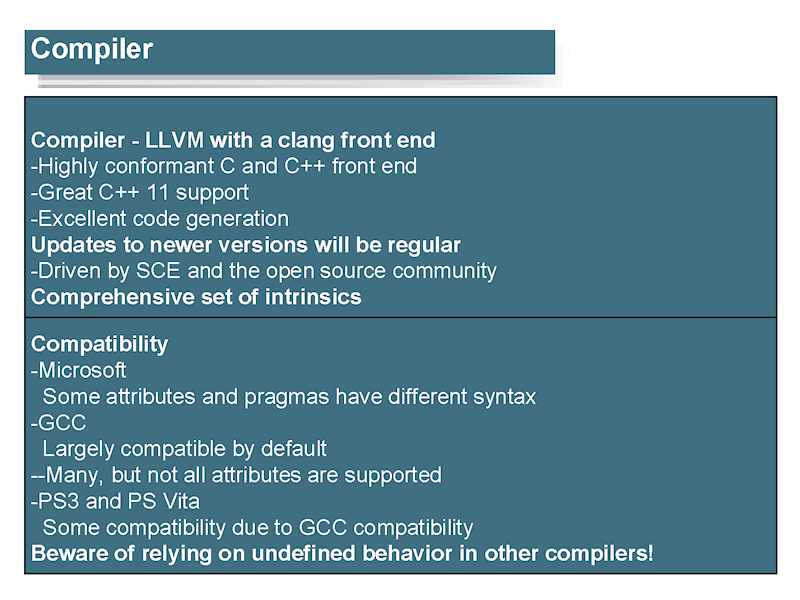

New Watch Impress speculative/investigative article about general-purpose computing on the GPU. It mentions PSSL along with the low-level and wrapper APIs (nothing new there). But it also mentions LLVM as a compiler, with apparently regular updates from SCE. I hadn't heard that mentioned before.

Yap, that would be my guess as well. AMD's requirements are quite different from Sony's.

Yeah, LLVM is expected these days.

What may be more interesting is Cerny's comments about using one language to implement both the CPU and GPU compute jobs.

EDIT:

On FreeBSD and LLVM: http://unix.stackexchange.com/questions/49906/why-is-freebsd-deprecating-gcc-in-favor-of-clang-llvm

I think one of the articles mentioned that Sony went ahead and rolled their own because AMD's solution wasn't ready yet. I suspect it's the scheduling and caching stuff.

Will be interesting to see their Southbridge and dedicated units too. They all need to fit together in a unified memory.

Gradthrawn

Veteran

I was thinking about this when I first saw Edge's translation of the recent Yoshida interview with 4Gamer.net, but I forgot to bring it up by the time I finished reading it. In it he mentions switching between a game and Netflix at will.

“In that case,” he said, “we need to make sure users consider the PS4 version to be the best and the one they want to play. That might mean that the graphics are better, the controller is more comfortable, or the point I mentioned earlier about the console being more user-friendly, like you don’t have to turn the power on and off or you can switch between your game and Netflix at will. Those things will become key.”

I thought this was quite strange, since I figured Netflix, like a game, would simply be considered a non-system application. Now, this could just be a misunderstanding on Yoshida's part. Based on the information we have directly from SCE so far, it is safe to say a certain amount of OS-level functions will be available while a full app is running. As such, OS video playback (directly from the CUX) may well be supported by using whatever reserved resources the OS has, while an active game is still in memory. Some confusion on his part may have stemmed from that.

Setting that aside, if his statement is accurate, this could imply a few things. We know some amount of memory is reserved for the OS and unavailable to running applications. Thus, if Netflix, in his example, were treated like a regular application, then launching it should boot any running game (or other app) from unreserved memory. Unless, that is, SCE is making some of the OS-reserved memory available to apps that meet certain performance and size requirements (they know how much they need for system-level functions, and make the rest available to qualified apps). Similarly, the originally rumored OS reservation may have been bumped up from 512 MB (unsupported rumors currently put it at 1 GB). Instead of reserving that extra space for the OS, it acts as a separate memory space for apps that meet certain performance requirements.

Of course, the problem with this theory is how resources outside of memory would be handled. We know the DCE can only handle 2 planes, with 1 reserved for the OS. So, as I understand it, there's no hardware-level combining/blending that can be done before dropping everything to the front buffer. Since there's a chance the game could be refreshing at framerate A, with the OS at B, and Netflix at C, some level of software scaling, blending, and combining would have to be done to account for this, wouldn't it (to ensure a responsive user experience)? That would likely have to be done by whatever resources are available to the OS, along with any additional GPU and audio (ACP) resources needed (which may very well be minimal for an app like Netflix, which would rely on the UVD).
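To illustrate the "framerate A, B and C" problem, here is a small Python sketch of what a software compositor has to decide at every display refresh: which frame of each layer is the newest one available, and how to blend the layers. The 60 Hz display and the 30/24 fps layer rates in the usage note are made-up numbers, and the function names are hypothetical; nothing here reflects how the PS4's display hardware or OS actually works.

```python
from typing import Dict, List


def source_frame_for_refresh(refresh_index: int, display_hz: int, source_fps: int) -> int:
    """Index of the newest source frame ready at the start of a display refresh.

    Integer math avoids floating-point drift over long runs.
    """
    return (refresh_index * source_fps) // display_hz


def composite_schedule(display_hz: int, layer_fps: Dict[str, int], refreshes: int) -> List[Dict[str, int]]:
    """For each display refresh, the frame index to sample from every layer."""
    return [
        {name: source_frame_for_refresh(r, display_hz, fps) for name, fps in layer_fps.items()}
        for r in range(refreshes)
    ]


def blend(under: float, over: float, alpha: float) -> float:
    """Simple 'over' blend of one colour channel, with alpha in [0, 1]."""
    return under * (1.0 - alpha) + over * alpha
```

With `composite_schedule(60, {"game": 30, "video": 24}, 4)`, the game layer advances every second refresh while the video layer advances on an uneven cadence, so the compositor ends up repeating frames per layer before blending them into a single plane.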

Regardless, it will be interesting to see how it all shakes out. I would really like to be able to swap between a game and media applications like Netflix, Hulu, Crunchyroll, etc., but I wasn't counting on it (not on the PS4 at least). Of course, there's also the possibility that games will drop into some sort of suspended state and cache their memory state to HDD (that could be a lot of data to try and write quickly, and then read back out again). Or something along the lines of what's done with the Vita. On the Vita, if you have a game running and open the browser, and you try to open a page it can't currently handle, it tells you which application you need to close (typically, or perhaps always, the game) to free up the resources it needs.
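The numbers behind both ideas are easy to put into a back-of-the-envelope Python sketch. The 512 MB and 1 GB reservation figures are the rumors mentioned above; the 8 GB total is the publicly announced PS4 memory size rather than something stated in this post, and the 100 MB/s sustained disk write speed is purely an assumption for illustration.

```python
TOTAL_MB = 8 * 1024          # announced PS4 memory size (not stated in the post)
RUMORED_RESERVED_MB = 1024   # "unsupported rumors currently at 1 GB"
ORIGINAL_RESERVED_MB = 512   # originally rumored OS reservation
ASSUMED_DISK_MB_PER_S = 100.0  # hypothetical sustained HDD write speed


def app_partition_mb(os_reserved_mb: int, os_needed_mb: int) -> int:
    """Memory left inside the OS reservation for 'qualified' apps, per the theory above."""
    if os_needed_mb > os_reserved_mb:
        raise ValueError("OS needs more than is reserved")
    return os_reserved_mb - os_needed_mb


def suspend_seconds(state_mb: float, disk_mb_per_s: float) -> float:
    """Time to write (or read back) a memory image of the given size."""
    if disk_mb_per_s <= 0:
        raise ValueError("disk throughput must be positive")
    return state_mb / disk_mb_per_s


game_state_mb = TOTAL_MB - RUMORED_RESERVED_MB
print(app_partition_mb(RUMORED_RESERVED_MB, ORIGINAL_RESERVED_MB), "MB left for qualified apps")
print(round(suspend_seconds(game_state_mb, ASSUMED_DISK_MB_PER_S), 1), "s to dump the game's memory")
```

Under those assumptions a full dump of the game's memory takes over a minute each way, which is why suspend-to-disk only seems practical if games use far less than the full space or only part of the state is written out.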

I would guess that when the game goes into suspend mode it frees up resources for the OS/Apps.
