DegustatoR

Legend

> Liquid cooling is pretty normal in data centre applications.

Was there a liquid cooled V100 DC SKU?

> Who does the binning for those, NVIDIA or the vendor?

The vendor; that SKU was never officially unveiled.

> Does the 500W version need new firmware? Or is the built-in boost capability of the processor enough to let it freewheel up to 500W?

The latter. There is a 250W version as well, but its boost is limited by power.

> Was there a liquid cooled V100 DC SKU?

Never heard of any.

> Was there a liquid cooled V100 DC SKU?

I don't know, to be honest. I don't understand why you're asking.

> The latter. There is a 250W version as well, but its boost is limited by power.

Might the 250W product also be binned that way? A lower-clocking die being binned for a lower performance SKU?

Liquid cooling is pretty normal in data centre applications.

PowerPoint Presentation (hpcuserforum.com)

Quoted simply because you joined the one-liner gang. Shame.

> 300 to 500W

We would prefer it if you actually know your info before spewing it like that.

> I don't know, to be honest. I don't understand why you're asking.

Because the comparison has to be apples to apples if we're looking at wattage between Volta and Ampere.

> Might the 250W product also be binned that way? A lower-clocking die being binned for a lower performance SKU?

It doesn't clock low, it reaches the same peak clocks, but the sustainable frequency drops depending on the load.

> It doesn't clock low, it reaches the same peak clocks, but the sustainable frequency drops depending on the load.

That's my point, in your example it uses more power, so at sustained workloads it clocks lower.
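To make the point above concrete, here is a rough toy model of a power-limited boost. It is only a sketch under loud assumptions: board power is taken to scale linearly with clock and with how heavy the load is (voltage scaling is ignored), and the 1400 MHz peak and 0.3 W/MHz figures are made-up illustration values, not measured numbers for any real SKU. The function name `sustained_clock_mhz` is hypothetical as well.

```python
def sustained_clock_mhz(peak_mhz, power_limit_w, watts_per_mhz, load):
    """Toy model: board power = watts_per_mhz * load * clock.

    Returns the highest clock that stays within the power limit,
    never exceeding the peak boost clock. All inputs are hypothetical.
    """
    if load <= 0:
        # An idle chip draws nothing in this model, so the peak clock is reachable.
        return peak_mhz
    power_capped_mhz = power_limit_w / (watts_per_mhz * load)
    return min(peak_mhz, power_capped_mhz)


# Assumed illustration values, NOT real specifications.
PEAK_MHZ = 1400.0
WATTS_PER_MHZ = 0.3

for limit_w in (250, 500):
    for load in (0.5, 1.0):
        clock = sustained_clock_mhz(PEAK_MHZ, limit_w, WATTS_PER_MHZ, load)
        print(f"{limit_w} W limit, load {load:.1f}: {clock:.0f} MHz")
```

In this model both power limits reach the same peak clock on a light load, and only the lower limit falls below peak once the load gets heavy, which is the behaviour being argued about here.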

> I don't know, to be honest. I don't understand why you're asking.

Is LC maybe more common for servers not equipped with accelerators? I think LC is not standard yet. It's not totally alien tech in the datacenter, but not commonplace either.

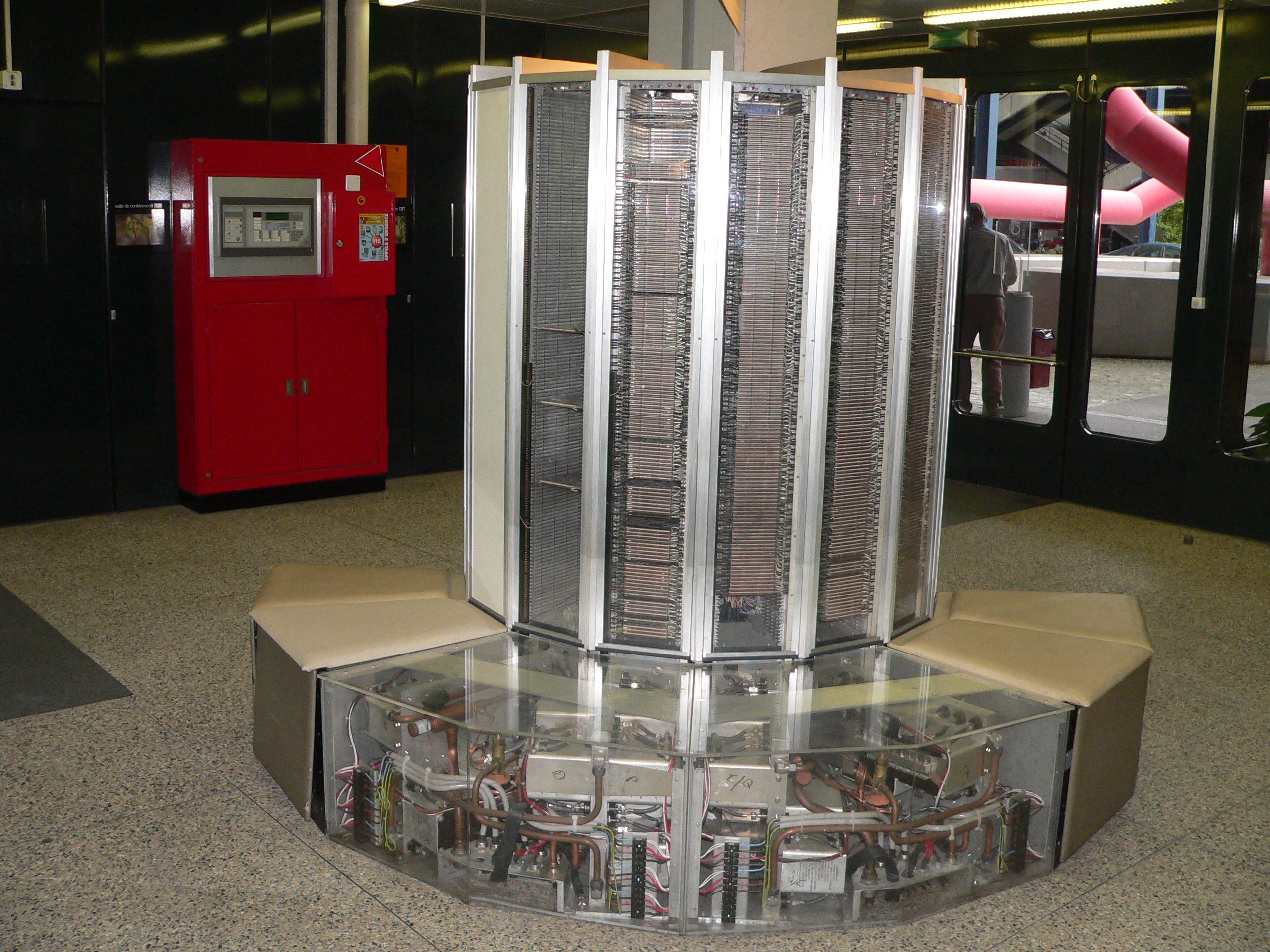

Cray-1 - Wikipedia

Key part of the design was the integrated liquid cooling

The history of liquid-cooled computers is long. It goes further back than this, but the Cray-1 is an icon.

You could say it's the last resort to use liquid cooling in a data centre, which is why it seems "abnormal". But there have been many periods over the past 60 years where it was crucial in getting something working. These were commercial systems, too, not just built for government laboratories and universities.

I think it's fair to say "accelerator cards" make it much more difficult to implement liquid cooling. Immersion cooling has been a thing for decades, too, which is a nice work-around for the physical difficulties associated with plumbing.

Google Brings Liquid Cooling to Data Centers to Cool Latest AI Chips (datacenterknowledge.com)

When competitors have been doing liquid cooling for a while, you gotta catch up, I suppose.

> The LC was because of their "design".

Enter 500W SXM4

Hot ECL CPUs... kind of no other option... if you did not want to sacrifice performance.

> the improved DL-optimized COPA-GPU utilization may lead to increased total design power that may not be entirely mitigated by the power reduction within the memory system

Ah, the ever-present but always forgotten silicon horror! If every chip were used to a full and complete 100% of its potential, we could say goodbye to all of our advancements in clock speeds; the chips would be so out of whack power-wise that any hope of maintaining even base clocks would be lost.

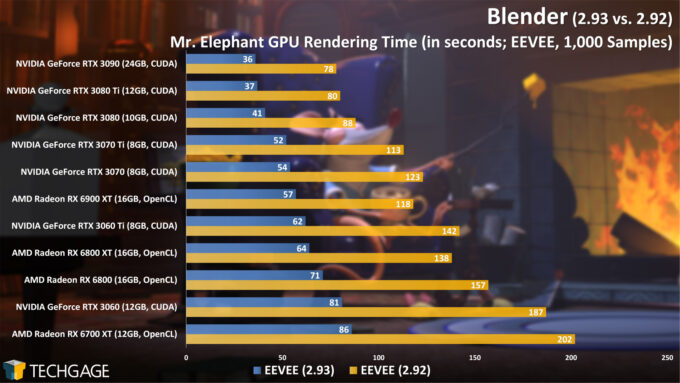

As we usually do, we’re diving into Blender 2.93’s performance in this article, taking a look at both rendering (to the CPU and GPU), and viewport performance (on the next page). We recently did a round of testing in Blender and other renderers for a recent article, but since that was published, we decided to redo all of our 2.93 testing on an AMD Ryzen 9 5950X platform to ensure that our CPU isn’t going to be a bottleneck for the viewport tests.

The EEVEE render engine doesn’t yet take advantage of OptiX accelerated ray tracing, so it gives us another apples-to-apples look at AMD vs. NVIDIA.

The most important part of the article is below:

On the topic of AMD, the de facto annoyance we’ve had when testing Blender the past year is seeing Radeon cards fall notably behind NVIDIA, even when ray tracing acceleration isn’t involved. The viewport results above can even highlight greater deltas between the two vendors than some of the renders do. Then there’s the issue of driver stability.

It was last fall, with our look at Blender 2.90, that some notable issues became more common in Blender with Radeon GPUs, and we’re not really sure that much has improved since then. In advance of any performance testing for a new article, we always ensure we have up-to-date drivers, but for Radeon, we couldn’t go that route this time. The recent 21.6.1 driver gives us errors in select renders, while the 21.6.2 driver has converted that error into a blue-screen-of-death.

There’s not really much more we can say here. We thoroughly test Blender, having done deep-dives for 2.80, 2.81, 2.83, 2.90, 2.91, 2.92, and of course, 2.93, and from our experience, you’ll have an easier and better time with NVIDIA if Blender is your primary tool of choice.

Last time I checked, CUDA and OpenCL aren't the same, so it's not apples-to-apples like they claim.