I think I prefer his free analysis, it's much more entertaining... It is, after all, a satire site, isn't it?

The schedule was obviously silly, and I don't know why Charlie expected the high end to go away in 2012, but he did get one thing right: low-end GPUs are dead. It took longer than a year or two, but it did happen. Obviously, any finite and ordered set of elements has a minimum, thus both AMD and NVIDIA still have "low-end" GPUs, but they're bigger (in mm²) and have wider buses than the low-end GPUs of yesteryear. If I'm not mistaken, NVIDIA's smallest desktop GPU for this generation is GM107, which is almost 150mm² and has a 128-bit bus.

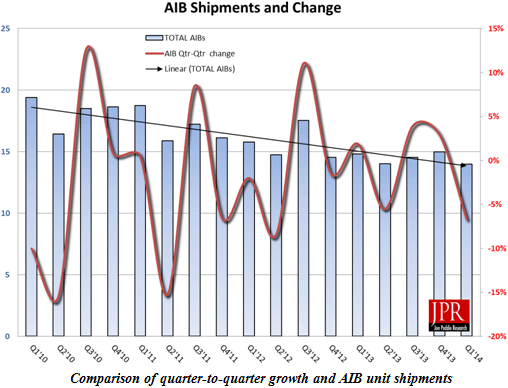

Beyond this, there are adverse trends for discrete GPUs. Attach rates are falling, in part due to the market's shift towards ever more mobile solutions, and thus towards more constrained form factors, but that's not all. If you look at Intel's APUs, for example (I'm using AMD's terminology here to refer to mainstream CPUs with integrated graphics), they have been "stuck" at 4 cores since Sandy Bridge. So that's Sandy Bridge, Ivy Bridge, Haswell, Broadwell, and almost certainly Skylake. There's nothing to suggest that the latter's replacement will have more CPU cores. In the meantime, processes have evolved from 32nm to 22nm and then 14nm. Once again, it's unlikely that future 10nm APUs will exceed 4 cores. The inevitable consequence of this is that the share of silicon real estate devoted to graphics increases with every generation, especially with shrinks. Meanwhile, discrete GPUs do not benefit from such increases in available space.
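To make the "share of silicon" argument a bit more concrete, here's a rough back-of-the-envelope sketch. Everything in it is a made-up assumption for illustration: the 200mm² die, the 100mm² taken by the four CPU cores at 32nm, and above all the idea that core area shrinks with the square of the node name (real processes scale worse than that, and a real die also carries cache, memory controllers and other uncore). The function name `non_cpu_share` is mine, not anything official.

```python
def non_cpu_share(die_mm2, cpu_mm2_at_ref, ref_nm, node_nm):
    """Fraction of a fixed-size die that is NOT taken by the CPU cores.

    Idealized assumption: the area of the (fixed) CPU core block scales
    with the square of the node name. Real shrinks are less generous.
    """
    cpu_mm2 = cpu_mm2_at_ref * (node_nm / ref_nm) ** 2
    return 1.0 - cpu_mm2 / die_mm2


# Hypothetical numbers: a 200 mm^2 die where four cores take 100 mm^2 at 32nm.
for node in (32, 22, 14):
    share = non_cpu_share(die_mm2=200.0, cpu_mm2_at_ref=100.0,
                          ref_nm=32, node_nm=node)
    print(f"{node}nm: {share:.0%} of the die is left for everything else")
```

With the core count frozen and the die size held constant, the leftover share can only go up with each shrink, and graphics is the obvious thing to spend it on. The exact percentages mean nothing; the direction is the point.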

Since the first APUs and, for the most part, up to this day, the major problem has been bandwidth. For an equal amount of graphics silicon and a similar power budget, discrete GPUs always do better because they have access to fast GDDR5, and sometimes to wider buses; plus they don't have to share their bandwidth with CPU cores. But I think 2016/2017 will be the real test for the future viability of discrete GPUs as a mainstream product. This is when I expect stacked memory to become viable and affordable enough to be featured on mainstream APUs (be it HBM, stacked eDRAM, HMC or whatever) and all of a sudden, the bandwidth advantage of discrete GPUs should vanish. At that point, even ~150mm² GPUs will be difficult to justify, and it's only at 200mm² or above that they will start making some sense.
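For a sense of scale on the bandwidth gap, peak memory bandwidth is just bus width times per-pin data rate. The sketch below applies that formula to two illustrative configurations; the specific data rates (5.0 GT/s for GDDR5, 1.6 GT/s for dual-channel DDR3-1600) are assumptions I picked as plausible round numbers, not measurements of any particular product, and the function name is hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits, data_rate_gt_s):
    """Theoretical peak bandwidth in GB/s: bytes per transfer x transfers/s."""
    return bus_width_bits / 8 * data_rate_gt_s


# Illustrative configurations (assumed figures, not product specs).
discrete = peak_bandwidth_gb_s(bus_width_bits=128, data_rate_gt_s=5.0)  # GDDR5
apu = peak_bandwidth_gb_s(bus_width_bits=128, data_rate_gt_s=1.6)       # 2 x 64-bit DDR3-1600

print(f"128-bit GDDR5 @ 5.0 GT/s : {discrete:.1f} GB/s")
print(f"Dual-channel DDR3-1600   : {apu:.1f} GB/s (shared with the CPU cores)")
print(f"Ratio                    : {discrete / apu:.1f}x")
```

Under those assumptions the small discrete card has roughly three times the peak bandwidth, and it doesn't have to share any of it with the CPU. That's the gap stacked memory would have to close.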

So, what does that mean for NVIDIA? If they can maintain their current (and huge) market share, and if they still dominate the professional market with Quadros and Teslas, they should be fine. But if their market share falls back to the 40~60% range and AMD keeps gaining share in the professional market, then Tegra had better start making money or they will indeed be in trouble. They have deep coffers, and if this is how they die, it will be a long, slow death, but I think the risk is very real.