GF117, which could replace GeForce 610M, GT 620M and GT 630M.

GF114 680/670M

GK107 650/640M

GF118/119 630M etc.

Later, when GK106 is ready, nV may launch it as 685M and 675M.

The latest GeForce 295.55 also lists GF117 SKUs.

It seems kind of funny that GK107 is crippled by DDR3, while GF117 has to use GDDR5 to reach adequate bandwidth on its 64-bit IMC.
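
To put rough numbers on that, here is a quick sketch of the usual peak-bandwidth arithmetic (bus width in bytes times effective transfer rate). The 64-bit figure for GF117 is from above; the 128-bit bus for GK107 and both transfer rates are my own illustrative assumptions, not confirmed specs, and `peak_bandwidth_gb_s` is just a helper name I made up.

```python
def peak_bandwidth_gb_s(bus_width_bits, transfer_rate_mt_s):
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits     -- memory interface width in bits
    transfer_rate_mt_s -- effective data rate in mega-transfers per second
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mt_s / 1000


# ASSUMPTION: GK107 on a 128-bit bus with DDR3 at 1800 MT/s (illustrative only).
gk107_ddr3 = peak_bandwidth_gb_s(128, 1800)

# ASSUMPTION: GF117 on its 64-bit bus with GDDR5 at 4000 MT/s (illustrative only).
gf117_gddr5 = peak_bandwidth_gb_s(64, 4000)

print(f"GK107 + DDR3 (assumed):  {gk107_ddr3:.1f} GB/s")
print(f"GF117 + GDDR5 (assumed): {gf117_gddr5:.1f} GB/s")
```

With those assumed rates the half-width GDDR5 bus lands in the same ballpark as the wider DDR3 one, which is the point: the narrow bus only works out because GDDR5 moves roughly twice the data per pin.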

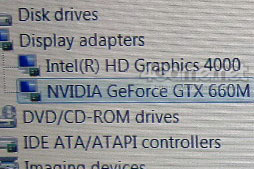

GTX 660M @ CeBIT:

http://www.4gamer.net/games/120/G012093/20120306077/

And some kind of interview with Nick Stam from Nvidia:

... and more about Hyper-Threading CUDA cores?

Google Translate said:

"Users of our products from traditional" performance only "about 1.5 times more information. Kepler would not be satisfied, but not obvious, and performance per unit of power consumption is up significantly, and conventional products of the same price range should also significantly improve performance compared to the absolute. "
