Thanks for answering and not deleting my question (I have a bad habit of going off topic). I'm really interested in what the GT200 will offer price/performance-wise.

world domination for nVidia

.. seriously .. in the discrete graphics add-in market, they want it all!

[^my honest opinion^]

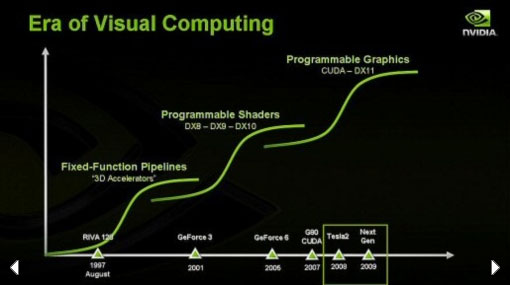

=>apoppin: I still don't think there will be a dual-chip GT200, even if the chip were shrunk. The architecture was modified to better cope with current and future games, but it still supports only DX10. So far, nVidia is doing a good job downplaying DX10.1; nevertheless, for BFUs 10.1 > 10.0. Now, imagine 45/40nm manufacturing won't be ready until Q1'09. By that time, Microsoft will probably have launched DX11, and it's not like nVidia to lag behind when a major DX version is available. Second, to allow for dual-chip cards, the chip would have to be shrunk to a size that would not allow 512-bit memory. Not a problem in 2009, since there will be plenty of GDDR5 to go around, but oops, GT200 doesn't support GDDR5 or even GDDR4. That would mean having to design a new memory controller, but I think nVidia will launch a completely new generation of GPUs instead. And make a lot of noise about DX11.

=>CJ: Since you already outed it, I can confirm that as well. (I also left a hint in the above text that somebody here could be able to see through, since I can't talk).

i do think so, but only if it is necessary. We know nVidia's preference is to have a SINGLE GPU - ideally. My own speculation is that it is held in "reserve" - IF AMD somehow comes up with an X2 or X4 that is a good performer compared to GT200.

i did not say it is a "done deal" .. remember r700 will also go thru a shrink ...

Do we know for sure that GT200 is not DX10.1?

and i think DX11 is coming after Vista7 .. in 2010; you may well be right about the next architecture after Tesla 2.0 GPU being DX11.

[my guess]

and Yes, i AM "listening" - what i am doing is called "active questioning". Reporters do this; after all, "we" are not experts, you guys are. And you can also educate me.

[believe-it-or-not]
