
Nvidia giving free GPU samples to reviewers that follow procedure

They're not ignoring RT, they're just saying they don't think it's as important as many other things.

So, you are saying a reviewer can ignore raytracing and should still be entitled to free samples from a company that is pushing raytracing? Sounds like a paradox.

No, it is not.

No, using DLSS is fair. A reviewer should use a "performance"-equivalent resolution on AMD's side so that viewers and readers can compare image quality. I mean, if raytracing is bad because of the performance hit, then rasterizing at a higher resolution doesn't make any sense either.

Reviews are supposed to be objective. Having some cards render at native resolution while others render at a lower resolution and upscale it (even if they do it really well, with some artifacts) is not an objective comparison; it's apples to oranges.

DLSS is fair to bring up as a separate topic, and to show how it performs, but it needs to be clearly separated from the actual comparisons between brands. Image quality comparisons between different scaling methods are also a different thing from performance comparisons.

Personal bias strikes again.

DLSS gives more than 60% more performance over native with TAA, at some cost to image quality. "Non-raytracing" options give more than 60% more performance over raytracing, at some cost to image quality.

I think we have to give this paradox a name. Either you prefer frames over image quality or image quality over frames. You can't have both.


Eyes-enberg Principle?

>_>

Lol nice.

Gross.

Gross, again.

And a hello to you too!

You can't be serious. Surely you were intoxicated when you posted this.

https://www.youtube.com/watch?v=YH5QNQngL8A

https://www.youtube.com/watch?v=NxvoxpeeIaQ

https://twitter.com/hardwareunboxed/status/1337699116361658368?s=21

Yeah man, they totally ignore ray tracing.

Yeah, those aren't reviews of the latest cards. That's what I understood we were talking about in this thread - Nvidia sending HU review cards for their reviews.

Again, there are parallels between the hype surrounding ray tracing today and something like DX10 or tessellation back in the G80 vs R600 days.

Let's be clear: no one is denying that RT (and ML-based SS like DLSS) is the future of games. The issue is that, so far, most implementations of RT are not worth the drastic hit to performance for a negligible improvement in IQ. As devs learn to better utilise RT (thanks to Nvidia's RTX, the consoles, and Navi 2nd gen), this will change. I think games like Control and CP2077 are previews of what's to come in the future.

The DX9 -> DX10 move was huge. The move away from dedicated pixel/vertex shader units and fixed-function hardware towards unified shaders and a more general-purpose approach to GPU processing was a massive game changer. G80's performance was incredible and RV770 was amazing. Still, those first couple of generations of DX10 and DX10.1 implementations sucked. And here we are, finally, with DX12 and Vulkan.

RT will follow the same path.

But none of this makes how cards handle RT now and in the coming year or two something to largely ignore in reviews. Completely the opposite, in my opinion.

And trying to get a handle on how the cards will fare against the most demanding workloads over their service life is an important part of a review - again, in my opinion. I think HU could, and probably should, have done better in this regard.

Oh, and I think there are lots of examples already where the hit to performance is worth it for RT. I don't buy into this idea that it's not worth it, and that data on it isn't important yet.

The sense of entitlement of Nvidia and its grifters regarding tech reviewers is both funny and a little depressing.

I see what you did there, but it doesn't really work because Nvidia actually are entitled to not give people cards. They literally are. They have a sense that they are entitled to do that, because they are literally entitled to do that.

Before long I expect HU will be doing more coverage of RT, and Nvidia will be back to sending them cards. In the meantime, while it has again highlighted that Nvidia can be dicks, it's also got people talking about Nvidia's RT advantage.

Personal bias strikes again.

DLSS gives more than 60% more performance over native with TAA, at some cost to image quality. "Non-raytracing" options give more than 60% more performance over raytracing, at some cost to image quality.

I think we have to give this paradox a name. Either you prefer frames over image quality or image quality over frames. You can't have both.

Yep, give people the information and let them decide.

it's also got people talking about Nvidia's RT advantage.

I was thinking that before, but OK, now someone has said it. It's free PR; it's a way of getting RT out there at a time when games like 2077 are launching to the masses. Marketing teams know what to do. Let the haters hate and spread more news about ray tracing.

It's a cycle: if the PlayStation had great DLSS-like tech and beastly RT, there would be fewer of these discussions going on, which means less PR for NV.

This goes to show how important marketing is, and how important it is to allocate large budgets to your PR teams.

Yep, give people the information and let them decide.

Just like that Hardware Unboxed channel does.

Having one game out of 15 feature ray tracing is a valid choice because that likely corresponds to the usage owners of current cards are going to get.


Disagree.

If a reviewer said they were testing the 6900 XT and their review had six games with RT settings and one game at non-RT settings, do you believe that would be a fair review, as long as the reviewer said, "I believe RT is the future of gaming, and if you want non-RT data, go to another high-quality channel"?

I don’t think anyone would consider that fair. This is essentially what HU did with their 3070 and 3060 Ti FE reviews, only even more heavily skewed in the opposite direction, toward rasterization.

Just like that Hardware Unboxed channel does.

Having one game out of 15 feature ray tracing is a valid choice because that likely corresponds to the usage owners of current cards are going to get.

No, that's not giving people the information on RT performance so they can decide. That's the opposite. That's deciding what someone's usage is going to be, and how much they will value RT, and consequently offering only the minimum possible amount of information on RT performance.

Selecting software for benchmarks is not easy, and you can't please everyone. But featuring only one RT title for your suite going into the launch of two new, rival, RT architectures at the end of 2020 (when RT consoles are also landing!!) is just ... wow.

That's definitely not giving people the information on RT so they can decide - it's deciding for them. And IMO it's not an adequately forward-looking way to try to assess this latest hardware. It's heavily skewed away from an important and highly anticipated feature. And while I wish they had, it's not a line that Nvidia actually have to support them in taking.

It's pretty clear that the Nvidia grifters have no interest in engaging in a good faith discussion of a serious breach of media independence and unbiasedness.


Yeah, those aren't reviews of the latest cards. That's what I understood we were talking about in this thread - Nvidia sending HU review cards for their reviews.

The videos cover the RTX 2000 and 3000 series. Is there a new Nvidia RTX lineup based on an architecture newer than Ampere that we're not aware of?

But none of this makes how cards handle RT now and in the coming year or two something to largely ignore in reviews. Completely the opposite, in my opinion.

I think you need to revise your understanding of the word 'ignore'.

And trying to get a handle on how the cards will fare against the most demanding workloads over their service life is an important part of a review - again, in my opinion. I think HU could, and probably should, have done better in this regard.

Yes, like showing how even $700 flagships struggle to hit 30 FPS with RT, which HUB/Gamers Nexus/LTT show.

I guess those benchmarks are CPU bound.

Oh, and I think there are lots of examples already where the hit to performance is worth it for RT. I don't buy into this idea that it's not worth it, and that data on it isn't important yet.

Yes, like HUB mentioned when talking about Control and Cyberpunk 2077.

Also, define "lots", and show me an instance where I -- or anyone else in this thread -- said that the data on RT performance penalties as well as RT IQ improvements isn't important.

The whole point of our argument is that we have the data, and that the performance hits do not justify the minor IQ improvements. The exception to this rule, at present, are games like Control and Cyberpunk 2077, hence why HUB/GN/LTT do focus videos on those titles.

Nvidia actually are entitled to not give people cards. They literally are. They have a sense that they are entitled to do that, because they are literally entitled to do that.

Another straw man! Who's arguing against this?

Nvidia is of course entitled to send cards to whomever they want. Just like Apple, Intel, AMD, Asus, MSI, and other vendors.

Nvidia are not, however, entitled to dictate what reviewers can or should say in their reviews.

Before long I expect HU will be doing more coverage of RT, and Nvidia will be back to sending them cards. In the meantime, while it has again highlighted that Nvidia can be dicks, it's also got people talking about Nvidia's RT advantage.

This is the Barbra Streisand effect, and is free PR for AMD.

Every AMD fanboy and every conspiracy theory about Nvidia has been vindicated, and now many people who were vendor-agnostic (or had a slight preference for Nvidia) view Nvidia as the devil and AMD as the saviour of PC gaming graphics.

But hey, you do you. Let's just let all hardware companies dictate the terms of what a reviewer can and should say, and let's not allow proprietary tech to get in the way of hardware comparisons! What could go wrong?


@Wesker look at HU’s reviews of the 3070 FE and 3060 Ti FE. Tell me how much time, how many settings and how many games they benchmarked for RT. A review sample was provided free for those reviews. Was RT covered sufficiently for consumers to make a decision about whether they’d want to use that feature on those cards now or in the future?

How well do the 3070 and 3060 Ti perform with RT?

Question: did HUB praise the 3070 and 3060 Ti or pan them?


I actually have no idea how well they do RT and that’s funny because I watched the RT segments of HU’s reviews yesterday.


RTX 3070 review video (fast forwarded to discuss RT):

Cross-comparison benchmarks:

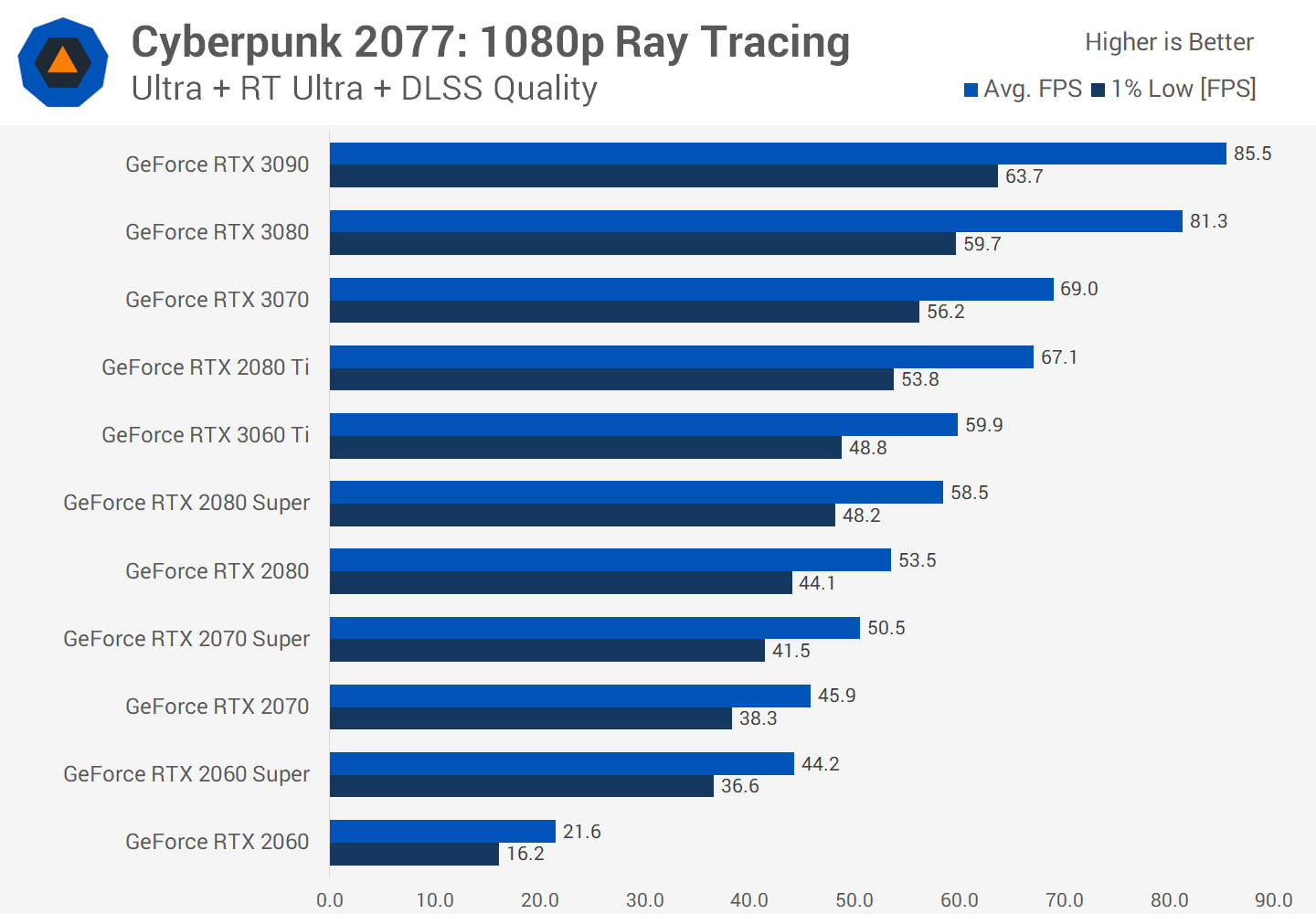

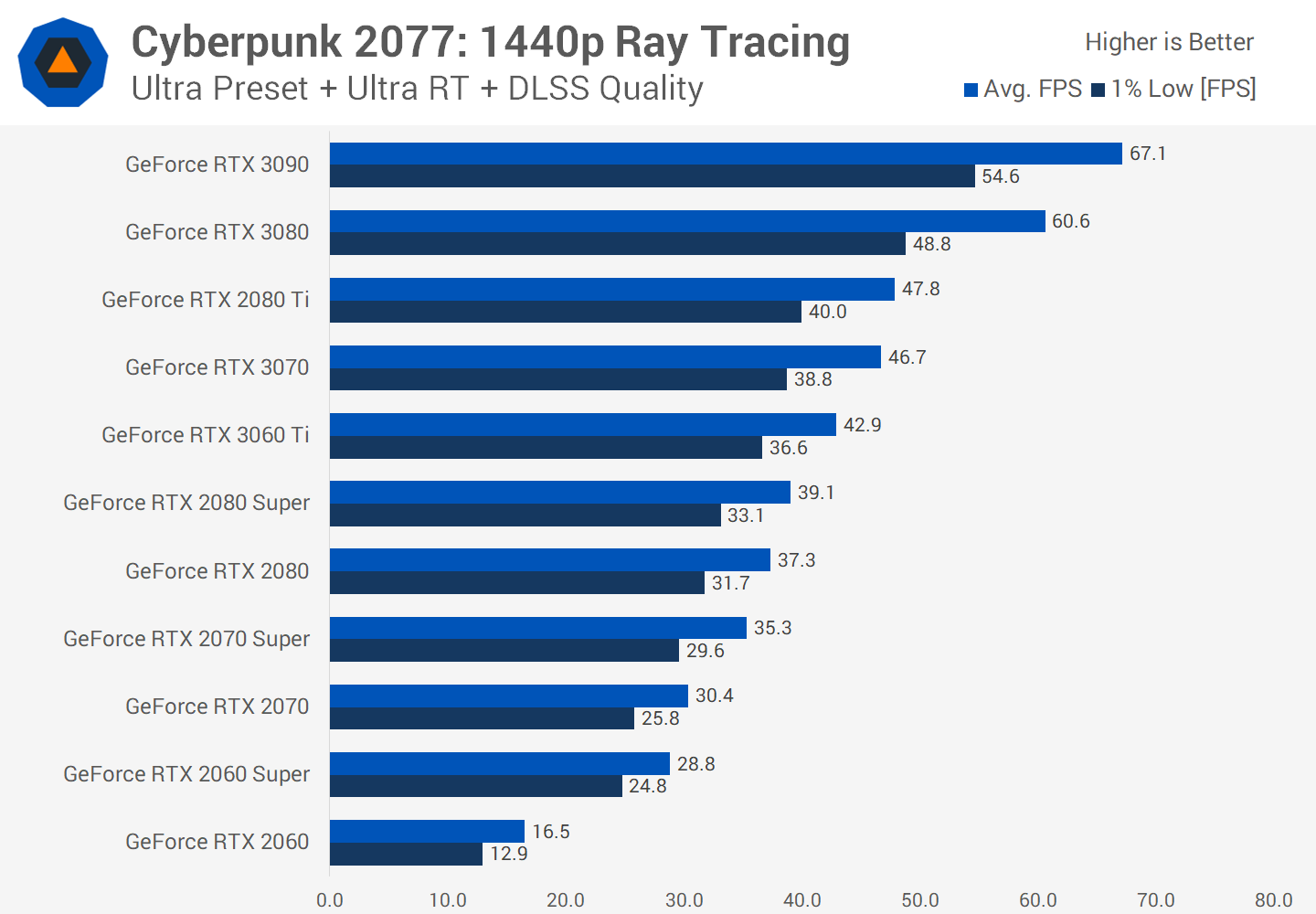

https://www.techspot.com/review/2124-geforce-rtx-3070/

Cyberpunk RT and DLSS benchmarks:

https://www.techspot.com/article/2165-cyberpunk-dlss-ray-tracing-performance/

EDIT: It's pointless engaging with you guys if you're going to be dishonest and intentionally dense.

How well do the 3070 and 3060 Ti perform with RT?

Pretty good apparently. And this is at “Ultra”. Performance is likely significantly higher with just RT reflections.

Yes, RT (and DLSS) in CP2077 is pretty good at 1080p for the RTX 3070 and 3060 Ti (roughly RTX 2080 Ti and RTX 2080 Super level, respectively) -- but that's the point, right?

*snip*

