Nvidia BigK GK110 Kepler Speculation Thread

- Thread starter A1xLLcqAgt0qc2RyMz0y

- Tags

- nvidia

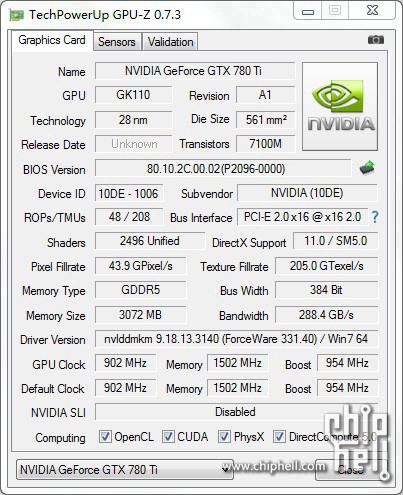

The fill rates in this GPU-Z shot are wrong. But of course GPU-Z sometimes has problems with its calculations.

Also, MyDrivers.com has a rumor of a "new GTX 780" that is not the 780 Ti.

Maybe this:

NVIDIA GeForce GTX 780 Rev. 2
http://www.techpowerup.com/gpudb/2513/geforce-gtx-780-rev-2.html

[...]

Bios Remade from the ground up

iMacmatician

Regular

According to this document from HP, the Quadro K6000 uses Boost for a base clock of 797 MHz and a boost clock of the 902 MHz that we are familiar with.

I should have known a 902 MHz base clock in 225 W is too good to be true.

Still, that's a nontrivial bump over the K20X.

9% higher core (base) clock, 15% higher memory clock and lower TDP (225W vs. 235W) compared to K20X. That's quite ok for something which is supposedly more or less the same chip.

boxleitnerb

Regular

And 1 SMX more. But somehow I doubt the 780 Ti will have all 2880 SPs enabled...

Ah yes, forgot about that. Sounds really good then (17% more compute power, 15% more memory bandwidth, and all with a 5% reduction in TDP, so about a 20% power efficiency increase), though I'd want to see real-world power draw measurements. It also has twice the memory, but since it just uses 4 Gbit instead of 2 Gbit chips, that shouldn't really make a difference in power draw.
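The percentages in the post above can be sanity-checked with a few lines of arithmetic. This is only a rough sketch: the 797 MHz base clock and the 225 W / 235 W TDPs come from the thread, while the K20X figures (14 SMX, 732 MHz base, 5.2 Gbps memory), the 6.0 Gbps K6000 memory speed, and the 192 cores per SMX are assumptions taken from public spec sheets, not from the thread.

```python
# Rough check of the K6000 vs. K20X comparison discussed above.
# Values marked "assumed" are not stated in the thread.
CORES_PER_SMX = 192  # assumed: Kepler SMX width

k20x = {"smx": 14, "base_mhz": 732, "mem_gbps": 5.2, "tdp_w": 235}   # smx, clocks assumed
k6000 = {"smx": 15, "base_mhz": 797, "mem_gbps": 6.0, "tdp_w": 225}  # mem_gbps assumed


def relative_compute(card):
    """Peak throughput is proportional to core count times base clock."""
    return card["smx"] * CORES_PER_SMX * card["base_mhz"]


compute_gain = relative_compute(k6000) / relative_compute(k20x) - 1
bandwidth_gain = k6000["mem_gbps"] / k20x["mem_gbps"] - 1
tdp_change = k6000["tdp_w"] / k20x["tdp_w"] - 1
# Efficiency here means peak compute per watt of rated TDP, not measured draw.
efficiency_gain = (1 + compute_gain) / (1 + tdp_change) - 1

for name, value in [
    ("compute", compute_gain),
    ("memory bandwidth", bandwidth_gain),
    ("TDP", tdp_change),
    ("compute per TDP watt", efficiency_gain),
]:
    print(f"{name}: {value:+.1%}")
```

Under these assumptions the numbers land close to the ones quoted: roughly 17% more compute and 15% more bandwidth, with the TDP reduction nearer 4% and the efficiency gain nearer 22%. As the post says, rated TDP is not the same as real-world power draw, so this only compares paper specs.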

Considering how long it took from the first K20 shipments until the chip was mature enough for the consumer market, those early specs were probably pretty conservative.

And I'm not sure we can really compare server and desktop TDP values either. The former is much more likely to be taken at face value (even a "power virus" should neither throttle nor exceed the TDP).

GK180 = GK110-B1?

The latter is already shipping on overclocked GTX 780 cards:

http://www.xtremesystems.org/forums...Y-GTX-780-Hall-of-Fame-(HOF)-Performance-Test

TPU has the B1 added in its database, too:

http://www.techpowerup.com/gpudb/2513/geforce-gtx-780-rev-2.html

iMacmatician

Regular

From Fudzilla: "Geforce GTX 780 Ti to end up faster than Titan."

Fuad Abazovic said: "We are not sure if GTX 780 Ti beats the Titan in all benchmarks, but it will definitely be faster in most of them."

If the 780 Ti has equal or better specs than the Titan in every area (including the VRAM size and boost headroom), then I don't see why it would ever perform worse than the Titan. So if there is some benchmark where the 780 Ti loses, that suggests some tradeoff in specs from the Titan to the 780 Ti (even if it's an overall upgrade). They don't say which benchmarks they are unsure about, though (DP? Ones where the 780 Ti would be VRAM-limited if it were 3 GB?).

[XC] Oj101 on XtremeSystems:

"Stock clock speed on the 780 Ti puts it between 3 and 5 % below a 290X running at around 1150 MHz on the Performance Preset.

By the way, I'm pretty sure the 780 Ti will use a new revision of GK110, not GK180. I don't see any need for validating ECC, etc on a consumer desktop card. GK110 can indeed have more CUDA cores."

A1xLLcqAgt0qc2RyMz0y

Veteran

[XC] Oj101 on XtremeSystems: "By the way, I'm pretty sure the 780 Ti will use a new revision of GK110, not GK180."

If the GK180 is a respin with better yields than the GK110 at higher clocks and fully enabled, then it will more likely be used for the 780 Ti.

"I don't see any need for validating ECC, etc on a consumer desktop card. GK110 can indeed have more CUDA cores."

Why would any validation of ECC need to be done at all on a consumer GPU?

iMacmatician

Regular

Does anyone have hard info on Kepler's register file bandwidth? The maximum throughput I'm seeing on a 680 is 128 instr/clk per SM using gpubench. Same goes for these guys - http://hal.inria.fr/docs/00/78/99/58/PDF/112_Lai.pdf.

Sorry for the self-quote, but this is still a mystery to me. It's pretty clear at this point that a Kepler SMX can only issue 128 ALU instr/clk peak, regardless of what Nvidia claims.

Does anyone have any idea why they would have 6 SIMDs per SMX when only 4 warps can be issued to them? Do they have some sort of CPU-like setup where instruction issue ports are shared by the execution units, and having more SIMDs helps to resolve port conflicts?

I'm sure they serve some purpose - just wish I knew what it was!
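To put numbers on the mismatch described above, here is a minimal sketch of the issue-rate arithmetic. The 6 SIMDs and 4 issued warps per clock are taken from the post; the assumption that each SIMD is as wide as one 32-thread warp is mine and is only meant to illustrate the question, not to describe how the hardware actually schedules.

```python
# Issue-rate arithmetic for the question above.
WARP_SIZE = 32             # threads per warp; SIMD width assumed equal to this
SIMDS_PER_SMX = 6          # from the post
WARPS_ISSUED_PER_CLK = 4   # from the post (measured ALU issue limit)

alu_lanes = SIMDS_PER_SMX * WARP_SIZE            # lanes physically present
issued_lanes = WARPS_ISSUED_PER_CLK * WARP_SIZE  # lanes fed per clock at peak
utilization = issued_lanes / alu_lanes

print(f"{issued_lanes} of {alu_lanes} lanes fed per clock ({utilization:.0%})")
```

Under that assumption, the measured 128 instr/clk corresponds to feeding only two thirds of the ALU lanes each clock, which is exactly the gap the post is asking about.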

ftp://ftp.lal.in2p3.fr/pub/PetaQCD/talks-ANR-Final/Review_Junjie_LAI.pdf

Is this valid for GK110, too?

Yes.
