
- Status

- Not open for further replies.

DegustatoR

Legend

> One thing is for sure... Pushing GPUs too far isn't a new thing. In fact it's the norm. And we should be laughing at their desperation to win. Or something.
>
> I'm glad they usually include a quiet mode.

Quad SLI on cards each being 250W, anyone? I still don't understand why people are so surprised by GPUs hitting 500W. They have been doing this for ages, and no one is forcing you to buy these products still.

It’s only the norm when needed to compete. Nvidia didn't need to do this with Kepler up through Turing. They have gone back to the ways of the Fermi.

arandomguy

Veteran

The interesting thing about this perception (which I see brought up a lot, especially in comparison to how "light" Pascal supposedly was configured) is that the GTX 480 (Fermi) has the same official 250W TDP rating as the 780 Ti, 980 Ti, 1080 Ti and 2080 Ti. It also draws under that in typical gaming workloads. The one caveat is that back then power limiters did not function like they do now, so you could blow past that rating via tests such as Furmark.

if you don't believe me - https://www.techpowerup.com/review/nvidia-geforce-gtx-480-fermi/30.htm

and another source - https://www.guru3d.com/articles-pages/asus-geforce-gtx-480-enggtx480-review,6.html

While 1080ti - https://www.techpowerup.com/review/nvidia-geforce-gtx-1080-ti/28.html

It is a good point but GTX 480 was problematic for them and was pushed to the limit so they wouldn't fall behind. GTX 580 is essentially the same chip but uses less power, is faster, quieter (than 480 and the competition). 580 also has a Furmark limiter.

There were also the dual GPU cards like 5970 and 590. Those are right up there with today's most nightmarish.

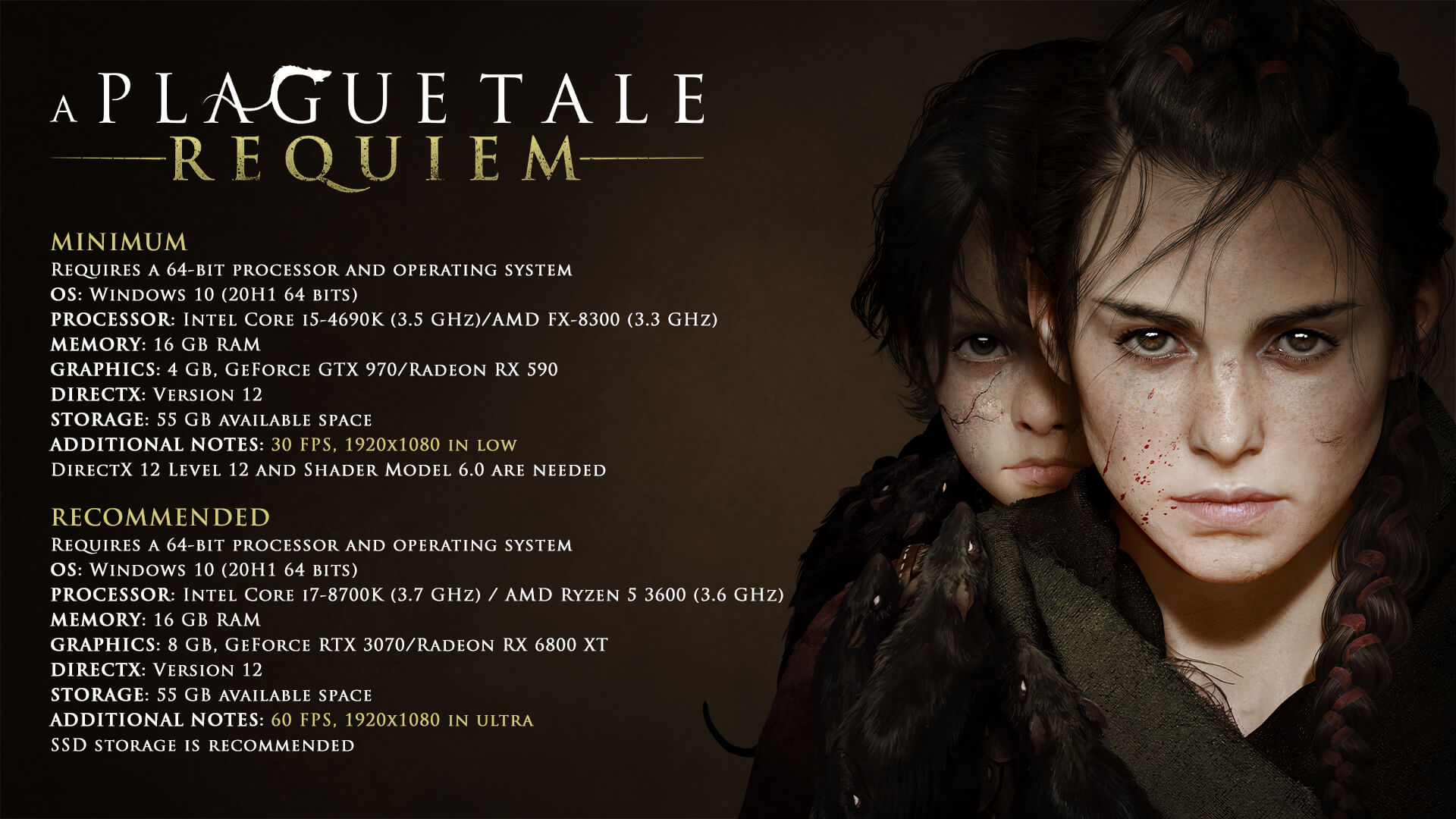

A 3070/6800 XT for 1080p/60fps/Ultra. This game has RT. I saw people online saying "What game needs a 4090?"

This is one of them. Games will keep getting more punishing, and as more and more RT features are introduced, performance will get even worse. I'm personally glad we're seeing this push for ray tracing. The tech needs all the implementation it can get.

> I'm personally glad you're personally glad for RT as well. Make a new thread about it. Just think about all the thumbs upping you guys could do with each other.

Is this really necessary?

DegustatoR

Legend

I can get such posts in the RDNA3 thread, but in Lovelace's?

I've decided to start just reporting more instead of actively reacting with a post and reporting. Hit the report button if you suspect foul play etc.; one of the two active moderators will review it, and if it's something to be moderated, it will happen. If the mods decide to do nothing, no harm done, it's a dud, but then you know what is OK to post or not.

DavidGraham

Veteran

GeForce RTX 4090/3090 performance uplift:

- FireStrike Ultra: 2.00x – 2.04x

- TimeSpy Extreme: 1.84x – 1.89x

- Port Royal: 1.82x – 1.86x

NVIDIA GeForce RTX 4090 3DMark scores leaked, at least 82% faster than RTX 3090 - VideoCardz.com

NVIDIA RTX 4090 tested in 3DMark We have some early benchmarks of the upcoming Ada Lovelace flagship gaming card, the RTX 4090. The leaked benchmark scores feature 3DMark FireStrike Ultra, a DirectX11 benchmark running at 4K resolution, TimeSpy Extreme using DirectX12 API and same resolution...
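As a rough sanity check on the figures above: a score multiplier converts to "percent faster" by subtracting one and multiplying by 100. The sketch below only does that arithmetic on the ranges quoted in the list; the names `uplift` and `percent_faster` are illustrative and do not come from the article.

```python
# Uplift ranges (RTX 4090 score / RTX 3090 score) as quoted in the list above.
uplift = {
    "FireStrike Ultra": (2.00, 2.04),
    "TimeSpy Extreme": (1.84, 1.89),
    "Port Royal": (1.82, 1.86),
}


def percent_faster(ratio: float) -> int:
    """Convert a score ratio such as 1.82 into a whole-number 'percent faster' figure."""
    return round((ratio - 1.0) * 100)


# Map each benchmark to its (low, high) percent-faster range.
percent = {
    name: (percent_faster(low), percent_faster(high))
    for name, (low, high) in uplift.items()
}

# Smallest uplift across all three benchmarks.
lowest = min(low for low, _ in percent.values())

for name, (low, high) in percent.items():
    print(f"{name}: {low}% to {high}% faster")
print(f"Lowest uplift across the three tests: {lowest}%")
```

The lowest figure is the 1.82x Port Royal result, i.e. 82%, which lines up with the headline's "at least 82% faster".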

The difference between the 4090 and 4080 16 GB is crazy. Ada is clearly a spectacular piece of silicon, but with the current lineup I can't help but feel it's pricing itself into irrelevance. I really can't see how DLSS3, let alone some of the more obscure features in Ada, can ever come close to being mainstream when the GPUs are out of range of 99% of PC gamers.

I assume that the RTX 4060, RTX 4070, etc. will also support those features, with enough performance to use them. The 4060 should be in the ballpark of a 3080, I think.

It won't always be that way. And I'm just guessing here, but I think if you've gone through the trouble to integrate DLSS and any of the other temporal reconstruction techniques out there, it's probably not THAT much more effort to implement DLSS3... especially when you have Nvidia pushing for adoption. It's not just good for gamers, it's an easy marketing bullet point for the game as well.

> It won't always be that way.

That's true but we've already waited 2 years for a generational performance leap. Is the norm now that we have to wait 4 years for an affordable one? I'm not sure I can buy into that model.

> And I'm just guessing here, but I think if you've gone through the trouble to integrate DLSS and any of the other temporal reconstruction techniques out there, it's probably not THAT much more effort to implement DLSS3... especially when you have Nvidia pushing for adoption. It's not just good for gamers, it's an easy marketing bullet point for the game as well.

I do suspect you're right on this one. DLSS 3's integration will likely be disproportionate to the 4xxx series market penetration for the reasons you state above, and because it brings benefit to existing gamers over and above DLSS2 in the form of Reflex.

I'm more concerned about Ada's other features that require specific dev integration. They offer huge potential but why would any dev bother to integrate them under the current circumstances without significant handouts? It seems to me that NV has created an incredible piece of silicon but wants to use it to drive short term profits rather than the long term health of the PC gaming market.

From a business perspective given the seemingly massive opportunities they have in the AI space moving forwards that might be a savvy move, but as a gamer I certainly don't celebrate it.

Rootax

Veteran

> The RT performance in Port Royal doesn't look particularly impressive though. I was expecting to see the largest difference there given the fact that these are 3rd generation RT cores.

Do we even know what they have improved with these "new" cores?
