We have just released Steam Audio 2.0 beta 15, which adds support for AMD Radeon Rays technology. Radeon Rays is a high-performance, GPU-accelerated software library for ray tracing that works on any modern AMD, NVIDIA, or other GPU. Steam Audio uses ray tracing when baking indirect sound propagation and reverberation; with Radeon Rays, baking runs 50x to 150x faster than with the built-in ray tracer on a single thread. For example, a reverb bake that took an hour using the single-threaded built-in ray tracer should now take less than a minute using Radeon Rays on a Radeon RX Vega 64 GPU.

Radeon Rays support is optional in Steam Audio; Steam Audio continues to work on any PC with any CPU or GPU, as well as on ARM-based Android devices.

How is Radeon Rays useful to Steam Audio?

Steam Audio uses ray tracing for baking indirect sound propagation. Rays are traced from a probe position and bounced around the scene until they hit a source. The surfaces hit by the rays determine how much energy is absorbed, and how much reaches the probe from the source. These energies, along with the arrival times of each ray, are used to construct the impulse response from the source to the probe. An impulse response (IR) is an audio filter that represents the acoustics of the scene; rendering a sound with the IR creates the impression that the sound was emitted from within the scene.
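As a rough illustration of the process described above, the following Python sketch accumulates ray energies into a time-binned energy response. It is not Steam Audio's implementation: the function names, the per-surface absorption coefficients, and the 343 m/s speed of sound are assumptions made for the example, and a production simulator would model considerably more detail (for example, absorption that varies with frequency).

```python
SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at roughly 20 degrees C


def ray_energy(absorptions, initial_energy=1.0):
    """Energy left after a ray reflects off surfaces with the given
    absorption coefficients (each between 0 and 1)."""
    energy = initial_energy
    for absorption in absorptions:
        energy *= 1.0 - absorption
    return energy


def build_energy_response(paths, sample_rate, duration_s):
    """Accumulate ray energies into time bins.

    paths: iterable of (path_length_m, absorptions) pairs, one per ray
           that connects the source and the probe (hypothetical format).
    Returns a list with one energy value per sample.
    """
    num_samples = int(round(sample_rate * duration_s))
    response = [0.0] * num_samples
    for path_length_m, absorptions in paths:
        arrival_s = path_length_m / SPEED_OF_SOUND
        index = int(round(arrival_s * sample_rate))  # nearest sample
        if index < num_samples:
            response[index] += ray_energy(absorptions)
    return response


# Two illustrative rays: one bounce over 34.3 m, two bounces over 68.6 m.
example_paths = [(34.3, [0.5]), (68.6, [0.5, 0.5])]
example_response = build_energy_response(example_paths, sample_rate=100, duration_s=1.0)
```

Rays that travel farther land in later bins, and rays that hit more absorbent surfaces contribute less energy, which is the relationship the paragraph above describes.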

The above approach is also used when baking reverb; in this case rays are traced from a probe position and bounced around the scene until they hit the probe again. The IR constructed this way models the listener-centric reverb at the probe position.

When baking, ray tracing is used to simulate indirect sound propagation for hundreds, or even thousands, of probes in a typical scene. Radeon Rays lets developers use the compute capabilities of their GPU to significantly accelerate baking, resulting in measurable time savings during the design process.
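A back-of-the-envelope count of ray segments shows why baking many probes is expensive. The sketch below is illustrative only: the parameter names and the sample values (rays per probe, bounce count) are hypothetical and are not Steam Audio defaults.

```python
def total_ray_segments(num_probes, rays_per_probe, max_bounces):
    """Upper bound on ray segments traced in one bake,
    counting each bounce of each ray as one segment."""
    return num_probes * rays_per_probe * max_bounces


# Hypothetical settings, chosen only to show the order of magnitude.
segments = total_ray_segments(num_probes=1000, rays_per_probe=16384, max_bounces=32)
print(f"{segments:,} ray segments")
```

With these made-up settings the bake traces over half a billion ray segments, which is the kind of highly parallel workload a GPU handles well.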

The current release of Steam Audio does not support real-time simulation with Radeon Rays.

What is Radeon Rays?

Radeon Rays is a software library that provides GPU-accelerated algorithms for tracing coherent rays (direct light) and incoherent rays (global illumination, sound propagation). Radeon Rays is highly optimized for modern GPUs, and provides OpenCL and Vulkan backends. Steam Audio uses the OpenCL backend, which requires a GPU that supports OpenCL 1.2 or higher.

Radeon Rays is not restricted to AMD hardware; it works with any device that supports OpenCL 1.2 or higher, including NVIDIA and Intel GPUs.
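The OpenCL 1.2 requirement can be checked from a device's version string. The OpenCL specification defines the `CL_DEVICE_VERSION` string as `OpenCL <major>.<minor> <vendor-specific information>`, so a minimal sketch only needs to parse the leading numbers. The helper names and the sample strings below are assumptions for illustration; how the string is obtained (for example, through an OpenCL binding) is left out.

```python
import re

MIN_VERSION = (1, 2)  # minimum OpenCL version stated in the text above


def parse_opencl_version(version_string):
    """Extract (major, minor) from a CL_DEVICE_VERSION-style string."""
    match = re.match(r"OpenCL (\d+)\.(\d+)", version_string)
    if match is None:
        raise ValueError(f"unrecognized OpenCL version string: {version_string!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(version_string, minimum=MIN_VERSION):
    """True if the device reports at least the minimum OpenCL version."""
    return parse_opencl_version(version_string) >= minimum


# Illustrative strings only; real devices report their own vendor suffix.
for sample in ("OpenCL 2.0 ExampleVendor", "OpenCL 1.2 ExampleVendor", "OpenCL 1.1 ExampleVendor"):
    print(sample, "->", "supported" if meets_minimum(sample) else "too old")
```

Comparing the versions as tuples avoids the pitfalls of comparing them as strings or floats.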

What are the benefits of Radeon Rays?

When using Steam Audio to bake indirect sound propagation or reverb, Radeon Rays provides significant speedups and time savings for designers:

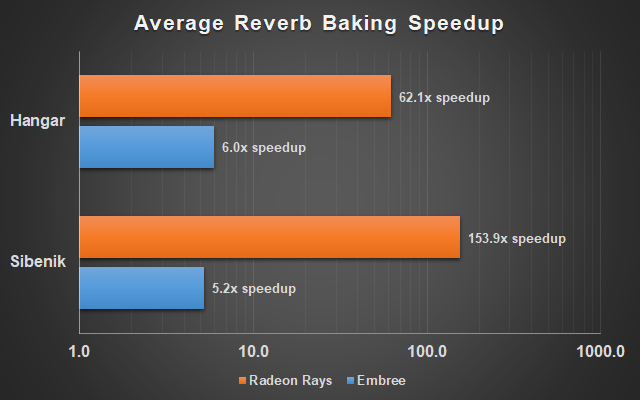

Figure: Speedup when baking reverb using Radeon Rays vs. Embree (single-threaded) vs. Steam Audio's built-in ray tracer (single-threaded), for two scenes: Sibenik cathedral (80k triangles) and a Hangar scene from the Unity Asset Store (140k triangles). Speedups are averaged over a range of simulation settings and probe grid densities, and plotted using a logarithmic scale. Speedups shown in the graph are relative to Steam Audio's built-in ray tracer. For example, on the Sibenik cathedral, Embree on a single core is 5.2x faster than the built-in ray tracer on a single core; Radeon Rays on an RX Vega 64 is 153.9x faster than the built-in ray tracer on a single core.

The above performance measurements were obtained on an Intel Core i7 5930K (Haswell E) CPU, along with an AMD Radeon RX Vega 64 GPU, running Windows 10 64-bit.
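To put the Sibenik cathedral figures in concrete terms, the short sketch below converts the reported speedups into bake times. The one-hour baseline is the example from the introduction; applying it to this particular scene is an assumption made for illustration, and the function names are hypothetical.

```python
def bake_seconds(baseline_seconds, speedup):
    """Bake time after applying a speedup relative to the baseline."""
    return baseline_seconds / speedup


def relative_speedup(speedup_a, speedup_b):
    """How much faster A is than B when both speedups share a baseline."""
    return speedup_a / speedup_b


# Speedups over the single-threaded built-in ray tracer (Sibenik cathedral).
EMBREE_SPEEDUP = 5.2
RADEON_RAYS_SPEEDUP = 153.9
BASELINE_SECONDS = 3600.0  # assumed one-hour bake with the built-in ray tracer

print(f"Embree: {bake_seconds(BASELINE_SECONDS, EMBREE_SPEEDUP):.0f} s")
print(f"Radeon Rays: {bake_seconds(BASELINE_SECONDS, RADEON_RAYS_SPEEDUP):.0f} s")
print(f"Radeon Rays vs. Embree: {relative_speedup(RADEON_RAYS_SPEEDUP, EMBREE_SPEEDUP):.1f}x")
```

Under that assumption, a one-hour single-threaded bake drops to roughly 23 seconds with Radeon Rays, and Radeon Rays comes out about 30x faster than single-threaded Embree on this scene.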