1. Chip-Level Multiprocessing (CMP)

Intel continues to pioneer one of the most important directions in microprocessor architecture: increasing parallelism for increased performance. As shown in Figure 1, we started with the superscalar architecture of the original Intel® Pentium® processor and multiprocessing, continued in the mid-90s by adding capabilities like "out-of-order execution," and most recently introduced Hyper-Threading Technology in the Pentium 4 processor. These paved the way for the next major step: the movement away from one monolithic processing core to multiple cores on a single chip.

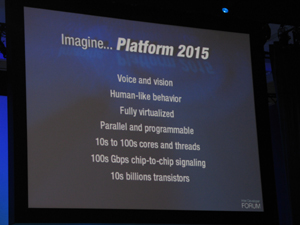

Intel is introducing multi-core processor-based platforms to the mainstream. These platforms will initially contain Intel processors with two cores, evolving to many more. We plan to deliver Intel processors over the next decade that will have dozens, and even hundreds of cores in some cases. We believe that Intel's chip-level multiprocessing (CMP) architectures represent the future of microprocessors because they deliver massive performance scaling while effectively managing power and heat.

Figure 1. Driving increasing degrees of parallelism on Intel® processor architectures.

In the past, performance scaling in conventional single-core processors has been accomplished largely through increases in clock frequency (accounting for roughly 80 percent of the performance gains to date). But frequency scaling is running into some fundamental physical barriers. First, as chip geometries shrink and clock frequencies rise, the transistor leakage current increases, leading to excess power consumption and heat (more on power consumption below).

Second, the advantages of higher clock speeds are in part negated by memory latency, since memory access times have not been able to keep pace with increasing clock frequencies. Third, for certain applications, traditional serial architectures are becoming less efficient as processors get faster (due to the so-called Von Neumann bottleneck), further undercutting any gains that frequency increases might otherwise deliver. In addition, resistance-capacitance (RC) delays in signal transmission are growing as feature sizes shrink, imposing an additional bottleneck that frequency increases don't address.
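The memory-latency point above can be made concrete with a rough model. The sketch below is only an illustration: the function name `run_time_ns` and every number in it (cycle count, stall count, 100 ns latency, 2 GHz and 4 GHz clocks) are hypothetical values chosen for the arithmetic, not measurements of any Intel processor. It assumes run time is simply compute time plus time stalled on memory, and that memory latency does not improve when the clock does.

```python
def run_time_ns(compute_cycles, clock_ghz, memory_stalls, memory_latency_ns):
    """Rough run-time model: compute time plus time stalled on memory.

    All inputs are hypothetical. The model ignores caches, overlap of
    compute with memory access, and every other real-world effect.
    """
    compute_ns = compute_cycles / clock_ghz      # 1 GHz = 1 cycle per ns
    stall_ns = memory_stalls * memory_latency_ns # unaffected by clock rate
    return compute_ns + stall_ns


# Same workload and same memory latency, with the clock doubled.
base = run_time_ns(1_000_000, 2.0, 2_000, 100.0)
fast = run_time_ns(1_000_000, 4.0, 2_000, 100.0)
speedup = base / fast

print(f"base: {base:.0f} ns, doubled clock: {fast:.0f} ns, speedup: {speedup:.2f}x")
```

Under these assumed numbers, doubling the clock yields well under a 2x speedup, because the stall term does not shrink with the clock period.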

Therefore, performance will have to come from means other than boosting the clock speed of large monolithic cores. Instead, the solution is to divide and conquer, breaking up functions into many concurrent operations and distributing these across many small processing units. Rather than carrying out a few operations serially at an extremely high frequency, Intel's CMP processors will achieve extreme performance at more practical clock rates, by executing many operations in parallel². Intel's CMP architectures will circumvent the problems posed by frequency scaling (increased leakage current, mismatches between core performance and memory speed, and Von Neumann bottlenecks). Intel® architecture (IA) with many cores will also mitigate the impact of RC delays³.
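The divide-and-conquer idea described above can be sketched in software terms. The example below is a minimal illustration, not a description of how Intel's CMP hardware schedules work: the helper names `chunked` and `parallel_sum_of_squares` and the default of four workers are hypothetical. It splits one job into independent chunks, hands each chunk to a worker, and combines the partial results.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data, parts):
    """Split data into at most `parts` contiguous, roughly equal chunks."""
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum_of_squares(data, workers=4):
    """Divide the work, process the chunks concurrently, then combine."""
    if not data:
        return 0
    chunks = chunked(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker computes a partial result for its own chunk.
        partials = pool.map(lambda chunk: sum(x * x for x in chunk), chunks)
        return sum(partials)


print(parallel_sum_of_squares(list(range(10))))
```

A thread pool is used here only to keep the sketch short and portable. In CPython, threads show the structure of the decomposition but do not run this CPU-bound work on several cores at once; a process pool would be the usual choice for that.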

Intel's CMP architectures provide a way not only to scale performance dramatically, but also to do so while minimizing power consumption and heat dissipation. Rather than relying on one big, power-hungry, heat-producing core, Intel's CMP chips need to activate only those cores required for a given function, while idle cores are powered down. This fine-grained control over processing resources enables the chip to use only as much power as is needed at any time.
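The effect of powering down idle cores can be illustrated with a simple accounting model. This is a sketch under stated assumptions: the function `chip_power_watts`, the per-core wattages, and the eight-core configuration are all hypothetical, and the model treats every active core as drawing the same fixed power.

```python
def chip_power_watts(total_cores, active_cores, active_core_watts,
                     gated_core_watts=0.0):
    """Estimate chip power when idle cores are powered down.

    All figures are hypothetical. `gated_core_watts` is the residual
    draw assumed for a powered-down core.
    """
    if not 0 <= active_cores <= total_cores:
        raise ValueError("active_cores must be between 0 and total_cores")
    idle_cores = total_cores - active_cores
    return active_cores * active_core_watts + idle_cores * gated_core_watts


# Hypothetical 8-core chip: a light workload needs only 2 cores.
light = chip_power_watts(8, 2, active_core_watts=10.0, gated_core_watts=0.5)
full = chip_power_watts(8, 8, active_core_watts=10.0, gated_core_watts=0.5)

print(f"light load: {light} W, full load: {full} W")
```

In this model, power tracks the number of cores a workload actually needs rather than the total number on the chip.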

Intel's CMP architectures will also provide the essential special-purpose performance and adaptability that future platforms will require. In addition to general-purpose cores, Intel's chips will include specialized cores for various classes of computation, such as graphics, speech recognition algorithms and communication-protocol processing. Moreover, Intel will design processors that allow dynamic reconfiguration of the cores, interconnects and caches to meet diverse and changing requirements.

Such reconfiguration might be performed by the chip manufacturer, to repurpose the same silicon for different markets; by the OEM, to tailor the processor to different kinds of systems; or in the field at runtime, to support changing workload requirements on the fly. Intel® IXP processors today provide such capability for special-purpose network processing. As shown in Figure 2, the Intel IXP 2800 has 16 independent microengines operating at 1.4 GHz along with an Intel XScale® core