Love_In_Rio

Veteran

I'm sorry, but I just had to put those two together in one post.

Plus this: Naughty Dog will show the answer.

May I ask how much more efficient the PS4 can be compared with a PC of the same spec? 1.5 times as efficient? Better or worse? Thanks!

It looks good, but remember to separate "cinematic" from "gameplay". In the former (like Infiltrator), you can pretty much pre-bake all of the lighting (into probes and lightmaps) and optimize exactly what the user is seeing. It is definitely pretty and runs well. Still, don't necessarily expect the lighting, and the animation in particular, to translate perfectly to dynamic gameplay situations.
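Here is a rough illustration of why pre-baked lighting is cheap at runtime but static. It is a toy 1-D sketch, not how any particular engine does it. The names `PointLight`, `bake_probes` and `sample_baked` are hypothetical. The scene is assumed to be a corridor lit by point lights with inverse-square falloff. Each probe stores a single scalar, whereas real probes and lightmaps store richer data such as spherical-harmonic coefficients or texels.

```python
from dataclasses import dataclass


@dataclass
class PointLight:
    position: float   # position along a 1-D corridor (toy simplification)
    intensity: float


def irradiance_at(x, lights):
    """Direct light at floor position x with inverse-square falloff.

    Each light is assumed to hang 1 unit above the floor, hence the +1.0.
    """
    total = 0.0
    for light in lights:
        dist_sq = (x - light.position) ** 2 + 1.0
        total += light.intensity / dist_sq
    return total


def bake_probes(probe_positions, lights):
    """'Offline' step: evaluate the lighting once and store one value per probe."""
    return [irradiance_at(p, lights) for p in probe_positions]


def sample_baked(x, probe_positions, baked):
    """'Runtime' step: linearly interpolate between the two surrounding probes.

    No lights are evaluated here, which is what makes it cheap.
    Assumes probe_positions is sorted in ascending order.
    """
    if x <= probe_positions[0]:
        return baked[0]
    if x >= probe_positions[-1]:
        return baked[-1]
    for i in range(len(probe_positions) - 1):
        x0, x1 = probe_positions[i], probe_positions[i + 1]
        if x0 <= x <= x1:
            t = (x - x0) / (x1 - x0)
            return baked[i] * (1.0 - t) + baked[i + 1] * t
    raise ValueError("probe_positions must be sorted")
```

Moving a light after baking shows the trade-off. `sample_baked` keeps returning the old values, while `irradiance_at` reflects the new position. A scripted cinematic can avoid ever moving the light; dynamic gameplay can't always.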

Dynamic GI is somewhat overrated, IMHO: games managed to do quite well without it for a long time, and it's not like gameplay has been getting more complex in recent years anyway.

Sure, I didn't mean to imply that we need fully dynamic GI for something to look good. I was just using that as an example of the fact that a very "directed" experience will always look better than gameplay. Animation is probably the more obvious example here.

All that evidence only relates to draw calls, not to the overall efficiency disadvantage of the PC. In isolation it's not really proof of anything beyond the already well-known fact that PCs can be draw-call limited compared to consoles.

That said, the very article you link specifically refers to the improvements in DX11. That further reinforces the earlier statements that Carmack's 2x advantage is no longer a valid reference point.

That's obvious, or consoles would have up to a 100x performance advantage over a PC with similar hardware.
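A toy frame-time model shows why a big draw-call advantage doesn't turn into the same overall advantage. It is a sketch under stated assumptions, not measured data:

- CPU submission and GPU work fully overlap, so the slower of the two sets the frame time.
- The per-call costs (5 µs on "PC", 0.05 µs on "console") are made-up illustrative numbers.
- The function names are hypothetical.

```python
def frame_time_ms(draw_calls: int, per_call_us: float, gpu_ms: float) -> float:
    """Frame time when CPU submission and GPU rendering overlap perfectly."""
    if draw_calls <= 0:
        raise ValueError("draw_calls must be positive")
    cpu_ms = draw_calls * per_call_us / 1000.0  # total submission cost in ms
    return max(cpu_ms, gpu_ms)                  # the slower side is the bottleneck


def speedup(draw_calls: int, pc_call_us: float, console_call_us: float, gpu_ms: float) -> float:
    """How much faster the 'console' frame is than the 'PC' frame in this toy model."""
    return (frame_time_ms(draw_calls, pc_call_us, gpu_ms)
            / frame_time_ms(draw_calls, console_call_us, gpu_ms))


# Hypothetical costs: the console is 100x cheaper per draw call.
print(speedup(2000, 5.0, 0.05, 16.0))   # GPU-bound on both: no advantage
print(speedup(10000, 5.0, 0.05, 16.0))  # PC becomes submission-bound: about 3x
print(speedup(10000, 5.0, 0.05, 0.0))   # pure submission cost: the full ~100x
```

The full per-call ratio only shows up when a frame is bound purely by submission. That is consistent with the point that draw-call evidence alone doesn't establish an overall efficiency multiplier.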

Tomb Raider, with TressFX, cut PC performance almost in half. Wouldn't that likely be because the GPU had to stop processing graphics to do compute tasks?

No, it's because TressFX rendering is obnoxiously brute force. It'd run just as poorly (if not worse) on a console. The compute part of it is fairly cheap and is done all at once at the start of the frame. One compute/render transition per frame is hardly an issue (Frostbite typically does several, for instance).

720p aside, today's console games look to be in the same ballpark as today's PC games, despite having 1/10 to 1/20 of the power.

Uh-huh... :S Yeah, let's stop this conversation already before it gets even more stupid.

The point is that the engine has the potential to look better than anything else we've seen so far and the Elemental demo is irrelevant in comparisons with KZSF or BF4 or whatever.

Obviously the actual poly counts, scene complexity and animation fidelity will be dictated by the performance envelopes of the next-gen console hardware. But Epic is, at this time, ahead of the rest in the showcase department. BF4 may have better daytime lighting, but its assets don't look this good, and the realistic warfare setting limits them to an extent, too.

The blur and other post-processing help a great deal in making the demo look less like real-time rendering and more like offline rendering or even live action.

^I disagree completely. Anything that makes game graphics look less fake is an enormous plus for me.

I actually use FXAA in the PC version of Skyrim deliberately, because it kills all those razor-sharp polygonal edges.
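Here is a rough idea of how a post-process AA pass softens hard polygon edges. This is a deliberately simplified luma-contrast blend in the same spirit as FXAA, not FXAA itself. Real FXAA also estimates the edge direction and searches along it with sub-pixel offsets. The function name and the 0.1 threshold are hypothetical. The input is assumed to be a grayscale luma image stored as a list of rows.

```python
def smooth_edges(img, threshold=0.1):
    """Blend pixels whose local luma contrast exceeds `threshold`.

    img: list of rows of floats (luma). Border pixels are copied unchanged.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # copy so reads are not affected by writes
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            c = img[y][x]
            neighbours = [img[y - 1][x], img[y + 1][x], img[y][x - 1], img[y][x + 1]]
            local = neighbours + [c]
            contrast = max(local) - min(local)
            if contrast > threshold:
                # Edge detected: replace with the average of the 5-pixel cross.
                out[y][x] = (c + sum(neighbours)) / 5.0
    return out


edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
print(smooth_edges(edge)[1])  # the hard 0 -> 1 step gains intermediate values
```

The same blend also softens any high-contrast texture detail, which is the usual complaint about post-process AA looking blurry.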

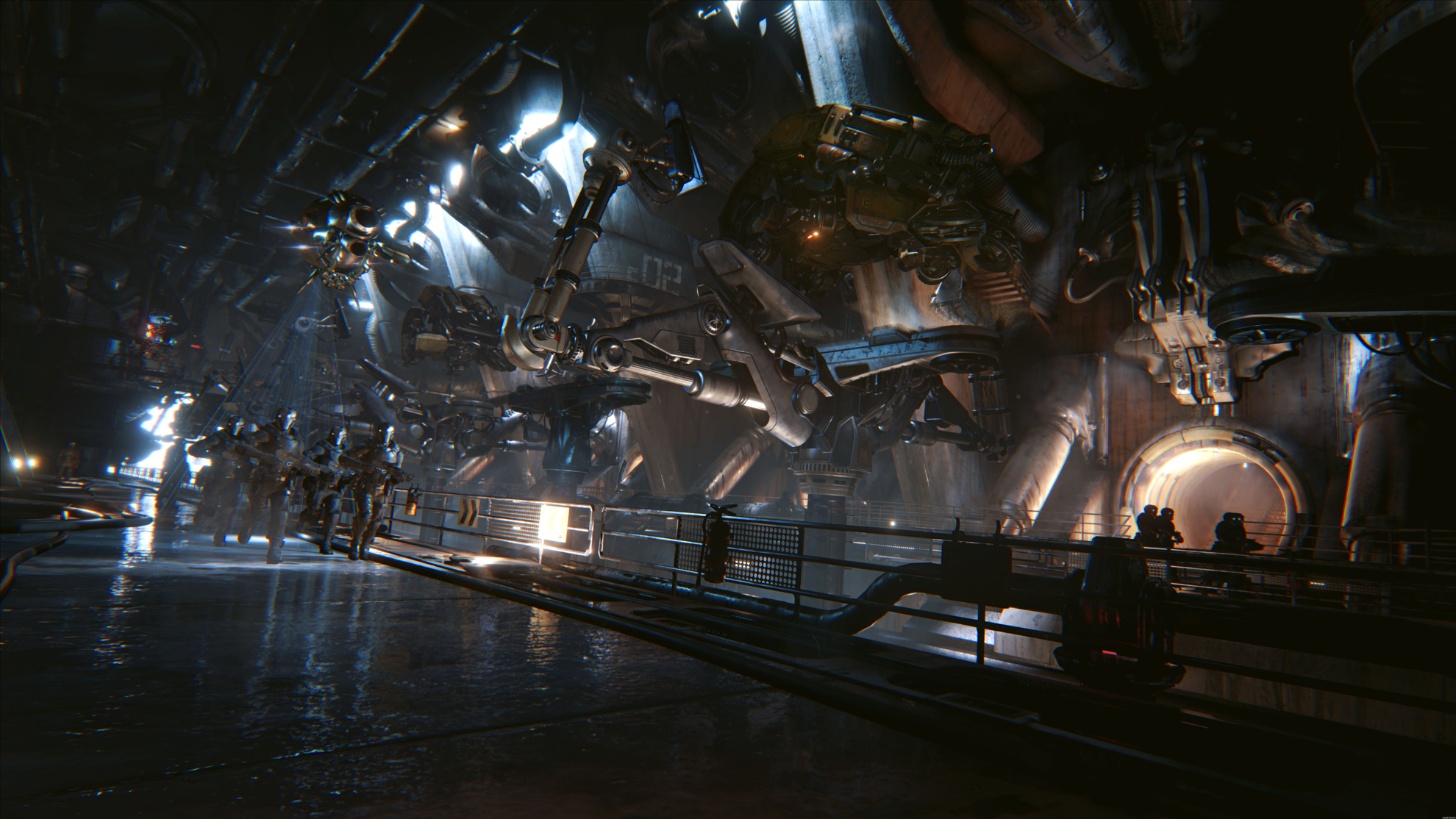

Original shot:

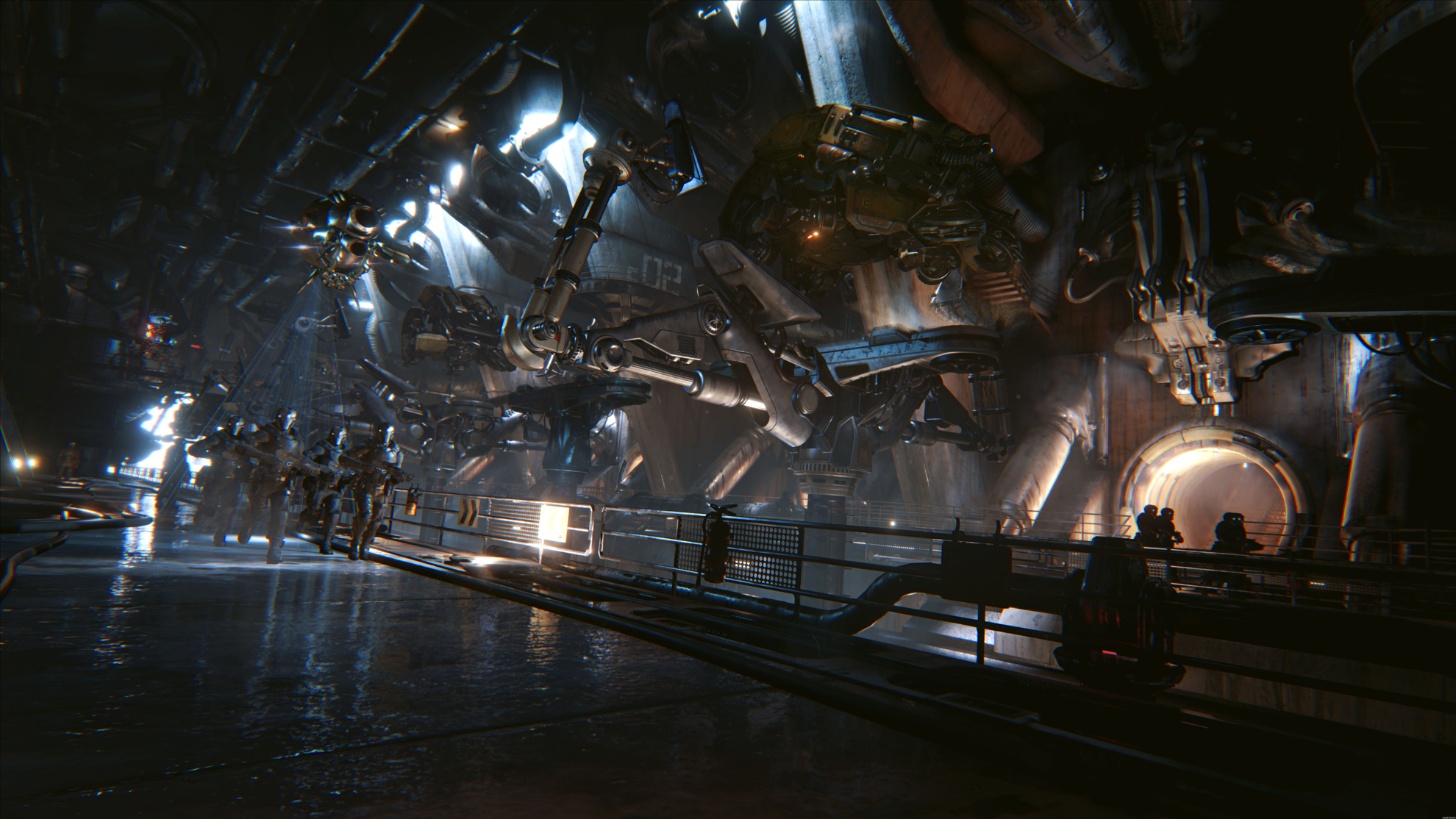

Editor shot without the washed-out video look and motion blur:

Gamersyde has an 11,520 x 6,480 version of this new shot.

http://images.gamersyde.com/image_unreal_engine_4-21778-2539_0001.jpg
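For scale, a quick check of that resolution against 1920 x 1080. The choice of 1080p as the reference is ours, not the site's. The function name is hypothetical.

```python
def scale_vs_1080p(width: int, height: int):
    """Return (width ratio, height ratio, pixel-count ratio) relative to 1920x1080."""
    base_w, base_h = 1920, 1080
    return width / base_w, height / base_h, (width * height) / (base_w * base_h)


print(scale_vs_1080p(11520, 6480))  # 6x per axis, 36x the pixels
print(11520 * 6480)                 # 74,649,600 pixels, roughly 74.6 megapixels
```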

Obviously the actual poly counts, scene complexity and animation fidelity will be dictated by the performance envelopes of the next-gen console hardware. But Epic is, at this time, ahead of the rest in the showcase department. BF4 may have better daytime lighting, but its assets don't look this good, and the realistic warfare setting limits them to an extent, too.

I would say Deep Down and Agni's Philosophy are at least in the same league, if not higher. I'm pretty impressed by what those Japanese companies can do on next-gen.

Too much chromatic aberration (and a poor approximation of it too, if I'm honest). It looks like one of those old-school three-color projectors that has been miscalibrated.
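A common cheap way to fake chromatic aberration is to sample the red and blue channels at slightly different radial scales from the screen centre. This sketch assumes that technique; we don't know how Epic's demo implements its effect. Real lens aberration varies continuously with wavelength. Three discrete channel offsets produce separated colour fringes, which is exactly the "misaligned projector" look. The function name and `strength` parameter are hypothetical, and sampling is nearest-neighbour with clamping.

```python
def chromatic_aberration(img, strength=0.02):
    """Return a copy of img with red pushed outward and blue pulled inward.

    img: list of rows of (r, g, b) tuples. Green is left unshifted.
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0  # screen centre

    def sample(y, x, channel):
        # Nearest-neighbour lookup, clamped to the image bounds.
        yi = min(max(round(y), 0), h - 1)
        xi = min(max(round(x), 0), w - 1)
        return img[yi][xi][channel]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dy, dx = y - cy, x - cx
            r = sample(cy + dy * (1 + strength), cx + dx * (1 + strength), 0)
            g = img[y][x][1]
            b = sample(cy + dy * (1 - strength), cx + dx * (1 - strength), 2)
            row.append((r, g, b))
        out.append(row)
    return out
```

With `strength=0` the image is returned unchanged, and the centre pixel is never shifted. The fringes grow towards the screen edges, where the per-channel misregistration is largest.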