Most high-end TVs today have internal IVTC (inverse telecine) processors and scalers, such as DCDi/Faroudja chips. The argument that direct 24p-to-24p mapping brings no benefit is a fallacy, for the following reasons:

1) The assumption that discs are encoded properly. It turns out that many aren't (DVDs especially). That's why the HQV tests exist: many DVDs today lack correct flagging, and much of the IVTC logic in players guesses wrong, mostly on non-standard cadences. If everything were flagged properly and all IVTCs operated correctly, there would be no issues and no need to buy third-party IVTC video processors or special DVD players. A $26 CyberHome DVD player won't do IVTC properly on all discs.

2) Not only do some players have poor implementations, but some progressive TVs have shitty IVTCs (and scalers), which is why IVTC/upscaling DVD players are needed. If a standard DVD player had perfect IVTC and TVs had quality scalers, this would be a moot point.

3) Audio sync issues. Many IVTC processors and scalers introduce delays that are not compensated for correctly, leading to either a fixed audio delay or an error that accumulates over time.
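To make points 1 and 2 concrete, here is a toy sketch of 3:2 pulldown and a naive IVTC that guesses the cadence by looking for repeated fields. The names (`pulldown_32`, `inverse_telecine`, etc.) are hypothetical, and frames are just lists of labeled scan lines rather than real video. Note how fragile the guess is: on a static shot, where consecutive film frames are identical, the duplicate test also fires on fields that are not pulldown repeats and a frame gets dropped. Real detectors compare fields against a noise threshold and track the cadence phase over many fields, which is exactly where cheap chips cut corners.

```python
from typing import List, Tuple

Frame = List[str]                    # progressive frame: list of scan lines
Field = Tuple[str, Tuple[str, ...]]  # (parity "t" or "b", scan lines)


def split_fields(frame: Frame) -> Tuple[Field, Field]:
    """Top field = even lines, bottom field = odd lines."""
    return ("t", tuple(frame[0::2])), ("b", tuple(frame[1::2]))


def weave(f1: Field, f2: Field) -> Frame:
    """Interleave one top and one bottom field into a progressive frame."""
    top, bottom = (f1, f2) if f1[0] == "t" else (f2, f1)
    if (top[0], bottom[0]) != ("t", "b"):
        raise ValueError("cadence lost: two fields of the same parity")
    frame: Frame = []
    for t_line, b_line in zip(top[1], bottom[1]):
        frame.extend([t_line, b_line])
    return frame


def pulldown_32(frames: List[Frame]) -> List[Field]:
    """3:2 pulldown: film frames alternately contribute 3 and 2 fields,
    so 4 frames at 24 fps become 10 fields (about 60 fields/s)."""
    fields: List[Field] = []
    for i, frame in enumerate(frames):
        top, bottom = split_fields(frame)
        repeats = 3 if i % 2 == 0 else 2
        # Field parity must keep alternating across the whole stream.
        top_first = len(fields) % 2 == 0
        for k in range(repeats):
            fields.append(top if (k % 2 == 0) == top_first else bottom)
    return fields


def inverse_telecine(fields: List[Field]) -> List[Frame]:
    """Naive IVTC: a field identical to the one two positions earlier
    (same parity) is treated as a pulldown repeat and dropped; the
    remaining fields are woven back together in pairs."""
    kept = [f for i, f in enumerate(fields) if i < 2 or f != fields[i - 2]]
    return [weave(kept[j], kept[j + 1]) for j in range(0, len(kept) - 1, 2)]


film = [[f"{name}{line}" for line in range(4)] for name in "ABCD"]
video = pulldown_32(film)
print(len(video), inverse_telecine(video) == film)  # 10 True
```

With clean, moving content the round trip is exact; with a correctly flagged disc the player would not need to guess at all.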
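The two kinds of sync error in point 3 behave very differently, as this back-of-the-envelope sketch shows. The numbers are hypothetical: a processor that buffers 3 frames at 60 Hz without delaying the audio, and a 0.1% clock mismatch of the kind you get when 24000/1001 fps ("23.976") material is clocked out at exactly 24 fps with the audio left alone. The function names are made up for illustration.

```python
from fractions import Fraction

FILM_24 = Fraction(24)
FILM_23_976 = Fraction(24000, 1001)  # what "23.976 fps" really is


def fixed_offset_ms(buffered_frames: int, refresh_hz: Fraction) -> Fraction:
    """Constant lip-sync error from a processor that buffers whole frames
    of video while the audio path is not delayed to match."""
    return Fraction(1000) * buffered_frames / refresh_hz


def accumulated_drift_s(wall_s: int, video_fps: Fraction, content_fps: Fraction) -> Fraction:
    """How far the picture has run ahead of correctly clocked audio after
    wall_s seconds, if frames mastered at content_fps are shown at video_fps."""
    content_seconds_shown = wall_s * video_fps / content_fps
    return content_seconds_shown - wall_s


print(fixed_offset_ms(3, Fraction(60)))                 # 50 (ms, constant)
print(accumulated_drift_s(7200, FILM_24, FILM_23_976))  # 36/5 (7.2 s after 2 hours)
```

A fixed offset can be dialed out once with an audio delay setting; a drift keeps growing and cannot.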

It's not an issue of what's technically possible. Sure, perfect lossless IVTC is possible. The reality is that many players, TVs, set-top boxes, etc. use cheap commodity chips or proprietary techniques that introduce the potential for problems, and together these can harm the industry as a whole when consumers get a negative impression from buggy implementations.

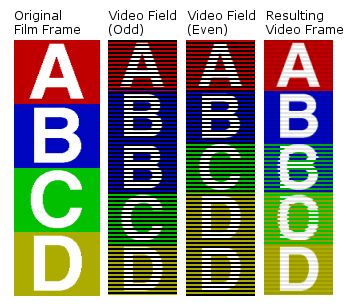

I think systems should be engineered according to the KISS principle: "Keep It Simple, Stupid!" This not only reduces the probability of errors and bugs in implementations, but also makes them cheaper. Since we are saddled with legacy formats, unfortunately, we can't eliminate the need to support conversions in the player. However, at the very least, we can provide a path to the future, just as DVI/HDMI carry progressive signals alongside interlaced ones. In reality, any progressive stream can be transmitted "losslessly" as an interlaced stream, but that is a pointless conversion needed only for older sets, so the display interconnect standards naturally support non-interlaced transport modes.
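Why a progressive frame survives an interlaced transport "losslessly": if both fields are cut from the same instant (this is sometimes called progressive segmented frame, PsF), splitting and re-interleaving just moves lines around and never alters or drops a pixel. A minimal sketch, with hypothetical function names and a frame modeled as a list of rows of pixel values:

```python
from typing import List, Sequence, Tuple

Row = Sequence[int]  # one scan line of pixel values


def to_fields(frame: List[Row]) -> Tuple[List[Row], List[Row]]:
    """Send one progressive frame as two fields: even lines, then odd lines.
    Both fields come from the same instant, so nothing is interpolated."""
    return frame[0::2], frame[1::2]


def from_fields(top: List[Row], bottom: List[Row]) -> List[Row]:
    """Re-interleave the two fields of one frame (receiver side)."""
    frame: List[Row] = list(top) + list(bottom)  # placeholder of the right length
    frame[0::2] = top
    frame[1::2] = bottom
    return frame


picture = [[10 * y + x for x in range(4)] for y in range(6)]
top, bottom = to_fields(picture)
print(from_fields(top, bottom) == picture)  # True
```

The conversion is reversible, but it buys nothing on a progressive display; it only exists for sets that cannot accept the progressive frame directly.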

In that regard, IMHO, raw 24p output should be a supported output mode for those with TVs that can handle it. Sure, you could transmit it at 30p and let a perfect IVTC in the HDTV recover the 24p signal exactly, but why? If the set is capable of 24 Hz, 48 Hz, or other refresh rates, why not let it skip the IVTC overhead? It would reduce latency and cut the required bandwidth by 20% (24 instead of 30 frames per second), which would lower bit errors on the cable and might be important for future home video distribution products.
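The 20% figure is just 24/30 = 0.8. A quick check, assuming a hypothetical 1920x1080 picture at 24 bits per pixel and counting active pixels only (real DVI/HDMI timings add blanking and line coding, so the absolute numbers differ, but the ratio does not depend on resolution):

```python
from fractions import Fraction


def active_bits_per_second(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> int:
    """Active picture payload only: ignores blanking intervals, audio,
    and the link's own line coding."""
    return width * height * bits_per_pixel * fps


rate_24 = active_bits_per_second(1920, 1080, 24)
rate_30 = active_bits_per_second(1920, 1080, 30)
print(rate_24, rate_30)                # 1194393600 1492992000
print(1 - Fraction(rate_24, rate_30))  # 1/5, i.e. 20% less
```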

From a "purist" point of view, I think it's desirable, just like "pure" audio codecs and output. There are actually people who are sensitive to judder and latency, believe it or not.
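Where the judder comes from: when 24 fps film is shown by repeating whole frames (no motion interpolation), a 60 Hz display has to hold frames for alternately 2 and 3 refreshes (2:3 or 3:2, depending on where you start counting), so on-screen durations alternate between about 33 ms and 50 ms. At 24, 48, or 72 Hz every frame is held for the same time. A small sketch; the function names are hypothetical and 72 Hz is just one example of "other refresh rates":

```python
from fractions import Fraction
from typing import List

FILM_FPS = 24


def refreshes_per_frame(refresh_hz: int, n_frames: int = 4) -> List[int]:
    """How many display refreshes each film frame stays on screen when
    24 fps content is shown at refresh_hz by repeating whole frames."""
    return [
        (i + 1) * refresh_hz // FILM_FPS - i * refresh_hz // FILM_FPS
        for i in range(n_frames)
    ]


def hold_times_ms(refresh_hz: int, n_frames: int = 4) -> List[Fraction]:
    """On-screen duration of each film frame, in milliseconds."""
    return [Fraction(1000 * n, refresh_hz) for n in refreshes_per_frame(refresh_hz, n_frames)]


for hz in (60, 24, 48, 72):
    print(hz, refreshes_per_frame(hz), [round(float(t), 1) for t in hold_times_ms(hz)])
# 60 [2, 3, 2, 3] [33.3, 50.0, 33.3, 50.0]   uneven: judder
# 24 [1, 1, 1, 1] [41.7, 41.7, 41.7, 41.7]
# 48 [2, 2, 2, 2] [41.7, 41.7, 41.7, 41.7]
# 72 [3, 3, 3, 3] [41.7, 41.7, 41.7, 41.7]
```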