Install the app

How to install the app on iOS

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

GPU Ray-tracing for OpenCL

- Thread starter fellix

- Start date

Hi,

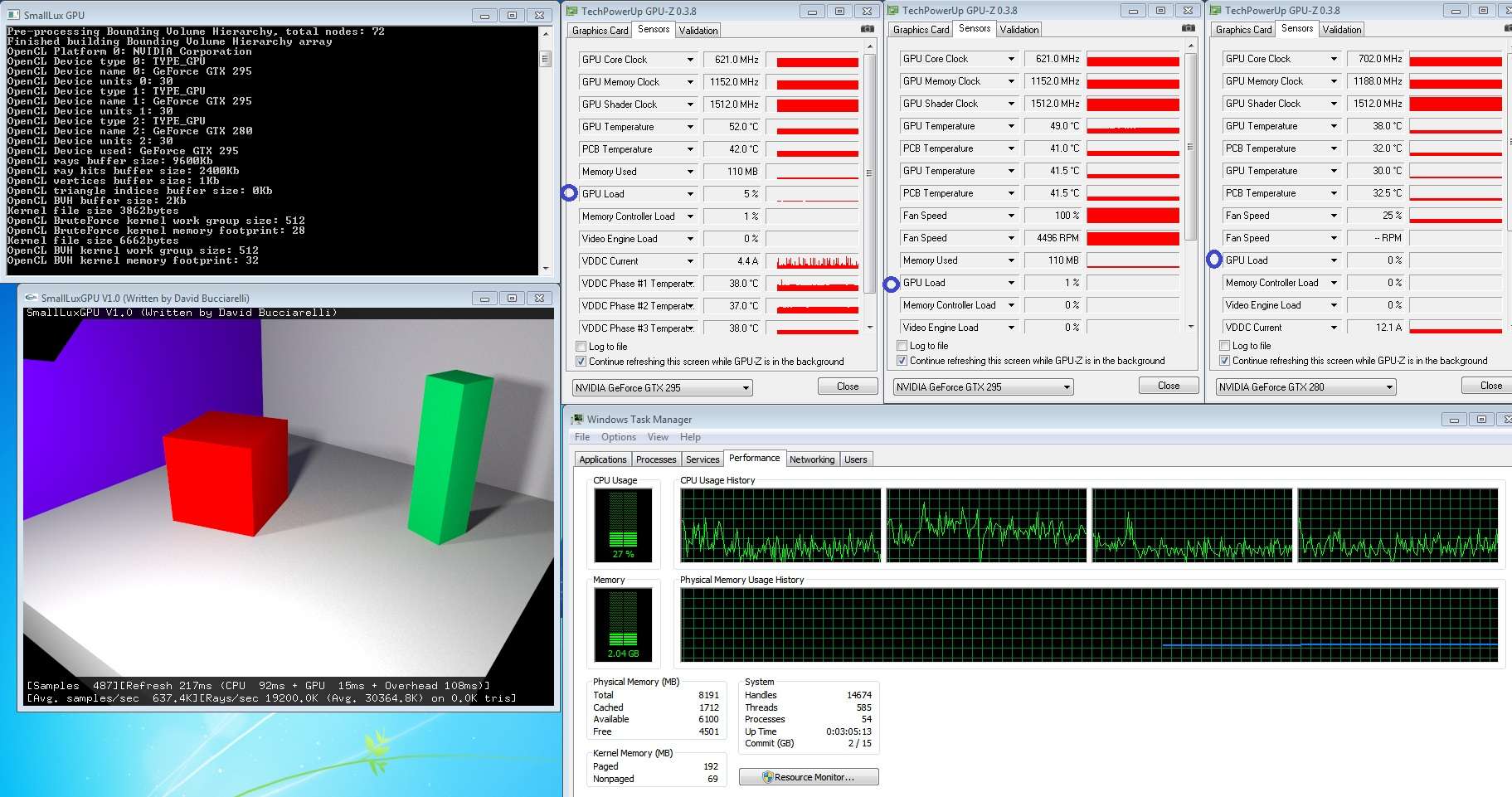

It doesn't look like the app (or rather, Nvidia's OpenCL implementation) actually uses 4 CPU cores, rather that it doesn't have its affinity tied and gets bounced around to different cores. It still only amounts to 100% use of one core, so it might be some single threaded operation which may also be holding the GPU performance back. Wouldn't surprise me if the GPU score increases if you overclock the CPU here.

This one is fresh: OCL accelerated LuxRenderer

The idea is to use the GPGPU only for ray intersections in order to minimize the amount of the brand new code to write and to not loose any of the functionality already available in Luxrender In order to test this idea, I wrote a very simplified path tracer and ported Luxrender's BVH accelerator to OpenCL.

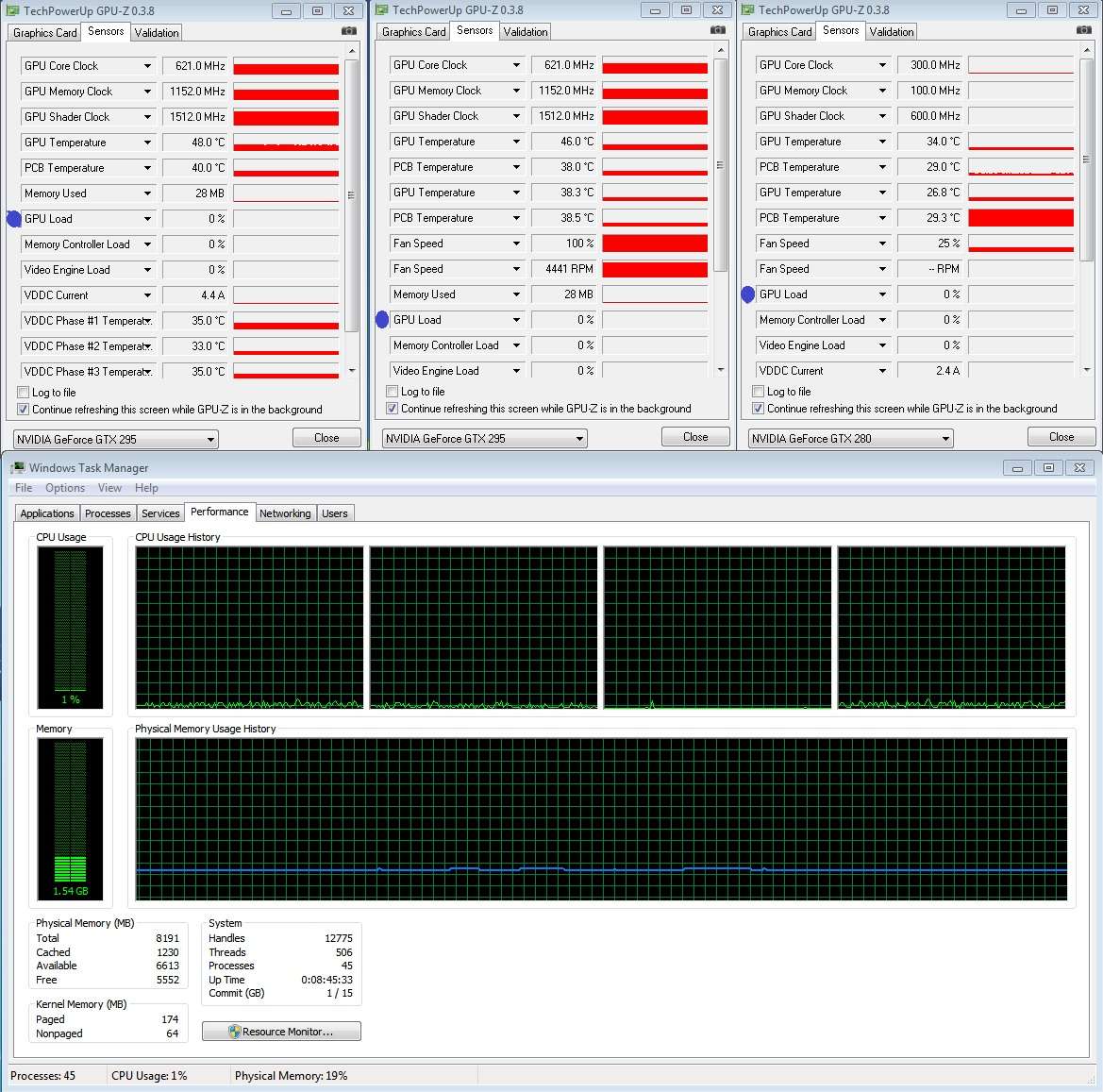

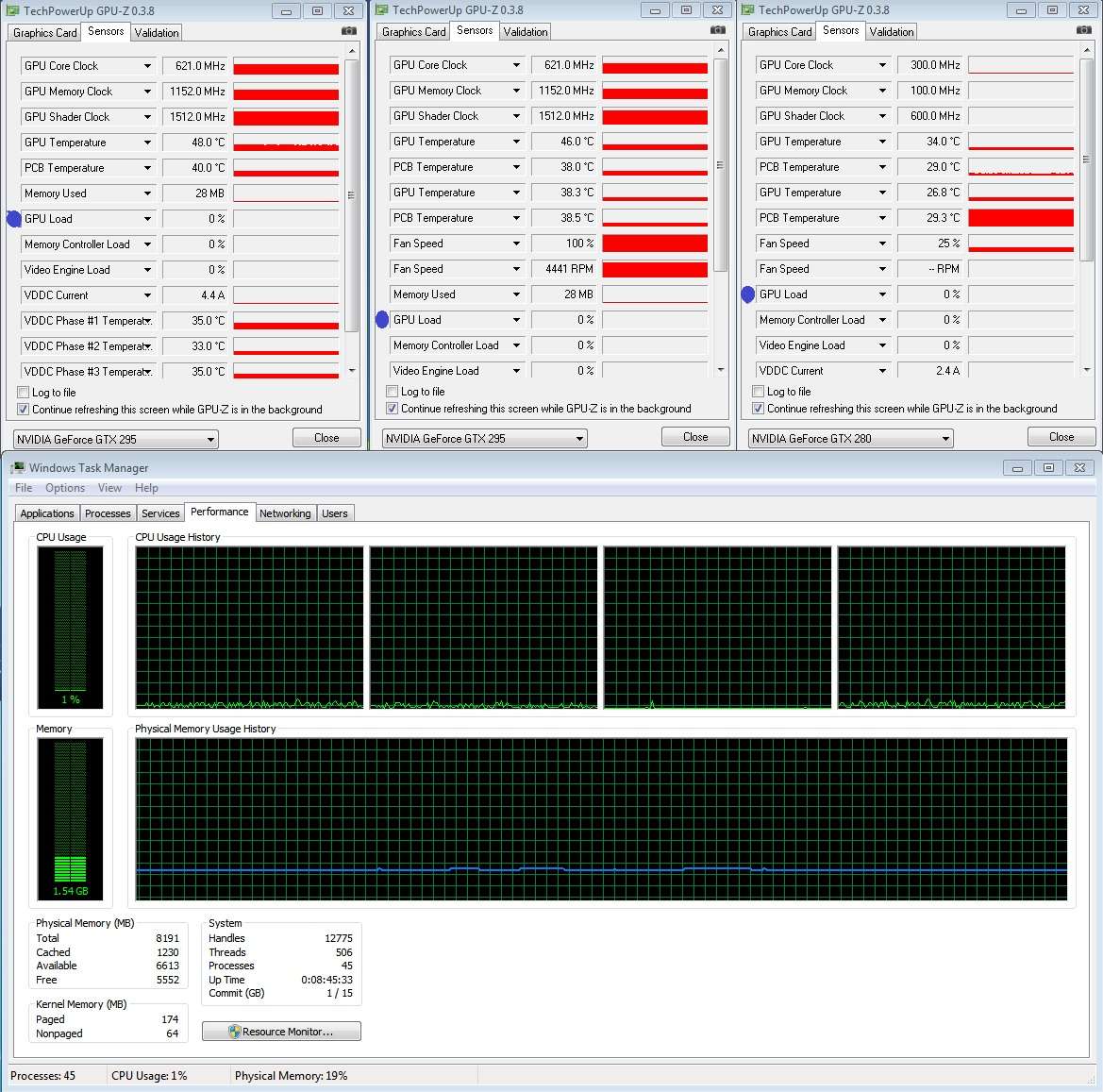

Considering this is my flat line shot, with nothing running...

And this is with the app running:

That all 4 cores are in use... :smile:

I agree affinity could be way better.

The thing is, I don't think we are supposed to be using near that much ideally.

Especially considering Nvidia doesn't support OpenCL on the CPU, that would have to be 100% overhead.

And this is with the app running:

That all 4 cores are in use... :smile:

I agree affinity could be way better.

The thing is, I don't think we are supposed to be using near that much ideally.

Especially considering Nvidia doesn't support OpenCL on the CPU, that would have to be 100% overhead.

I suggest changing the work group size calculation so that it does not use the "maximum". 64 on ATI would be better than 256. 64 is the minimum size on ATI HD5870 or HD4870. Some ATI GPUs (HD43xx HD45xx HD46xx) will work best with a lower size (32 or 16).

NVidia should be happy with 32 or 64.

Might be an idea to expose the workgroup size as a command line parameter. Or, have the program try a few different values. Or, just hard code it to 64.

Thanks Jawed, a lot of interesting information. I'm asking directly to OpenCL the suggested workgroup size for my kernel ... I guess the default answer from the driver isn't that good, I will add a command line option to overwrite the suggested size so we can do some test.

The register usage appears to be 49 vec4 registers. This is reasonable on ATI, resulting in 5 hardware threads (wavefronts). On NVidia it is a disaster (the equivalent of 196 registers if fiddling with the occupancy calculator), meaning that only 2 hardware threads (warps) can occupy each multiprocessor. As it happens 64 is better than 32 for the workgroup size in this scenario.

I'm not sure how NVidia handles the situation when 512 work-items are requested but the hardware can only issue 64 - I'm not sure if the hardware is spilling registers in this situation, if it is, then that compounds the disaster. Hopefully making this change will improve things dramatically.

I assume 196 is the maximum number of registers used during the execution on NVIDIA. Do you have any suggestion on how to reduce this number ? For instance, if I try reduce life span of local variables, it should reduce this number

Are NVIDIA register vec4/float4 as the ATI one ? In this case I could higly reducing the register usage by switching to OpenCL vector types.

P.S. SmallLuxGPU is a quite different beast from SmallptGPU. SmallptGPU is a GPU-only application while SmallLuxGPU is a test on how a large amount of existing code (i.e. Luxrender) could be adapted to take advantage of GPGPU technology.

Jawed

Legend

The spec says this is supposed to take account of the resource requirements of the kernel (table 5.14). It seems that ATI is giving a reasonable answer, if maximal-sharing of local memory would be advantageous to the kernel (but pointless in this case, as local memory is not actually being used as far as I can tell). But it seems NVidia's just responding with a nonsense number, ignoring resource consumption. So, both are suffering from immaturity there. Honestly, I'm dubious this'll ever really be of much use.Thanks Jawed, a lot of interesting information. I'm asking directly to OpenCL the suggested workgroup size for my kernel ... I guess the default answer from the driver isn't that good,

I think any positive number less than maximum device size is technically valid, but I dare say common multiples of powers of 2 are safe.I will add a command line option to overwrite the suggested size so we can do some test.

Actually, this number is a total guess - I simply took the ATI allocation and multiplied by 4. It could be substantially wrong, e.g. 100 registers - it's all down to how the compiler treats the lifetime of variables and whether it decides to use static spillage to global memory. I don't know if NVidia's tools can provide a register count for an OpenCL kernel. NVidia's GPUs also have varying capacities of register file, which affects the count of registers per work-item for the different cards - the Occupancy Calculator can help there, you just need to match up the CUDA Compute Capability with the models of cards.I assume 196 is the maximum number of registers used during the execution on NVIDIA. Do you have any suggestion on how to reduce this number ? For instance, if I try reduce life span of local variables, it should reduce this number

ATI's new profiler for OpenCL, with its ISA listing feature, provides the NUM_GPRs statistic, amongst other things.

Registers are always allocated as vec4 in ATI (128-bit). The compiler will try to pack kernel variables into registers as tightly as possible, but there are plenty of foibles there - e.g. smallptGPU might only need 46 registers with a perfect packing.Are NVIDIA register vec4/float4 as the ATI one ? In this case I could higly reducing the register usage by switching to OpenCL vector types.

NVidia allocates all registers as scalars (32-bit), which is why I multiplied by 4.

I have to admit I've only noticed, today, that float3 is not welcome in much of OpenCL, e.g. the Geometric Functions in 6.11.5.

NVidia tends to advise against vector types (even though Direct3D and OpenGL are the primary APIs), but I have no practical experience for the situations when there's a real benefit in kernels as complex as used in smallptGPU.

So, I'm unsure if switching to OpenCL's vectors is good. I dare say they'd be my starting point, but I don't have the practical experience and the compilers are immature and the float3 gotcha in OpenCL might make things moot anyway.

I noticed that SmallLuxGPU uses quite a few OpenCL float4s, padded with 0.f. In theory these should be fine as the compiler will optimise .w away in adds/muls and intrinsics such as dot-product should ignore .w. But it's just another part of the learning curve I'm afraid...

Yeah, I noticed the GPU is not being worked very hard as yet - it seems that various host side tasks are the major bottleneck. This performance will also vary dramatically depending on the quality of the motherboard chipset, i.e. PCI Express bandwidth looks like it'll cause quite variable results.P.S. SmallLuxGPU is a quite different beast from SmallptGPU. SmallptGPU is a GPU-only application while SmallLuxGPU is a test on how a large amount of existing code (i.e. Luxrender) could be adapted to take advantage of GPGPU technology.

Jawed

TimothyFarrar

Regular

NVidia tends to advise against vector types (even though Direct3D and OpenGL are the primary APIs), ...

Hello Jawed, I would not advise against vector types in general, but rather the best thing to do is to try various options and profile to see what works best in your given situation.

In general, vector loads could be a disadvantage if they increase kernel register count and result in lower warp occupancy, in contrast to using non-vector loads to just fetch data immediately when needed in a computation.

However vector loads do have some important advantage cases, such as when converting data to/from SOA/AOS form.

Jawed

Legend

Hi Tim! I was just paraphrasing section 5.

http://www.nvidia.com/content/cudaz...s/papers/NVIDIA_OpenCL_BestPracticesGuide.pdf

Timo Stich's presentation, page 18, mentions bundling multiple elements per work-item as an optimisation for memory access:

http://sa09.idav.ucdavis.edu/docs/SA09_NVIDIA_IHV_talk.pdf

so I suppose that can be considered a use of vectors, too.

smallptGPU is arithmetic bound so memory accesses (those other than register spills, if register spills are being used) aren't a reason to vectorise.

Anyway, the ATI compiler should be seeing the inherent parallelism even without the use of OpenCL's intrinsic vector types. But, as I said earlier, the register-packing question is still a fiddlesome detail.

Jawed

http://www.nvidia.com/content/cudaz...s/papers/NVIDIA_OpenCL_BestPracticesGuide.pdf

The CUDA architecture is a scalar architecture. Therefore, there is no performance benefit from using vector types and instructions. These should only be used for convenience. It is also in general better to have more work-items than fewer using large vectors.

Timo Stich's presentation, page 18, mentions bundling multiple elements per work-item as an optimisation for memory access:

http://sa09.idav.ucdavis.edu/docs/SA09_NVIDIA_IHV_talk.pdf

so I suppose that can be considered a use of vectors, too.

smallptGPU is arithmetic bound so memory accesses (those other than register spills, if register spills are being used) aren't a reason to vectorise.

Anyway, the ATI compiler should be seeing the inherent parallelism even without the use of OpenCL's intrinsic vector types. But, as I said earlier, the register-packing question is still a fiddlesome detail.

Jawed

I did some of the changes discussed in this thread. As Jawed suggested, hand tuning workgroup size is useful. On Linux 64bit + Q6600 + ATI HD 4870:

Workgroup size 8 => 890K samples/sec

Workgroup size 16 => 1719K samples/sec

Workgroup size 32 => 3373K samples/sec

Workgroup size 64 => 6486K samples/sec

Workgroup size 128 => 5515K samples/sec

Workgroup size 256 => 5436K samples/sec

As side note, Linux 64bit is quite faster than Windows XP 32bit (6486K vs 5500K).

I uploaded a new beta version at http://davibu.interfree.it/opencl/smallptgpu/smallptgpu-v1.5beta.tgz

It includes the new parameter to force workgroup size and few other changes I did in the hope to fix NVIDIA problems. There are few .bat to try different workgroup sizes.

I will appreciate if someone with a NVIDIA GPU can give it a try. You have only to run the following .bat to try different wrokgroup sizes:

RUN_SCENE_CORNELL_32SIZE.bat

RUN_SCENE_CORNELL_64SIZE.bat

RUN_SCENE_CORNELL_128SIZE.bat

Workgroup size 8 => 890K samples/sec

Workgroup size 16 => 1719K samples/sec

Workgroup size 32 => 3373K samples/sec

Workgroup size 64 => 6486K samples/sec

Workgroup size 128 => 5515K samples/sec

Workgroup size 256 => 5436K samples/sec

As side note, Linux 64bit is quite faster than Windows XP 32bit (6486K vs 5500K).

I uploaded a new beta version at http://davibu.interfree.it/opencl/smallptgpu/smallptgpu-v1.5beta.tgz

It includes the new parameter to force workgroup size and few other changes I did in the hope to fix NVIDIA problems. There are few .bat to try different workgroup sizes.

I will appreciate if someone with a NVIDIA GPU can give it a try. You have only to run the following .bat to try different wrokgroup sizes:

RUN_SCENE_CORNELL_32SIZE.bat

RUN_SCENE_CORNELL_64SIZE.bat

RUN_SCENE_CORNELL_128SIZE.bat

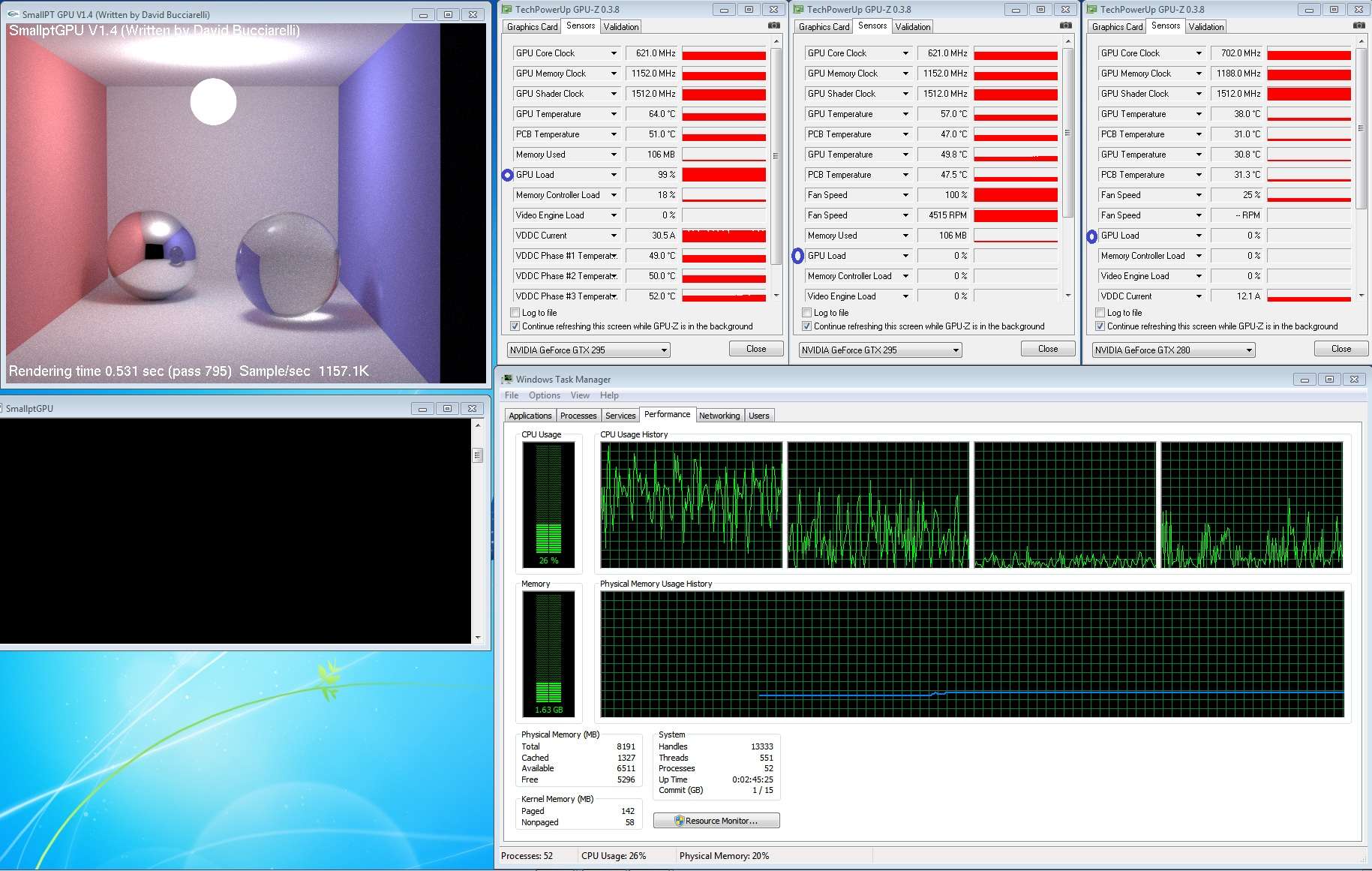

~16700K samples/s with 64 WG size on my 5870 -- a steady improvement up from 13700K with the v1.4.

Chaps with NV hardware need to report, now.

I see no improvement using WG 64-256 and half speed using WG 32?

Same 13333K score ...

W7 64bit OCL final.

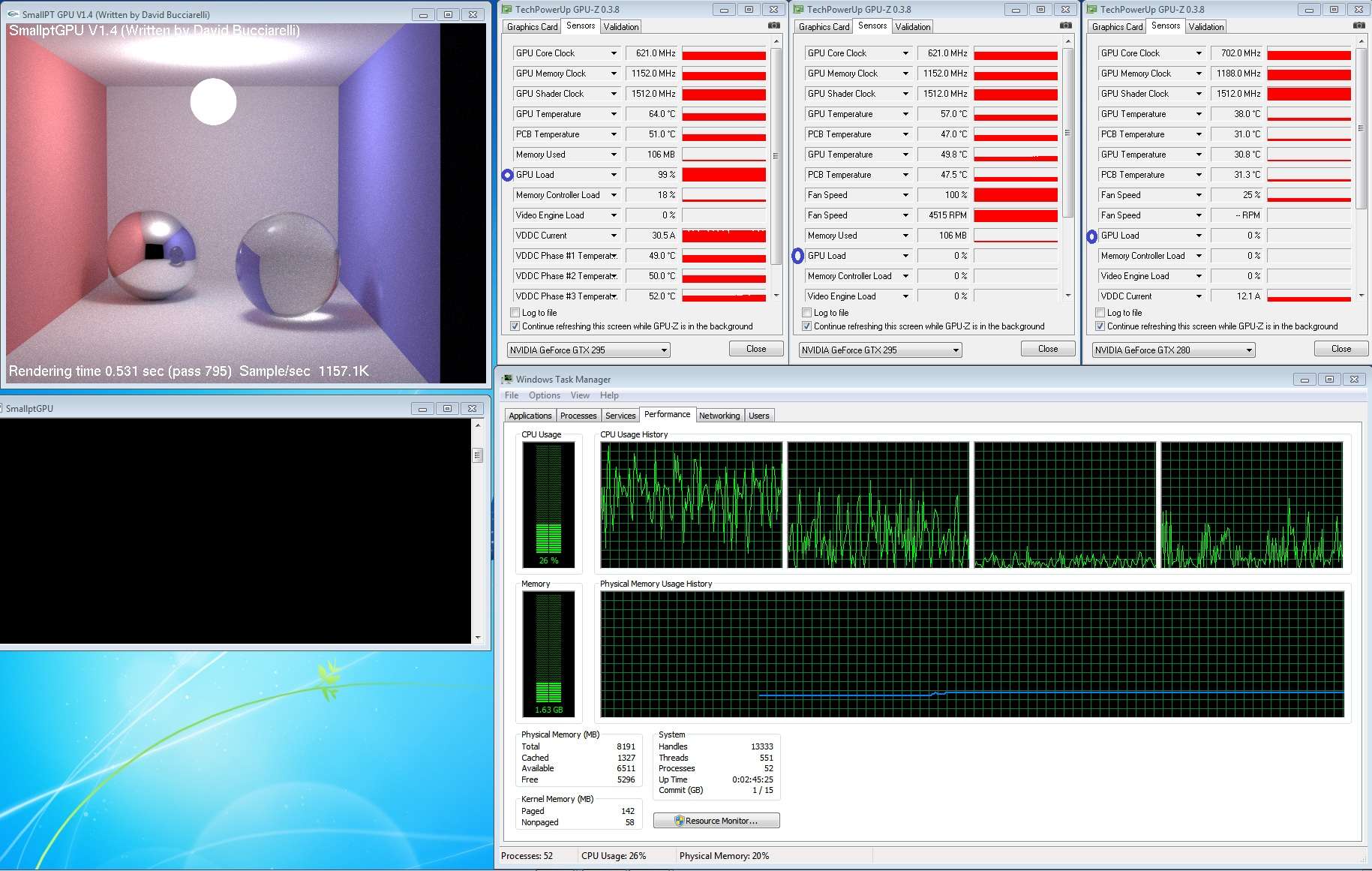

1/2 of a 295:

RUN_SCENE_CORNELL_32SIZE = 2072.2k

RUN_SCENE_CORNELL_64SIZE = 2898.2K

RUN_SCENE_CORNELL_128SIZE = 2898.2K

RUN_SCENE_SIMPLE_64SIZE = 113,564.9K

Is your 295 still OCed? Your sig says EVGA GTX 295 C=621 S=1512 M=1152?

Ooh, that's a nice bump on ATI performance. Nice to see a big jump in NVidia performance

NVidia users might want to create a BAT file with other sizes, e.g. 96, 160, 192, 224, 256, 320 and 384 - just to see where the sweetspot is...

Jawed

You're in luck that there's no new deals on Steam today hehe.

GTX280 / Core i7-860 on Win7 x64:

Size 32 -> 1711.4k

Size 64 -> 2250.5k

Size 96 -> 2129.6k

Size 128 -> 2314.1k

Size 160 -> 2250.5k

Size 192 -> 2250.5k

Size 224 -> 1831.3k

Size 256 -> 2072.2k

Size 320 -> 2250.5k

Size 384 -> 2250.5k

The numbers keep fluctuating a little but tend to stabilise as more passes are done. These are all sort of in the middle, neither the highest nor the lowest.

Other than the notable drops at 32 and 224, workgroup size doesn't seem to affect the score too much on Nvidia.

Something else must've changed between 1.4 and 1.5, because 1.4 has only half the performance at size 384.

run_scene_cornell_96size = 2662.0kooh, that's a nice bump on ati performance. Nice to see a big jump in nvidia performance

Nvidia users might want to create a bat file with other sizes, e.g. 96, 160, 192, 224, 256, 320 and 384 - just to see where the sweetspot is...

Jawed

run_scene_cornell_160size = 2898.1k

run_scene_cornell_192size = 2898.7k

run_scene_cornell_224size = 2318.5k

run_scene_cornell_256size = 2526.3k

run_scene_cornell_320size = 2813.9k

run_scene_cornell_384size = 2813.9k

Similar threads

- Replies

- 126

- Views

- 49K