Install the app

How to install the app on iOS

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

DirectX 12: The future of it within the console gaming space (specifically the XB1)

- Thread starter Shortbread

- Start date

Hey, bloom made a big difference in early games. E.g. WoW looked really great with that filter years ago. Without bloom it looked really boring.Don't forget bloom. Truly the new "piss filters" of this gen (among others effects...) and they will be remembered as such.

But I know what you mean ... in most games it was just to much ... but hey, if everything glows, you don't need other light sources ;-) ... Bloom is the future ...

Well hope not

GameWorks.That demo was supremely impressive. Unfortunately without 12_1 I don't think you can enable a lot of those features they have going.

haha. well still though without Tier 3 TR and CR, I think it's going to be rough on the hardwareGameWorks.

Epic Games' Ray Davis talks about how DirectX 12 will allow developers to squeeze more out of the Xbox One and the benefits it might bring to the Xbox One.

http://gamingbolt.com/epic-games-un...rs-to-squeeze-out-even-more-from-the-xbox-one

http://gamingbolt.com/epic-games-un...rs-to-squeeze-out-even-more-from-the-xbox-one

Finally, to wrap up the talk we will look at how our pipelines could evolve in the future, especially with upcoming APIs such as DirectX 12.

http://advances.realtimerendering.c...siggraph2015_combined_final_footer_220dpi.pdf

http://advances.realtimerendering.com/s2015/index.html

Rikimaru

Veteran

Render the scene at 2x2

lower resolution (540p) with

ordered grid 4xMSAA pattern

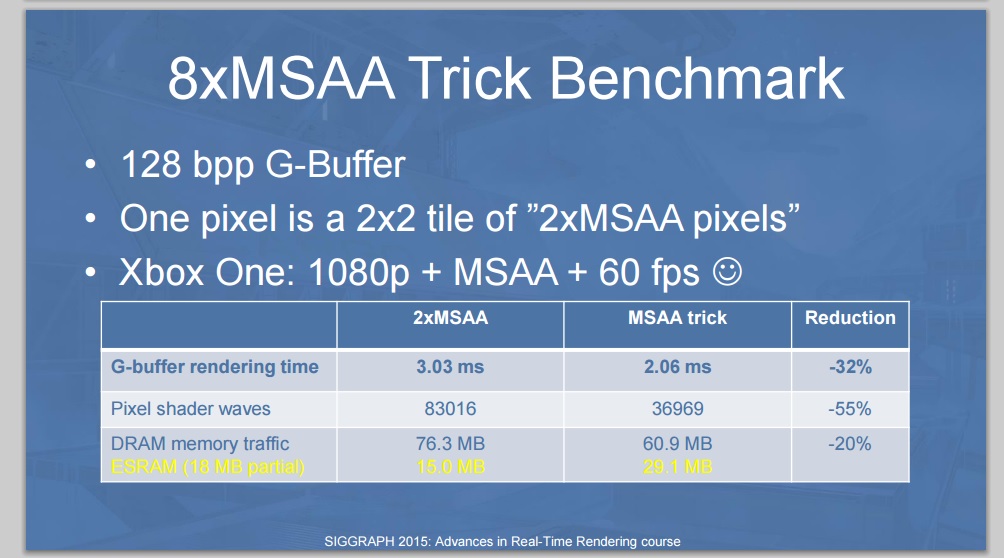

8xMSAA Trick Benchmark

One pixel is a 2x2 tile of ”2xMSAA pixels”

Always good stuff from sebbbi.

Edit: I'm still a bit of an amateur at this stuff, and trying to make sense of the MSAA trick. Looks clever. In the little diagram, the small x's are the 4xMSAA ordered-grid sub-samples, right?

Edit2: The part that's messing me up is the "one pixel is a 2x2 tile of 2xMSAA pixels." I think I understand it creating 4 "interior pixels" for a 1080p render target for every 4 pixels in the 540p buffer. You use the interior sample in the ordered-grid and interpolate the UV and tangent. So why does that end up being a 2x2 tile of 2xMSAA pixels? Is there a step in there that I'm missing? Hopefully what I wrote is not embarrassingly wrong. But also, why would this preserve edge quality so well?

Last edited:

I think he's saying that in addition to treating pixel centers as geometry samples on an ordered 4x grid ("MSAA trick"), there's 2x of "actual MSAA"?Edit2: The part that's messing me up is the "one pixel is a 2x2 tile of 2xMSAA pixels." I think I understand it creating 4 "interior pixels" for a 1080p render target for every 4 pixels in the 540p buffer. You use the interior sample in the ordered-grid and interpolate the UV and tangent. So why does that end up being a 2x2 tile of 2xMSAA pixels? Is there a step in there that I'm missing? Hopefully what I wrote is not embarrassingly wrong.

So each pixel in the 540p buffer would look something like this:

Where green is the pixel center in the 540p buffer, purple is the location of the pixel centers in the implied 1080p buffer, and red is the actual samples in the buffer (two per purple, since 2xMSAA).

Maybe. Or something.

This is correct. Our G-buffer is equivalent to 2xMSAA in quality (with EQAA it is actually very close to 4xMSAA on consoles). This reduces edge crawl compared to other games that don't have any additional samples per pixel. We of course have other antialing methods as well + custom resolve + toksvig mapping to reduce specular aliasing. I am currently at airport with my iPad only. I will provide a detailed answer later.I think he's saying that in addition to treating pixel centers as geometry samples on an ordered 4x grid ("MSAA trick"), there's 2x of "actual MSAA"?

So each pixel in the 540p buffer would look something like this:

Where green is the pixel center in the 540p buffer, purple is the location of the pixel centers in the implied 1080p buffer, and red is the actual samples in the buffer (two per purple, since 2xMSAA).

Maybe. Or something.

If someone wonders why the G-buffer only uses 18 MB of ESRAM, the answer is: we are doing lighting, post processing, VT page generation and particle rendering using async compute in the background. Only culling + g-buffer draws + shadow draws are in the render pipeline. Everything else is async compute. This gives us huge perf gains. Unfortunately I had only a 25 minute slot, meaning that I had to remove all my async compute slides.

This is correct. Our G-buffer is equivalent to 2xMSAA in quality (with EQAA it is actually very close to 4xMSAA on consoles). This reduces edge crawl compared to other games that don't have any additional samples per pixel. We of course have other antialing methods as well + custom resolve + toksvig mapping to reduce specular aliasing. I am currently at airport with my iPad only. I will provide a detailed answer later.

If someone wonders why the G-buffer only uses 18 MB of ESRAM, the answer is: we are doing lighting, post processing, VT page generation and particle rendering using async compute in the background. Only culling + g-buffer draws + shadow draws are in the render pipeline. Everything else is async compute. This gives us huge perf gains. Unfortunately I had only a 25 minute slot, meaning that I had to remove all my async compute slides.

Thanks for clearing that up (and also @HTupolev). I get it now. Looking forward to hearing more. I'm assuming all platforms see the same benefits. Is this a viable way of doing 4k on the PC, rendering first at 1080p?

The thought process goes something like this: What is the minimal amount of data we need to identify each surface pixel in the screen.@sebbbi just a curiosity, but how exactly does the thought process play out for all these new render pipelines and techniques? Is it looking into research? Or just a lot of trial and error with some intuition to guide you ?

Depth only pass would actually be enough. You just need a function (or a lookup) to transform the (x, y, depth) triplet to UV (of the virtual texture). You could for example use a world space sparse 3d grid that describes the UV mapping of each (x, y, z) location. However since you would have N triangles in each grid cell, the grid would require variable size data per pixel (meaning that loop + another indirection is needed). So this kind of method would be only practical for volume rendering (or terrain rendering) with some simple mapping such as triplanar. Unfortunately we are not yet rendering volumes (like media molecule does), so this idea was scrapped.

So in practice, the smallest amount of data you need per pixel is a triangle id. 32 bits is enough for this, and since the triangle id is constant accross a triangle the MSAA trick works perfectly. With 8xMSAA you have 8 pixels per real pixel. You don't need multiple samples per pixel, since the triangle id + screen implicit x, y allows you to calculate perfect analytical AA in the lighting. This method works very well with GPU driven culling, since the culling gives each cluster a number (= the array index of that cluster in the visible cluster list). This way you can numerate each triangle without needing to double pass you geometry (and use atomic counter for visible pixels). Unfortunately this method is incompatible with procedurally generated geometry, such as most terrain rendering implementations and tessellation. Skinning is also awkward. So I scrapped this idea as well. There is actually a Intel paper about this tech (our research was independent of that). I recommend reading it if you are interested.

So we ended up just storing the UV implicitly. This is efficient since our UV address space for currently visible texture data is only 8k * 8k texels, thanks to virtual texturing (with software indirection). This is another reason why we don't use hardware PRT. The other reason is that there is no UpdateTileMappingsIndirect. Only the CPU can change the tile mappings. Hopefully we get this in a future API.

We just used the same tangent presentation as Far Cry did. Tangent frame (instead of normal) allows anisotropic lighting and parallax mapping in the lighting shader. You can also try to reconstruct the tangent from the UV gradient and the depth gradient (calculated in screen space), but the failed pixels will look horrible. In comparison the failed gradient calculation only results in bilinear filtering and that is not clearly noticeable in single pixel wide geometry (check the gradient error image in the slides).

Yes. MSAA trick for 1080p results in 4K.Is this a viable way of doing 4k on the PC, rendering first at 1080p?

However, we need API support for programmable MSAA sampling patterns. Many existing PC GPUs already support this. DX12 unfortunately didn't add this

Yes. MSAA trick for 1080p results in 4K.

However, we need API support for programmable MSAA sampling patterns. Many existing PC GPUs already support this. DX12 unfortunately didn't add this

So this is console only for now, or does Vulcan or Metal support programmable MSAA sampling patterns?

Both Nvidia and AMD already have OpenGL extensions for programmable MSAA pattern. Vulkan supports extensions, so even if it didn't have native support, extensions will surely be available.So this is console only for now, or does Vulcan or Metal support programmable MSAA sampling patterns?

The thought process goes something like this: What is the minimal amount of data we need to identify each surface pixel in the screen.

...

Thinking a little more on this, what are the main drawbacks to this MSAA trick in terms of image quality? The only thing I can think of is potentially missing fine geometry. There shouldn't be any loss in texture resolution or lighting, and edge quality by your account should be very comparable.

Not to discourage you or anything, but you should read this entire thread from the start or at least start many pages back from here where we fully discuss in this thread what aspects Xbox stands to gain from DX12. There's 1600 posts in here lol. You will find things in here that those websites you have quoted have not covered.Although direct12 might give xbox one some improvements, there is not going to be that much of a change since consoles have had low level access apis for a long time

Similar threads

- Replies

- 16

- Views

- 965

- Replies

- 14

- Views

- 4K

- Replies

- 4

- Views

- 2K

- Replies

- 3

- Views

- 13K