And why are RDNA1 numbers on a GPU power virus like furmark even remotely relevant to the amount of time the PS5's RDNA2 GPU will be at 2.23GHz?

Okay, so this is out of context. I never claimed Furmark was relevant to the amount of time the PS5 could boost for.

I was using it to try and estimate a lower bound for XSX locked clocks based on Furmark and supposing similar scaling between 5700XT boost clocks and PS5 boost clocks.

Are Microsoft or Sony going to have power viruses available on their online store? I just don't see how the PS5's boost needs to ever be similar to a desktop graphics card's boost based on a previous architecture. First because Cerny has repeatedly said the GPU spends most of its time at 2.23GHz whereas the 5700 XT does not spend most of its time at 1905MHz, second because the console's boost needs to work differently due to the fact that the PS5's boost is solely dependent on power consumption whereas a desktop card will just churn out higher clocks if the ambient temperature is lower, and third because it's a new architecture.

A lot to unpack here, but briefly, IMO:

- You need to be able to handle a Furmark-like situation, whether it's by having the power and cooling capacity or by throttling. Even if it's just for a fraction of a frame's rendering time, you need to be able to handle these situations.

- Boost is based on physics, even if you use a model based on activity rather than real time measuring of sensors. There will necessarily be similarities in behaviour, especially between very similar architectures.

- Cerny did not say the GPU spends most of its time at 2.23 GHz. He said "most" and "at or close to", and it's a big difference. A huge one really, in the context of this forum. If we're going to use Cerny's words we need to actually use them, and not a partisan "fake news" edit of them.

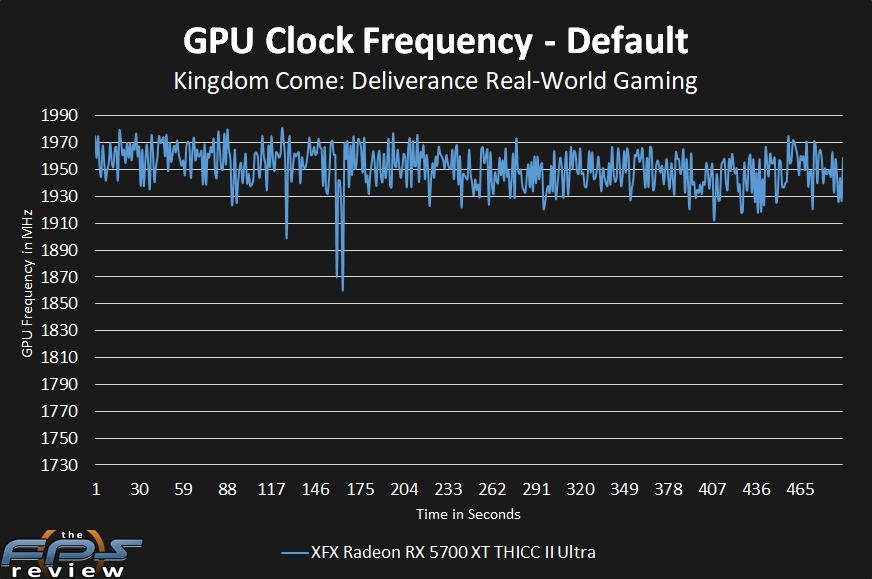

- I think the 5700XT probably does spend "most" of its time "at or close to" 1905 MHz, depending on what those words mean to you. In the Anandtech review every game's average clock was at 90% or more of the advertised max boost frequency. Some are above 95%. GTA V is above 100%!

- PC GPUs don't just keep churning out higher clocks based on temperature! AMD cards have power limits, with default values that can limit frequency even when the card is within its temperature limits.
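
To put numbers on "at or close to", here's a quick sketch of what 90% and 95% of the advertised peak look like in MHz. The peak clocks are the ones from this thread; the 90% and 95% cut-offs just mirror the Anandtech observation above, and applying them to the PS5 is my assumption about similar scaling, not anything Cerny or AMD have said.

```python
# Advertised peak clocks taken from the thread (MHz).
PEAK_MHZ = {"5700 XT": 1905, "PS5": 2230}

# Cut-offs for "at or close to". These are illustrative assumptions,
# chosen to match the 90% / 95% figures quoted from the review.
FRACTIONS = (0.90, 0.95)


def threshold_mhz(peak_mhz, fraction):
    """Return the clock in MHz that is `fraction` of the advertised peak."""
    return peak_mhz * fraction


for name, peak in PEAK_MHZ.items():
    for fraction in FRACTIONS:
        clock = threshold_mhz(peak, fraction)
        print(f"{name}: {fraction:.0%} of {peak} MHz is {clock:.1f} MHz")
```

So if "close to" means within 10%, the PS5 could be sitting a couple of hundred MHz under 2.23 GHz and still fit the wording.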

And finally, RDNA2 isn't a completely new architecture, and it's only moving to a minor node improvement rather than a completely unknown one. Characteristics should be quite similar. There really isn't a better architecture to base speculation on at this time.

When have console GPUs ever dictated the maximum clock rates of PC GPUs? When the PS4 came out with an 800MHz GPU we had Pitcairn cards working at 1000MHz, with boost to 1050MHz. That's a 25% higher base clock with boost giving it a roughly 31% higher clock, but boost has come a long way since GCN1 chips.

PS4 didn't have a boost mode that pushed the limits of the chip and the process the way Cerny claims the PS5's does. You can't read anything into the PS5's peak boost clock from PS4 clocks IMO.
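
For reference, the headroom in that PS4 vs Pitcairn comparison works out as below. The clocks are the ones given in the quote; the helper name is just mine. With those clocks the boost figure comes out a touch over 31%.

```python
def percent_higher(clock_mhz, reference_mhz):
    """Return how much higher `clock_mhz` is than `reference_mhz`, in percent."""
    return (clock_mhz / reference_mhz - 1) * 100


# Clocks as quoted in the thread (MHz).
PS4_MHZ = 800
PITCAIRN_BASE_MHZ = 1000
PITCAIRN_BOOST_MHZ = 1050

base_headroom = percent_higher(PITCAIRN_BASE_MHZ, PS4_MHZ)
boost_headroom = percent_higher(PITCAIRN_BOOST_MHZ, PS4_MHZ)

print(f"Base clock headroom over PS4:  {base_headroom:.2f}%")
print(f"Boost clock headroom over PS4: {boost_headroom:.2f}%")
```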

And I didn't actually say PS5 or XSX were dictating anything, but I do think they might be indicating something interesting: approximate RDNA2 boost and base (or slightly lower) frequencies respectively.

2.23 GHz as a (conservative) upper limit for the logic comes from Cerny.

"In fact we have to cap the GPU frequency at 2.23 GHz so we can guarantee that the on chip logic operates properly".

So this isn't about power or heat. It's about the logic operating correctly at a given frequency. Some PC RDNA cards will fare a little better in the silicon lottery no doubt, and binning may help for a top end model, but PC RDNA 2 isn't going to see the boost headroom over PS5 that PCs have seen over the last gen systems.

PS5 is already well up there with what the architecture can do on the silicon IME.

What are AMD's recommended power and voltage values for the PS5 SoC, and how do they compare to the discrete PC graphics cards?

I'm not expecting the console silicon to have completely different power and voltage curves just because it's in a console. I don't think AMD's recommendations will be much, if any, different.

The claims are for the architecture, i.e. for a lineup of GPUs that will go from (at least) mid-range to high-end, and not just specifically "Big Navi". I'm pretty sure the Macbook Pro's Navi 12 has a lot more than a 50% perf/watt increase over a Radeon 5700 XT, so making that 50% claim for "wider and slower" doesn't make much sense if they could have done it within the same architecture.

Claims about perf/watt come with conditions and won't be universally the same. I take claims like these as being "up to" figures based on planned chips, at predicted frequencies using selected workloads. I guess we'll find out in November ...

Edit: and just to re-iterate, "relatively slower" is relative to where you can reach with the new chip, and internal, architectural tweaks can reduce power consumption and improve perf/watt independent of clock speeds and IPC (though they often go together).
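
As a rough illustration of why "relatively slower" helps perf/watt, here's a toy model. It uses the textbook approximation that dynamic power scales with voltage squared times frequency, and it assumes (my assumption, not AMD data) that voltage has to rise roughly in line with clock near the top of the curve. It ignores static leakage and any architectural tweaks, so treat it as a sketch only.

```python
def relative_perf_per_watt(clock_scale, voltage_exponent=1.0):
    """Perf/watt relative to running at clock_scale = 1.0.

    Toy model, all assumptions:
      performance ~ clock
      dynamic power ~ voltage**2 * clock
      voltage ~ clock**voltage_exponent (1.0 means voltage tracks clock)
    """
    perf = clock_scale
    voltage = clock_scale ** voltage_exponent
    power = voltage ** 2 * clock_scale
    return perf / power


# Running 10% slower, with voltage dropping in step with clock.
print(f"{relative_perf_per_watt(0.90):.3f}")

# Same clock drop at fixed voltage: no perf/watt change in this model.
print(f"{relative_perf_per_watt(0.90, voltage_exponent=0.0):.3f}")
```

In this model all of the gain comes from being able to drop voltage, which is why where a chip sits on its own voltage/frequency curve matters more than the absolute clock.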

Nvidia have been particularly good at minimising power usage for a given amount of work at a given frequency.