Can DX10 card emulate DX9 card?

- Thread starter DragonAvenger

TurnDragoZeroV2G

Regular

Took care of that

BTW, thanks for the link.

chavvdarrr

Veteran

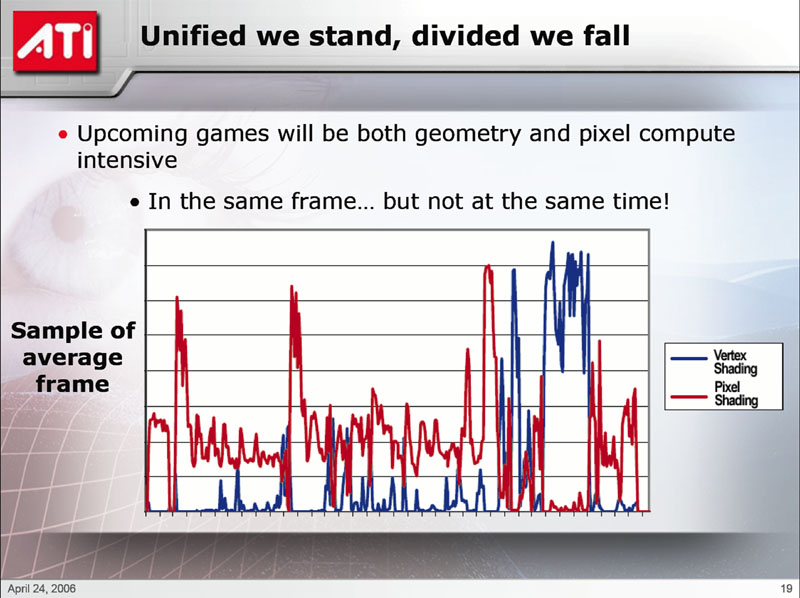

Jawed said: This slide got missed in the [H] article:

what slide?!

I measure about 18% of the frametime where the vertex load exceeds the pixel load. That long section at the end is about 10%. If that got 5x faster in a unified GPU, then that's an easy ~8% performance gain.
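To make the arithmetic behind that ~8% explicit, here is a minimal sketch that treats the estimate as an Amdahl's-law style calculation. The function name `frame_speedup` is made up for illustration, and the 10% and 5x figures are simply the numbers quoted above, not measurements.

```python
def frame_speedup(fraction: float, local_speedup: float) -> float:
    """Overall speedup when `fraction` of the frame time runs `local_speedup` times faster."""
    new_time = (1.0 - fraction) + fraction / local_speedup
    return 1.0 / new_time


# Figures from the post: the vertex-bound section is ~10% of the frame
# and is assumed to run 5x faster on a unified GPU.
speedup = frame_speedup(0.10, 5.0)
time_saved = 1.0 - 1.0 / speedup

print(f"frame time saved: {time_saved:.1%}")
print(f"frame rate gain:  {speedup - 1.0:.1%}")
```

The 10% section shrinks to 2% of the original frame time, so 8% of the frame time is saved. Expressed as a frame rate gain it comes out slightly higher, because the frame now takes 0.92 of its original time.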

Jawed

geo said: How's that Vista GUI benchmark coming along, Demirug?

Two different D3D10 projects are stealing too much of my time at the moment. The RefRast is so sloooooooow. I need hardware.

Why did they copy my figure? I guess they either got the data points from the Excel sheet embedded in a figure in the slides for my Micro paper, or they photoshopped the PDF figure with new colors.

For reference, slide 25 in here and figure 7 here.

I don't mind about them using it though.

It was a frame from the 3dcenter's UT2004 Primeval map timedemo.

Jawed

Legend

Cheeky buggers! I thought of you when looking at this.

Actually, this kinda worryingly implies that they don't have their own way of graphing rendering workload based upon their own internal simulations (they'd have been able to just pull up one of those graphs if they had them). That can't be right, can it?

Jawed

RoOoBo said: More likely, and after reading the whole presentation, it looks like someone from marketing (or similar) had my slides at hand and using them was way faster than asking one of their architecture teams to provide their own graphics.

Or maybe we don't comment on unannounced products.

chavvdarrr

Veteran

10x geo

a question:

Guennardi takes over the keyboard and mouse from Will and first tempers my expectations by reminding me that what I will be shown is actually running on current-generation hardware. "It's an X1900. What we've done is take the pixel shader unit and run everything in a Direct3D 10-like fashion on it – vertex shader, geometry shader, pixel shader."

"Essentially, you're emulating the Unified Shader Architecture on just the pixel shader?" I ask.

"Exactly."

ehhhhhmmmmmm ?!

X1900 pixel shaders are capable of emulating vertex and geometry ones?!

Jawed

Legend

Basically a geometry shader is able to create/delete triangles (and associated vertices), while a vertex shader cannot.

In X1900XT (like many GPUs) it's possible to write out data instead of merely rendering pixels to the screen. This concept is called render to vertex buffer, R2VB, when used to simulate geometry shading.

In effect a vertex buffer is a set of data items describing triangles. So, if you write a pixel shader that can write out vertex data, and then get X1900XT to read that data back in again as vertices, you have simulated the VS->GS->PS process.

In other words you use VS->PS->VB (R2VB) to generate the final triangles (perform geometry shading). You then re-submit the newly generated triangles, VB->VS->PS, to render the final result.
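The two passes described above can be sketched on the CPU. This is only an illustration of the data flow, not Direct3D code: the names `pixel_shader`, `render_to_vertex_buffer` and `resubmit` are hypothetical, and the choice of splitting each triangle into four is an assumption picked to show triangles being created.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]
Triangle = Tuple[Vec2, Vec2, Vec2]

# Indices 0..2 are the original corners; 3, 4, 5 are the midpoints of
# edges 0-1, 1-2 and 2-0. Each tuple is one generated sub-triangle.
SUBDIVISION = [(0, 3, 5), (3, 1, 4), (5, 4, 2), (3, 4, 5)]
VERTS_PER_INPUT_TRI = 3 * len(SUBDIVISION)  # 12 output vertices per input triangle


def midpoint(a: Vec2, b: Vec2) -> Vec2:
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def pixel_shader(x: int, y: int, source: List[Triangle]) -> Vec2:
    """Pass 1: texel (x, y) emits output vertex x of input triangle y."""
    a, b, c = source[y]
    points = [a, b, c, midpoint(a, b), midpoint(b, c), midpoint(c, a)]
    sub_tri, corner = divmod(x, 3)
    return points[SUBDIVISION[sub_tri][corner]]


def render_to_vertex_buffer(source: List[Triangle]) -> List[Vec2]:
    """Shade a 12 x len(source) render target and keep it as a flat vertex buffer."""
    return [pixel_shader(x, y, source)
            for y in range(len(source))
            for x in range(VERTS_PER_INPUT_TRI)]


def resubmit(vertex_buffer: List[Vec2]) -> List[Triangle]:
    """Pass 2: read the buffer back in as a plain triangle list."""
    return [tuple(vertex_buffer[i:i + 3]) for i in range(0, len(vertex_buffer), 3)]


source = [((0.0, 0.0), (2.0, 0.0), (0.0, 2.0))]
vertex_buffer = render_to_vertex_buffer(source)
triangles = resubmit(vertex_buffer)
print(len(vertex_buffer), len(triangles))
```

One thing the sketch makes visible: each texel writes exactly one vertex, so the number of generated vertices is fixed by the size of the render target you chose before the pass started.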

As well as being able to create/delete triangles, you can also use the fast texturing capability of the PS hardware in X1900XT to perform "vertex texturing". This way you avoid the s-l-o-w (or in X1900XT's case, the nonexistent) vertex texturing capability of SM3 GPUs.

Jawed

NocturnDragon

Regular

Jawed said: In other words you use VS->PS->VB (R2VB) to generate the final triangles (perform geometry shading). You then re-submit the newly generated triangles, VB->VS->PS, to render the final result.

I had the impression they also used the PS to calculate what is usually calculated by the VS, with the VS just running a dummy shader to pass data over to the PS...

So basically something like this:

Code:

|Setup|      |Geom Shad |      |Save Results|      |dummy|      |Vertx Shad |      |Save Results|      |dummy|      |Pixel Shad |      |   Output   |

| VS  | ---->|    PS    | ---->|     VB     | ---->| VS  | ---->|    PS     | ---->|     VB     | ---->| VS  | ---->|    PS     | ---->|Frame Buffer|

Edit: corrected the pic

Jawed

Legend

The first "dummy VS" actually organises the creation of the VB, as I understand it. Since a PS can only render to memory locations determined by the triangle (or quad) it's shading, the initial VS effectively provides the PS with the domain of inputs. e.g. if you want to start with 100 vertices before performing R2VB, then you prolly need something like a 10x10 quad as the starting geometry (or a 100x1 quad). Humus can prolly explain this in English much better...
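As a rough illustration of that "domain of inputs" idea, the sketch below maps vertex indices onto texels of a quad and back. The row-major layout and the helper names (`target_size`, `texel_for_vertex`, `vertex_for_texel`) are assumptions for the example, not something taken from ATI's implementation.

```python
from typing import Tuple


def target_size(vertex_count: int, width: int) -> Tuple[int, int]:
    """Smallest width x height quad with at least vertex_count texels."""
    height = -(-vertex_count // width)  # ceiling division
    return width, height


def texel_for_vertex(index: int, width: int) -> Tuple[int, int]:
    """Texel (x, y) that the PS invocation for this vertex writes to (row-major)."""
    y, x = divmod(index, width)
    return x, y


def vertex_for_texel(x: int, y: int, width: int) -> int:
    """Inverse mapping: which vertex a given texel holds."""
    return y * width + x


print(target_size(100, 10))      # the 10x10 quad from the post
print(target_size(100, 100))     # the 100x1 alternative
print(texel_for_vertex(37, 10))
```

Either quad shape covers the same 100 vertices; only the mapping between vertex index and texel coordinates changes.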

Once you've generated the VB, you need to do backface culling, viewport clipping etc., all of which are dedicated hardware units in the vertex processing section of the GPU. You could do these in software in the PS, I suppose, but it seems wasteful to ignore the functionality that's already there.
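For a sense of what doing one of those steps in software would mean, here is a minimal backface test on screen-space triangles. It assumes counter-clockwise winding is front-facing with y pointing up, which is only a convention chosen for this sketch. The function names are hypothetical.

```python
from typing import Tuple

Vec2 = Tuple[float, float]


def signed_area(a: Vec2, b: Vec2, c: Vec2) -> float:
    """Positive for counter-clockwise triangles, negative for clockwise ones."""
    return 0.5 * ((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def is_culled(a: Vec2, b: Vec2, c: Vec2) -> bool:
    """Cull back-facing (clockwise) and zero-area triangles."""
    return signed_area(a, b, c) <= 0.0


front = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
back = (front[0], front[2], front[1])  # same triangle, opposite winding
print(is_culled(*front), is_culled(*back))
```

The fixed-function hardware does this test for every triangle without any shader code, which is why it seems wasteful to redo it in the PS.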

Jawed

NocturnDragon

Regular

Jawed said: The first "dummy VS" actually organises the creation of the VB, as I understand it. Since a PS can only render to memory locations determined by the triangle (or quad) it's shading, the initial VS effectively provides the PS with the domain of inputs. e.g. if you want to start with 100 vertices before performing R2VB, then you prolly need something like a 10x10 quad as the starting geometry (or a 100x1 quad). Humus can prolly explain this in English much better...

Once you've generated the VB, you need to do backface culling, viewport clipping etc., all of which are dedicated hardware units in the vertex processing section of the GPU. You could do these in software in the PS, I suppose, but it seems wasteful to ignore the functionality that's already there.

Jawed

Got it, thanks!

Whilst I wasn't there to see it in person, I was told that the DX developer day at GDC '06 was running a hacked-up version of D3D10's RefRast on current hardware - in realtime, of course.

By no means fully functional, but some of the D3D10 features/API were remapped back to top-end D3D9 hardware. Sounds like something fairly similar to what ATI described (and was discussed in the last few posts)...

Jack
