What's up with this: http://techreport.com/news/30133/amd-debuts-radeon-m400-mobile-gpus-with-a-host-of-rebrands

Why all the rebrands when Polaris 11 is about to be launched?

AMD: Speculation, Rumors, and Discussion (Archive)

- Thread starter iMacmatician

- Start date

- Status

- Not open for further replies.

Anything that we can compare it to?

OEMs demand yearly refreshes, no matter if the chips are rebrands or new ones.

Specifically, laptop OEMs base their product cycle on when the market buys, and if you don't offer them a line with new model numbers when they want to make a new laptop line, they go to the competitor. Both GPU makers are happy to oblige, so we get things like this or G300M.

gamervivek

Regular

Anything that we can compare it to?

Still beaten by a 390.

http://ranker.sisoftware.net/show_r...efdce4ddedd4eccab885b593f693ae9eb8cbf6ce&l=en

Needs more GHz.

Anything that we can compare it to?

The earlier Polaris 10 result showed roughly equivalent performance per MHz per shader to Hawaii, while this one shows it much lower (or as if it were only running at 910 MHz).

http://ranker.sisoftware.net/show_r...dce4d6e4d0e8cebc81b197f297aa9abccff2c3f5&l=en
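
For anyone wanting to redo that kind of sanity check, here is a minimal sketch of the per-MHz, per-shader normalization being described. Everything in it is an assumption for illustration: the function name `implied_clock_mhz` and all the numbers are made-up placeholders, not actual SiSoft scores or confirmed Hawaii/Polaris specs. It also assumes the score scales linearly with shader count and clock, which a real benchmark may well not do.

```python
def implied_clock_mhz(ref_score, ref_shaders, ref_mhz, new_score, new_shaders):
    """Clock the new chip would need in order to match the reference
    chip's score per shader per MHz (assumes perfectly linear scaling)."""
    return new_score * ref_shaders * ref_mhz / (ref_score * new_shaders)


# Placeholder numbers only, NOT real benchmark results or real specs.
clock = implied_clock_mhz(
    ref_score=1000, ref_shaders=2000, ref_mhz=1000,
    new_score=450, new_shaders=1000,
)
print(clock)  # 900.0
```

If the implied clock comes out far below the clock the chip is believed to run at, either the per-shader efficiency dropped or the sample was not running at that clock; the number alone cannot tell the two apart.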

What is SiSoft performance measuring? Is it just an inconsistent waste of time?

What happened with Polaris not-presentation?

Apparently it was a complete snooze fest that reiterated things that were already known (14FF is better than 28nm! etc.). But at least AMD warned everybody about that.

Then I'm seriously worried. AMD basically said that they have nothing more to offer, even though it is so easy to offer more than what Nvidia has shown. Let's hope it's just someone in AMD's marketing department who needs to be replaced. *crosses fingers*

What happened with Polaris not-presentation?

Couple of new confirmations and rehashing some of what was known.

http://semiaccurate.com/forums/showpost.php?p=262627&postcount=1044

Toettoetdaan said:

This is what they were allowed to share:

- FreeSync up to 4K120

- DP 1.3 (and probably higher, but as the spec is not accepted they don't advertise with it)

- HDMI 2.0

- HDR Ready

- Designed for DX12 and Vulkan

- VR Ready (They are going to reveal new LiquidVR features on the Polaris launch)

- 14nm FF (2,5 again confirmed)

- Full 4K60 Hardware H.265/HEVC encode & decode

- Low latency 4k game streaming

- AMD TrueAudio technology

Nothing unexpected, but I think it looks good.

They basically made a video presentation of the Anandtech article...

Anything that we can compare it to?

The SiSoft tests are apparently useless as a point of comparison.

http://www.overclock.net/t/1600443/...-of-test-samples-increasing/160#post_25173850

http://www.overclock.net/t/1600443/...-of-test-samples-increasing/170#post_25173961

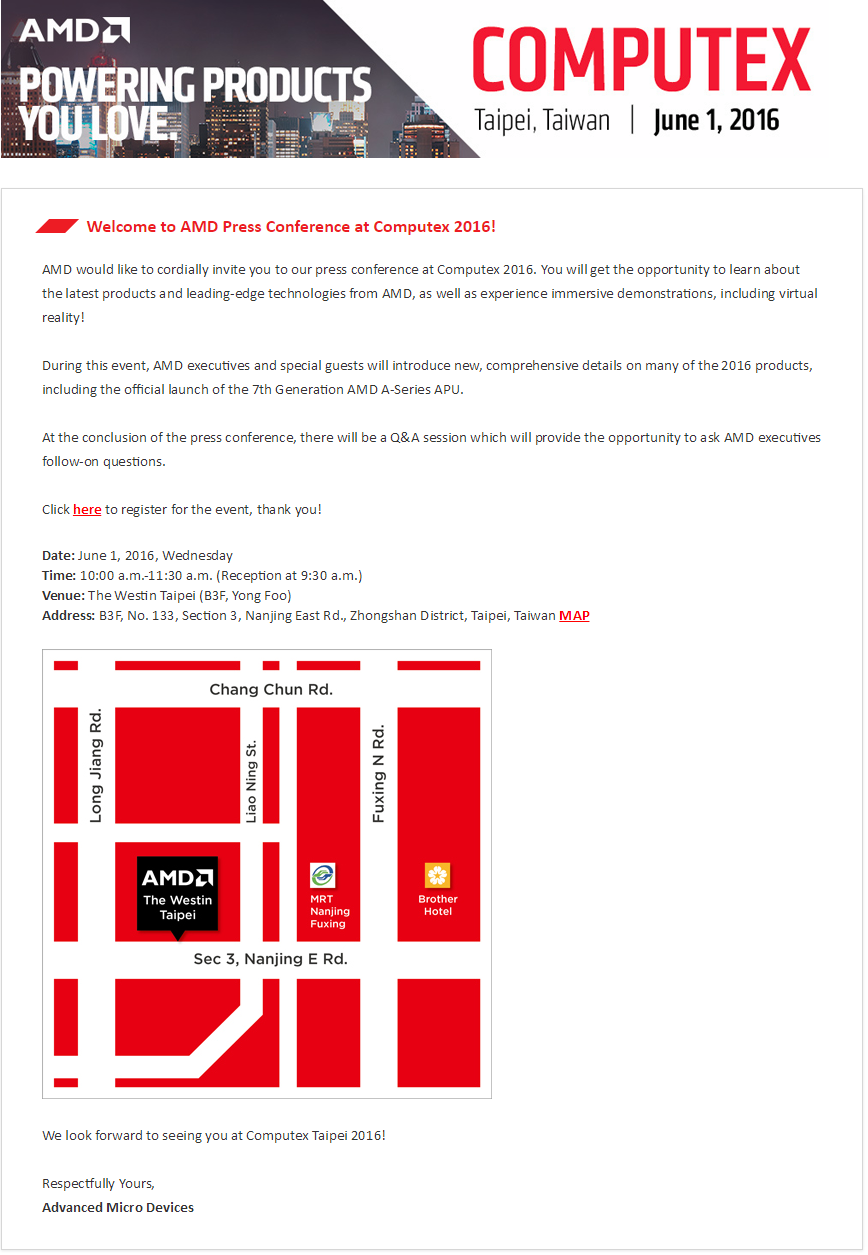

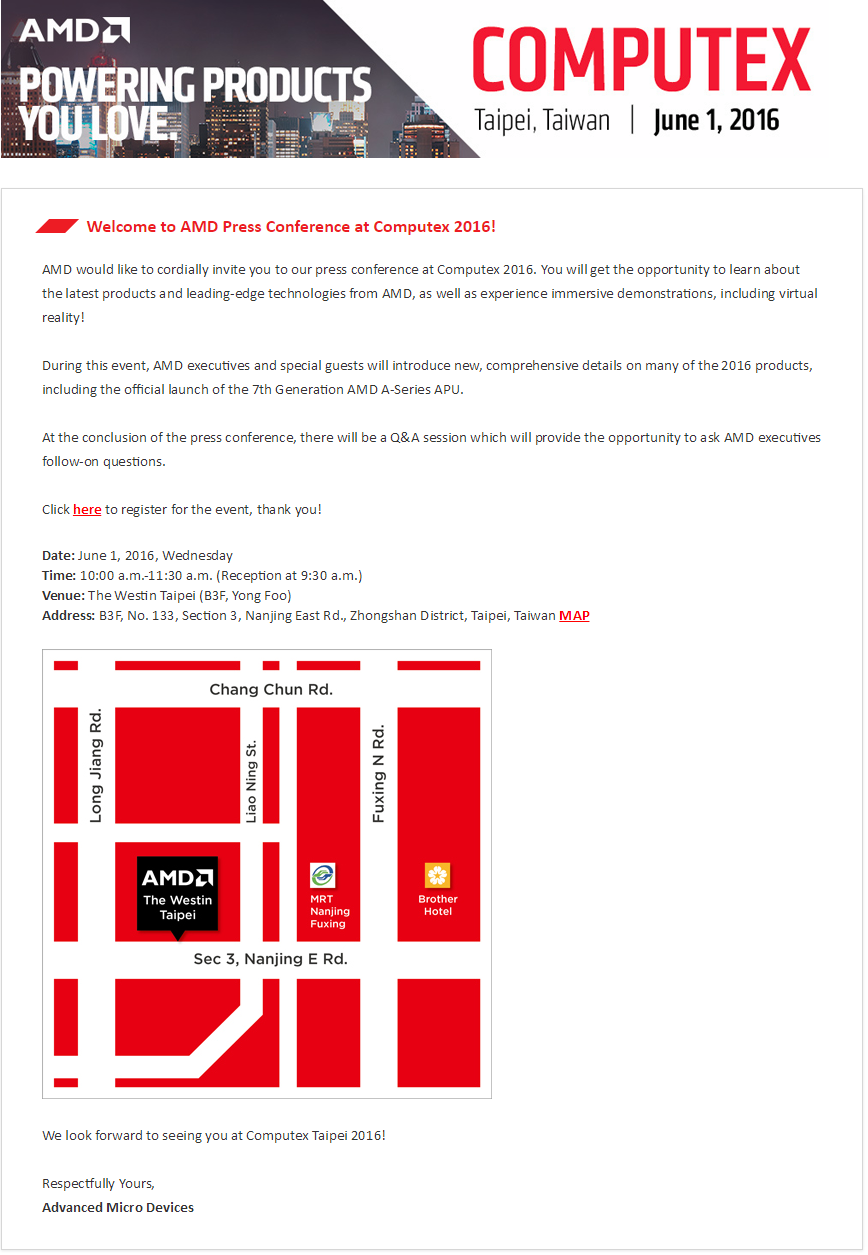

Well Computex for AMD could be great or a disappointment.....

I mentioned before that the Computex invitation was sending mixed messages: the header mentioned a Polaris announcement, but the details suggested it was more of an introduction to 2016 products, with the official launch announcement being for the A-Series APU.

Well, it looks like that header was only a watermark......

This is the actual invitation:

So I have no idea how AMD is handling their messaging on this. As I said, it could be either a great Computex or a rather disappointing one, but I do not think we can now assume Polaris is launching at this event.

Link to update: http://videocardz.com/59947/amd-to-launch-polaris-10-at-computex

Cheers

It seems that people are still confusing the terms "async compute", "async shaders" and "compute queue". Marketing and the press don't seem to understand the terms properly and keep spreading the confusion.

Hardware:

AMD: Each compute unit (CU) on GCN can run multiple shaders concurrently. Each CU can run both compute (CS) and graphics (PS/VS/GS/HS/DS) tasks concurrently. The 64 KB LDS (local data store) inside a CU is dynamically split between currently running shaders. Graphics shaders also use it for intermediate storage. AMD calls this feature "Async shaders".

Intel / Nvidia: These GPUs do not support running graphics + compute concurrently on a single compute unit. One possible reason is the LDS / cache configuration (GPU on-chip memory is configured differently when running graphics; CUDA even allows direct control over it). There are most likely other reasons as well. According to Intel documentation, it seems that they run the whole GPU in either compute mode or graphics mode. Nvidia is not as clear about this. Maxwell likely can run compute and graphics simultaneously, but not both in the same "streaming multiprocessor" (SM).

Async compute = running shaders in the compute queue. The compute queue is like another "CPU thread". It doesn't have any ties to the main queue. You can use fences to synchronize between queues, but this is a very heavy operation and likely causes stalls. You don't want to do more than a few fences (preferably one) per frame. Just like "CPU threads", a compute queue doesn't guarantee any concurrent execution. The driver can time slice queues (just like the OS does for CPU threads when you have more threads than CPU cores). This can still be beneficial if you have big stalls (GPU waiting for CPU, for instance). AMD's hardware works a bit like hyperthreading. It can feed multiple queues concurrently to all the compute units. If a compute unit has stalls (even small stalls can be exploited), the CU will immediately switch to another shader (also graphics<->compute). This results in higher GPU utilization.
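
To make the hyperthreading analogy concrete, here is a deliberately tiny toy model, not a description of how any real GPU scheduler works. Each queue is a string of cycles: `R` needs the execution unit, `S` is a stall (waiting on memory, say) that does not. The names `exclusive_cycles` and `interleaved_cycles` and the one-unit, one-cycle-per-step model are all assumptions made up for this sketch.

```python
def exclusive_cycles(queues):
    """Queues run one after another; the unit sits idle during stalls."""
    return sum(len(q) for q in queues)


def interleaved_cycles(queues):
    """One execution unit shared by all queues. Stalls of different queues
    elapse in the background, and the unit picks up an 'R' from another
    queue whenever the first ready queue is stalled."""
    pos = [0] * len(queues)
    cycles = 0
    while any(p < len(q) for p, q in zip(pos, queues)):
        cycles += 1
        unit_busy = False
        for i, q in enumerate(queues):
            if pos[i] >= len(q):
                continue  # this queue has finished
            if q[pos[i]] == "S":
                pos[i] += 1  # a stall cycle passes without using the unit
            elif not unit_busy:
                pos[i] += 1  # the unit executes one cycle of this queue
                unit_busy = True
    return cycles


graphics = "RSSRSS"  # toy graphics work with memory stalls
compute = "RRRR"     # toy compute work that never stalls

print(exclusive_cycles([graphics, compute]))    # 10
print(interleaved_cycles([graphics, compute]))  # 6
```

In this toy case the compute work fits entirely into the graphics queue's stall cycles, which is the utilization gain described above. With no stalls to fill, interleaving gains nothing in this model.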

You don't need to use the compute queue in order to execute multiple shaders concurrently. DirectX 12 and Vulkan by default run all commands concurrently, even from a single queue (at the level of concurrency supported by the hardware). The developer needs to manually insert barriers in the queue to represent synchronization points for each resource (to prevent read<->write hazards). All modern GPUs are able to execute multiple shaders concurrently. However, on Intel and Nvidia the GPU is running either graphics or compute at any one time (but can run multiple compute shaders or multiple graphics shaders concurrently). So in order to maximize performance, you'd want to submit large batches of either graphics or compute to the queue at once (not alternate rapidly between the two). You get a GPU stall ("wait until idle") on each graphics<->compute switch (unless you are on AMD, of course).
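
The batching advice can be illustrated by simply counting graphics<->compute switches in a submission order. This is a rough sketch with made-up command names; `count_switches` and `batch_by_kind` are hypothetical helpers, not part of DirectX 12 or Vulkan. It also ignores resource dependencies, so in a real renderer a reordering like this is only valid where the barriers allow it.

```python
def count_switches(commands):
    """Number of graphics<->compute transitions in submission order."""
    kinds = [kind for kind, _name in commands]
    return sum(1 for a, b in zip(kinds, kinds[1:]) if a != b)


def batch_by_kind(commands):
    """Group all graphics work first, then all compute work.
    sorted() is stable, so the order within each kind is preserved.
    Assumes (for this toy only) no dependencies between the two kinds."""
    return sorted(commands, key=lambda cmd: cmd[0] != "graphics")


# Hypothetical frame that alternates rapidly between the two kinds.
alternating = [
    ("graphics", "g0"), ("compute", "c0"),
    ("graphics", "g1"), ("compute", "c1"),
    ("graphics", "g2"), ("compute", "c2"),
]
batched = batch_by_kind(alternating)

print(count_switches(alternating))  # 5
print(count_switches(batched))      # 1
```

On hardware that stalls at every switch, the alternating order pays for five "wait until idle" points per frame in this example and the batched order pays for one.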

Nice post, however I still have a couple of questions..

At the beginning sebbi says that it is not clear how things work for Nvidia, but then he says Maxwell can run compute and graphics simultaneously on different SMs.. so is it unclear only for Kepler and Pascal, or what?

And later he says that an Nvidia GPU runs in either graphics or compute mode at a time... doesn't this contradict the first sentence?

I don't get it..

Love_In_Rio

Veteran

Is the A-Series APU made up of Zen and Polaris? It makes little sense to present an APU with Polaris inside without first having presented the GPU architecture (or the Zen one).

The only explanation could be this is a priority foundry project due to consoles' requirement to be out with big volumes for October (rumored PS4 Neo launch).

Ext3h

Regular

At the beginning sebbi says that it is not clear how things work for Nvidia, but then he says Maxwell can run compute and graphics simultaneously on different SMs.. so is it unclear only for Kepler and Pascal, or what?

And later he says that an Nvidia GPU runs in either graphics or compute mode at a time... doesn't this contradict the first sentence?

Both Maxwell and Pascal can split this at either SMM or TPC granularity (we actually don't know which), running it in parallel. We don't know about earlier generations. Compute and graphics are still exclusive on Pascal, but the GPU may re-allocate without a complete shutdown, and not only at command list boundaries.

Starting a compute-only command list switches the entire GPU (all SMMs/TPCs) into compute mode, so that was effectively exclusive in Maxwell. A mixed allocation is still possible, though, for a 3D command list.

Is the A-Series APU made up of Zen and Polaris? It makes little sense to present an APU with Polaris inside without first having presented the GPU architecture (or the Zen one).

The only explanation could be this is a priority foundry project due to consoles' requirement to be out with big volumes for October (rumored PS4 Neo launch).

7th gen APU is Carrizo on AM4 with DDR4. It was 'pre-announced' a month ago, but specific SKUs and design wins will be presented around/at Computex.


Deleted member 13524

Guest

It was 'pre-announced' a month ago, but specific SKUs and design wins will be presented around/at Computex.

Let's hope those exist, outside the very rare and completely butchered examples we saw with Carrizo design "wins".

AMD should try to make special bundles for Bristol Ridge + Polaris 11, or give away an Oland with every Bristol Ridge sale.

That and develop a number of small laptop motherboard and cooling designs that guarantee the usage of dual-channel memory, PCIe x16 connection to the discrete GPU and PCIe+SATA M.2 slots. If you want it to be done right, do it yourself.
