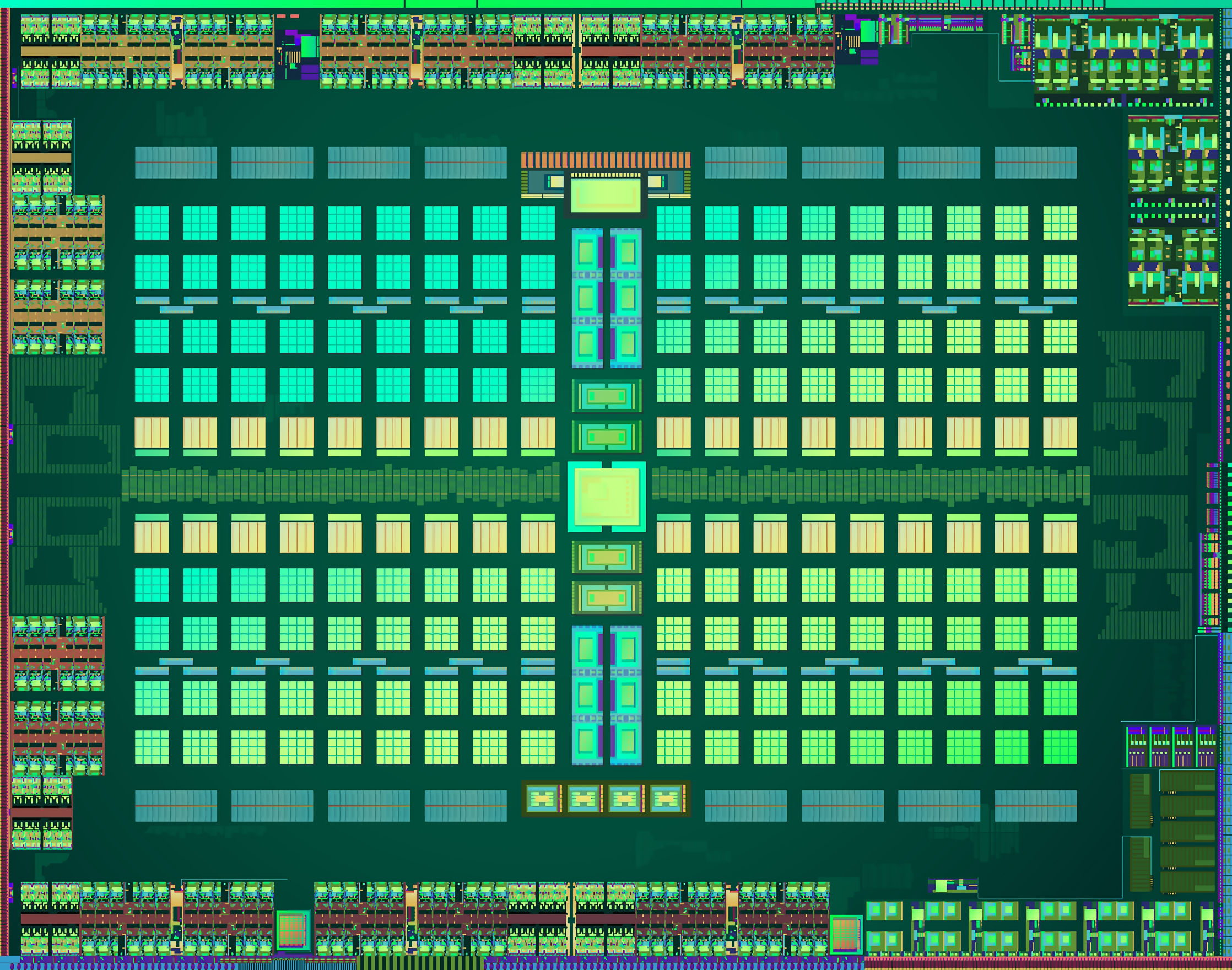

The Polaris CU block diagram is identical to previous GCNs (and they have that same one scalar there); the ISA hasn't changed either. This entire topic has been missed with all the power discussion. Shader clusters aside, I haven't seen much in the way of what is inside a cluster. Historically AMD hasn't counted scalars, but they could be playing a larger role with Polaris and there could be more of them.

AMD: Speculation, Rumors, and Discussion (Archive)

- Thread starter iMacmatician

- Status

- Not open for further replies.

OK one of the better extreme OC'ers has gone to town with the 480.

They managed to hit 1500MHz, the caveat being, as they said themselves, that "it is not a 24/7 setting"; this brought it up to the performance of a 980 (not a reference model).

Enjoy the video, just appreciate English is not his first language.

And to hit that 1500MHz, yeah quite a lot of DIY modding required including software accessing the PWM controller - his changes are well outside of 'normal' operating parameters but this is extreme OC.

Also to stress, he mentions the PCIe slot being OK, but what he focused on was the ATX12V 24-pin and not the actual x16 slot, so yeah, the potential for the slot being an issue still applies.

Cheers

So despite the fact that AMD 'shopped it with a different color, people still think those yellow fake rectangles are spare CUs?

...

Have you seen the power consumption? This is hugely disappointing because it seems like Polaris needs the same level of power to achieve the same performance as an R9 390X.

itsmydamnation

Veteran

While in many cases having close to 1/2 the resources. The 480 is just pushed higher in clock than is ideal given the cooler and power delivery. It will be interesting to revisit in a year's time, with many more DX12 games, to see where Hawaii and Polaris 10 sit vs Maxwell and Pascal.

But I think people have ignored the fact that Polaris 10 must be having pretty good yields, given there is no rush to release the 470 and there is more stock than you can poke a stick at. One interesting thing that Raja has said several times, and that I don't understand the maths of, is that on 14nm small chips are still very close to Moore's law. Could we be underestimating the cost difference between 230mm² and 310mm²?
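For anyone who wants to play with that last question, here is a minimal back-of-the-envelope sketch. Everything in it is an assumption, not AMD or foundry data: a 300 mm wafer, the usual gross-dies-per-wafer approximation with an edge-loss term, a simple Poisson yield model, and placeholder defect densities. The die areas are the 230 and 310 from the post, read as mm². The function names are made up for illustration.

```python
import math


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Approximate gross dies per wafer, including a simple edge-loss term."""
    radius = wafer_diameter_mm / 2.0
    gross = math.pi * radius * radius / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(gross - edge_loss)


def poisson_yield(die_area_mm2, defects_per_cm2):
    """Poisson yield model: probability that a die has zero defects."""
    return math.exp(-defects_per_cm2 * die_area_mm2 / 100.0)


def relative_cost_per_good_die(die_area_mm2, defects_per_cm2):
    """Cost of one good die, expressed as a fraction of one wafer's cost."""
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(
        die_area_mm2, defects_per_cm2
    )
    return 1.0 / good_dies


# Placeholder defect densities (defects per cm^2); purely hypothetical values.
for d0 in (0.1, 0.2, 0.5):
    small = relative_cost_per_good_die(230.0, d0)
    large = relative_cost_per_good_die(310.0, d0)
    print(f"D0={d0}: cost ratio 310/230 = {large / small:.2f} "
          f"(area ratio = {310.0 / 230.0:.2f})")
```

The point of the sketch is only that, under these assumptions, the cost per good die grows faster than the area ratio, and the gap widens as the assumed defect density goes up. Real numbers depend on wafer pricing, harvesting of partially defective dies, and actual process defect rates, none of which are known here.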

It will be interesting then also, to revisit the then-competitive landscape and pricing. IOW: Don't buy hardware now for workloads you expect to happen in the future.

Again, power delivery on the 480 is not the problem. I think the problem just comes from their new "software" voltage regulator system; you can power two 480s with it without a problem. Something was not checked as it should have been at first. Maybe their test vehicles, which are not "standard" motherboards, did not exhibit the problem.

I don't know if you have already seen those green board tests.

I'm not sure I would call that the future, when it should already be here (if we are speaking about DX12). The fact is that it is much harder than expected to change the way games are coded with DX12. But hey, don't worry, it was even worse with DX10. I remember AMD and MS organizing tons of conferences for game programmers because they were completely lost, with the result we know: most DX10 games finally became DX11 games (and DX10 was nearly abandoned).

I would understand it to mean that larger chips do not allow packing transistors as tightly as small chips do. If you look at the power consumption, transistor count and die size, I would dare say that even Polaris 10 is having some power leakage problems.

I am pretty sure that a timeframe one year from now actually and verifiably is the future.

I'm pretty sure that we could move this future up to the end of 2016, and sebbi (who is now responsible for "Unity rendering research", wow man) could maybe confirm it; I don't want to speak for him. I see all the lights turning green for the end of 2016.

And what a surprise, that is exactly when Vega and GP100 are expected to arrive.

But yes, in the end it was more difficult than expected at first. Well, I don't know why people (too enthusiastic, maybe) thought at first that it would be easy and quick. The good thing with this "future", as you call it, is that AMD has always had the luck (outside of the R600, and even that one comes with a caveat, with tessellation and other new SP engines) that their "view" finally matched the times. Let's hope it continues like this. If we look back, history has always proved AMD right from one point of view or another.

FWIW: In my opinion, what we see right now is just the first wave of DX12 titles, most of which feature what was called a bolted-on DX12 mode. I agree, in a year's time we might (and I really hope for that to happen!) see much more comprehensive DX12 implementations, where some really cool features are used above and beyond what you can bolt on with a very limited budget after your renderer is basically finished.

After speaking with Richard Huddy last week and reading what sebbi wrote in these forums, it is clear that with HPCQ you could, for example, see pathfinding for AI moved to the GPU. Things like this would not just be a performance feature but would enable whole new paradigms in game design. Concurrent compute execution via asynchronous compute queues could really help leverage all the shader resources available, yessurethankyousir. But it's gonna be hard to tell how the vastly different workloads will turn out in terms of performance on different architectures, hell, even within one family of architectures. Add to the mix that Nvidia will probably push their own strengths with Gameworks and use things like ROV/CV in order to save processing time or even devise new algorithms for... things.

I think the first misconception with DX12, maybe due to Mantle, was to think "free performance gain" (but who cares about winning 10fps here and there). What was missing is the "use of this efficiency" to include new features (such as the AI you cite). Of course, when you see the results of some current titles and the state of the gaming industry, which is largely dominated by immediate profit (cashing in as fast as they can), it's a bit, well, confusing right now.

The problem is always the same: games, and specifically the engines that run them, take time to develop. You can "port" them (DX11 > DX12), but done in a short time this will surely still be the same engine.

At least AOTS has the merit of using an engine that was basically developed for Mantle/Vulkan/DX12 from scratch (I'm not saying it is a good one, just pointing this out). But at the same time it is an engine for the game style where that work is hardest to see: the RTS, which can combine the worst graphics (units) with the best code efficiency (or the worst, if developers make errors). If you make a wonderful main character in a third-person shooter and add a lot of detail to the game, it is immediately visible and applauded; with an RTS, that's very different.

For every single D3D release since the general crowd started to actually pay attention to D3D, people have expected performance gains, as in higher fps, solely due to the change of API. This has never worked out the way the public expected. For example, no one wants to ship a game that is unplayable on D3D11 but works like a charm on D3D12. Developers will work around the restrictions of the API (batching in the pre-D3D12 world). They will complain about it, obviously. But at the end of the day, Ashes of the Singularity is the closest to unplayable in D3D11 that we'll probably ever see.
