AMD: Speculation, Rumors, and Discussion (Archive)

- Thread starter: iMacmatician
- Status: Not open for further replies.

Well, there is such a thing as physics, and the properties of passing current through various metals are generally well understood. But yes, this is precisely why specifications exist and why they are very often extremely conservative.

And as one usually can't do a negative proof (that's a general thing), the only way to show something actually will cause problems is to demonstrate them and try to narrow down the circumstances under which this will occur.

The problem (in this specific case) isn't really with the physics, though; it is the breaking of a promise. If a power supply promises to provide at least 75W to a device and in fact provides at least 80W, but is confronted with a sustained 85W load (from a device which promised not to pull more than 75W) and shuts down to protect itself, who is at fault? The one breaking the promise. Always. You might say that is a pretty sad power supply, and I might agree. But at the end of the day it fulfilled its obligation and is not liable for the result. The device, on the other hand, is, because it made a promise it did not keep. No sane company selling thousands or millions of products wants to expose itself to any more liability than is absolutely necessary.

A1xLLcqAgt0qc2RyMz0y

Veteran

Well, the specs were put together by the very companies that make and sell the products and systems; they know what is best to keep their business stable from a warranty standpoint. It's all about the money in the end.

You are assuming that no one bends the rules which in this case AMD is doing.

There have been lots of cases where recalls have happened when products are buggy. The Pentium divide bug or Nvidia's underfill problem caused products to be recalled or replaced.

I expect motherboard makers will not indemnify AMD and may in fact state the warranty is void if a PCI-e non-compliant card is installed.

PCI-E only specifies a top ceiling of 75W at boot time, after which a series of negotiations between the card and the mobo determines how much power that specific slot will use (up to 300 watts, I think) for the rest of the session. Motherboards will not burn.

This info is also on reddit.

The over-spec PCIE power use seems pretty substantiated, but most people are focusing on anticipated dramatic consequences like blown motherboards.

More relevant is understanding why this situation occurred. Some possibilities:

1. AMD realized it was over spec, hid it from PCIE qualification, and decided not to fix it
2. AMD did not realize it was over spec, PCIE qualification missed it, and only reviewers discovered it
3. After manufacturing began, AMD realized it was over spec, is working on a fix, but shipped the first batch of out-of-spec stock anyway
4. AMD was in spec, but a last-minute BIOS change to increase clocks/voltages pushed its power use over spec and nobody caught the PCIE consequences

You are assuming that no one bends the rules which in this case AMD is doing.

There have been lots of cases where recalls have happened when products are buggy. The Pentium divide bug or Nvidia's underfill problem caused products to be recalled or replaced.

I expect motherboard makers will not indemnify AMD and may in fact state the warranty is void if a PCI-e non-compliant card is installed.

Well, that is the consequence AMD has to face if they don't fix this problem (after verifying the problem, of course).


Deleted member 2197

Guest

RX 480 Crossfire review ...

http://www.hardwareunboxed.com/rx-480-crossfire-performance-gtx-1070-killer/

On average we saw total system power consumption of 437 watts when gaming. This was up from the 235 watts of the single card, and 240 watts of the GTX 1070. A lot of gamers couldn’t give two stuffs about how much power their rig uses when gaming, but I think it’s an important factor to consider that the cards will use 85% more power than the 1070 system.

Now a lot of you will be interested in how these cards fared against the GTX 1070 in particular. With two RX 480s, performance was on average 14% faster than our single card results with the 1070, where it was 35% slower without its twin. Out of interest, if you take away the games where performance was jittery, this average didn’t change. So I suppose for around the same money you can get slightly better performance from this pair of AMD cards, however keep in mind there are some major titles where you’ll be stuck with single card performance, and some others where the gameplay is far from smooth.

Some of these titles mentioned where Crossfire is flat out broken or jittery are very popular games, and if it came down to choosing between the slightly faster overall dual 480s, or the reliable and consistent 1070, then I’d honestly be leaning towards the faster single GPU option, as I always have. I just like to be able to KNOW that my expensive hardware is going to work well with virtually every game.
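A quick check of the review's own figures, as a hedged sketch. It assumes "35% slower" means the single RX 480 scores 0.65x the GTX 1070 and "14% faster" means the pair scores 1.14x; the wattages are whole-system draw, so the perf-per-watt ratio describes the systems, not the cards alone. Worked this way, 437 W is about 82% above the 1070 system's 240 W (and about 86% above the single-card system's 235 W), so the quoted 85% was presumably rounded or computed slightly differently. The dictionary and function names are illustrative only.

```python
# Figures quoted from the Hardware Unboxed RX 480 Crossfire review above.
# Power: total system draw while gaming, in watts.
SYSTEM_POWER_W = {"RX 480": 235.0, "RX 480 CF": 437.0, "GTX 1070": 240.0}

# Performance relative to the GTX 1070 system (= 1.00).
# Assumption: "35% slower" -> 0.65x, "14% faster" -> 1.14x.
RELATIVE_PERF = {"RX 480": 0.65, "RX 480 CF": 1.14, "GTX 1070": 1.00}


def pct_more(a: float, b: float) -> float:
    """Return how much larger a is than b, as a percentage."""
    return (a / b - 1.0) * 100.0


def perf_per_watt(system: str) -> float:
    """Relative performance per watt of whole-system power."""
    return RELATIVE_PERF[system] / SYSTEM_POWER_W[system]


def crossfire_scaling() -> float:
    """Speedup of two RX 480s over one, under the assumptions above."""
    return RELATIVE_PERF["RX 480 CF"] / RELATIVE_PERF["RX 480"]


if __name__ == "__main__":
    print(f"CF system vs 1070 system power: +{pct_more(437.0, 240.0):.1f}%")
    print(f"CF system vs single RX 480 system power: +{pct_more(437.0, 235.0):.1f}%")
    print(f"CrossFire scaling: {crossfire_scaling():.2f}x")
    ratio = perf_per_watt("RX 480 CF") / perf_per_watt("GTX 1070")
    print(f"CF perf/W relative to the 1070 system: {ratio:.2f}")
```

Under these assumptions the pair scales by roughly 1.75x over one card and delivers about 63% of the 1070 system's performance per watt.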


Anarchist4000

Veteran

Or just take their thermal imaging camera, point it at the mobo and see if any of the traces are glowing. We are, after all, arguing the merits of moving enough current to liquefy copper or something nearby.

Wasn't Tom's review saying something along the lines of "it's so bad we're afraid our high-end mobo may blow up, so we won't risk it"? Well, that's great: get a 30 EUR mobo and test it. If it blows up, you just uncovered something equivalent to VW's emission-spec cheating in the GPU space. Surely a massive recall would follow. Might even destroy AMD.

Hard to know without a proper diagram for the card. There could be a common plane biased with resistors and diodes, VRMs tied to the different rails, or a combination where one or more is shared. In theory, someone with a card could test the input voltage of each VRM and likely figure it out. VRMs are basically switching power supplies.

How does the distribution of load between different power inputs work?

Do they have, say, 2 VRMs on one and 2 on the other?

If anything, it will burn out one of the traces or melt some plastic. They would need a high-voltage line run to their computer to really blow it up.

Actually, the statement that it can lead to blowing up mainboards is very far from an established fact. It's pure conjecture at this point. If someone wants to show it is a real danger, he has to test it, preferably on the cheapest, lowest-quality mainboard he can find.

Like taking an old analog phone on an aircraft to mess up cockpit communication or analog landing guidance systems? Maybe in some third-world nations.

This is important not because it would happen but because it can happen. Same thing as why you can't use your cellphone in a plane.

Different, not necessarily better. 14nm is the low-power variant of what is basically the same thing.

Just asking... but is AMD's 14nm process 15% better than Nvidia's 16nm process, as the numbers suggest?

Not sure #2 is something you can't miss deliberately. #3 is possible; it might also be a shoddy component. #4, while possible, seems unlikely, as the board should have been equal or biased towards the 6-pin.

The over-spec PCIE power use seems pretty substantiated, but most people are focusing on anticipated dramatic consequences like blown motherboards.

More relevant is understanding why this situation occurred. Some possibilities:

Each possibility has its own interpretation and consequences. AMD and its engineers are skilled professionals, so I would place my bet on #4 instead of an engineering failure like #1-3.

1. AMD realized it was over spec, hid it from PCIE qualification, and decided not to fix it
2. AMD did not realize it was over spec, PCIE qualification missed it, and only reviewers discovered it
3. After manufacturing began, AMD realized it was over spec, is working on a fix, but shipped the first batch of out-of-spec stock anyway
4. AMD was in spec, but a last-minute BIOS change to increase clocks/voltages pushed its power use over spec and nobody caught the PCIE consequences

Not sure why people think an 8-pin would make any difference here. More in-spec power delivery is about the only reason, and that still wouldn't fix the issue.

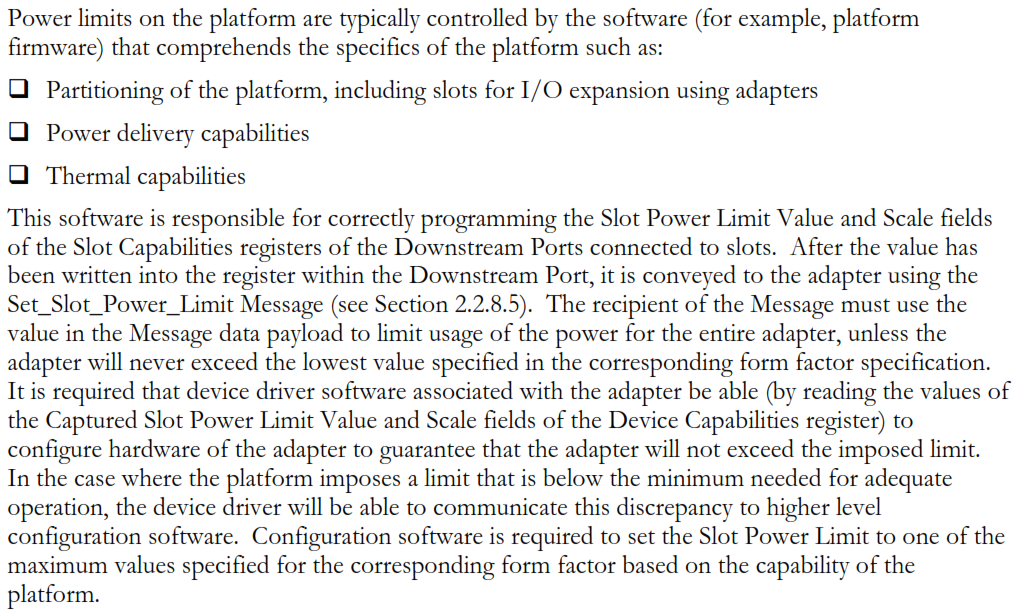

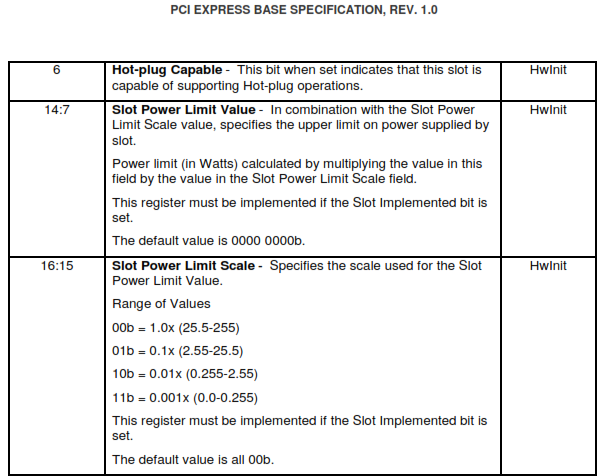

There is a pretty old spec for the electromechanical design where that 75W (as the minimum requirement?) is stated. But the PCIe spec actually includes a slot capabilities register which should reflect, well, the capabilities of each slot in the system (and is probably/hopefully set in a platform-specific way by the BIOS). And apparently this capabilities register includes a value for the "slot power limit", with a range up to 240W in 1W steps and then 250W, 275W, 300W and even some reserved values for above 300W. It would be interesting to check how this is configured on typical mainboards. As I understand the spec, the card has to limit its consumption to the programmed value as long as it wants to use more than the form factor spec (75W for PEG); that is, it is allowed to use max[form_factor_spec, slot_power_limit]. I would guess the very high values are used for those MXM-like modules for the Tesla cards (where 250+W are supplied over the [non-standard] slots).

What does the spec actually say? Have any mobo makers said anything? You'd think they'd be the most worried, since they are the ones who have to handle the RMA if a board does fail.

edit:

PCIe 3.0 base spec, section 6.9:

But I have no idea how relevant this really is, as one can also read it as limiting the complete consumption of the card, not just the amount supplied by the slot. Earlier versions (like 1.0, which also lacks the 250W, 275W, 300W, and reserved above-300W encodings) appear to more clearly specify just the supply through the slot, though.
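A sketch of the slot power limit decoding described above, based on my reading of the PCIe 3.0 Slot Capabilities register: an 8-bit Slot Power Limit Value multiplied by a 2-bit Scale (1.0x, 0.1x, 0.01x, 0.001x), except that at 1.0x the codes 0xF0/0xF1/0xF2 mean 250/275/300 W and 0xF3 and up are reserved for values above 300 W. Under that reading the 1 W steps run to 0xEF, i.e. 239 W. `allowed_card_power` encodes only the poster's max() interpretation, which, as he notes, is itself open to reading. Function names are hypothetical; check the encodings against the spec before relying on them.

```python
from typing import Optional

# Slot Power Limit Scale (2-bit field) -> multiplier, per my reading of the
# PCIe 3.0 base spec's Slot Capabilities register.
SCALE_MULTIPLIER = {0b00: 1.0, 0b01: 0.1, 0b10: 0.01, 0b11: 0.001}

# Alternative encodings used only when the scale is 1.0x and the value exceeds 0xEF.
SPECIAL_1X_WATTS = {0xF0: 250.0, 0xF1: 275.0, 0xF2: 300.0}


def slot_power_limit_watts(value: int, scale: int) -> Optional[float]:
    """Decode the 8-bit Slot Power Limit Value and 2-bit Scale into watts.

    Returns None for 0xF3-0xFF at 1.0x scale, which are reserved for
    limits above 300 W.
    """
    if not 0 <= value <= 0xFF:
        raise ValueError("Slot Power Limit Value is an 8-bit field")
    if scale not in SCALE_MULTIPLIER:
        raise ValueError("Slot Power Limit Scale is a 2-bit field")
    if scale == 0b00 and value > 0xEF:
        return SPECIAL_1X_WATTS.get(value)  # None -> reserved (>300 W)
    return value * SCALE_MULTIPLIER[scale]


def allowed_card_power(form_factor_w: float, slot_limit_w: float) -> float:
    """The poster's reading: max(form-factor spec, programmed slot limit)."""
    return max(form_factor_w, slot_limit_w)


if __name__ == "__main__":
    for value, scale in [(75, 0b00), (0xEF, 0b00), (0xF0, 0b00),
                         (0xF2, 0b00), (0xF5, 0b00), (250, 0b01)]:
        print(hex(value), bin(scale), slot_power_limit_watts(value, scale))
    # A PEG card (75 W form factor) in a slot programmed to 25 W may still use 75 W.
    print(allowed_card_power(75.0, 25.0))
```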


What does the spec actually say?

"A standard height x16 add-in card intended for server I/O applications must limit its power dissipation to 25 W. A standard height x16 add-in card intended for graphics applications must, at initial power-up, not exceed 25 W of power dissipation, until configured as a high power device, at which time it must not exceed 75 W of power dissipation."

"The 75 W maximum can be drawn via the combination of +12V and +3.3V rails, but each rail draw is limited as defined in Table 4-1, and the sum of the draw on the two rails cannot exceed 75 W."

About as clear as it gets...

From an old revision though...


Deleted member 13524

Guest

Wow, that was a rather nasty little group of AMD-hater circlejerkers back in the pages 171-172 of this thread.

Thank you Rys for stopping it through sheer sanity. The card is getting glowing reviews and winning performance/price ratios pretty much everywhere while at the same time offering very good power consumption, yet we're seeing the usual suspects trying to turn it into humanity's greatest failure.

Regarding the competition part, I can only guess these same people really enjoy how nVidia's full ~300mm^2 GPU debuted at $230 in their first 40nm line, then at $500 in their first 28nm line and now we can't seem to find the 16FF one at less than ~$900 in Europe (thanks to a completely fake MSRP that no one is following because of that FE marketing ploy).

They're either more reliant on nVidia's stock price than they are on GPU price needs, or this is a totally new form of masochism.

The PCIe armchair concerns seem a bit ridiculous from an electrical engineer's POV. The motherboard's 12V feed comes from the PSU (the ATX24 spec includes a number of 12V pins), so if more current is coming from the slot than from the dedicated 6-pin connector, it's simply because it's the path of least resistance in that specific case. Multiple GPUs in one motherboard will probably end up relying more on the 6-pin connector for 12V than on the PCIe slot.
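The "path of least resistance" argument is a plain current divider, but it only holds if the slot and 6-pin 12V inputs feed a common plane on the card. If, as Anarchist4000 suggests earlier, particular VRM phases are tied to particular inputs, the split is set by the phase assignment instead. A minimal sketch under the common-plane assumption; the 150 W load and the milliohm path resistances are made-up illustration values, not measurements, and `split_current` is a hypothetical helper.

```python
from typing import Tuple


def split_current(total_a: float, r_slot_ohm: float, r_aux_ohm: float) -> Tuple[float, float]:
    """Split a load current between two parallel 12 V feeds (current divider).

    Assumes both feeds reach a common 12 V plane on the card from the same PSU
    rail, so each path carries a share proportional to its conductance (1/R).
    Returns (slot_amps, aux_amps).
    """
    if r_slot_ohm <= 0 or r_aux_ohm <= 0:
        raise ValueError("path resistances must be positive")
    g_slot = 1.0 / r_slot_ohm
    g_aux = 1.0 / r_aux_ohm
    g_total = g_slot + g_aux
    return total_a * g_slot / g_total, total_a * g_aux / g_total


if __name__ == "__main__":
    total = 150.0 / 12.0  # hypothetical 150 W card load on 12 V = 12.5 A
    # Hypothetical path resistances: slot path slightly lower than the 6-pin path.
    slot_a, aux_a = split_current(total, r_slot_ohm=0.008, r_aux_ohm=0.012)
    print(f"slot: {slot_a:.2f} A ({slot_a * 12:.0f} W), 6-pin: {aux_a:.2f} A ({aux_a * 12:.0f} W)")
```

With these made-up values the lower-resistance slot path ends up carrying 60% of the load, which is the mechanism the post describes.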

At 12V, pushing 75W means a current of 6.25A. At 83W it goes towards 6.92A. Thinking the motherboard "might blow up" because it's pushing 0.67A more on that specific power pathway is a bit ridiculous IMO.
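The arithmetic in the post is just I = P / V on the 12V rail. A tiny sketch reproducing it; the extra 66 W and 82 W points are the slot's 12V-only limit and the Tom's Hardware in-game average quoted later in the thread. Measured against 66 W rather than 75 W, an 82 W draw is about 1.33 A over on 12V.

```python
def current_a(power_w: float, volts: float = 12.0) -> float:
    """Current drawn for a given power on a given rail: I = P / V."""
    return power_w / volts


if __name__ == "__main__":
    for watts in (66.0, 75.0, 82.0, 83.0):
        print(f"{watts:.0f} W at 12 V -> {current_a(watts):.2f} A")
    print(f"83 W vs 75 W: +{current_a(83.0) - current_a(75.0):.2f} A")
```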

Regardless, a standard is a standard, and although they're almost always over-engineered, they should be followed. Perhaps AMD can issue a driver update that tells the BIOS to force a different power distribution. Though if they don't, I think the consequences will be... none at all.


The official PCIe specification says that an x16 graphics card can consume a maximum of 9.9 watts from the 3.3V slot supply, a maximum of 66 watts from the slot 12V supply, and a maximum of 75W from both combined. Tom's Hardware's measurements showed a 1-minute in-game average of 82 watts from the 12V supply, with frequent transient peaks of over 100 watts. The 3.3V draw stayed in spec.

What does the spec actually say?
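The slot limits quoted above can be turned into a simple check. This is a minimal sketch assuming exactly the figures given in this thread (66 W on 12V, 9.9 W on 3.3V, 75 W combined, 25 W before high-power configuration); it compares single numbers and says nothing about how the spec treats brief transients like the 100 W+ peaks. The names are mine, not the spec's. Note that an 82 W 12V draw breaks both the 66 W per-rail limit and, on its own, the 75 W combined limit.

```python
from typing import List

# Slot limits for an x16 graphics card as quoted in this thread (watts).
SLOT_12V_MAX_W = 66.0      # 5.5 A x 12 V
SLOT_3V3_MAX_W = 9.9       # 3 A x 3.3 V
SLOT_TOTAL_MAX_W = 75.0    # both rails combined, once configured as high power
SLOT_INITIAL_MAX_W = 25.0  # at initial power-up, before high-power configuration


def slot_violations(p12_w: float, p3v3_w: float, high_power: bool = True) -> List[str]:
    """Return the slot power limits a measurement exceeds (empty list if none)."""
    problems = []
    if p12_w > SLOT_12V_MAX_W:
        problems.append(f"12V: {p12_w:.1f} W > {SLOT_12V_MAX_W} W")
    if p3v3_w > SLOT_3V3_MAX_W:
        problems.append(f"3.3V: {p3v3_w:.1f} W > {SLOT_3V3_MAX_W} W")
    total = p12_w + p3v3_w
    limit = SLOT_TOTAL_MAX_W if high_power else SLOT_INITIAL_MAX_W
    if total > limit:
        problems.append(f"combined: {total:.1f} W > {limit} W")
    return problems


if __name__ == "__main__":
    # 82 W is Tom's Hardware's 12V in-game average; the 3.3V value here is a
    # hypothetical placeholder, since the thread only says it "stayed in spec".
    print(slot_violations(82.0, 3.0))
```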

Infinisearch

Veteran

PCI-E only specifies a top ceiling of 75W at boot time, after which a series of negotiations between the card and the mobo determines how much power that specific slot will use (up to 300 watts, I think) for the rest of the session. Motherboards will not burn.

Who's right?

"A standard height x16 add-in card intended for server I/O applications must limit its power dissipation to 25 W. A standard height x16 add-in card intended for graphics applications must, at initial power-up, not exceed 25 W of power dissipation, until configured as a high power device, at which time it must not exceed 75 W of power dissipation."

"The 75 W maximum can be drawn via the combination of +12V and +3.3V rails, but each rail draw is limited as defined in Table 4-1, and the sum of the draw on the two rails cannot exceed 75 W."

As I understand it, the larger numbers are the max an individual card in that slot can draw from all sources (e.g. 8-pin + 6-pin for a 300W card): 8-pin = 150W, + 75W 6-pin, + 75W board = 300W total.

So you can certainly have a 300W (max) card in a slot, but it should not draw more than 75W max from the board.
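The budget arithmetic as a sketch: the total is the sum of per-source limits, but each source also has to stay under its own limit, so a card can sit inside its total budget and still be out of spec on the slot. The dictionary keys and helper names are illustrative; the wattages are the ones given in the post, and `within_spec` only handles one connector of each type.

```python
from typing import Dict

# Per-source limits as stated in the post above (watts).
SOURCE_LIMIT_W = {"slot": 75, "6-pin": 75, "8-pin": 150}


def card_budget_w(*aux_connectors: str) -> int:
    """Total in-spec budget: the slot plus each auxiliary connector."""
    return SOURCE_LIMIT_W["slot"] + sum(SOURCE_LIMIT_W[c] for c in aux_connectors)


def within_spec(per_source_w: Dict[str, float]) -> bool:
    """True if every source (e.g. {"slot": 80, "6-pin": 70}) is at or under its own limit."""
    return all(watts <= SOURCE_LIMIT_W[name] for name, watts in per_source_w.items())


if __name__ == "__main__":
    print(card_budget_w("8-pin", "6-pin"))  # 300 W card
    print(card_budget_w("6-pin"))           # single 6-pin card: 150 W
    # 150 W total fits a single-6-pin budget, but the slot share is over 75 W.
    print(within_spec({"slot": 80, "6-pin": 70}))
```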


The problem with ratings is that one has to make assumptions, because there are several design options and implementations a manufacturer can choose, anywhere from 6A up to high-current solutions around 13A.

Of course. But stepping a bit outside of some specs doesn't automatically mean that there will be trouble. And the question was: will there be some problems?

We all know the 6-pin PCIe plugs are good for only 75W according to spec. 8-pin plugs are good for 150W, even if the number of 12V conductors is exactly the same. Forgetting the PCIe or ATX spec for a moment and looking just at the ratings of the actual plugs, one learns it shouldn't be much of a problem to supply (way) more than 200W through a 6-pin plug (if the power supply is built to deliver that much). So can we expect problems with burnt 6-pin plugs if a graphics card draws more than 75W through that plug? Very likely not. And the same may well be true for the delivery through the PCIe slot. I agree maybe one shouldn't try to build a 3- or 4-way CrossFire system (and overclock the GPU on top of it) and expect no problems, as it may strain the power delivery on the board. But as someone has explained already, it may even work without damaging the board (as the card starts to draw more through the 6-pin plugs). But this is also just conjecture. We just don't know at this point.


Same with cabling: the commonly supplied gauge is 18AWG in this context (especially at the more budget end), whereas for high current you will want 16AWG cable, and there is nothing stopping a manufacturer from reducing this to 20AWG, which is also specified/rated by Molex.

If you want to go with the standard rating spec, that would give you roughly 144W max from the mainboard, but this has to be shared with all devices using the PCI Express slots and some other devices; on top of this you need to consider the riser slot, which may have its own current limitations (I know some are only rated to 5A).

For auxiliary PEG 6-pin gives you 192W max, and 288W max for 8-pin.

But then you need to accommodate derating (meaning you do not want to be too near the max), the cabling needs to be 18AWG minimum for all 12V wires, and you need a reasonable PSU if overclocking or, importantly, going 2x480.
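The post doesn't show how the 144W, 192W and 288W maxima were derived, so treat this strictly as an assumption-laden sketch: the pin counts and per-pin currents below are my guesses that happen to reproduce those figures (two 12V pins at 6 A for the ATX 24-pin feed; 8 A per pin on the PEG plugs, with two 12V pins for the 6-pin and three for the 8-pin). They are not datasheet values, and the 80% derating factor is likewise hypothetical.

```python
def connector_rating_w(pins_12v: int, amps_per_pin: float, volts: float = 12.0,
                       derate: float = 1.0) -> float:
    """Rough connector capacity: 12V pins x per-pin current rating x voltage x derating."""
    if not 0.0 < derate <= 1.0:
        raise ValueError("derate must be in (0, 1]")
    return pins_12v * amps_per_pin * volts * derate


if __name__ == "__main__":
    # Assumed decompositions that reproduce the post's figures (not datasheet values):
    print("mainboard (2 x 6 A):", connector_rating_w(2, 6.0))  # 144 W
    print("6-pin (2 x 8 A):", connector_rating_w(2, 8.0))      # 192 W
    print("8-pin (3 x 8 A):", connector_rating_w(3, 8.0))      # 288 W
    # Same 8-pin with a hypothetical 80% derating for temperature and margin.
    print("8-pin derated:", connector_rating_w(3, 8.0, derate=0.8))
```

Keeping derating as an explicit multiplier makes it easy to see how quickly the headroom shrinks once temperature and margin are accounted for.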

That said, it is clear Tom's Hardware was also taking this into consideration, not just the PCIe spec, because they did not get overly concerned about the power distribution in the context of a single card without overclocking, even though, without OCing, they had measurements that went beyond not just the PCIe spec but also the ratings based on standard components (not HCS).

They measured peaks of 155W and commented that, thankfully, they were brief bursts.

Where they were not happy was when they overclocked and it went to an average of 100W with peaks of 200W; they were also dubious about a 2x480 setup that would again go above the ratings for standard components. Here I bet it needs a good motherboard/PSU that is not budget-mainstream.

However, as I mentioned, you do not want to be too near the max, as modern GPUs run at pretty high temperatures, which also influences derating for maximum current (not significantly, but it would reduce that max a bit).

While I doubt a motherboard/PSU will 'blow' (budget products may be more affected), the OC and 2x480 power demand/distribution could cause problems that would initially only be noticed if measured with a scope, but that carry long-term failure possibilities or unwanted behaviour from a power perspective.

But the caveat is whether this applies to just a few cards or is a more general power behaviour.

Cheers


Deleted member 2197

Guest

Either this is a misinterpretation, or it has literally NEVER come up before, in any discussion here on B3D that I've seen, or in any hardware website article or GPU review.

PCI-E only specifies a top ceiling of 75W at boot time, after which a series of negotiations between the card and the mobo determines how much power that specific slot will use (up to 300 watts, I think) for the rest of the session.

I'm dubious as to the veracity of this information, as it would mean a high-end GPU might not need ANY auxiliary power connectors, and that's - as I mentioned - something that has never been brought up for discussion that I have seen. Also, PCIe socket pins are incredibly thin gauge - I wouldn't want to pull 300W through them; I'd be wary of welding the pins to the card edge connector...
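To put the 300W concern in numbers: assuming five +12V contacts on an x16 slot (my understanding of the pinout; treat it as an assumption) and a perfectly even split, the 66W in-spec 12V draw works out to about 1.1A per pin, while 300W through the slot would be about 5A per pin, roughly 4.5 times as much. Real contacts won't share current perfectly evenly, so this is a best case. `SLOT_12V_PINS` and `amps_per_pin` are hypothetical names.

```python
SLOT_12V_PINS = 5  # assumption: +12V contacts on an x16 edge connector


def amps_per_pin(power_w: float, volts: float = 12.0, pins: int = SLOT_12V_PINS) -> float:
    """Per-contact current if the slot's 12V load is shared evenly across its pins."""
    return power_w / volts / pins


if __name__ == "__main__":
    for watts in (66.0, 82.0, 300.0):
        print(f"{watts:.0f} W -> {amps_per_pin(watts):.2f} A per pin")
```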
