After a skim of the paper, I would say it might not be as good as it seems, not to mention that it only greatly accelerates a few workloads. PIM is undoubtedly an interesting idea, but integrating stacked memory for general-purpose use is already a huge leap after all, which makes PIM just a point of specialization to the extreme.

I didn't add emphasis to a line where I noted that AMD may not choose to go down this path. I've gone into more detail elsewhere on that, and my opinion of their level of commitment would only add noise.

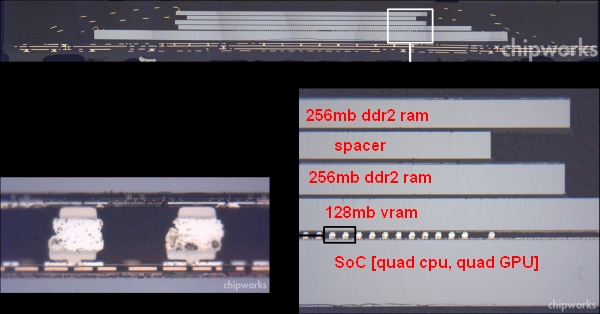

There are curious elements of the research, like the use of old process node designations and some stock media/old chip images that don't point to this being a huge production on the part of AMD.

There were a few benefits, aside from the higher bandwidth in the stack. There was a slide that mentioned taking advantage of limited interposer area (slide 8).

An HMC-derived system is going to have logic there anyway, and exascale demands power efficiency scaling beyond what AMD--or possibly anyone--has been doing recently.

The whole PIM paradigm the paper suggested is based on HSA and NUMA, which means the memory of the node (if we consider all PIMs tightly coupled to the host chip a node) is now fragmented into several big, non-interleaved chunks.
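To make the "non-interleaved chunks" point concrete, here is a rough sketch of how a physical address could map to a stack under the two schemes. The stack count, stack size, and interleave granularity are made-up numbers for illustration, not figures from the paper or slides.

```python
# Hypothetical parameters, chosen only for illustration.
STACK_BYTES = 4 * 2**30    # assume 4 GB per stack
NUM_STACKS = 4             # assume 4 stacks around the host
INTERLEAVE_BYTES = 256     # assumed interleave granularity

def stack_non_interleaved(addr):
    """NUMA-style: each stack owns one big contiguous slice."""
    return addr // STACK_BYTES

def stack_interleaved(addr):
    """Conventional: consecutive small blocks rotate across stacks."""
    return (addr // INTERLEAVE_BYTES) % NUM_STACKS

def stacks_touched(mapper, base, size):
    """Set of stacks hit by a buffer of `size` bytes starting at `base`."""
    return {mapper(a) for a in range(base, base + size, INTERLEAVE_BYTES)}

one_mib = 2**20
print(sorted(stacks_touched(stack_non_interleaved, 0, one_mib)))
print(sorted(stacks_touched(stack_interleaved, 0, one_mib)))
```

With the non-interleaved mapping a 1 MiB buffer lives entirely in one stack, so that stack's logic can work on it locally. With interleaving the same buffer is smeared across every stack, which is good for host bandwidth but leaves no single PIM with a complete view.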

It assumes an HMC-type arrangement, which already abstracts memory behind a packetized bus where each cube is its own node.

At the likely capacities and bandwidths involved, it may not be a significant problem for some possible AMD products, like game devices.

The host links are given an assumed 160GB/s. If we assume a 4GB stack in the same time frame (maybe), then something like a 60 FPS game would be incapable of accessing the whole DRAM within a single frame anyway. It would require a double-capacity stack to say the same for 30 FPS, which might be a stretch for a generation or two.
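The arithmetic behind that, as a quick sketch. The 160GB/s figure is the assumed host link rate from the proposal; the 4GB and 8GB stack sizes are my guesses, and this ignores protocol overhead and anything less than perfect link utilization.

```python
# Back-of-the-envelope: how much DRAM one host link can touch per frame.
HOST_LINK_GB_PER_S = 160.0   # assumed host link bandwidth from the proposal

def gb_per_frame(link_gb_per_s, fps):
    """Upper bound on data moved over the link during one frame."""
    return link_gb_per_s / fps

for fps in (60, 30):
    per_frame = gb_per_frame(HOST_LINK_GB_PER_S, fps)
    for stack_gb in (4.0, 8.0):   # guessed stack capacities
        covers = per_frame >= stack_gb
        print(f"{fps} FPS: {per_frame:.2f} GB/frame, "
              f"{stack_gb:.0f} GB stack fully reachable: {covers}")
```

At 60 FPS the link moves under 3GB per frame, short of a 4GB stack. At 30 FPS it moves a bit over 5GB, so a 4GB stack is reachable but an 8GB one is not.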

I'm not sure what the impact is of the proposal giving the on-stack bandwidth as only 4x higher.

Then the middleware built on top of the HSA runtime will be responsible for ping-ponging things and managing data placement among the PIMs.

It probably could, but probably should avoid doing that frequently.

These are multi-GB slices, which is a lot to move around and a lot of leeway for blocking or tiling.

GPU architectures are already tiled, and their tile sizes and even massive chunks of working set would find a decent fit. ROP-like functionality and various forms of atomic ops would be right at home.

The paper seemingly does not address the cache coherency issues, by the way. But as the whole thing is in a NUMA paradigm and the accesses to the memory are always destined to a single stack, perhaps a directory in the PIM would be needed... eh?

The likely way around this is the use of HSA, which has a more relaxed memory model.

It doesn't seem like the PIMs can access each other's nodes, so the stack logic has a different and local view of the stack's GBs of memory, making their share a form of mapped device memory.

Only the host would be able to see a much larger address space.

The host would need to be aware of how to interact with the memory allocated in this fashion.

There are already differing mapping behaviors and interleaving settings handled by the memory controller and APU logic for Garlic and standard memory, although this would be another layer of complication.

P.S. The inter-PIM interconnect wasn't mentioned in the written paper, it seems. Or did I miss something?

Slide 11 has it in its diagram. It's a very tiny mention, but consistent with an HMC-like design.

Is there more to this than reducing power by moving the compute/memory closer? The logic dies in the PIM stack seem suspiciously like garden-variety SoCs.

Bandwidth can be higher as well: direct stacking can have higher connection density, greater speeds, and lower signalling distance relative to an interposer.

The other is finding a way to get more out of limited interposer area.

It basically favors certain operation types that need a lot of memory movement, but can skimp on logic activity. Other workloads that don't have this requirement can use the host die, which is unencumbered by the thermal and power limitations of the stack.