Insomniac's method is bonkers good and it's being done on 36 CU RDNA.

Care to share with others, if it's not behind NDA?

Depends what you mean by equivalent.

I am very curious about this. DLSS requires machine learning.

FidelityFX is the equivalent of DLSS and supposedly coming to both platforms. But we know the PS5 doesn't have ML. So how is it supposed to have it?

On how they are doing it? Same as other devs, I presume. No NDA on the results. The results are in the DF review of Spider-Man on PS5. The 1080p RT mode video, I believe.

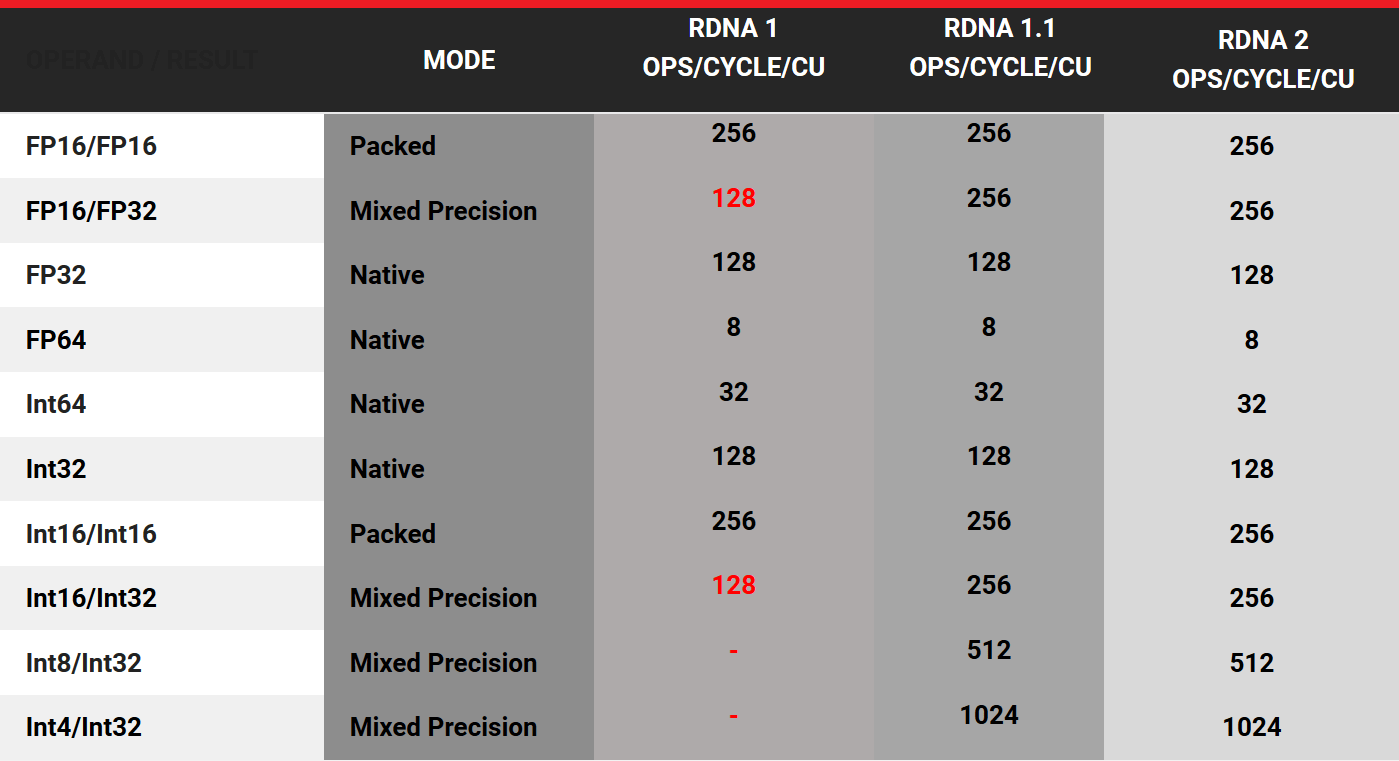

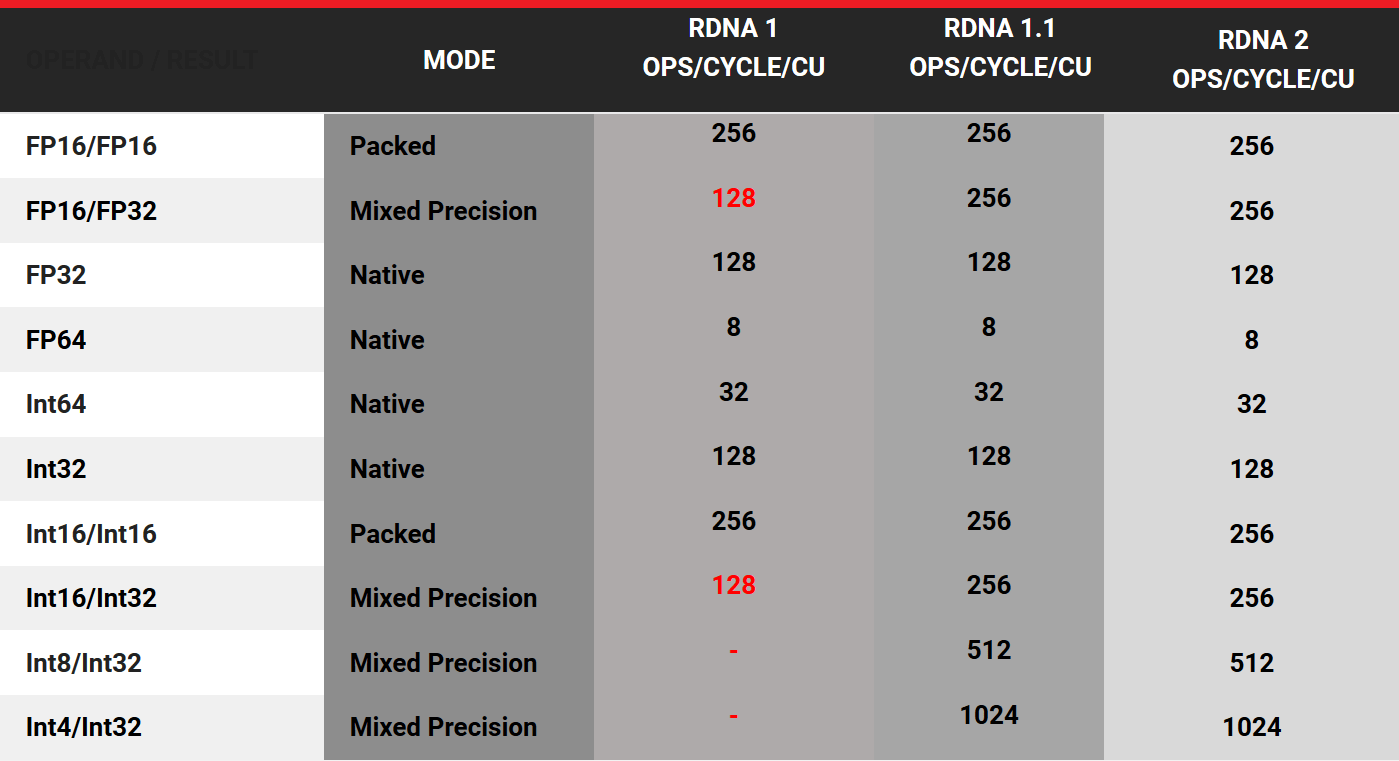

Wasn't PS5 said to be RDNA 1.1? If so, then it would appear to have INT8/4 support.

How about you send me some confirmation from Sony that the PS5 GPU has INT8 and INT4 precision then?

So what ML advantage does XSX have over PS5 that David Cage was talking about, if PS5 also has increased perf for INT8/4?

Wasn't PS5 said to be RDNA 1.1? If so, then it would appear to have INT8/4 support.

In any case, it is highly unlikely for PS5, as a next-generation console, not to support INT8/4. We also have to remember this is a custom chip, so of course many things would be different compared to RDNA1 on PC, like the geometry engine, ray tracing capabilities and machine learning support.

Oh well, thought you had some insider information regarding a tech that would match DLSS 2. Clearly the Spider-Man '4K' doesn't match what DLSS 2.0 is doing to achieve 4K regarding IQ and performance.

Very interesting, in which games weren't you happy with DLSS 2 results?

What kind of IQ are you referring to? One where there isn't any movement, like all the screenshots you see, or one where you see actual movement? I tell you what, it wasn't until I bought a 3080 and tried DLSS that I saw how flawed it was. The difference between what I saw online and what I saw in real life was that all the defects were camouflaged by YouTube compression and by most comparisons only using still images.

Let's wait and see, shall we.

Where did you find this 57?

Maybe because the XSX GPU is stronger overall.

Just speculation, but perhaps XSX also has some additional ML hardware that allows, for example, AutoHDR without any FPS loss. MS said XSX has 57 INT8 TOPS. However, when you calculate how much compute performance the shaders can output at INT8, that would be around 48 INT8 TOPS, so additional hardware on top of that would have to deliver the remaining 9 INT8 TOPS.

https://news.xbox.com/en-us/2020/03/16/xbox-series-x-glossary/

DirectML – Xbox Series X and Xbox Series S support Machine Learning for games with DirectML, a component of DirectX. DirectML leverages unprecedented hardware performance in a console, with Xbox Series X benefiting from over 24 TFLOPS of 16-bit float performance and over 97 TOPS (trillion operations per second) of 4-bit integer performance on Xbox Series X.
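For anyone wanting to check the shader-only numbers being thrown around, here is a rough Python sketch of the arithmetic. The 52 CU count and 1.825 GHz clock are not stated in this thread; they are assumed from the publicly listed Series X specs. The function name `peak_tops` and the "throughput doubles each time operand width halves" rule are likewise assumptions for illustration, not anything Microsoft or AMD published in this form.

```python
def peak_tops(compute_units, clock_ghz, bits, lanes_per_cu=64):
    """Peak shader throughput in tera-ops per second for a given operand width.

    Assumption: one fused multiply-add (counted as 2 ops) per lane per clock
    at 32 bits, with throughput doubling each time the operand width halves.
    """
    fp32_tops = compute_units * lanes_per_cu * 2 * clock_ghz / 1000.0
    return fp32_tops * (32 // bits)


# Assumed Series X figures (not taken from the thread itself).
XSX_CUS = 52
XSX_CLOCK_GHZ = 1.825

for bits in (32, 16, 8, 4):
    tops = peak_tops(XSX_CUS, XSX_CLOCK_GHZ, bits)
    print(f"{bits:>2}-bit: {tops:.1f} T ops/s")
```

Under those assumptions the 16-bit and 4-bit results land just above the 24 and 97 figures in the glossary quote, and the 8-bit result is the "around 48" mentioned above. It says nothing about whether any extra hardware exists beyond the shaders.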

I tell you what, it wasn't until I bought a 3080 and tried DLSS that I saw how flawed it was.

Nothing has been seen about FidelityFX

A glimpse into an all-Nvidia future, if people's intelligence keeps being evaporated by marketing and fanboy argumentation.

Yes, most definitely. That's the mentality I see here.

A bit like Apple then?

And that is the problem. From NV we can actually try it and see it for ourselves. DLSS, especially 2.0, does a really good job. It does have its flaws, but the advantages greatly outweigh them.

Seriously, most can't tell the difference between an image reconstructed from 1080p to 4K and a native one, but the performance difference sure can be noticed. Seeing how much DLSS has improved in such a short time (from DLSS 1 to 2.0), it sure is a tech that can't be unseen anymore. Same for ray tracing.

AMD now wants to chase both RT and reconstruction tech but is behind.

Guess where VRS comes from.

The patent for Foveated Rendering is in relation to VR, not the PS5.

There's no Sony patent showing unified L3 cache in the CPU.

There was a Sony patent that showed the console having unified L3 cache in the CPU.

You mean you couldn't find a single official statement from Nvidia proving your "We know DLSS uses INT4 and INT8" statement, which is why you linked to a page with zero mention of DLSS, upscaling or supersampling.

I'm not sure Nvidia goes around putting out official statements on everything they do, but there are plenty of articles on them using INT8 and INT4 and the benefits they get over higher precision.

https://developer.nvidia.com/blog/int4-for-ai-inference/

Yeah sure, just for "texture sampling" and "nothing to do with compute".

The RDNA white paper refers to INT8 texture sampling, which has nothing to do with INT8 for compute.

Some variants of the dual compute unit expose additional mixed-precision dot-product modes in the ALUs, primarily for accelerating machine learning inference. A mixed-precision FMA dot2 will compute two half-precision multiplications and then add the results to a single-precision accumulator. For even greater throughput, some ALUs will support 8-bit integer dot4 operations and 4-bit dot8 operations, all of which use 32-bit accumulators to avoid any overflows.
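To make the white paper wording concrete, here is a small Python emulation of what an 8-bit integer dot4 with a 32-bit accumulator computes. This is only an illustrative sketch: the helper names (`pack_i8x4`, `unpack_i8x4`, `dot4_i8`) are hypothetical, signed lanes and wrap-around on overflow are assumed, and none of this is taken from AMD's ISA documentation.

```python
def pack_i8x4(lanes):
    """Pack four signed 8-bit values into one 32-bit word, lowest byte first."""
    word = 0
    for i, value in enumerate(lanes):
        word |= (value & 0xFF) << (8 * i)
    return word


def unpack_i8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    lanes = []
    for shift in (0, 8, 16, 24):
        byte = (word >> shift) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dot4_i8(a_word, b_word, acc):
    """Multiply four int8 pairs, sum them, and add to a signed 32-bit accumulator."""
    products = sum(x * y for x, y in zip(unpack_i8x4(a_word), unpack_i8x4(b_word)))
    total = (acc + products) & 0xFFFFFFFF  # assumed wrap-around, not saturation
    return total - (1 << 32) if total >= (1 << 31) else total


a = pack_i8x4([1, -2, 3, -4])
b = pack_i8x4([5, 6, -7, 8])
print(dot4_i8(a, b, 100))
```

The largest possible sum of four int8 products is 4 × 128 × 128 = 65536, which is why a 32-bit accumulator can absorb many of these operations before overflow becomes a concern. One packed 32-bit register carrying four operands is also where the "4x the 32-bit rate" reasoning for INT8 comes from.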

Changing goalposts from "Microsoft changed the GPU to get INT8/4 on RDNA2" to "it was on RDNA1 but not all of them".

Also, not all RDNA cards had INT8 and INT4 capabilities; it was only on some cards. It was not a stock feature found in all of them. That's the same situation with RDNA 2.

Here it is, the trolling and flamebaiting.

which sent all the fanboys into a rage

(...)

The constant "push for victory" as you put it is to correct misinformation that was put out there.

(...)

You really have to be willfully ignorant at this point to continue thinking that the PS 5 has VRS.

Custom instructions for ML inferencing.

So what ML advantage does XSX have over PS5 that David Cage was talking about, if PS5 also has increased perf for INT8/4?

Did Microsoft say anything about these custom instructions?

Custom instructions for ML inferencing.