TopSpoiler

Speaking of which, I want to discuss the differences between the H100 and MI300X in terms of die size and cost.

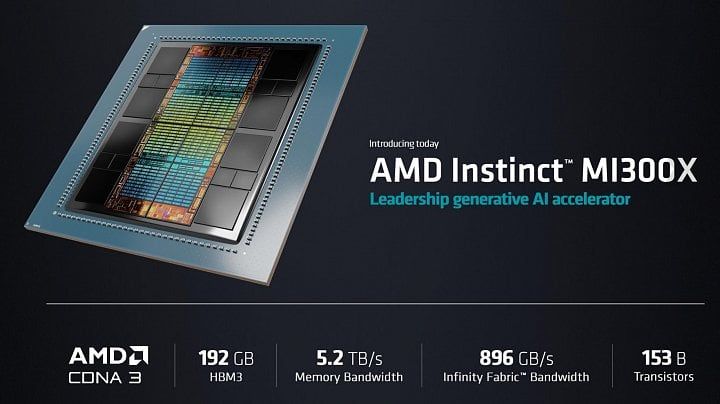

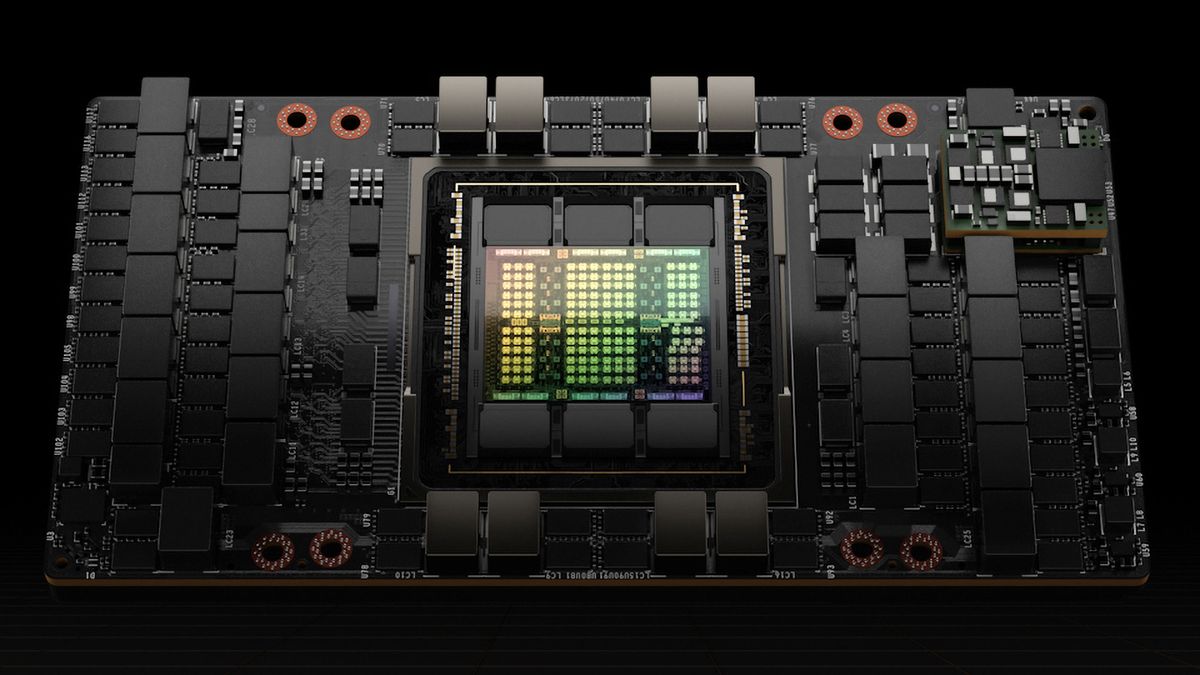

We know MI300X uses about 920mm² of TSMC 5nm silicon across 8 compute chiplets, stacked on top of 1460mm² of TSMC 6nm silicon across 4 IO chiplets. Combined, that is a total silicon area of 2380mm², compared with the H100's single 814mm² die.
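A quick sanity check of the area arithmetic, using only the figures quoted above. The per-chiplet averages assume all chiplets of a given type are the same size, which is a simplifying assumption rather than something stated in the post.

```python
# Die areas as quoted in the post (mm²)
MI300X_COMPUTE_TOTAL_MM2 = 920   # 8 x TSMC 5nm compute chiplets
MI300X_IO_TOTAL_MM2 = 1460       # 4 x TSMC 6nm IO chiplets
H100_DIE_MM2 = 814               # single monolithic die

mi300x_total = MI300X_COMPUTE_TOTAL_MM2 + MI300X_IO_TOTAL_MM2
ratio_total = mi300x_total / H100_DIE_MM2
# Compare only the leading-edge (5nm) silicon against the H100 die
ratio_5nm_only = MI300X_COMPUTE_TOTAL_MM2 / H100_DIE_MM2

# Average chiplet sizes, assuming equal-sized chiplets of each type
per_compute = MI300X_COMPUTE_TOTAL_MM2 / 8
per_io = MI300X_IO_TOTAL_MM2 / 4

print(f"MI300X total silicon: {mi300x_total} mm²")
print(f"Total area vs H100: {ratio_total:.2f}x")
print(f"5nm area vs H100: {ratio_5nm_only:.2f}x")
print(f"Avg compute chiplet: {per_compute:.0f} mm², avg IO chiplet: {per_io:.0f} mm²")
```

The split shows that most of the extra area is in the 6nm IO dies; the 5nm compute silicon alone is only about 1.13x the H100 die.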

https://www.semianalysis.com/p/amd-mi300-taming-the-hype-ai-performance

NVIDIA's silicon footprint is much smaller, but its yield is worse, because a single large die is more likely to contain a defect than a small chiplet. However, the H100 is not a full-die product: about 20% of it is disabled to improve yields. So the difference in effective yield between the two may not be that large.
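One way to see why disabling part of the die helps is the simple Poisson defect model, where the number of defects on a die is Poisson-distributed with mean (area x defect density). The sketch below is illustrative only: the defect density `D0` is a hypothetical placeholder, and treating "up to k defects" as usable optimistically assumes every defect lands in a unit that can be fused off.

```python
import math

def prob_at_most_k_defects(die_area_mm2: float, d0_per_cm2: float, k: int) -> float:
    """Poisson yield model: defects per die ~ Poisson(lam = area * D0).

    k = 0 gives the classic 'perfect die' yield exp(-A * D0).
    k > 0 approximates harvesting, assuming (optimistically) that each
    defect hits a unit that can be disabled without scrapping the die.
    """
    lam = (die_area_mm2 / 100.0) * d0_per_cm2  # convert mm² to cm²
    return sum(math.exp(-lam) * lam**i / math.factorial(i) for i in range(k + 1))

D0 = 0.1  # hypothetical defect density, defects per cm²

dies = [
    ("H100 die", 814),
    ("MI300X compute chiplet (avg)", 115),
    ("MI300X IO chiplet (avg)", 365),
]
for name, area in dies:
    print(f"{name:30s} defect-free yield: {prob_at_most_k_defects(area, D0, 0):.2f}")

# Same H100 die, but tolerating up to 2 defects via disabled units
print(f"H100 tolerating up to 2 defects: {prob_at_most_k_defects(814, D0, 2):.2f}")
```

Under these placeholder numbers the small chiplets yield much better than a perfect 814mm² die, but once the large die can tolerate a couple of defects the gap narrows or reverses, which is the effect described above.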

MI300X costs significantly more to make due to its larger overall silicon area, more complex packaging, and the need for the additional 6nm chiplets.
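A rough cost-per-good-die sketch can separate the raw silicon part of this argument from packaging. It uses the common gross-dies-per-wafer approximation on a 300mm wafer and the Poisson yield model. All cost inputs are hypothetical placeholders in normalized "wafer units", not real TSMC prices; the same placeholder leading-edge wafer cost is applied to the H100 die and the 5nm chiplets, and H100 harvesting, packaging, bonding, and test are not modeled.

```python
import math

WAFER_DIAMETER_MM = 300.0

def dies_per_wafer(die_area_mm2: float, diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Common gross-die estimate: wafer area / die area minus an edge-loss term."""
    radius = diameter_mm / 2
    gross = (math.pi * radius**2 / die_area_mm2
             - math.pi * diameter_mm / math.sqrt(2 * die_area_mm2))
    return math.floor(gross)

def poisson_yield(die_area_mm2: float, d0_per_cm2: float) -> float:
    """Fraction of defect-free dies; area converted from mm² to cm²."""
    return math.exp(-(die_area_mm2 / 100.0) * d0_per_cm2)

def cost_per_good_die(die_area_mm2: float, wafer_cost: float, d0_per_cm2: float) -> float:
    """Wafer cost spread over the expected number of good dies on that wafer."""
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, d0_per_cm2)
    return wafer_cost / good_dies

# Hypothetical placeholder inputs (normalized, not real prices)
D0 = 0.1                  # defects per cm², shared by all dies
LEADING_EDGE_WAFER = 1.0  # normalized 5nm-class wafer cost
N6_WAFER = 0.6            # hypothetical 6nm wafer cost relative to 5nm-class

h100 = cost_per_good_die(814, LEADING_EDGE_WAFER, D0)
mi300x_silicon = (8 * cost_per_good_die(115, LEADING_EDGE_WAFER, D0)
                  + 4 * cost_per_good_die(365, N6_WAFER, D0))

print(f"H100 die (no harvesting):       {h100:.4f} wafer-units")
print(f"MI300X chiplets (no packaging): {mi300x_silicon:.4f} wafer-units")
```

The result is very sensitive to `D0` and the wafer-cost ratio, and it covers only bare silicon; packaging, stacking, and the extra assembly steps sit on top of it.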

Any thoughts?

This may or may not be a hint, but MI300's yield seems somewhat low due to its complex packaging and the early stage of its ramp.
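Packaging yield compounds because every placement step has to succeed, and a failed step can scrap chiplets that were already known good. A minimal sketch, assuming independent steps and treating each of the 12 chiplets mentioned in the post (8 compute on 4 IO) as one placement; the per-step yields are hypothetical.

```python
def package_yield(per_step_yield: float, steps: int) -> float:
    """Probability that all placement/bonding steps succeed, assuming independence."""
    return per_step_yield ** steps

# Hypothetical simplification: one placement step per chiplet (8 compute + 4 IO)
PLACEMENTS = 8 + 4

for p in (0.999, 0.995, 0.99):
    print(f"per-step yield {p:.3f} -> package yield {package_yield(p, PLACEMENTS):.3f}")
```

Even a 99% per-step success rate loses roughly one package in nine across 12 steps, which is why assembly maturity matters so much early in a ramp.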

Advanced Micro Devices Q1 2024 Earnings Call Transcript (MarketBeat)

Jean Hu

Executive Vice President, Chief Financial Officer and Treasurer at Advanced Micro Devices

Yes. Thank you, Joe. Our team has done an incredible job to ramp MI300. As you probably know, it's very complex product and we are still at the first year of the ramp, both from EO [Phonetic], the testing time and the process improvement. Those things are still ongoing. We do think, over time, the gross margin should be accretive to corporate average.

Jean Hu

Yes. I think you're right. It's the GPU gross margin right now is below the data center gross margin level. I think there are two reasons -- actually, the major reason is we actually increased the investment quite significantly to, as Lisa mentioned, to expand and accelerating our road map in the AI side. That's one of the major drivers for the operating income coming down slightly. On the gross margin side, going back to your question, we said in the past and we continue to believe the case is, data center GPU gross margin over time will be accretive to corporate average, but it will take a while to get to the server level for gross margin.