DegustatoR


> Is there a market for that? Not really.

Well, doh.




> Yeah, vanilla Raphael has a 230 W PPT or thereabouts (it kinda doesn't do much there vs. a 160 W PPT, but I digress).

I thought we were referring to just CPUs as opposed to APUs.

> Sure, but photorealism is still a long way off. The immediate problem is price/performance stagnation in all market segments, so even incremental IQ gains are increasingly expensive.

Stagnation in HW is no excuse for bad performance. Instead of path tracing and Lumen, they need to develop software that runs well on the affordable HW of the time.

> Gaming at 1080p medium settings is getting more expensive, not less. I don't see how APUs solve that problem.

HW is not meant to solve, or even compensate for, failures in SW development. Devs will learn that the hard way sooner or later.

> But an increasing majority will say goodbye to chasing photorealism at premium costs. They just want to play some games for fun.

That's not even remotely historically accurate. PC gaming has always thrived on state-of-the-art hardware; people quickly forget the days of the 90s and 2000s, when APIs, video accelerators and GPUs were changing at a breakneck pace and you had to change hardware every two years just to keep up. Now you want potato hardware to run the latest games and last you a decade. That's just not possible, and never has been.

> If Switch 2 looks better than Switch 1, it makes them just as satisfied as comparing PS5 to PS4.

Even consoles don't agree with your assessment: PS4 alone wasn't enough, so the PS4 Pro and Xbox One X were created to push things further, and now we are getting a PS5 Pro. People want more, devs want more; potato hardware is no longer enough, even in the console space.

> HW is not meant to solve, or even compensate for, failures in SW development. Devs will learn that the hard way sooner or later.

If devs learned to make Starfield run on a 1060 at 4K max settings through breakthroughs in software, why would you think they would settle for that? They have 4090s to play around with; they will just jack up game complexity to make use of all that power. We would be talking about gaming at 8K and 16K, or 4K120 path-traced games. You would be back at square one, with your potato hardware becoming irrelevant image-quality-wise.
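To put the 4K120, 8K and 16K targets mentioned above into numbers, here is a rough Python sketch of raw output pixel throughput. It only counts output pixels per second and ignores shading complexity, samples per pixel and upscaling, so it is a scale comparison under those simplifying assumptions, not a performance model. The 16K resolution is assumed to be 15360x8640.

```python
# Rough pixel-throughput comparison for the display targets mentioned above.
# Only output pixels per second are counted; real rendering cost also depends
# on shading complexity, samples per pixel, upscaling, etc.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
    "16K": (15360, 8640),  # assumed definition of "16K"
}


def pixel_rate(name: str, fps: int) -> int:
    """Output pixels per second for a named resolution at a given frame rate."""
    width, height = RESOLUTIONS[name]
    return width * height * fps


if __name__ == "__main__":
    base = pixel_rate("4K", 60)
    for name, fps in [("4K", 60), ("4K", 120), ("8K", 60), ("16K", 60)]:
        rate = pixel_rate(name, fps)
        print(f"{name}@{fps}: {rate / 1e6:,.0f} Mpix/s ({rate / base:.0f}x 4K60)")
```

At the same frame rate, 8K is 4x and 16K is 16x the pixel count of 4K, before any extra per-pixel work.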

> The problem APUs can solve is establishing an affordable mainstream, that's all. And devs will target this mainstream as usual.

I think you are forgetting a serious fact here: PC is scalable. No one forces anyone to play at Ultra settings; if you can't afford it, play at medium settings, and if you can't afford that, buy used hardware and play at medium settings. Your PC experience is highly customizable to your budget. APUs will solve what exactly? Cheaper hardware? We already have that. Low-resolution/low-settings gameplay? We already have that!

> Bro wtf is Strix-Halo then?

I will believe that when I see it. But even if it gets released with its rumored specs, you are looking at a sub-3060-class GPU arriving in late 2023.

> PC gaming has always thrived on state-of-the-art hardware; people quickly forget the days of the 90s and 2000s, when APIs, video accelerators and GPUs were changing at a breakneck pace

You talk about historical accuracy, but then your arguments are personal conviction, ideals, and perception.

> now you want potato hardware to run the latest games and last you a decade.

Basically yes, although I hope we still see enough HW progress to justify an upgrade cycle of, say, 5 years.

> PS4 alone wasn't enough

It was never maxed out at all.

> potato hardware is no longer enough, even in console space.

Game consoles are not potato HW. They are optimized for the gaming workload. And they at least illustrate what a PC APU can achieve, if it overcomes the BW limit imposed by dated platform standards.
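The bandwidth gap mentioned above can be sketched with the usual spec-sheet formula: peak bandwidth = bus width in bytes × transfer rate. The two configurations below are assumptions chosen for illustration: a typical desktop APU socket with dual-channel DDR5-6000 (128-bit total), and the PS5's 256-bit GDDR6 at 14 Gbps per pin. These are theoretical peaks; sustained bandwidth is lower.

```python
# Peak theoretical memory bandwidth = (bus width in bits / 8) * transfer rate.
# Spec-sheet figures only; sustained bandwidth in practice is lower.


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Peak bandwidth in GB/s (10^9 bytes/s) from bus width and MT/s."""
    bytes_per_transfer = bus_width_bits / 8
    # bytes/transfer * million transfers/s = MB/s; divide by 1000 for GB/s.
    return bytes_per_transfer * data_rate_mtps / 1000


CONFIGS = {
    # Assumed desktop APU platform: dual-channel DDR5-6000 (2 x 64-bit).
    "Desktop dual-channel DDR5-6000": (128, 6000),
    # PS5: 256-bit GDDR6 at 14 Gbps per pin (14000 MT/s).
    "PS5 GDDR6 (256-bit, 14 Gbps)": (256, 14000),
}

if __name__ == "__main__":
    for name, (bus, rate) in CONFIGS.items():
        print(f"{name}: {peak_bandwidth_gbs(bus, rate):.0f} GB/s")
```

Under these assumptions the console has roughly 4.7x the peak bandwidth of the desktop socket, which is the kind of limit a PC APU would have to get around.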

> You would be back at square 1, your potato hardware becoming irrelevant image quality wise.

This entirely depends on the person who looks at the image.

> APUs will solve what exactly? Cheaper hardware? We already have that. Low-resolution/low-settings gameplay? We already have that!

But I don't want used, power-hungry, bulky crap. So no, we don't have it. We are far from it, if we look at the traditional PC.

> 4060 is $300. They also make a bunch of older stuff like 1050 and 3050.

Doesn't change much: 1 mid-range vs 6 high-end. Even if we account for the 2022 restart of Turing production, the 1630, 3050 and 3060 fit your criterion of a sub-$400 MSRP, while the 3060 Ti and 3080 are above it, so that's 4 mid-range vs 8 high-end models.

> AMD's h/w has been trailing Nv's in perf/transistor since Kepler/Maxwell, so they had to compensate by selling bigger dies at similar price points, not smaller.

I don't see that. AMD used similar or smaller die sizes across the entire range, and similar or smaller manufacturing nodes as well.

> It is also a bit moot whether some dies being smaller means lower production costs in comparison, as they tend to use different production processes.

GCN/Fiji and Kepler/Maxwell were all produced on TSMC's 28 nm process node.

GPUs like GM200 certainly weren't made for DC or AI.

Also it's not like Nv is making only 600 mm^2 GPUs right now.

It's been going up much faster for high end because high end doesn't have an upper pricing ceiling.

Meaning that you can make a fully enabled 600 mm^2 GPU on N4 and sell it to gamers for some $2500.
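Why a 600 mm^2 die is so expensive can be sketched with the common first-order dies-per-wafer approximation and a simple Poisson yield model. This is a textbook approximation, not any foundry's actual cost model, and the wafer cost and defect density used below are hypothetical placeholders, not real N4 figures.

```python
import math


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """First-order gross dies per wafer, with a correction for edge loss."""
    r = wafer_diameter_mm / 2
    gross = math.pi * r**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under a simple Poisson defect model."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def cost_per_good_die(die_area_mm2: float, wafer_cost: float, defects_per_cm2: float) -> float:
    """Wafer cost spread over the expected number of defect-free dies."""
    good = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)
    return wafer_cost / good


if __name__ == "__main__":
    # Hypothetical inputs: NOT real N4 wafer prices or defect densities.
    wafer_cost = 10_000.0
    d0 = 0.1  # defects per cm^2
    for area in (150, 300, 600):
        print(
            f"{area} mm^2: {dies_per_wafer(area)} dies/wafer, "
            f"yield {poisson_yield(area, d0):.0%}, "
            f"cost/good die {cost_per_good_die(area, wafer_cost, d0):,.0f}"
        )
```

With these placeholder inputs, doubling the die area from 300 to 600 mm^2 roughly triples the cost per good die, because fewer dies fit on the wafer and a larger share of them catch a defect. Salvaging partially defective dies as cut-down SKUs, which this model ignores, softens that in practice.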

> people want fast GPUs to be cheaper because... they want it. That's the extent of reasoning.

They want it because that's how it used to be during the entire 27 years of the 3D graphics revolution.

...Well, yeah, nobody says otherwise.

Stagnation in HW is no excuse for bad performance. Instead of path tracing and Lumen, they need to develop software that runs well on the affordable HW of the time.

This does not mean enforced stagnation on IQ at all.

The problem APUs can solve is establishing an affordable mainstream, that's all. And devs will target this mainstream as usual.

> I know a PS4 can do visuals very close to Portal RTX, because I did this 10 years ago already.

Nothing comes close to Portal RTX. Don't know why people can't accept that path tracing is the solution to every problem and not a reason to create new problems.

> But I don't want used, power-hungry, bulky crap. So no, we don't have it. We are far from it, if we look at the traditional PC.

Now that's just pedantic. You can easily have efficient CPUs and GPUs on PC right now if you want them. Build a 7800X3D + 4060, play at 1080p with DLSS3, and you will have higher performance in path tracing/ray tracing/rasterization at a lower power draw than any other system on earth. DLSS3 already decreases power consumption, by the way, and Ada is more power-efficient than anything else.

> We can no longer afford fast-paced progress, period. Groundbreaking progress in chip tech would be needed, which is not in sight.

That's irrelevant. Consumers were willing to pay frequently back then; today it's slowed down, but it's the same principle: PC people want good hardware.

> PS4 was never maxed out at all.

Such is the case for every piece of hardware.

> In other words: we already have proof from consoles (or even better, Apple) that an APU is actually enough.

Base consoles are not enough; that's why you have higher-tier consoles, because APUs get outdated fast. They are fixed and can't be upgraded to last longer.

> The PC platform needs refinement. It needs to split up into performance segments. And we need social awareness about those segments, so people can pick their camp.

That split is already there, in hardware, in settings and everything.

> Rumor: Kepler is claiming RDNA4 is not going to have any high end options, just like RDNA1.

AMD is slowly becoming irrelevant

> Nothing comes close to Portal RTX. Don't know why people can't accept that path tracing is the solution to every problem and not a reason to create new problems.

PT is not efficient because it lacks a radiance cache, so you need to recalculate all bounces every frame.
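A minimal sketch of the idea behind a radiance cache: keep a running average of radiance per world-space grid cell, so later frames can read a cached value instead of tracing the remaining bounces again. This is a toy hash-grid cache plus a simple ray-segment cost model; the cell size, blend factor and the assumption that a path terminates into a warm cache after its first bounce are illustrative choices, not how Portal RTX or any particular engine implements it.

```python
from dataclasses import dataclass, field


@dataclass
class HashGridRadianceCache:
    """Toy world-space radiance cache keyed by a quantized position."""

    cell_size: float = 0.5
    blend: float = 0.1  # weight of each new sample in the running average
    cells: dict = field(default_factory=dict)

    def _key(self, pos):
        # Floor-divide each coordinate so nearby points share one cell.
        return tuple(int(c // self.cell_size) for c in pos)

    def lookup(self, pos):
        """Cached radiance for the cell containing pos, or None on a miss."""
        return self.cells.get(self._key(pos))

    def update(self, pos, radiance):
        """Blend a freshly traced radiance sample into the cell's average."""
        key = self._key(pos)
        old = self.cells.get(key)
        if old is None:
            self.cells[key] = radiance
        else:
            self.cells[key] = (1 - self.blend) * old + self.blend * radiance


def ray_segments_per_frame(pixels: int, spp: int, bounces: int, cached: bool) -> int:
    """Ray segments traced per frame in a simple cost model.

    Each path traces one primary segment plus its bounces. Without a cache,
    all bounces are retraced every frame. With a warm cache we assume the path
    traces only its first bounce and reads the cache for the rest.
    """
    per_path = (1 + min(bounces, 1)) if cached else (1 + bounces)
    return pixels * spp * per_path
```

At 1080p with 1 sample per pixel and 4 bounces, this model gives 10,368,000 segments per frame without the cache and 4,147,200 with it. Real caches also spend some rays each frame keeping themselves up to date, which the model ignores.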

> Build a 7800X3D + 4060 and play at 1080p DLSS3 and you will have the highest performance in path tracing/ray tracing/rasterization at minimal power draw than any system on earth.

That's what should be said, yes. But it's not. What they tell us instead is:

> That's irrelevant. Consumers were willing to pay frequently back then; today it's slowed down, but it's the same principle: PC people want good hardware.

No, because the end is nigh. Upgrading was also an investment in a dream of awesome future games in our imagination. That was key to the motivation to invest.

> Apple silicon is expensive to make and wouldn't fit your cost model; they are built on the latest nodes, with billions and billions of transistors (67 billion for M2 Max) and a huge bus (512-bit). So no, that doesn't apply either.

It's more expensive than an x86 CPU, but less expensive than CPU+GPU+RAM. Costs for GPUs really went through the roof. It's no longer some cool gadget people want to have; it's a requirement they hate to have.

> That split is already there, in hardware, in settings and everything.

Not everything. There is no separation between high-end tech enthusiasts and others who don't care about tech but just want to play games at a smooth enough framerate.

> I just don't see how APUs fit into this

It fits well into this once APUs reach console-level performance, to put it practically.

> I will believe that when I see it

It has designs affixed for it.

> you are looking at a sub 3060 class GPU arriving in late 2023.

Ughhh

Rumor: Kepler is claiming RDNA4 is not going to have any high end options, just like RDNA1.

https://videocardz.com/newz/amd-rumored-to-be-skipping-high-end-radeon-rx-8000-rdna4-gpu-series

Was RDNA4 originally supposed to consist of four chip configurations? I still remember the original rumours about RDNA3 being a chiplet architecture, with the high-end solution made of two GCDs, which still meant there were three different die configurations (N31, N32, N33).

With RDNA4 I would expect the same number of die configurations (N43, N42, and N41 with two GCDs).

This makes no sense to me given their expected move to multi-chip modules for GPUs, unless they canceled those plans.

Seems they did: https://forum.beyond3d.com/threads/amd-execution-thread-2023.63186/page-9#post-2308957

To sum up RTG's strategy:

* they don't want to build a huge-die GPU like Fiji

* they don't want their GPUs to become too complex (more GCD chiplets, interposers and expensive interconnects)

* they don't need to compete directly with every Nvidia GPU

* they can build a faster GPU, but they don't want to

* they can fix RDNA3, but they won't bother

* they only target a maximum price of $999 as acceptable to gamers

* they don't care about dGPU market share because they have consoles

> Maybe RDNA4 won't have high end because it will do just that: put high-end performance into the mid-range segment?

If Navi 43 remains at 48 CUs (3072 ALUs), then it should be around the RX 7900. Navi 23 / Navi 33 only have 32 CUs (2048 ALUs), though, and a 32-CU part would be similar to the RX 7800. Unless they also doubled the FP32 blocks again with 4-issue ALUs; however, that's not the same as doubling the number of CUs (WGPs).
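The CU-to-ALU figures above follow from RDNA's 64 FP32 ALUs per CU. The sketch below also converts them to peak FP32 throughput, counting an FMA as 2 FLOPs and RDNA3's dual-issue as a further 2x. The clock is an input you supply, and `issue_width=4` only models the hypothetical "4-issue" case mentioned above, not a known RDNA4 spec.

```python
ALUS_PER_CU = 64  # FP32 lanes per RDNA compute unit


def alus(cus: int) -> int:
    """FP32 ALU count for a given number of CUs."""
    return cus * ALUS_PER_CU


def peak_fp32_tflops(cus: int, clock_ghz: float, issue_width: int = 2) -> float:
    """Peak FP32 TFLOPS: ALUs x 2 FLOPs per FMA x issue width x clock (GHz).

    issue_width=2 models RDNA3 dual-issue; 4 is the hypothetical 4-issue case.
    """
    return alus(cus) * 2 * issue_width * clock_ghz / 1000


if __name__ == "__main__":
    clock = 2.655  # illustrative boost clock in GHz
    for cus, width in [(32, 2), (48, 2), (32, 4)]:
        print(
            f"{cus} CUs ({alus(cus)} ALUs), {width}-issue: "
            f"{peak_fp32_tflops(cus, clock, width):.1f} TFLOPS"
        )
```

On paper, going 4-issue doubles peak FLOPS just like doubling the CU count would, but it does not add the other per-CU resources (caches, texture units, ray accelerators), which is why the two are not equivalent.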

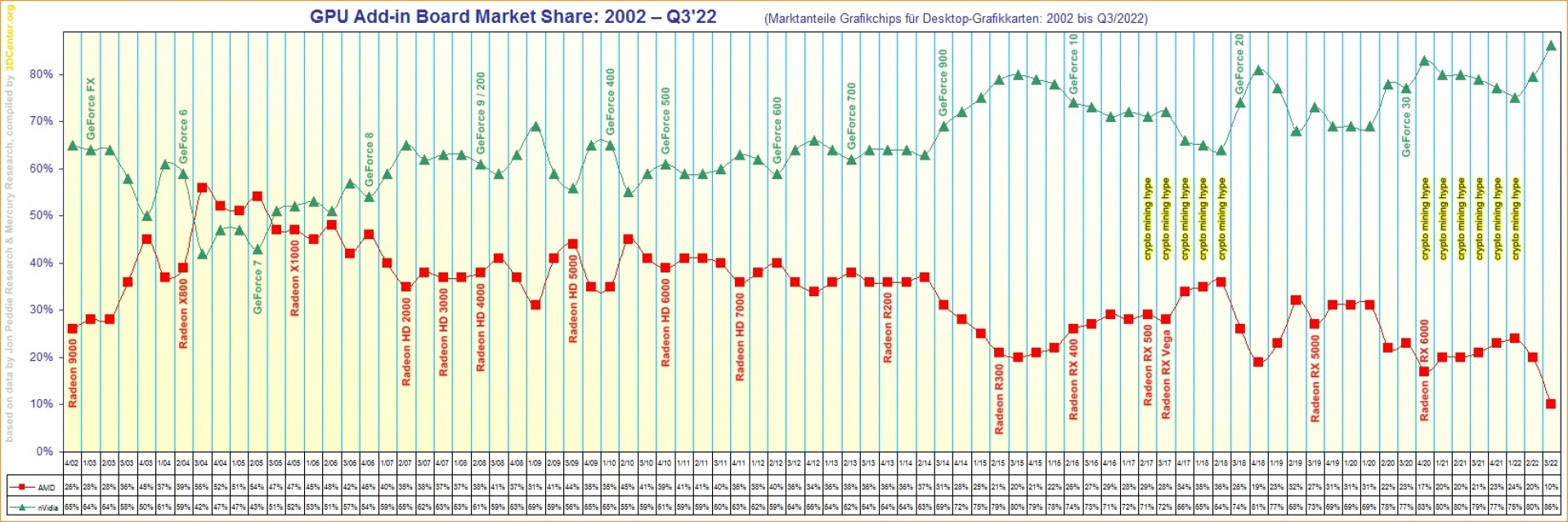

> AMD is slowly becoming irrelevant

Slowly? They went from 50% to 10% market share in just 17 years...

> Until some specific details of what exactly could go wrong are leaked, I find it hard to believe that AMD would just skip Navi 41/42 altogether and effectively give up the mid-range and high-end market for the entirety of 2024-2027.

It's possible that they weren't able to beat their own previous high-end parts, at least not without blowing up their costs. This is already somewhat true for the upcoming N32, and the perf/price stagnation of the lower pricing tiers was always bound to spread into the higher ones due to production realities.

> If true, they would have to start from the ground up again with maybe 5% market share.

AMD's high end is so small in market share that them leaving it won't have much effect on their overall market share.

> That's what should be said, yes. But it's not.

If you want efficiency, you should see this: a 4060 desktop GPU beating a mobile RDNA3 GPU in efficiency by a factor of two.

> * You should not buy any 8 GB GPU at all anymore, because that's no longer enough for gaming.

And you think APUs will give you any more than 8 GB of VRAM?

> * CP2077 lists minimal specs for PT above 4060. (Although it runs quite fine with it, according to benchmarks.)

Why are you even considering PT? APUs won't get you anywhere near that level of RT performance, especially AMD APUs. You are locked out of the entire ray tracing market, and Lumen is not for you either, whether in software or hardware.

> It's more expensive than an x86 CPU, but less expensive than CPU+GPU+RAM.

I don't believe it is. It's probably more expensive than all of those combined.

> AMD's high end is so small in market share that them leaving it won't have much effect on their overall market share.

I did mean total dGPU market share, not just high-end.

> It's possible that they weren't able to beat their own previous high-end parts, at least not without blowing up their costs.

That's what these leaks are about. The question is why they wouldn't reach their performance/watt targets.