

Current Generation Hardware Speculation with a Technical Spin [post GDC 2020] [XBSX, PS5]

- Thread starter Proelite


- Status

- Not open for further replies.


Deleted member 11852

Guest

The PS4 advantage was visible from day one and it stayed for the whole generation. Apart from a few outliers running at 720p on Xbox One and 1080p on PS4, most of the time, before the consoles began to show their limits, it was 900p against 1080p at 30 fps or 720p against 900p at 60 fps.

This is kind of my point. Last gen - with TVs getting larger and larger - some people (but not me) could notice differences between 1080p and 900p, or 900p and 720p or whatever. I think this gen, for most folks, we're well into the realm of diminishing returns, where you could throw CUs at marginally higher resolutions that are imperceptible, but you might want to use that headroom for something more interesting.

What is that? No idea; the thing I often gravitate to is the technically impressive but oft overlooked MLAA in The Saboteur on PS3. Mark Cerny talked about the extra CUs in PS4 being there for the future, but really they had to make up for the poor CPU in many cases. This gen things are different: we don't need a CPU making up for the GPU (PS3) or a GPU making up for the CPU (PS4). We've got two pretty well balanced systems, meaning excess CUs really can be used for whatever the developer can imagine, and Series X (again... discounting secret-Sony-sauce) has more overhead for future cool stuff(tm), but this gen it may actually get used for something cool and not deployed to overcome some other shortcoming.


AbsoluteBeginner

Regular

I doubt SONY has any "secret sauce". Again, the anomaly is the XSX. XSX is performing worse than the 5700 XT, for goodness' sake. That can't be right or healthy. Even without the bells and whistles of RDNA 2.0, it should at least be on par with 5700 XT, but it isn't. I am sure MS has been aware of this for a while now. We just need to wait and see if MS will catch up to 5700 XT, let alone PS5, and if yes, whether it will also surpass it and when. I personally would expect that if XSX is not performing measurably better in late 2021 than it is now, then it won't perform substantially better in 2023 and so on either. I doubt very much this is a PS3 situation either... that was very different IMO.

Yes obviously, off the top of my head PS3 was vertex limited; it was crawling, so much so that you had to dedicate quite a chunk of SPEs to culling triangles before sending them to RSX. Then fixed vertex/pixel shaders and problems with alphas due to eDRAM etc.

That, plus all the issues with tools which were light years behind MS.

Series X (again... discounting secret-Sony-sauce) has more overhead for future cool stuff(tm) but this gen, it may actually get used for something cool and not deployed to overcome some other shortcoming.

That shortcoming is the Series S.

The meltdowns and goalpost shifting that's happening across certain gaming boards is ridiculous. Both systems are performing quite nicely and will definitely get better over time.

I'm still amazed we have any form of RayTracing, even if right now it's being used like God Rays and Bloom when first introduced or in a J. J. Abrams movie. I so can't wait until every game stops constructing "mirror worlds" to show off the effect.

Also amazing to see 60 FPS targets, let alone these 120 FPS modes.


Well I think one thing we can say for certain is that XSX might have more flops and more bandwidth, but it ain't really showing 'em!

Being ROP limited, particularly at high frame rates, is reasonable I guess. MS specifically mentioned RT (and not raster) as being the driver for their fat bus. It would be funny if PS5 turned out to be better at 120Hz after MS had been banging on about it for months.

---------

Anyway, even when MS get their tools to a better place, I think we're going to continue to see some of what we've already seen: that for cross gen games at least, the maths per pixel ratio is a better fit for the PS5 arrangement.

And MS had better hope games start making use of mesh shaders to keep things efficient on the front end.

We were sitting on this for some time. We didn't know what to make of it. But it's out there now.

We think this might be causing some issues on the front end. It's worth discussing, but it's not going to explain all of their issues.

You may want to reach out to Al, or see if he wants to post here. But you're looking at (if we understand it) 32 ROPs for XSX, but they're double pumped.

Thread here: https://forum.beyond3d.com/posts/2176264/

edit: help? picture is failing to show for people not logged in. Seems to be a permission issue.
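For what it's worth, the ROP point above can be put into rough numbers. This is only a back-of-the-envelope sketch: the 32 double-pumped ROP units for XSX come from the post above, while the 64 ROPs for PS5 and both clocks (1.825 GHz for XSX, up to 2.23 GHz for PS5) are assumptions taken from public spec sheets, and `peak_fill_rate_gpix` is just an illustrative helper name.

```python
def peak_fill_rate_gpix(rop_units: int, pixels_per_unit_per_clock: int, clock_ghz: float) -> float:
    """Theoretical peak pixel fill rate in Gpixels/s (no bandwidth or blending limits)."""
    return rop_units * pixels_per_unit_per_clock * clock_ghz


# XSX: 32 ROP units, double pumped (2 pixels per clock each), per the post above.
# Clock is an assumption from the public spec sheet.
xsx_fill = peak_fill_rate_gpix(32, 2, 1.825)

# PS5: 64 ROPs at 1 pixel per clock each, at the maximum boost clock.
# Both the ROP count and the clock are assumptions, not from this thread.
ps5_fill = peak_fill_rate_gpix(64, 1, 2.23)

print(f"XSX peak fill rate: {xsx_fill:.1f} Gpix/s")
print(f"PS5 peak fill rate: {ps5_fill:.1f} Gpix/s")
print(f"PS5 / XSX ratio:    {ps5_fill / xsx_fill:.2f}")
```

If those assumptions hold, the paper fill rate favours PS5 purely because of clock speed, which would fit the idea that ROP-bound 120Hz modes are where it looks best. It says nothing about how often real games are actually ROP bound.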


I see 2 possible culprits here (or a combination of these):

N6 is a partial EUV node that uses the same tools and design rules as N7 (DUV only), and TSMC expected many N7 designs to transition to N6. Performance is expected to be actually similar to N7 (and inferior to N7+ BTW), and density is just 18% higher.

The case for moving the SoC from N7 to N6 is that the transition should be pretty cheap, yields should be somewhat better thanks to the EUV layers, and the 18% density improvement should lead to a ~260mm^2 SoC.

N6 is most of all a cost saving measure for the SoC on Sony's part, and not for the PSU or cooling system.

That said, I think we should expect Microsoft to make the transition to N6 on their consoles as well, and most of AMD's GPU/CPU designs that will last for another year or two.

I don't know if an N6 wafer is 20% more expensive than an N7 one, but the 18% transistor density improvement alone would cover most of that price difference, meaning a $90 SoC on N7 wouldn't cost much more on N6.
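The arithmetic behind that claim can be sketched quickly. Everything here is hypothetical in the same way the post is: the 20% wafer premium, the 18% density gain and the $90 SoC are the post's own figures, the ~308mm^2 starting die size for the N7 SoC is an assumption (it is not stated in this thread), and the sketch assumes the whole die shrinks uniformly and ignores yield differences, which is optimistic.

```python
def shrunk_die_area(area_mm2: float, density_gain: float) -> float:
    """Die area after a uniform density improvement (e.g. 0.18 for 18%)."""
    return area_mm2 / (1.0 + density_gain)


def cost_per_die_ratio(wafer_cost_increase: float, density_gain: float) -> float:
    """Relative cost per die: pricier wafer divided by more dies per wafer."""
    return (1.0 + wafer_cost_increase) / (1.0 + density_gain)


n7_die_area_mm2 = 308.0     # assumption: approximate N7 SoC size, not from the thread
n7_soc_cost_usd = 90.0      # hypothetical figure used in the post
wafer_premium = 0.20        # hypothetical N6 wafer price increase from the post
density_gain = 0.18         # N6 density improvement quoted in the post

n6_die_area_mm2 = shrunk_die_area(n7_die_area_mm2, density_gain)
n6_soc_cost_usd = n7_soc_cost_usd * cost_per_die_ratio(wafer_premium, density_gain)

print(f"N6 die area: ~{n6_die_area_mm2:.0f} mm^2")
print(f"N6 SoC cost: ~${n6_soc_cost_usd:.2f} (vs ${n7_soc_cost_usd:.2f} on N7)")
```

Under these assumptions the die lands around 261mm^2 and the cost per chip rises by under 2%, before counting any yield improvement from the EUV layers.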

I understand that more clearly now, thanks! So that actually does make N6 a good candidate, potentially, for a PS5 Slim for example. The only issue is, as you just brought up, it performs worse than N7+, and IIRC PS5 is on 7nm+ (as is Series X, most likely), so if that's the case how does moving the design to N6 affect the performance? Is it a trade-off of smaller chip size but slightly worse power consumption at the same clocks, where perhaps the savings gained on a smaller node with better density could bring cooling costs down enough to offset that?

At least at a cursory glance, it sounds like N6 would be better suited to something else, like a PS5 Pro, but at that point 5nm would be better since you get better power reduction, a perf boost and a bigger density shrink... although the costs increase to match. Personally I still think that if there are mid-gen refreshes, they'll be on 5nm at least, probably 5nm EUVL for at least one of them.

Miles Morales is doing a good job. 30fps ray trace, 60fps without. I would like to be given this choice in more titles.

Honestly raw RT performance won't be the way to go for next-gen, but I think combinations of RT and other things like better SSR, plus artistic choices (if you look at Demon's Souls for example there are parts you'd have sworn were RT'd, but IIRC the game has no RT in it), in a combination of hardware and software approaches, can give really smart results.

Personally I'm still curious about Project Mara because the RT in the interior shots was amazing, and I wonder if scale of the game (i.e a smaller game) brings with it better budget margins for pushing RT without compromising performance too much. All the same, if things like DirectML work as well as intended that should free up a ton more of the performance budget to push RT. I hope that ends up being the case.

That said, relying on DirectML or equivalent would mean a game-by-game approach, similar to what Nvidia are doing with DLSS now on PC, therefore probably best to temper expectations on that level of RT except for 1P games and massive 3P games that can afford that type of approach on multiple platforms, like the next GTA (or Cyberpunk, at least for what I saw yesterday).

Kind of agree, or at least hoping developers find ways to make RT more efficient through software over time. Every console gen it seems like the aim is to reach a higher resolution or higher FPS. Last gen 1080p, 30-60FPS, mid gen 4K 30-60FPS, and now we're aiming for 4K again but at 60-120FPS with RT. Wondering if next time around 4K 60-120 will continue to be the goal along with RT hardware being more mature? Maybe we'll finally be able to see new hardware used strictly on improving graphics as opposed to pushing more pixels and more frames per second? I don't see 8K being a thing anytime soon but who knows 7ish years from now.

Honestly I don't know why native resolution keeps ending up as a major push. I think we're good enough @ 4K, even on massive home theater screens 4K provides extremely good clarity. Are these companies expecting us to end up with cinema screens in our homes within 10 years? What about those of us in apartments, real estate is a precious resource xD.

The focus going forward should be on graphical fidelity; I know some people keep saying we're reaching a saturation point of diminishing returns... but are we really? Look, until I get a game with Fifth Element, Blade Runner 2049 or Endgame levels of CG fidelity in real-time, I think there's still a good ways to go.

That's even knowing the increases in production workforce that'd be needed, but I think smarter algorithms and maybe advances in things like GPT AI (that could assist massively in offloading coding and programming and expedite that process, and perhaps even asset creation processes... though it would prob still need a human touch involved to give it that bit of personality and guide things along) can help tremendously with that.



Deleted member 11852

Guest

The meltdowns and goalpost shifting that's happening across certain gaming boards is ridiculous. Both systems are performing quite nicely and will definitely get better over time.

It's gaming. You can't simply be happy with performance that is acceptable to you as an individual, you must crush the spirits of others in your pursuit of having one game sprite vanquish another game sprite, be they Vikings, aliens or cars. It's truly sad that some people cannot just be content with what they have; it has to be "better" (subjective) than what somebody else has.

As McDonald J Trump would say: Sad!

steveOrino

Regular

The question is why MS decided to blow a lot of the power budget on the weird memory config + controller while clocks suffer. Those GPU clocks are why I never thought the Series X was the hands down performance winner. Maybe they weren't confident in cooling like Sony was? TBD I guess, but to me Sony has the far more thought out architecture..... And now that the 6800 benchmarks are up it looks like it clocks like a boss.

GG Cerny, Sony was wise to put you in charge.


The question is why MS decided to blow a lot of the power budget on the weird memory config + controller

The memory configuration has nothing to do with power budget.


Deleted member 13524

Guest

I understand that more clearly now, thanks! So that actually does make N6 a good candidate, potentially, for a PS5 Slim for example. The only issue is, as you just brought up, it performs worse than N7+, and IIRC PS5 is on 7nm+ (as is Series X, most likely), so if that's the case how does moving the design to N6 affect the performance? Is it a trade-off of smaller chip size but slightly worse power consumption at the same clocks, where perhaps the savings gained on a smaller node with better density could bring cooling costs down enough to offset that?

I believe the PS5 and SeriesS/X are all made on N7, not N7+ as the latter has higher performance characteristics than N6.

N6 wouldn't be a good qualifier for a PS5 Slim because its performance characteristics are similar to the N7 that both consoles are using already.

What N6 brings is higher density and better yields, i.e. each chip would be cheaper to manufacture.

And MS had better hope games start making use of mesh shaders to keep things efficient on the front end.

MS has to lead from the front.

And now they have enough first party studios to do so.

(I'm not disagreeing with you though)

I doubt SONY has any "secret sauce". Again, the anomaly is the XSX. XSX is performing worse than the 5700 XT, for goodness' sake. That can't be right or healthy. Even without the bells and whistles of RDNA 2.0, it should at least be on par with 5700 XT, but it isn't. I am sure MS has been aware of this for a while now. We just need to wait and see if MS will catch up to 5700 XT, let alone PS5, and if yes, whether it will also surpass it and when. I personally would expect that if XSX is not performing measurably better in late 2021 than it is now, then it won't perform substantially better in 2023 and so on either. I doubt very much this is a PS3 situation either... that was very different IMO.

Custom doesn't mean it will be useful for developers shipping multiplatform games; it can be something only useful for first party studios developing games for the platform. Functionality that exists on one platform but not the other, if it is not very easy or nearly automatic to use, will only be used by first party studios, which are under no pressure to release on another platform.

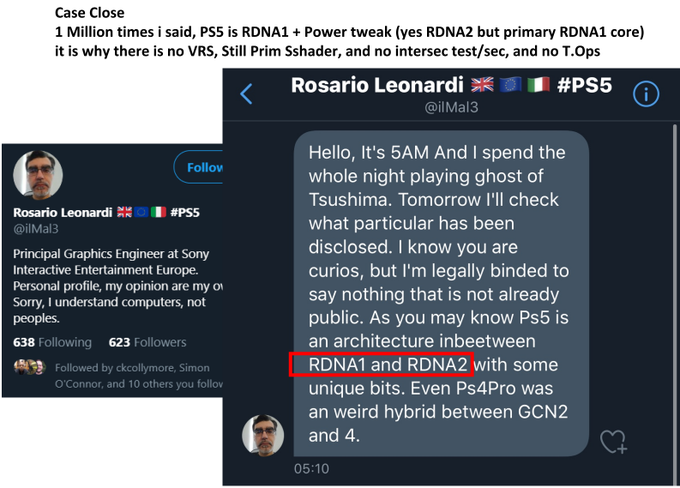

https://tekdeeps.com/ps5-does-not-use-rdna-2-architecture-has-sony-failed/

In this message and another one he said there are some unique functionalities. It doesn't mean they will help in multiplatform games at all. IMO the custom features are only useful to first party devs, apart from automated things like the cache scrubbers.

Microsoft decided Xbox Series needs to fully support DirectX 12 Ultimate. Sony has no need for the full feature set of a PC API.

For the moment, for me the question is not whether the XSX will prove it is the more powerful hardware, but when. How much time do they need to unlock the XSX's power? I am like Saint Thomas: I need proof to believe something.

That said, the games I want to play are on PS5, and that is more important to me than the power of the console.

EDIT: The search for secret sauce reminds me of misterxmedia. PS4 Pro had rapid packed math with double-rate FP16 and it was still less powerful than the Xbox One X. I don't believe in any secret sauce or custom feature giving Sony an advantage, other than maybe helping first party studios make better games.


The question is why MS decided to blow a lot of the power budget on the weird memory config + controller while clocks suffer. Those GPU clocks are why I never thought the Series X was the hands down performance winner. Maybe they weren't confident in cooling like Sony was? TBD I guess, but to me Sony has the far more thought out architecture..... And now that the 6800 benchmarks are up it looks like it clocks like a boss.

GG Cerny, Sony was wise to put you in charge.

Both consoles are likely beyond the 'sweet spot' on the power-to-performance curve.

AbsoluteBeginner

Regular

The question is why MS decided to blow a lot of the power budget on the weird memory config + controller while clocks suffer. Those GPU clocks are why I never thought the Series X was the hands down performance winner. Maybe they weren't confident in cooling like Sony was? TBD I guess, but to me Sony has the far more thought out architecture..... And now that the 6800 benchmarks are up it looks like it clocks like a boss.

GG Cerny, Sony was wise to put you in charge.

No, the clocks are what they are because MS also shot for a ~200W console, but the sweet spot for that 52CU GPU is already around 200W. Sony went with a 36CU chip whose sweet spot would likely be ~40W below what they shot for as a budget (200W). So basically they could go for max GPU clocks at a constant 200W and downclock when necessary, while MS couldn't.

MS should have shot for a 240W console if they wanted the same power strategy.

AbsoluteBeginner

Regular

I think it's pretty simple. Sony had the best tools last gen, they only got better this gen, and they sent the first dev kits almost 2 years ago. The first APU-based dev kits were at 3rd party studios around June 2019, so basically they had all the time in the world to get the best results from already great hardware, using a dev environment similar to the one they have used for the last 7 years.

It's a big advantage for the small power deficit they have on paper.
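To put a number on that paper deficit, here is a rough sketch of the usual peak FP32 calculation. The CU counts (52 and 36) are the ones quoted in this thread; the clocks (1.825 GHz for XSX, up to 2.23 GHz for PS5), the 64 lanes per CU and the 2 FLOPs per lane per clock (one fused multiply-add) are assumptions taken from public specs, and `peak_tflops` is just an illustrative name.

```python
LANES_PER_CU = 64          # assumption: FP32 lanes per RDNA compute unit
FLOPS_PER_LANE_CLOCK = 2   # assumption: one fused multiply-add counts as 2 FLOPs


def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS."""
    gflops = compute_units * LANES_PER_CU * FLOPS_PER_LANE_CLOCK * clock_ghz
    return gflops / 1000.0


xsx_tflops = peak_tflops(52, 1.825)   # fixed clock (assumed)
ps5_tflops = peak_tflops(36, 2.23)    # maximum boost clock (assumed)

print(f"XSX: {xsx_tflops:.2f} TFLOPS")
print(f"PS5: {ps5_tflops:.2f} TFLOPS")
print(f"XSX advantage on paper: {(xsx_tflops / ps5_tflops - 1) * 100:.0f}%")
```

On those assumptions the gap is roughly 18% in peak compute, and only when the PS5 GPU is holding its top clock. Peak numbers like this say nothing about how well either GPU is kept busy, which is what the tools argument is about.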


Unknown Soldier

Veteran

That shortcoming is the Series S.

*Cough* That's what I have been saying.

The PS4 advantage was visible from day one and it stayed for the whole generation. Apart from a few outliers running at 720p on Xbox One and 1080p on PS4, most of the time, before the consoles began to show their limits, it was 900p against 1080p at 30 fps or 720p against 900p at 60 fps.

Sony has done something but is hiding it. We know they don't have INT4 and INT8 "rapid packed math", but the Sony graphics engineer who said too much said they have many custom advanced functionalities. But for an unknown reason they don't want to talk about the APU.

I was thinking it is because they are ashamed of its inferiority, and maybe that is the case, and when Gamecore is finished the XSX will naturally be around 20% faster than the PS5, but this is annoying.

EDIT: IMO the PS5 advantage will be there for a few months to a year, and the XSX will naturally be ahead sooner rather than later.

You are making the same mistake people made for months; you are setting yourselves (and the people who read you) up for another disappointment. But the answer is probably not a mystery and is given by Cerny in his presentation:

- Narrow & fast design and making sure all CUs are busy. There are actually 2 key principles here, not one.

There is a reason that the high performance RDNA2 and RDNA1 GPUs have 8/10 CUs per shader array: it's the sweet spot between compute and efficiency for gaming purposes. It seems RDNA 1 & 2 shader arrays are most efficient when working with 8/10 CUs, not 12/14.

I think MS chose 12/14 because it makes sense for them as they are going to use those in servers to do compute oriented tasks (with ML and such). But they will certainly lose efficiency in gaming tasks.
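As a rough illustration of where those per-array figures come from, the sketch below divides physical CU counts by the number of shader arrays. None of these counts are stated in this thread: the physical CU totals and the shader array counts are assumptions taken from public die breakdowns, and the names are only illustrative.

```python
# (physical CUs, shader arrays) per GPU. All values are assumptions from
# public die breakdowns, not figures given in this thread.
gpus = {
    "5700 XT (Navi 10)": (40, 4),
    "6900 XT (Navi 21)": (80, 8),
    "PS5": (40, 4),
    "XSX": (56, 4),
}


def cus_per_array(physical_cus: int, shader_arrays: int) -> int:
    """Physical CUs hanging off each shader array, assuming an even split."""
    return physical_cus // shader_arrays


per_array = {name: cus_per_array(*config) for name, config in gpus.items()}

for name, count in per_array.items():
    print(f"{name}: {count} CUs per shader array")
```

If those counts are right, the PC parts and PS5 sit at 10 physical CUs per array and XSX at 14. The lower numbers in each pair (8 and 12) would presumably correspond to arrays where one dual-CU group is disabled for yield, since the consoles ship with 36 and 52 active CUs respectively.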
