> They are going to be paying like 200 bucks more for no meaningful amount of extra performance

Except it is not $200 more. It's $84 more.

i5-4570: $192

i5-5675C: $276

I would actually prefer a low-clocked 8-core Xeon with a 72 EU GPU + EDRAM. It would both compile fast and be useful for integrated GPU shader optimization.

> Actual retail prices for the 4570's faster follow-up, the 4590, and the 5675C are rather different, and for that $120 difference you can buy a discrete graphics card that will put Broadwell's Iris Pro to shame.

Good point. I did not think to check retail prices. And Broadwell is only available on Newegg. Let's hope Skylake will have better availability and pricing...

> The price difference that Intel asks for their Iris models is hardly worth it. Plus, I've yet to see benchmarks that prefer the EDRAM models to the similarly priced LGA2011 CPUs capable of quad-channel DDR3/DDR4.

Those CPUs require more expensive motherboards.
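The quad-channel argument is ultimately about peak memory bandwidth, which on paper is channels × 8 bytes per transfer (each DDR3/DDR4 channel is 64 bits wide) × transfer rate. A minimal sketch; the DDR3-1600 and DDR4-2133 speeds are illustrative assumptions rather than the configurations of any particular review, and sustained bandwidth in practice is lower than these peaks.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Peak theoretical DRAM bandwidth in GB/s (10^9 bytes per second).

    Each DDR3/DDR4 channel is 64 bits (8 bytes) wide, so every transfer moves
    `bus_bytes` bytes per channel. Real, sustained bandwidth is lower.
    """
    return channels * bus_bytes * mega_transfers_per_s * 1e6 / 1e9


# Illustrative configurations (assumed memory speeds, not taken from the thread).
configs = {
    "dual-channel DDR3-1600": (2, 1600),
    "quad-channel DDR4-2133": (4, 2133),
}

for name, (channels, mts) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(channels, mts):.1f} GB/s")
```

Doubling the channel count doubles the theoretical peak at a given speed, which is why the LGA2011 platforms come up in a memory-bandwidth comparison at all; whether games benefit is a separate question.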

> Really? That sounds sweet, except when I tried enabling both GPUs in my system, the Intel driver created a "virtual monitor" which, as far as I could tell, could not be disabled. Windows then put another, off-screen desktop on that monitor, which I could not access (but could still lose my mouse pointer into, since it was attached to an edge of the screen). This randomly caused my monitor to display nothing but solid black when coming out of power save or being turned on.

Might be glitchy on certain setups. Note that there's an interaction with the BIOS as well, so make sure you're all up to date there.

> I see anybody remotely interested in playing games on PC either going for a high-end CPU and a high-end GPU, or for a hardly noticeably slower i5 or i7 and spending the $100-200 they save on a GPU. The latter is undoubtedly going to give you much better performance for the same money.

If you're talking desktop, then a dGPU makes sense, but an increasingly large number of gamers don't even have a desktop these days. And as soon as you start segmenting off "well, they aren't *real* gamers" if they don't have X/Y/Z hardware and play A/B/C games, it gets a little bit silly. Also, I'll win that competition, you 4-core fake-gamer PEASANTS!

> I want an Intel 5x5 motherboard and a 72 EU GT4e GPU with a huge cache. A discrete GPU means a bigger case. I think 5x5 is only 65W though.

Yeah, a quick glance at the SKUs should demonstrate that these big GPUs are primarily designed for mobile devices, where form factor and power are relevant constraints.
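For a sense of scale of a 72 EU part, peak FP32 throughput is EUs × FLOPs per EU per clock × clock. Intel's Gen9 architecture documentation describes each EU as having two SIMD-4 FPUs that can each issue a fused multiply-add, i.e. 16 FP32 FLOPs per clock when an FMA counts as two. A minimal sketch; the 1.0 GHz clock is an assumed placeholder, not the spec of any shipping SKU, and peak FLOPS says nothing about how well a given workload keeps the EUs fed.

```python
# Gen9 EU: 2 FPUs x 4 FP32 lanes x 2 FLOPs per fused multiply-add.
FPUS_PER_EU = 2
LANES_PER_FPU = 4
FLOPS_PER_FMA = 2


def peak_fp32_gflops(eus: int, clock_ghz: float) -> float:
    """Peak theoretical FP32 throughput in GFLOPS (FMA counted as 2 FLOPs)."""
    flops_per_eu_per_clock = FPUS_PER_EU * LANES_PER_FPU * FLOPS_PER_FMA  # = 16
    return eus * flops_per_eu_per_clock * clock_ghz


# 24 EU vs 72 EU configurations, both at an assumed 1.0 GHz.
for eus in (24, 72):
    print(f"{eus} EU @ 1.0 GHz: {peak_fp32_gflops(eus, 1.0):.0f} GFLOPS")
```

At equal clocks the 72 EU part has three times the ALU throughput of a 24 EU part, which is also why it needs a large cache like the EDRAM to avoid being starved by shared system memory.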

> So what you actually want is the Xbox One's APU with better CPU cores

In my particular (niche) professional use case, I especially need a desktop chip with an Intel integrated GPU, since my workstation can already be configured with a pair of discrete Radeons (CrossFire) or GeForces (SLI). Discrete Intel GPUs do not exist, so I need to select my CPU based on its integrated GPU. Also, the currently available AMD CPU cores are not fast enough for compiling code compared to the new Skylake cores.

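> Actual retail prices for the 4570's faster follow-up, the 4590, and the 5675C are rather different, and for that $120 difference you can buy a discrete graphics card that will put Broadwell's Iris Pro to shame.

Sure, but you lose a bunch of the CPU side. It's all a question of priorities. If you are primarily interested in perf/$ in gaming, I completely agree that LGA Iris Pro is not a good trade-off. But a lot of people are not that price sensitive to start with, and the cool thing is that you're not really giving up any gaming potential by having a beefier iGPU.

> The price difference that Intel asks for their Iris models is hardly worth it.

If you're going on ARK prices... don't go on ARK prices. The 5775c is slightly more expensive than a regular high-end i7 in MSRP (given apparent stock issues, retail might be skewed for now), but not by very much, and you do get quite a bit of hardware for that cost.

> Plus, I've yet to see benchmarks that prefer the EDRAM models to the similarly priced LGA2011 CPUs capable of quad-channel DDR3/DDR4.

http://techreport.com/review/28751/intel-core-i7-6700k-skylake-processor-reviewed/14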

The 5775c comes out as the best CPU for gaming, ahead of a 6700K and a 5960x, despite a frequency and power deficit.

And yeah, they are not at all similarly priced once you add an LGA2011 motherboard and the quad-channel RAM. Don't get me wrong, I love the hell out of my 5960x, but if your primary purpose is gaming, it is not really worth the money for current games vs. other options. New games and DX12 may change that, of course; we'll have to see.
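One way to reason about how a lower-clocked chip with a large L4 can come out ahead in games is the textbook average memory access time (AMAT) model: each cache level contributes its hit time, and only its misses pay for the next level out. A minimal sketch; every latency and miss rate below is a hypothetical round number chosen for illustration, not a measurement of the 5775c or any other part.

```python
def amat(levels: list[tuple[float, float]], memory_latency: float) -> float:
    """Average memory access time (AMAT) for a cache hierarchy.

    `levels` is ordered from the innermost level considered to the outermost;
    each entry is (hit_time, local_miss_rate), where the miss rate is the
    fraction of accesses reaching that level that miss in it. Misses in the
    last level are served by DRAM at `memory_latency`.
    """
    time = memory_latency
    # Fold from the outside in: t_i = hit_time_i + miss_rate_i * t_(i+1)
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time


# Hypothetical round numbers in ns (NOT measurements), for accesses that
# have already missed in L2.
l3 = (10.0, 0.30)    # assumed L3 hit time and local miss rate
l4 = (40.0, 0.40)    # assumed EDRAM L4 hit time and local miss rate
dram_ns = 80.0       # assumed DRAM latency

without_l4 = amat([l3], dram_ns)      # 10 + 0.30 * 80
with_l4 = amat([l3, l4], dram_ns)     # 10 + 0.30 * (40 + 0.40 * 80)
print(f"without L4: {without_l4:.1f} ns, with L4: {with_l4:.1f} ns")
```

The model only captures latency. It says nothing about the extra bandwidth the EDRAM provides, and a higher core clock can still win when the working set fits in L3, so it illustrates the trade-off rather than predicting benchmark results.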

> Now that you've convinced us that a CPU with lots of fast L4 is better for gaming than a higher-speed CPU without, all you need to do now is convince Intel to release one (on the latest IP and in the 4 GHz+ range) so that we can buy the damn thing

Oh trust me, I constantly advocate for these sorts of SKUs, but it's not an area that I have a huge amount of influence over. This stuff is mostly driven by the market, and as such external folks have more influence than internal ones in most cases. We've made some progress with stuff like the 5775c existing at all. Hopefully those sell well, as that's a stronger story to the folks that decide these things than my whining.

Would be nice if you could pass on sebbbi's wish for an 8-core + L4 + 72 EU part too. I'd likes me one of those!

Define "support".

I suppose I should note that Gen is on the narrower side of architectures and would probably not see the same benefit that other architectures might get.

Hardware support. Unlike GCN, Maxwell doesn't support asynchronous shaders in hardware. Does Gen9 support this in hardware like GCN, or is it more similar to Maxwell?

> What I'm trying to stress, though, is that a lack of "hardware support" doesn't necessarily mean it's "bad". Perhaps Intel already keeps its execution units mostly fed. An architecture like GCN is wider, so it has a greater likelihood of having idle units (and thus of benefiting more from async compute). Hard to say what benefit async compute would bring to Gen (I'm sure some, but "how much" is difficult to answer).

Right, this. I've commented on this before in other threads, but the marketing/enthusiast understanding around this issue has gotten extremely confused. AMD obviously has reason to add confusion to the consumer marketing message, and I get that, but it's annoying, as even some developers are confused.
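The point that a wider machine is more likely to have idle units, and so has more to gain from async compute, can be illustrated with a deliberately idealized model. If a graphics pass of duration `t_graphics` keeps the shader array busy only a fraction `utilization` of the time, an independent compute job can at best soak up the idle capacity, and only its remainder extends the frame. A minimal sketch; the model, the `frame_time` helper and the utilization values are hypothetical, and it ignores contention for caches, registers and bandwidth, so it gives an upper bound on the benefit rather than a prediction for GCN, Maxwell or Gen.

```python
def frame_time(t_graphics: float, t_compute: float, utilization: float, async_compute: bool) -> float:
    """Idealized frame time for one graphics pass plus one independent compute pass.

    t_compute is the time the compute pass needs when it has the whole GPU.
    With async compute it can fill the idle capacity (1 - utilization) left
    during the graphics pass; any remaining work runs afterwards.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    if not async_compute:
        return t_graphics + t_compute
    idle_capacity = (1.0 - utilization) * t_graphics
    return t_graphics + max(0.0, t_compute - idle_capacity)


# Same workload (times in ms) on a hypothetical "mostly fed" vs "often idle" GPU.
for util in (0.9, 0.6):
    serial = frame_time(10.0, 3.0, util, async_compute=False)
    overlapped = frame_time(10.0, 3.0, util, async_compute=True)
    print(f"utilization {util:.0%}: {serial:.1f} ms -> {overlapped:.1f} ms")
```

In this toy model, a GPU that already keeps its units busy 90% of the time saves about 1 ms, while one that sits idle 40% of the time can hide the whole compute pass. That is the sense in which "no hardware support" and "big async gains" are separate questions.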

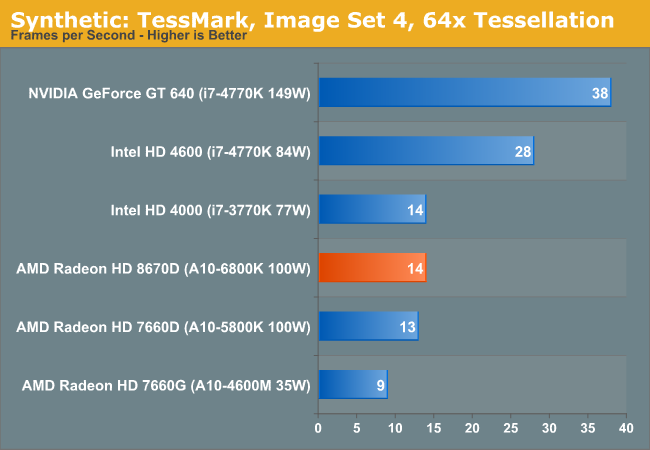

This is true. I wonder if anyone has ever studied, for example, the tessellation performance of Intel's DX11 GPUs.

OTOH, until Skylake's 72 EU part comes out, there will be little to no incentive to even try enabling tessellation in a game on an Intel iGPU.