> I recommend this video that HU references btw:
>
> It's from 2017 but gives a good background on why we may be seeing this discrepancy now. This may be down to architectural differences that hurt AMD in the past but may be helping them now.

This video is inaccurate in many aspects, and I'm not even sure why HWU is referencing it, since they haven't really shown anything similar to what this video is about.


Nvidia's 3000 Series RTX GPU [3090s with different memory capacity]

- Thread starter: Shortbread

- Tags: nvidia

Flappy Pannus

Veteran

> This video is inaccurate in many aspects, and I'm not even sure why HWU is referencing it, since they haven't really shown anything similar to what this video is about.

It absolutely does. Did you watch it in full?

> This video is inaccurate in many aspects, and I'm not even sure why HWU is referencing it, since they haven't really shown anything similar to what this video is about.

Out of curiosity, what are the inaccuracies in the video? The nerdtechgasm one.

> It absolutely does, did you watch it in full?

No, it doesn't. Yes, I did.

> Out of curiosity, what are the inaccuracies in the video? The nerdtechgasm one.

Well...

- Fermi kernel execution has nothing to do with DX12.

- AMD didn't "move to h/w scheduling with GCN" in any sense comparable to what NV changed, scheduling-wise, in Kepler relative to Fermi.

- AMD didn't "work with Sony to develop GCN".

- "Driver overhead" that gets split across several CPU threads doesn't become "less overhead". It gets split, which lets multicore CPUs process it faster, but the sum stays the same. This is why I don't particularly like the whole "driver overhead" moniker here: it's simply how the respective drivers operated back then, with NV's being better suited to multicore CPUs and even doing automatic parallelization. That said, I'm not sure this can actually be described as "NV's DX11 driver is always MT"; something tells me it's not "always" MT.

- GCN doesn't do anything differently from Kepler when draw calls are sent to the GPU from the API through the driver. The whole explanation of why DX11 MT doesn't fit AMD h/w makes no sense. All GPUs have global thread schedulers in h/w, and this has nothing to do with API-side work submission. The claim that AMD can submit work in parallel in DX12 (which is very apparent nowadays) but can't do so in DX11 because of h/w scheduling is simply incorrect. This was (is?) purely an AMD driver-side issue.

- AMD and NV have had basically the same "s/w scheduler" since Kepler. He's confusing API/driver-side work submission with work distribution on the GPU h/w, which was rather different between AMD and NV up until Volta. The latter, though, has no impact on the CPU whatsoever.

- DX12 and VK have a different work submission architecture, which removes the bottleneck DX11 had on multicore CPUs. This is true for any GPU that supports DX12 and VK and has no relation to how GCN was designed. GCN has issues with context switching and single-thread performance, which can be covered by running multiple streams of different contexts so that the bubbles get filled with different workloads; hence the async compute fame, which still mostly favors GCN. This is where HWS/ACEs come in and where the multiple submit queues of DX12/VK help, but this isn't about scheduling: these queues are scheduled the same way as a single queue.

- AFAIK the NV driver doesn't "reassemble the streams" on Volta+, and the results we're seeing are on Turing and Ampere. HWU should've tested GCN and Pascal too, btw. I also doubt that "reassembling" streams which are presumably completely independent would have any noticeable CPU impact.

- NV GPUs can and do benefit from DX12 and Vulkan in almost any game which is fully CPU limited. It does "take a very competent game developer" to code a game that runs better than NV's DX11 driver does, but this isn't due to CPU-side work submission. That is arguably the easiest new part of DX12/VK to make use of, as shown again by the fact that many (most?) DX12/VK games run better on NV h/w than their DX11/OGL versions when CPU limited. Where it does matter is mostly in resource usage (resource binding issues were already mentioned here).

- It is immensely harder to make driver-level auto-parallelization of a DX11 work submission stream work than to "reassemble the streams" of DX12/VK in the driver. These "streams" do not have a "high thread data dependency", which is precisely why they are separate queues in DX12/VK.

- "On AMD GPUs, draw call submission goes straight to the h/w scheduler": yeah, they magically bypass the API and the driver in the process and land in the h/w scheduler straight from the application. I mean, c'mon.
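To make the "split, not reduced" point about driver overhead concrete, here is a toy sketch. It assumes hypothetical, made-up per-draw-call driver costs (not measured numbers from any real driver) and hands them out to N worker threads with simple greedy list scheduling (each call goes to the least-loaded thread). The total CPU time stays the same; only the wall-clock time per frame drops.

```python
import heapq


def split_submission_work(call_costs_us, threads):
    """Greedy list scheduling of per-draw-call driver work onto worker threads.

    call_costs_us: hypothetical CPU cost of each draw call, in microseconds.
    Returns (total_cpu_us, wall_clock_us): the summed work across all threads
    and the load of the busiest thread (the time until all work is done).
    """
    if threads < 1:
        raise ValueError("need at least one thread")
    # Min-heap of (current load, thread index) so the least-loaded thread pops first.
    loads = [(0, t) for t in range(threads)]
    heapq.heapify(loads)
    for cost in call_costs_us:
        load, t = heapq.heappop(loads)
        heapq.heappush(loads, (load + cost, t))
    total = sum(call_costs_us)
    wall = max(load for load, _ in loads)
    return total, wall


if __name__ == "__main__":
    calls = [5] * 2000  # hypothetical: 2000 draw calls at 5 us of driver work each
    for n in (1, 2, 4, 8):
        total, wall = split_submission_work(calls, n)
        print(f"{n} thread(s): total CPU {total} us, wall clock {wall} us")
```

With these made-up numbers the total stays at 10000 us for every thread count, while the wall clock goes 10000, 5000, 2500, 1250 us. The model ignores the synchronization cost that real driver-side parallelization adds, so in practice the total would, if anything, grow slightly.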


Flappy Pannus

Veteran

As for "they need to test this with more games" (like I want):

@DegustatoR Cool. Thanks for the informative post.

> As for "they need to test this with more games" (like I want):

"Some people" do understand. "Some people" point out that to make loud claims, more effort must be put into the testing.

Flappy Pannus

Veteran

Interesting, albeit 3 of the games were the same ones in this recent test.

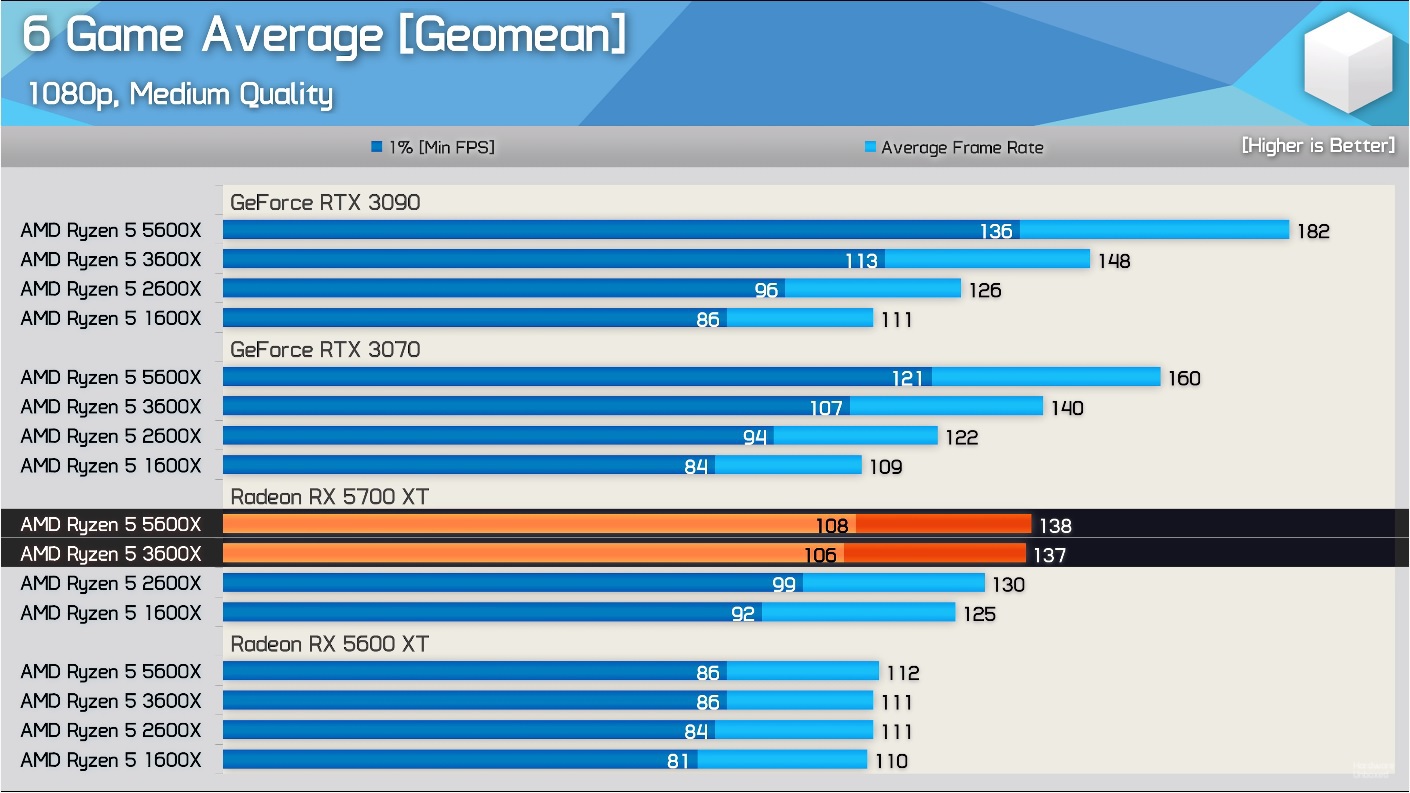

The games tested in this case:

- Cyberpunk 2077
- Rainbow Six Siege
- Horizon Zero Dawn
- Shadow of the Tomb Raider
- Watch Dogs Legion
- Assassin's Creed Valhalla

I'd still like to see more DX12/Vulkan titles, as well as CPU graphs; I want to see the average utilization level.

> More games are tested and the trend is the same.

I don't see the same trend in the screenshot above, tbh.

> I don't see the same trend in the screenshot above tbh.

Of course you don't see it in the screenshot that shows GPU-limited settings on the AMD cards.

I guess it's too much to expect intellectually honest discussion with some.

> I guess it's too much to expect intellectually honest discussion with some.

This is true; I don't see any point discussing this with you further.

To be clear: I see zero point in an "intellectually honest discussion" with someone who starts the discussion with a personal attack.

Flappy Pannus

Veteran

> I guess it's too much to expect intellectually honest discussion with some.

Well, to be fair, it's largely my fault for choosing the wrong screenshot to illustrate that point. I think people should watch these videos if they care about this stuff, sure, but he specifically referenced my screenshot.

So much time wasted testing low-end CPUs with high-end GPUs while still ignoring raytracing. I guess HBU's viewership aligns more with people who still play at 1080p with medium settings.

Here is a comparison in WoW with DX11 and DX12, windowed 720p on Ampere:

Do you really believe that Blizzard doesn't know that DX12 is broken on Nvidia hardware?

Flappy Pannus

Veteran

> So much time wasted testing low-end CPUs with high-end GPUs while still ignoring raytracing. I guess HBU's viewership aligns more with people who still play at 1080p with medium settings.

Raytracing introduces additional CPU overhead as well, and it can't be run on the 5600 XT/5700 XT. The video is specifically about a potential increase in CPU demands from running modern DX12 titles on lower-end CPUs; it's not a review of GPUs.

> Raytracing introduces additional CPU overhead as well

Which in itself is an interesting area to test now too, between RDNA and Turing/Ampere.

Would probably require some seriously low resolution to make RT games run mostly CPU limited.

It would also be interesting to compare typical CPU loads between RT games which run fine on AMD h/w and games which don't.
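Why it takes a "seriously low resolution" to make RT games CPU limited can be sketched with a common first-order model: with CPU and GPU work pipelined, frame time is roughly the larger of the two per-frame costs. The sketch below further assumes GPU time scales linearly with pixel count and CPU time stays fixed per frame; the 8 ms CPU and 60 ms GPU-at-2160p figures are made-up illustrations, not measurements of any game or card.

```python
def frame_time_ms(cpu_ms, gpu_ms_at_ref, ref_pixels, pixels):
    """Return (frame_time_ms, limiter) under a simple pipelined model.

    Assumption: GPU time scales linearly with the number of pixels rendered,
    while CPU time per frame does not depend on resolution.
    """
    gpu_ms = gpu_ms_at_ref * pixels / ref_pixels
    # CPU and GPU work overlap, so the slower side sets the frame time.
    limiter = "CPU" if cpu_ms >= gpu_ms else "GPU"
    return max(cpu_ms, gpu_ms), limiter


RESOLUTIONS = {
    "2160p": 3840 * 2160,
    "1440p": 2560 * 1440,
    "1080p": 1920 * 1080,
    "720p": 1280 * 720,
}

if __name__ == "__main__":
    cpu_ms = 8.0      # hypothetical CPU cost per frame with RT enabled
    gpu_ms_4k = 60.0  # hypothetical GPU cost per frame at 2160p with RT
    for name, px in RESOLUTIONS.items():
        ms, limiter = frame_time_ms(cpu_ms, gpu_ms_4k, RESOLUTIONS["2160p"], px)
        print(f"{name}: {ms:.1f} ms ({1000 / ms:.0f} fps), {limiter} limited")
```

With these made-up numbers only the 720p case ends up CPU limited, since the GPU cost drops to about 6.7 ms there. Real RT workloads don't scale this cleanly with resolution, so this is an intuition aid, not a prediction.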

Flappy Pannus

Veteran

> Which in itself is an interesting area to test now too between RDNA and Turing/Ampere.
>
> Would probably require some seriously low resolution to make RT games run mostly CPU limited.
>
> It would also be interesting to compare typical CPU loads between RT games which run fine on AMD h/w and games which don't.

I would like to see those as well! Lord knows it's a better use of time for most end users at this point, I think, than seeing another OEM board review for a card that no one can purchase, but I understand that you gotta give them airtime if they're sending you product.

> Which in itself is an interesting area to test now too between RDNA and Turing/Ampere.
>
> Would probably require some seriously low resolution to make RT games run mostly CPU limited.
>
> It would also be interesting to compare typical CPU loads between RT games which run fine on AMD h/w and games which don't.

It was actually very easy for me to find CPU-limited scenes in Control. The main hub area of the game was CPU limited for me.

https://forum.beyond3d.com/threads/dxr-performance-cpu-cost.62177/

> "Some people" do understand. "Some people" point out that to make loud claims, more effort must be put into the testing.

It's not like any amount of testing would change your mind.
