Nvidia's 3000 Series RTX GPU [3090s with different memory capacity]

- Thread starter Shortbread

- Tags

- nvidia

This kind of silly hand-waving platform fanboy stuff is this forum at its worst; this exact kind of detail-oriented discussion is what Beyond3D should be about. If you think it's irrelevant, maybe just move on.

Like the idea some have that RT and reconstruction tech should be omitted in benchmarks and discussions because the PS5 doesn't perform all that well at those tasks?

Flappy Pannus

Veteran

What the hell does that have to do with your post, or this thread at all? What does the PS5 have to do with this? If you have a problem with HU* where you think their bias is so prevalent that it's actually caused them to manufacture these benchmarks, then say as much. Otherwise create another thread with this ridiculous resetera content.

*Edit: Or assuming this is some other forum drama where you disagreed with me on another topic. Regardless, keep it in that thread.

gamervivek

Regular

Paul's Hardware's 3080 review also showed this issue in one of the games HUB had tested in their previous video. He used a 3950X and only high settings in SoTR, leading to the result below at 1080p.

Timestamped here:

@gamervivek Yah, interesting. He seems to make the assumption that the 5700xt is doing better because it's an AMD gpu paired with an AMD cpu, but I have no idea why he thinks that would actually be a valid assumption. I'm actually surprised that no one has thoroughly investigated this before. I actually thought AMD had a chance with RDNA2 to be the best esports gpu, but I was thinking more because of clock and fillrate advantages, not because of the driver's cpu overhead.

Edit: Also I hope the attention pushes Nvidia to make some improvements if they can, because I'm heavily cpu limited in apex legends and valheim

So with a slow CPU a Geforce card is at worst as slow as a radeon card. Then when you add more CPU power so the CPU isn't a bottleneck you get better performance with a Nvidia card. What exactly should be a surprise here?

You might want to learn how to better understand the deeper complexities of a bar graph.

5700xt besting the 3070 on older cpus, and the 3070 is a gpu people with older cpus would reasonably be looking at.

Personally I think these are really interesting results and well worth highlighting. This would seem to be quite relevant to esports players, and perhaps it helps to explain why Ampere seemingly loses performance to RDNA2 at lower resolutions even on powerful CPUs. It might not be anything inherent to the GPU architecture but rather the drivers holding it back. Hopefully highlighting this will drive Nvidia to fix it (if possible).

It may also become more relevant as this generation moves on and the consoles' CPUs become better utilised, which will ultimately push a lot of CPUs that are considered quite good today into the low-end category.

DavidGraham

Veteran

Those are interesting findings. I wonder if the same holds true for DX11 games on AMD GPUs? Did RDNA fix their high overhead on that API as well?

I would also like to see HUB do an extensive review on the state of RT performance, considering the number of games releasing with RT these days. They clearly showed they are capable of running more than 1000 benchmarks on something they deemed important enough, but I guess that's too much to hope for considering their current "Agenda".

DegustatoR

Legend

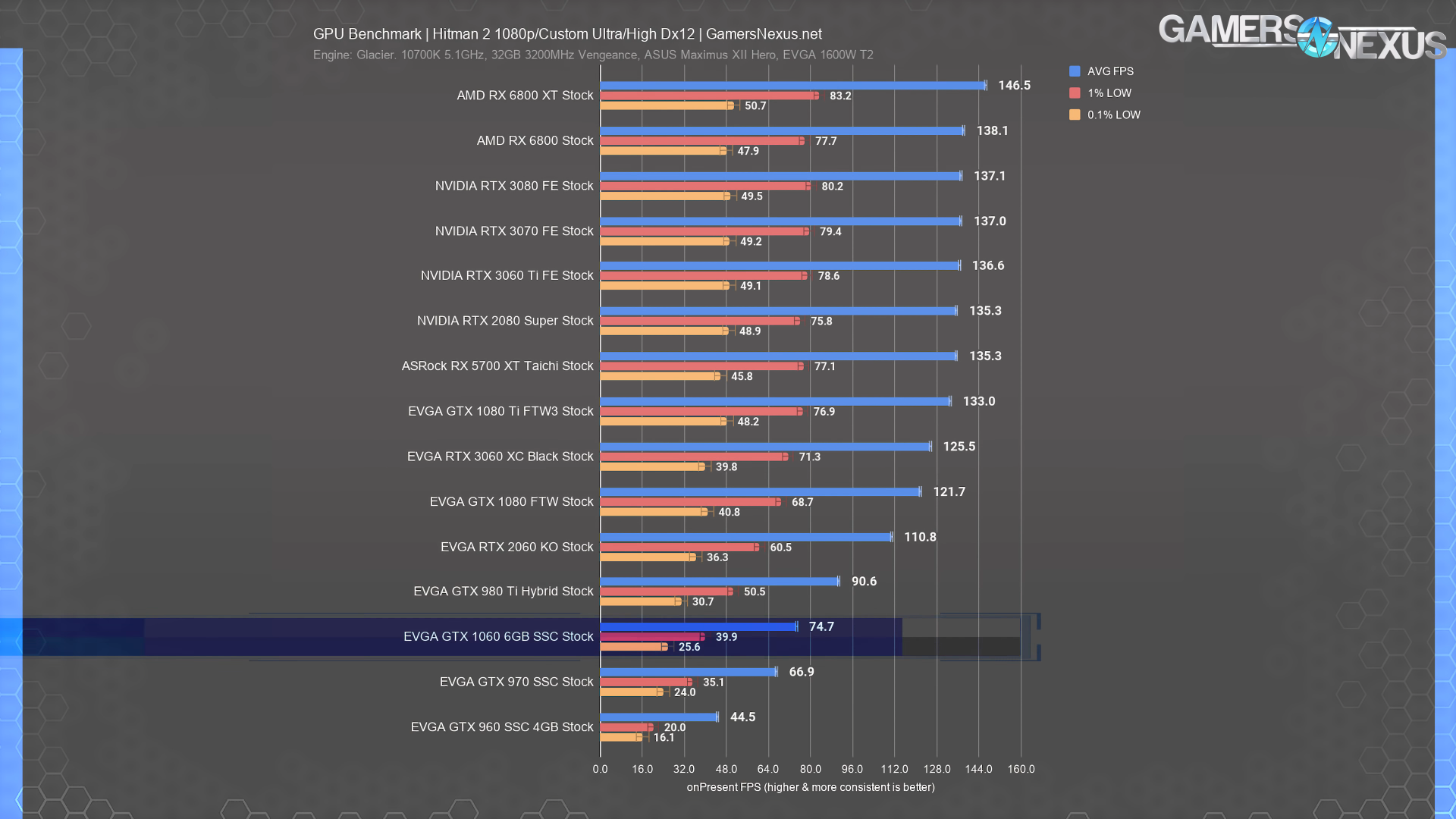

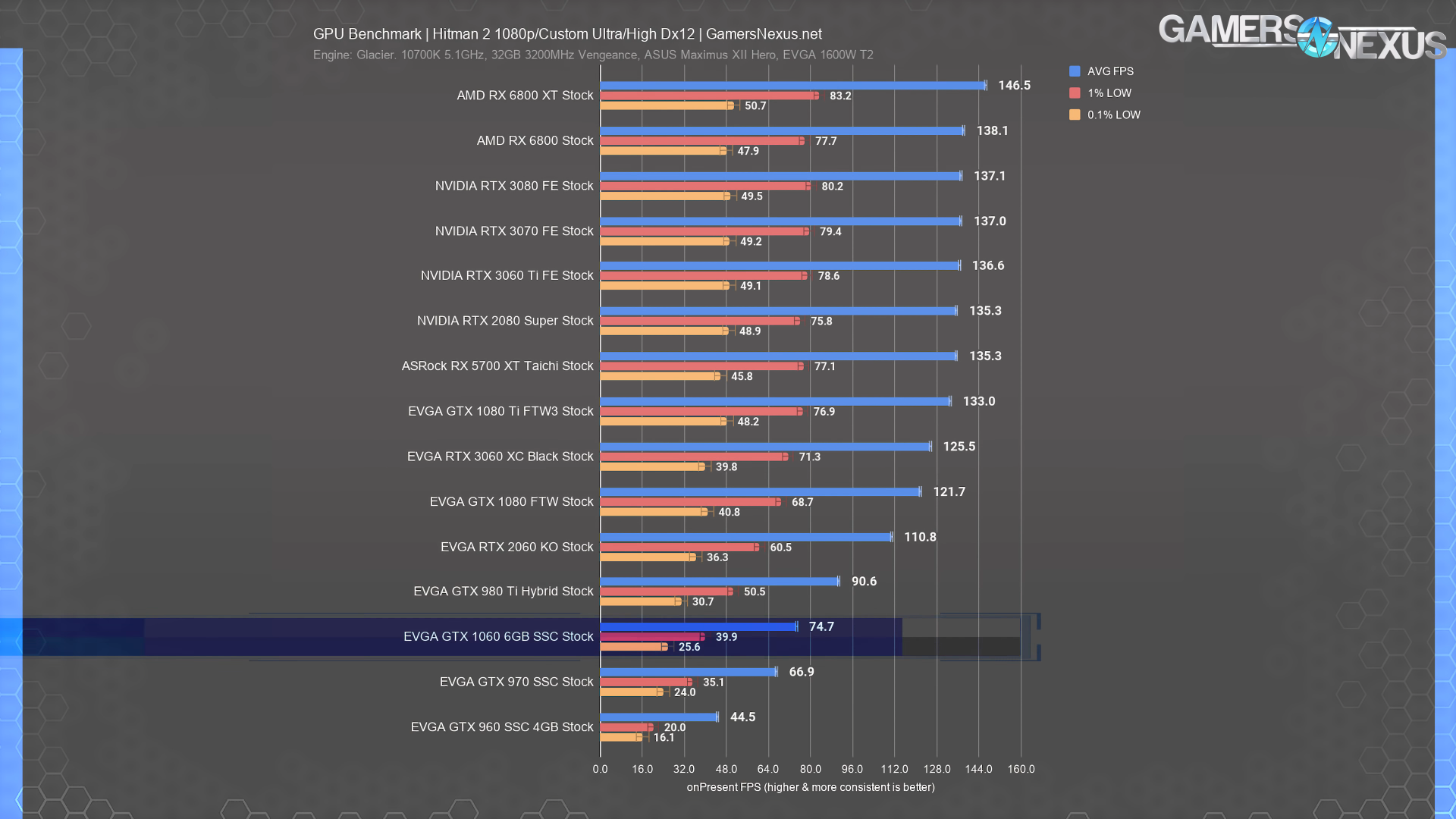

To add some food for thought - from recent GN's 1060 retrospective:

It's not like any amount of tests would change your mind.

So apparently this shitpost is fine according to the moderation team here. I'll quote it again then.

Flappy Pannus

Veteran

Could you...provide a little more detail on what you believe this is illustrating? It's being run on a 10700k at 5 GHz, I'm not sure how it relates.

gamervivek

Regular

That's the thing, AMD didn't have higher overhead on DX11, they just couldn't multithread it like nvidia did and ended up loading a single thread which bottlenecked everything. Now on DX12 which can load more threads independent of the GPU driver, the higher overhead of nvidia drivers becomes a bottleneck.

I doubt that will change now. I moved to 6800XT from 3090 last week and it seems same as before, DX11 games hitch more and perform slightly worse.

Why would you go from a 3090 to 6800xt?

Size, and money (if he sold the 3090?)?

I just assumed if he bought a 3090 to begin with he has money to burn.

DegustatoR

Legend

What is there to provide? The results show a CPU limited case from a DX12 game which is known to be very CPU limited. They also don't show anything like what HUB is showing.

I've said that more tests are needed. This is another such test which shows that the issue isn't really universal and may not even be CPU related.

gamervivek

Regular

Bought 3090 for ML stuff and moved it to a separate machine. The prices see-sawed here with AMD cards being too pricey at the start and now nvidia cards being on the higher side.

Also, 6800XT can do eyefinity with ultrawide in between for 8560x1440 resolution and judging by what we're seeing regarding CPU bottleneck now, would be better for higher FoV gaming as well.

That benchmark is custom high/ultra settings so it could be gpu limited at times.

DegustatoR

Legend

These results are obviously CPU limited since everything between the 5700XT and 6800 shows the same fps. The 6800XT being a bit faster than the rest of the bunch is an interesting artefact though.
