beyondtest


In 2013, a low-power 8-core CPU was rare, but the PS4 made it far more mainstream in a $400 box.

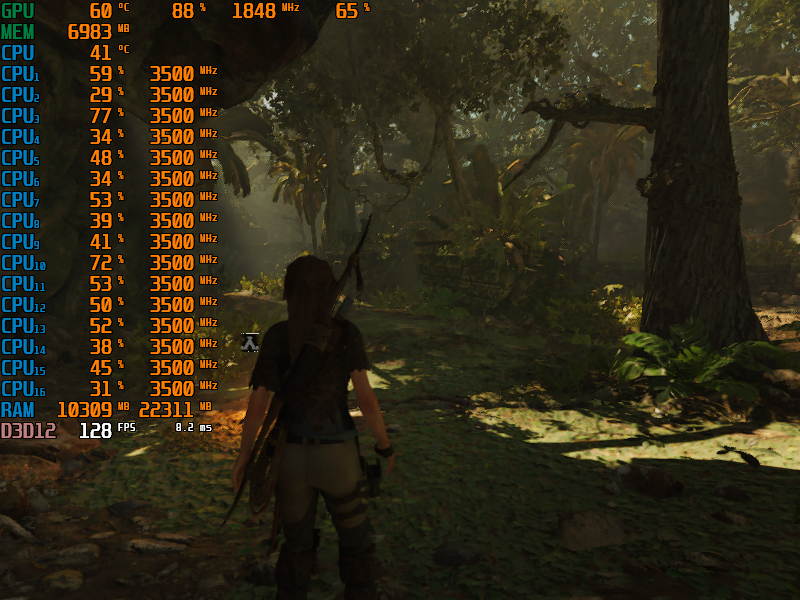

How likely is a low-power 16-core CPU in the next-gen PS5, assuming the console costs no more than $600?