From what I've seen they don't do very well because the 2GB of VRAM causes issues; PS4's GPU would have 3-4GB of its RAM allocated as VRAM.

HD7950/70 fare a lot better, as that extra 1GB makes all the difference.

that's a common problem for all 2 GB GPUs from that era. up until 2017, they were mostly fine, but then we got nextgen games such as ac unity and later titles, which started destroying 2 GB GPUs. either you had to use extremely blurry ps2-like textures to get somewhat playable performance, or your performance would tank heavily in most cases

you can see here that the 960's performance practically drops by more than 2x when it runs into a huge VRAM bottleneck

and devs really did not bother scaling their games for 2 GB GPUs. rdr 2, ac odyssey, origins, and many more AAA titles look absolutely horrible with low texture settings.

a game that maximizes ps4's potential usually requires 3.5-4 GB VRAM, so yeah, gtx 960 2 gb being "more powerful" than PS4 does not mean anything when it has such an awful amount of VRAM

funny thing is, there were a lot of NVIDIA users who justified 2 GB, saying the 960 would not make use of 4 GB anyway. They said the same thing about the 1060 6 GB, and now they're saying it about the 3060 12 GB. it just became a self-fulfilling prophecy at this point.

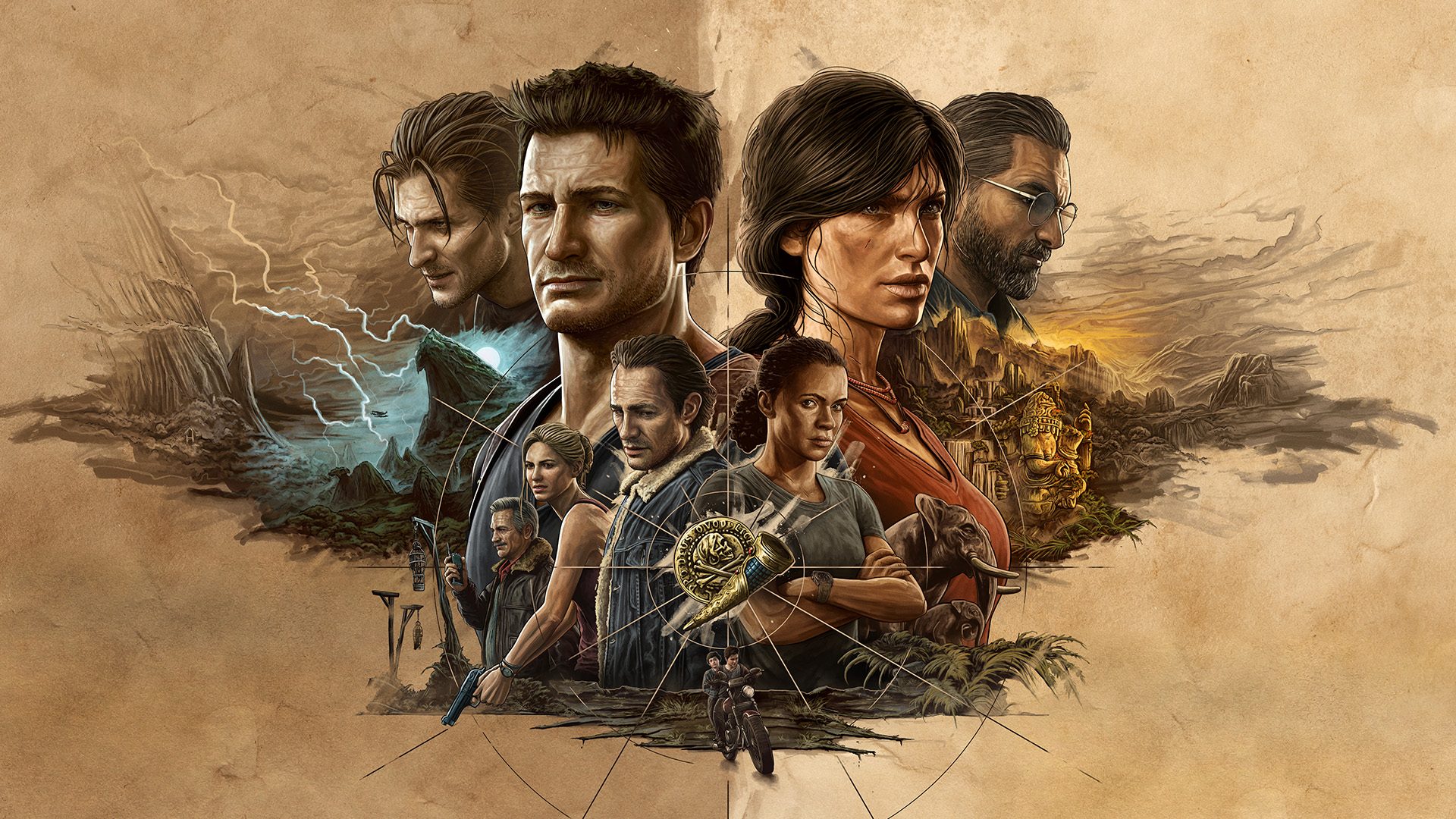

thing is, back in 2015-2016, you had games like Witcher 3 that looked like a mix of ps3 and ps4 games. They did not stress VRAM quite enough, so people felt safe with 2-3 GB back then. but look at uncharted 4 and witcher 3. the image quality delta between them is huge. uc4 uses enormously better textures and assets. witcher 3 was made with 2 GB cards in mind, and while it still somehow struggled to run on PS4, it most likely did not max out PS4's memory. uc4, on the other hand, runs gracefully on PS4, but it would not even have been possible to release that port to a gaming community full of 2 and 3 GB GPUs without making the extra effort of creating a special texture set for lower-end VRAM amounts

i gather the same thing will happen to 4-6 GB GPUs now, but Series S's existence also makes me doubtful. I really look forward to what kind of texture quality will be possible on 8 GB GPUs in nextgen games. One thing I'm sure of is that, even though it's not as powerful as its brothers, the 3060 will have no trouble playing with nextgen textures.

See the 1060: 6 GB felt like overprovisioning at its release, but even if you're running medium settings, you can still use ultra textures in almost every game. And honestly, ultra textures + medium settings would look a hell of a lot better than medium textures + high settings