Blazkowicz

Legend

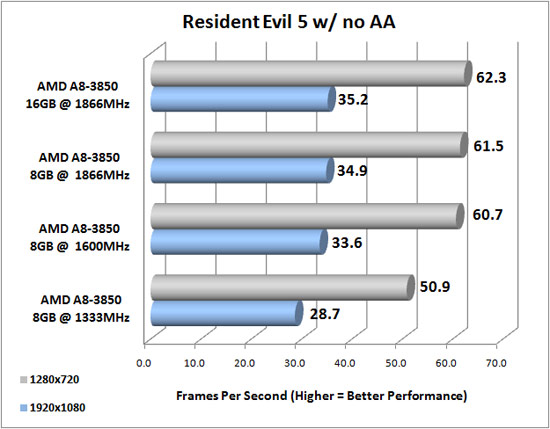

I thought DDR3-1866 was already the sweet spot on Llano, with the current prices.

I wonder what happens if you overclock the A6 5400K to hell, and how good the stock cooler is.

That CPU at 4.8 or 5GHz, with 8GB of 1866 and an SSD, a GPU overclock if it can be done, under quiet cooling and with a great < 400W PSU... it would be fun, "premium low end". I'm curious how that compares to the i3 3220 and 3225.
