Couldn't you use tracing from the sun to create new lights that mimic the reflection of as large an area as possible? That could be far more efficient than path tracing I think. You reduce the final render problem to a known problem (render a scene with a limited set of a few hundred light sources or less). Of course also less precise, but hey.
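For what it's worth, the idea above resembles what is usually called virtual point lights (instant radiosity). Here is a rough sketch of how it could look, under heavy assumptions: the only bounce surface is a diffuse ground plane at y = 0, there is no shadow test between a virtual light and the shaded point, and every name in it (`make_vpls`, `indirect_irradiance`, `extent`, `min_dist2`) is made up for illustration.

```python
import math
import random


def make_vpls(sun_dir, sun_irradiance, albedo, count, extent=10.0, seed=0):
    """Place `count` virtual point lights where sun rays hit the plane y = 0.

    Assumption: the sun is directional and the receiver is a flat plane, so
    tracing parallel sun rays is equivalent to picking uniform points on it.
    """
    rng = random.Random(seed)
    cos_sun = -sun_dir[1]  # cosine between incoming rays and the normal (0, 1, 0)
    if cos_sun <= 0.0:
        return []  # sun is below the horizon, nothing is lit
    area = (2.0 * extent) ** 2
    # Reflected flux of the whole patch, split evenly between the lights.
    flux_per_vpl = sun_irradiance * cos_sun * albedo * area / count
    return [
        ((rng.uniform(-extent, extent), 0.0, rng.uniform(-extent, extent)),
         flux_per_vpl)
        for _ in range(count)
    ]


def indirect_irradiance(point, normal, vpls, min_dist2=0.01):
    """Sum the bounce light arriving at `point` from all virtual lights.

    No visibility test is done (assumption), so bounce light leaks through
    occluders. `min_dist2` clamps the squared distance to avoid the bright
    spikes that appear when a virtual light sits very close to the receiver.
    """
    total = 0.0
    for pos, flux in vpls:
        d = [point[i] - pos[i] for i in range(3)]
        raw2 = sum(c * c for c in d)
        if raw2 == 0.0:
            continue
        dist = math.sqrt(raw2)
        w = [c / dist for c in d]  # unit direction from light to receiver
        cos_vpl = max(0.0, w[1])   # light sits on a surface facing +y
        cos_recv = max(0.0, -sum(w[i] * normal[i] for i in range(3)))
        # A diffuse emitter radiates flux * cos / pi per steradian.
        total += flux * cos_vpl / math.pi * cos_recv / max(raw2, min_dist2)
    return total


sun = (0.0, -1.0, 0.0)
lights = make_vpls(sun, sun_irradiance=1000.0, albedo=0.5, count=200)
ceiling = indirect_irradiance((0.0, 1.0, 0.0), (0.0, -1.0, 0.0), lights)
```

The final render then only has to loop over a few hundred lights per shaded point, which is the "known problem" mentioned above. The price is the lower precision already noted: few lights give blotchy results, and the distance clamp trades spikes for some lost energy.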

Stuff that we'll probably not be seeing in videogames for a long time

- Thread starter L. Scofield

Hi, guys. The collision thing we were talking about. Take a look at this gif:

https://giant.gfycat.com/GiftedFlawlessAmericanratsnake.gif

Are the fingers manually animated? Maybe they are programmed to behave this way, with realistic collision detection...

EDIT: the fingers on the rock, I meant.

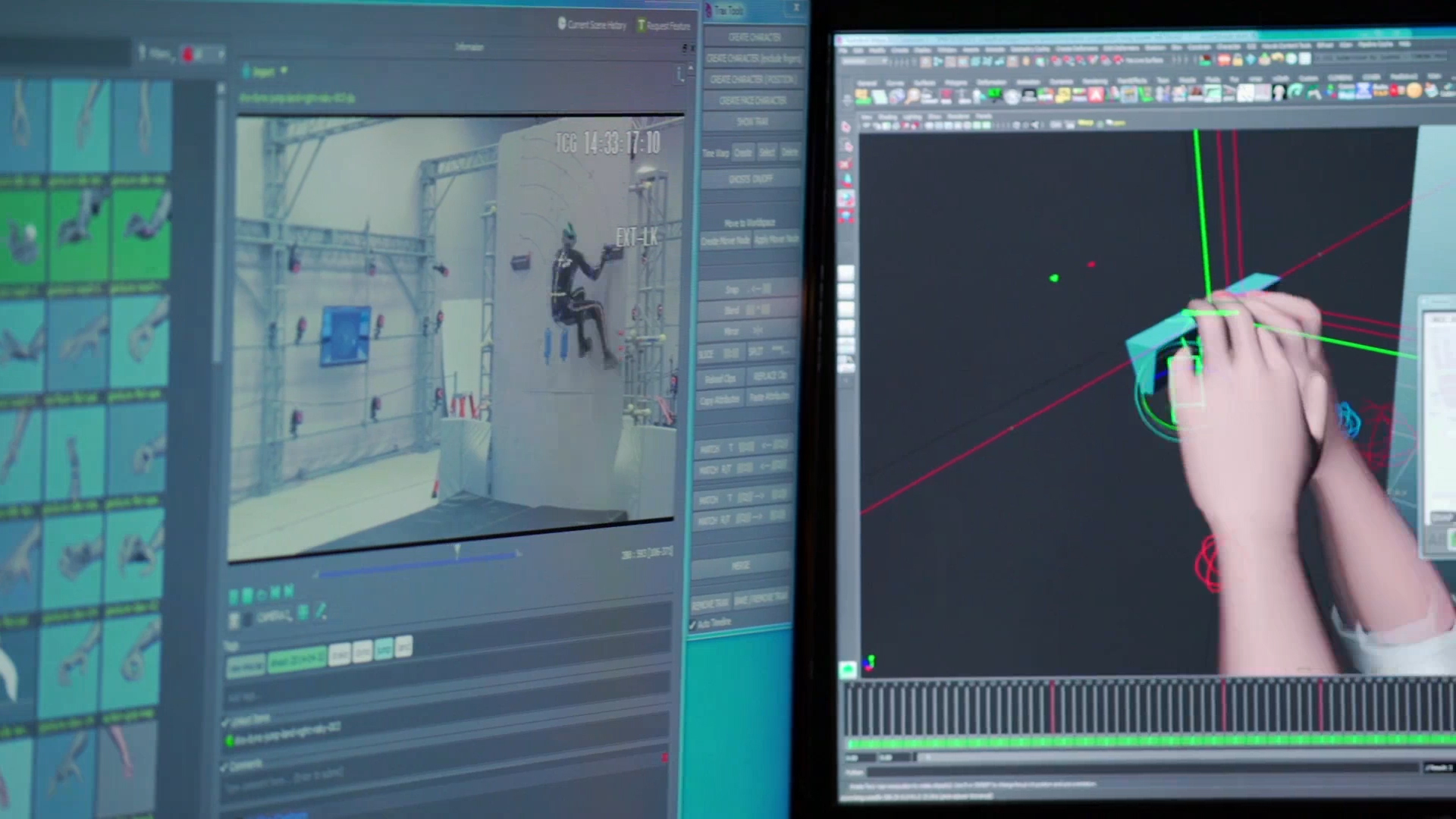

Inverse kinematics. It's one way to avoid clipping in certain situations. You can also see it used in the FFXV video posted above at 3:33, although the U4 implementation seems to be more precise (linear vs. open world, resources allocated differently, etc.). It's used heavily in U4 for climbing animations and gun holsters, and probably for many other things they haven't talked about yet. The image above is from their tools.

Edit: That's most probably also why his lip and cheeks are affected by his hand.
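For anyone wondering what IK boils down to in the simplest case, here's a minimal sketch of an analytic two-bone solver in 2D (think upper arm plus forearm reaching for a point on the rock). This is a textbook law-of-cosines setup, not anything taken from U4 or FFXV, and the function names and the planar simplification are my own assumptions.

```python
import math


def two_bone_ik(target_x, target_y, len_a, len_b):
    """Return (shoulder, elbow) angles in radians for a planar two-bone chain.

    The chain is rooted at the origin. `shoulder` is the angle of the first
    bone from the +x axis; `elbow` is the bend of the second bone relative
    to the first. Targets out of reach are clamped to the reachable range.
    """
    eps = 1e-9
    dist = math.hypot(target_x, target_y)
    dist = min(max(dist, abs(len_a - len_b) + eps), len_a + len_b - eps)

    # Law of cosines: interior angle at the elbow joint.
    cos_elbow = (len_a ** 2 + len_b ** 2 - dist ** 2) / (2.0 * len_a * len_b)
    elbow_interior = math.acos(max(-1.0, min(1.0, cos_elbow)))
    elbow = math.pi - elbow_interior

    # Angle between the first bone and the line from root to target.
    cos_inner = (len_a ** 2 + dist ** 2 - len_b ** 2) / (2.0 * len_a * dist)
    inner = math.acos(max(-1.0, min(1.0, cos_inner)))
    shoulder = math.atan2(target_y, target_x) - inner
    return shoulder, elbow


def forward(shoulder, elbow, len_a, len_b):
    """Forward kinematics: position of the chain tip for the given angles."""
    elbow_x = len_a * math.cos(shoulder)
    elbow_y = len_a * math.sin(shoulder)
    tip_x = elbow_x + len_b * math.cos(shoulder + elbow)
    tip_y = elbow_y + len_b * math.sin(shoulder + elbow)
    return tip_x, tip_y


# Reach for a contact point, then check where the hand actually ends up.
angles = two_bone_ik(1.0, 1.0, 1.0, 1.0)
tip = forward(*angles, 1.0, 1.0)
```

A game would run something like this per limb after the regular animation pose, with the target coming from a collision query against the surface. Fingers need many more joints than two, which is part of why doing it well in real time is not free.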

Silent_Buddha

Legend

No. While the animation is well done, there's still sliding on the rock that, IMO, shouldn't be happening. His fingers also appear to be hovering above the rock face, which is exacerbated by the unrealistic sliding. If you watch carefully you can still see the relatively coarse bounding boxes (albeit still smaller than what you'd see in gameplay). So the collision detection (assuming it's running in real time) is pretty good, but not realistic.

That said, it is very well done and a huge step up from what other games are doing. But considering that looks like it's from a cutscene with nothing else in the scene, I was hoping for better. I.e., they have a ton of resources available in that scene.

All of that said, I'm far less impressed with how his hand interacts with the wall than I am with how his face interacts with his hand.

Regards,

SB

All that stuff is preprocessed offline, guys. Their tools probably have fine collision detection, inverse kinematics and a bunch of other stuff, but for a cutscene, I'm willing to bet it's all baked into keyframes and the PS4 does none of the work. Finger-object interaction during cutscenes was already pretty good in past UCs on PS3. Maybe not as good, but certainly above average.

I also noticed the sliding, but I didn't think it was unrealistic. I liked it (even more than the hand-face interaction) and I took it as something caused by the rock being mossy/slippery, lol. Yeah, I know, I pushed this too far...

Maybe, but why not? I mean, we've been seeing this for ages now, applied to main characters' feet (since the PS2 era or even before, if we take the original Virtua Fighter into account, IIRC). Why can't current-gen machines process a few more IKs? I know, resource allocation, etc., but still...

Natural Motion introduced behavioural animation last gen. There were a couple of games that used it: a football game from them (who seem to have given up on their Natural Motion idea and moved on to being game developers?) and LucasArts made a SW game with it. Havok have also added behavioural physics, I believe. If devs aren't using it, I'm guessing it's either because it's too computationally expensive or just plain too buggy to use. My guess is the latter. Or maybe the computational requirements to make it not buggy leave it only available in buggy implementations?

Oh, there'll also be a major control issue. If you want your character to move in an inhuman way (as we all do), the simulation would get in the way. Characters would become sluggish as they have to shift their weight to follow your commands. The result would be a Lair-like disconnect from the player. You'd no longer be directly controlling the player, but guiding them and waiting for them to follow, which people seem uncomfortable with (see also KZ2's 'weighty' aiming complaints). And combining two methods, conventional fixed movement for foot placement and behavioural hand waving, is probably way more complex than it's worth.

This worked when you were controlling the horse in Shadow of the Colossus, but wouldn't for an individual player.

Deleted member 11852

Guest

More than just a couple last gen. GTA IV and GTA V both use Natural Motion, and they published a case study on its use in GTA IV. I'd like to see it more; animation is still something that lets a lot of games down, but it's getting better. Animals in Far Cry Primal, for instance.

Very interesting animation tech, not sure how this could be applied to video games (maybe in stuff like Dreams?)

They also posted this:

Computer-Assisted Animation of Line and Paint in Disney's Paperman: We present a system which allows animators to combine CG animation's strengths -- temporal coherence, spatial stability, and precise control -- with traditional animation's expressive and pleasing line-based aesthetic. Our process begins as an ordinary 3D CG animation, but later steps occur in a light-weight and responsive 2D environment, where an artist can draw lines which the system can then automatically move through time using vector fields derived from the 3D animation, thereby maximizing the benefits of both environments. Unlike with an automated "toon-shader", the final look was directly in the hands of the artists in a familiar workflow, allowing their artistry and creative power to be fully utilized. This process was developed during production of the short film Paperman at Walt Disney Animation Studios, but its application is extensible to other styles of animation as well.

Smoothed Aggregation Multigrid for Cloth Simulation: Existing multigrid methods for cloth simulation are based on geometric multigrid. While good results have been reported, geometric methods are problematic for unstructured grids, widely varying material properties, and varying anisotropies, and they often have difficulty handling constraints.

Because it's a cutscene. They surely have dynamic procedural animation systems in place for gameplay, as they already did in past games, but whenever a canned cutscene plays out, all animations are fully preprocessed. Making a game full of mo-capped cutscenes and set pieces on schedule is hard enough as it is; trying to make those cutscene animations work at the desired quality with real-time solutions is more trouble than it is worth. They'd rather spend their man-hours elsewhere.
