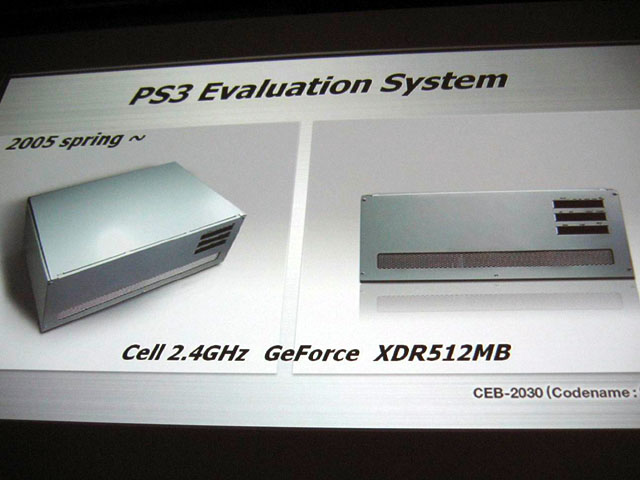

As you can see, the Cell processor will run at 3.2 GHz in both, but the development system will have twice as much memory as the console.

On the graphics side, the G70 in the development system will also have twice as much memory as the RSX in the console, and the bandwidth on the development system will be three times that of the console. This also suggests that the G70 is as powerful as the RSX, because they would not put a weaker graphics chip in the development kits.

As you can also see, the final console will have an option to add an HDD, which the development system will have as well. The development system will not have a Blu-ray Disc drive, while the console will.