Flappy Pannus

Veteran

Yes, so? As I've said, it's targeting 60 on XSS. The fact that it drops down to 30 (and below) doesn't mean that it's CPU limited; in fact, it is most likely GPU limited on consoles.

A game is only "targeting" 60fps if there's actually a 60fps mode, or at least an unlocked one. This is targeting 30/40fps, as those are the caps. The very fact that there's no 60fps or fully unlocked mode running at 1080p on the SX indicates that CPU limitation is indeed a factor, as does the SS holding 30fps at 1080p quite well while the SX/PS5 only go up to 1440p at 30fps. If it were purely GPU limited, then they would both be locked at 40fps easily at 1440p when the SS is running the same scenes without a drop at 30.

How is the SX not maintaining 40fps in this scene at 1440p while the SS is reaching 30 at 1080p, given the vast disparity in GPU resources between the two, if the CPU is not a factor?

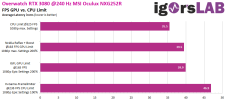
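To put rough numbers on that disparity, here is a back-of-the-envelope sketch. It assumes GPU cost scales linearly with pixels rendered per second, which is a simplification (real scaling is usually a bit better than linear in pixel count), and it uses the nominal TFLOPS figures from Microsoft's published specs as a stand-in for GPU throughput. The function and variable names are just for illustration.

```python
# Assumption: GPU work is proportional to pixels drawn per second.
# TFLOPS values are nominal spec-sheet figures, not measured throughput.
RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440)}
GPU_TFLOPS = {"SS": 4.0, "SX": 12.15}


def pixel_rate(resolution, fps):
    """Pixels rendered per second at a given resolution and frame rate."""
    width, height = RESOLUTIONS[resolution]
    return width * height * fps


def required_gpu_ratio(target, baseline):
    """How much more GPU throughput `target` needs compared to `baseline`."""
    return pixel_rate(*target) / pixel_rate(*baseline)


# SX at 1440p/40fps versus SS at 1080p/30fps.
needed = required_gpu_ratio(("1440p", 40), ("1080p", 30))
available = GPU_TFLOPS["SX"] / GPU_TFLOPS["SS"]

print(f"GPU throughput needed vs SS:    {needed:.2f}x")
print(f"GPU throughput available on SX: {available:.2f}x")
```

Under those assumptions, 1440p at 40fps needs roughly 2.4x the GPU throughput of 1080p at 30fps, while the SX has roughly 3x the SS on paper. If the game were purely GPU limited, the SX should have headroom to spare at 40.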

And on PC, here's the GPU at 84% utilization at 54fps. Again, this is in the video linked, which you refuse to watch.
