I think many of the people who accuse DF of being 'Nvidia shills' are silly, and regardless, fanboys of that nature are never really going to be dissuaded by anything.

However...I also think waiting until 27 minutes into a 32-minute video before you even start to get into image quality criticisms isn't exactly going to help matters either.

I understand this is just a 'First Look', and wrt the restrictions in terms of equipment on hand and time, they couldn't do the deep dive that they really wanted to. You do, though, have to sit through what feels like an extended promotion before you get to any semblance of analysis - that is not a function of restricted access. If the shackles placed upon you prevent you from doing the critical analysis you've staked your reputation on, maybe...don't do it quite yet? At the very least, edit it down so you've got 10 minutes of "High framerates are good!" material instead of the first ~20. I mean I get it, people are desperate to peek behind the hype curtain and this video will likely do numbers, but I was hoping for a little more than an upturned flap.

Still, there were at least - eventually - some somewhat interesting tidbits to draw from this at this early stage, unfortunately not all of it positive. Bear in mind you might as well mentally preface every observation here with "At this stage", as I understand this is pre-release and we've certainly seen improvements from Nvidia with DLSS before that can be significant.

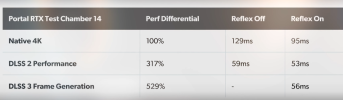

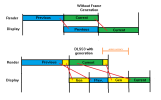

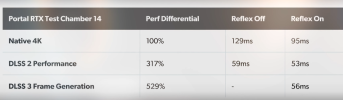

Latency: For one, the wildly varying numbers demonstrate how much latency is still in the hands of the engine/developer. Secondly, you can't necessarily just factor in framerate improvements and then calculate "Well if this was native and we double framerate, we should get half the latency" - it doesn't always scale that way, as this demonstrates:

DLSS 2 here has improved framerates by +300%. However, latency 'only' decreased by around half, and by less with Reflex enabled at both native + DLSS. So you can't always just scale a potential latency reduction linearly with framerate. DLSS 3 does relatively well here. There's not necessarily any reason to believe that, if it were possible to get a 529% performance improvement without involving DLSS3, we would be saving any significant latency anyway.
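To put a rough shape on why latency doesn't track framerate one-to-one, here's a toy model. Big caveat: this is my own simplification, not anything from the video. It assumes end-to-end latency is a framerate-independent chunk (input sampling, display, etc.) plus some number of frame times, and every number in it is made up purely for illustration.

```python
def latency_ms(fps: float, fixed_ms: float, frames_in_flight: float) -> float:
    """Toy end-to-end latency in milliseconds.

    fixed_ms and frames_in_flight are hypothetical parameters:
    a framerate-independent cost plus a count of frame times
    spent between input and display.
    """
    frame_time_ms = 1000.0 / fps
    return fixed_ms + frames_in_flight * frame_time_ms


def latency_ratio(base_fps: float, speedup: float,
                  fixed_ms: float, frames_in_flight: float) -> float:
    """Latency after the speedup divided by latency before it."""
    before = latency_ms(base_fps, fixed_ms, frames_in_flight)
    after = latency_ms(base_fps * speedup, fixed_ms, frames_in_flight)
    return after / before


# Made-up inputs: 25 fps base, a +300% (4x) framerate gain,
# 20 ms of fixed cost and 2 frames in flight.
with_fixed_cost = latency_ratio(25, 4, fixed_ms=20, frames_in_flight=2)

# Same gain with no fixed cost: only then does latency scale linearly.
without_fixed_cost = latency_ratio(25, 4, fixed_ms=0, frames_in_flight=2)

print(f"latency ratio with fixed cost:    {with_fixed_cost:.2f}")
print(f"latency ratio without fixed cost: {without_fixed_cost:.2f}")
```

With those invented inputs, a 4x framerate gain only cuts latency to 40% of what it was rather than 25%, because the fixed part doesn't shrink. The real split between fixed and per-frame cost depends on the engine, which is the point above about it being in the developer's hands.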

OTOH:

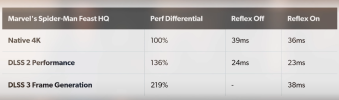

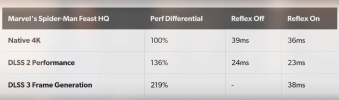

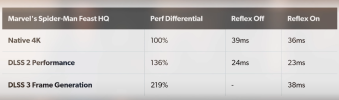

Yikes. I don't think this was necessarily expected. My understanding was that DLSS 3 can't really reduce latency from native (when I say 'native' in the context of DLSS3 wrt latency, I mean DLSS2), but not that it would increase it appreciably. This is a heavily CPU limited game mind you, but still - DLSS performance is showing a significant drop in latency from non-reconstructed 4K. DLSS3's frame generation increases that to the point where it's like pure 4K, but without Reflex.

Now look, 38ms latency is still good - it's certainly good enough to still feel very 'connected' to a 120+fps output. But I think I, and others, felt that perhaps some very slight latency increase over DLSS2 was the 'worst case' kind of scenario, not that it could ever push latency all the way back to where it was before the performance savings of DLSS were factored in at all.

In terms of image quality, from a pure technology perspective it was impressive to see how well DLSS3 can perform its motion interpolation compared to far more expensive offline methods, and I get that's largely the point of looking at it in this way. It does speak to Nvidia's experience and the power of dedicated hardware, no doubt. From the perspective of a gamer though, I'm not quite sure of the utility in this context. I mean motion interpolation, offline or not, is relatively easy to detect in certain scenarios, hence the extreme skepticism from some camps about DLSS3's announcement. So comparing against such technologies is not exactly alleviating these concerns - yes, it's very impressive what it can do in a tiny fraction of the time! But ultimately it's a comparison no one will ever really encounter when using a 40X0 GPU, it's not a choice they even have to consider. Bear in mind as well, as a YouTube commenter reminded me, these offline interpolation methods also don't have access to the same motion vector data that games are providing to DLSS. So it's not really comparing the same thing regardless.

Like for example, the early shots from when Spidey is jumping out the window.

Horrible ghosting with the Adobe/Topaz method. Yet the hand area, which from the video, "does not have that error" of those methods (and that's true - it certainly doesn't), still doesn't look that great:

Now, is that from DLSS2 or 3? Dunno. But we see further examples later on of DLSS's specific errors, and they can be pretty egregious:

The argument that this kind of error is only on-screen for ~8ms is of course valid; in the greater context of a 120+fps game, the perceived temporal resolution increase from the higher framerate will further mask such errors. But clearly - these are not the same as 'native' frames. I get it as an argument specifically for Spiderman though, as the CPU limitation in that game makes DLSS3 the only way to achieve these frames on any system. But typically, "you would hardly notice the artifacts" isn't something you hear in the context of playing something on a high-end GPU, it's something you hear more with a budget product where you don't really have any other option.
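For reference, the ~8ms figure is just the frame time at 120fps. A quick sketch of the arithmetic (the framerates listed are only examples I picked, not measurements from the video):

```python
def frame_time_ms(fps: float) -> float:
    """How long a single frame stays on screen, in milliseconds."""
    return 1000.0 / fps


# Example framerates chosen for illustration only.
for fps in (60, 120, 240):
    print(f"{fps} fps -> each frame is on screen for {frame_time_ms(fps):.2f} ms")
```

So any single bad frame is gone in about 8.3ms at 120fps, and the higher the output framerate, the shorter that window gets.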

Speaking of "sacrifices", what's up with every comparison done using Performance DLSS? That's a mode I'm usually loath to drop down to on my 3060. Is it required for DLSS3 to function at all, or does it just present it in the best light? DLSS3 frame artifacts when compared to fully native resolution are one thing, but it's another matter if it's being compared to DLSS performance - which has plenty of its own already. Do even 3080 users typically use performance mode DLSS?

Overall, to me this just ultimately further illustrates how utterly ridiculous Nvidia's marketing wrt the 4090's 'performance' has been. DLSS3, as a technology, is very interesting, if only for the fact that it can alleviate the CPU burden of reaching such framerates in certain games. We're not getting massively better CPU performance anytime soon and, as such, interpolation methods will likely remain the only option to reach these framerates in many cases. But as part of a product - which is why we're all interested in this in the first place - promoting it as providing a massive % increase over the previous gen was always suspect, and this small peek just makes that look even more laughable. You are getting that performance with considerable sacrifices; it's just comparing two different things.