DavidGraham

Veteran

"Given how well they've performed in games (Halo Infinite & Starfield)"

I don't see how well they performed in either of these games; their performance level is the same, RDNA2 vs Ampere or RDNA3 vs Ada.

AMD Radeon RX 7900 XTX Review - Disrupting the GeForce RTX 4080

Navi 31 is here! The new $999 Radeon RX 7900 XTX in this review is AMD's new flagship card based on the wonderful chiplet technology that made the Ryzen Effect possible. In our testing we can confirm that the new RX 7900 XTX is indeed faster than the GeForce RTX 4080, but only with RT disabled.

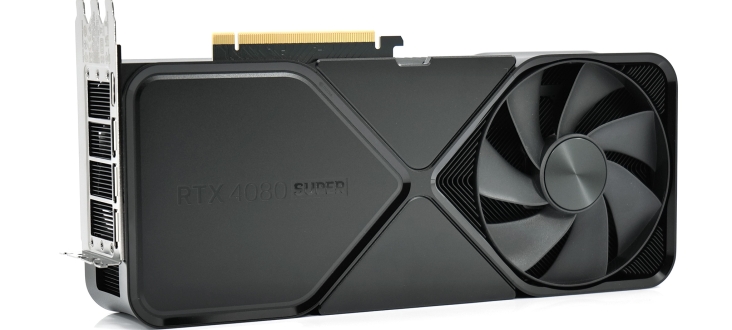

Nvidia RTX 4080 Super Review - KitGuru

Today marks the end of a busy January launch period for the GPU market, as we check out the RTX 4080

www.kitguru.net

You are reaching with your conclusion based on false or insufficient data.

"and that there's publicized preliminary data from a big ISV (Epic Games) too"

That publicized "beta" preliminary data contained no performance comparisons, or even noteworthy general performance gains; the jury is still out on this one.

"They're behind feature/integration wise but by no means do they have 'bad' HW design when they can have a smaller physical HW implementation for a given performance profile and they have more freedom to improve this aspect as well ..."

These are generalized statements with no current data to back them up. No one said their HW design is bad, just that they are lagging in visual and performance features.