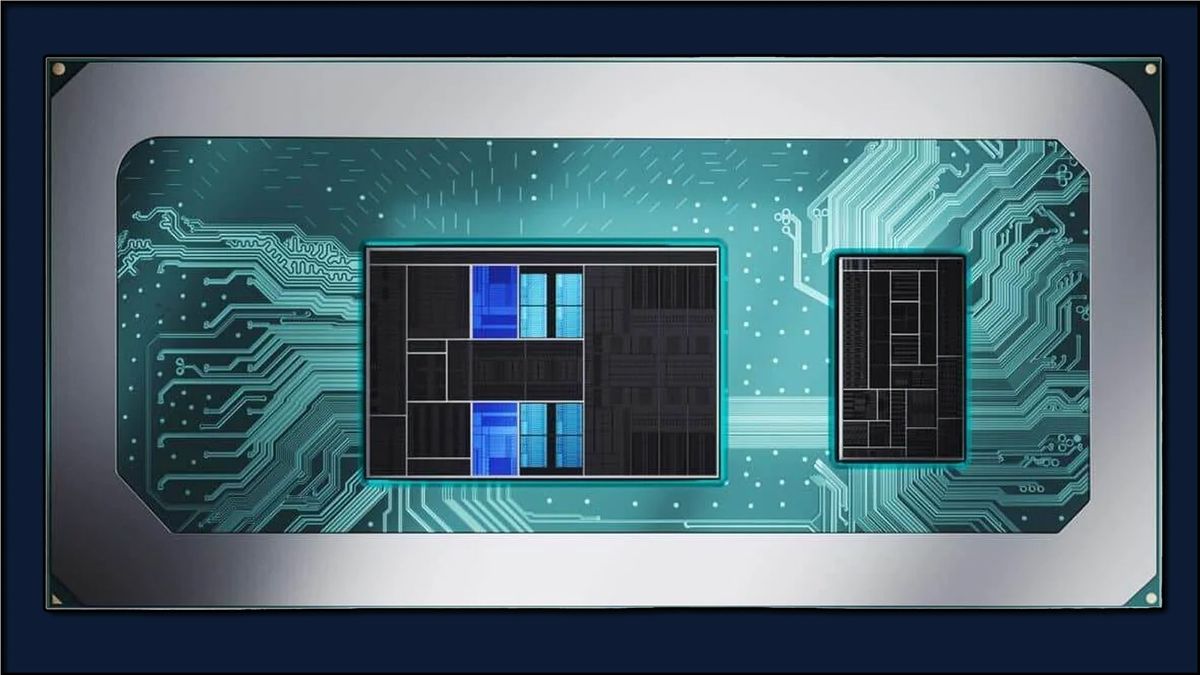

Well, I decided to start this thread to share news about NPUs, along with information on what an NPU is, the best NPUs on the market, and so on. It's still a new concept as of mid-2024, but it seems to be an unstoppable revolution and a preview of what's coming.

Will they replace CPUs and GPUs? They won't, but they are meant to complement them.



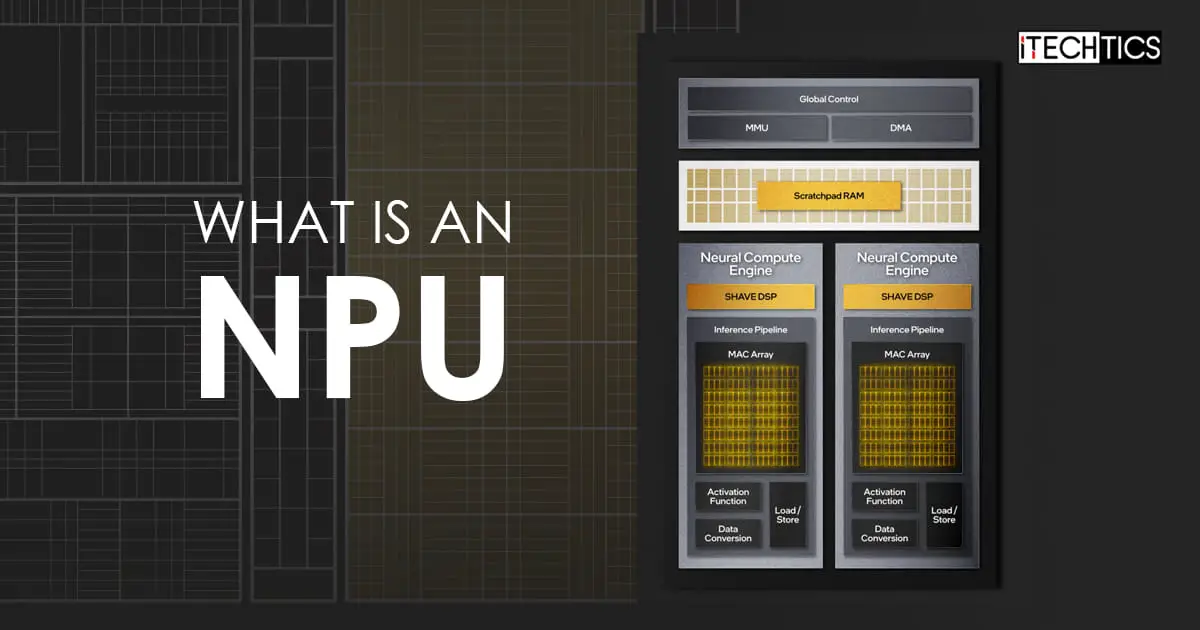

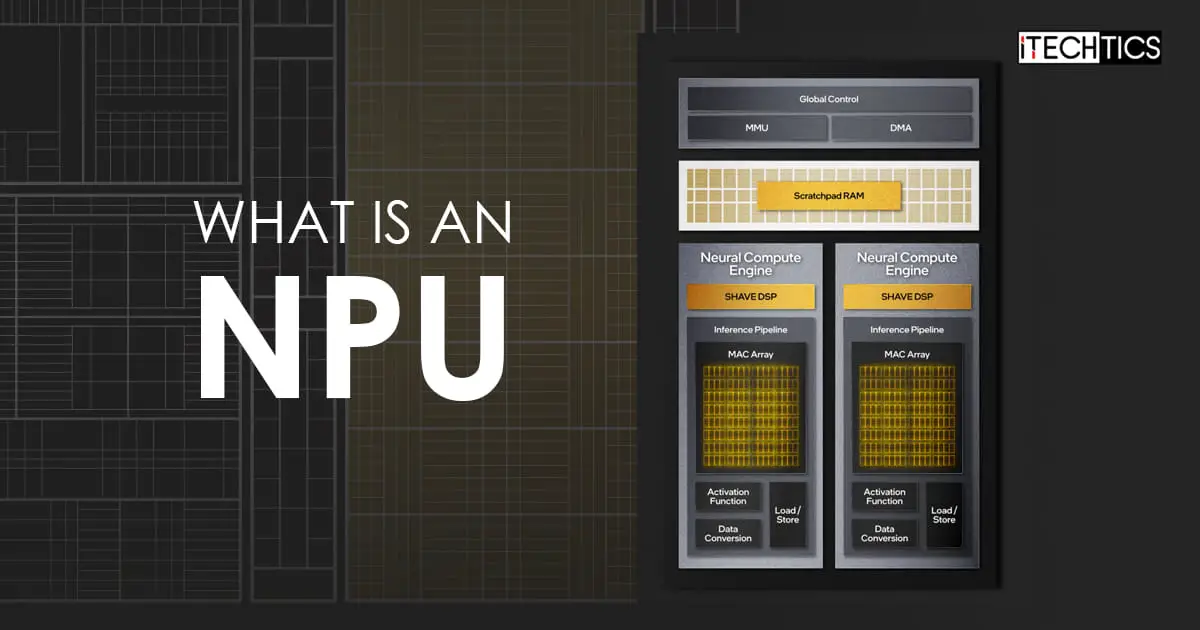

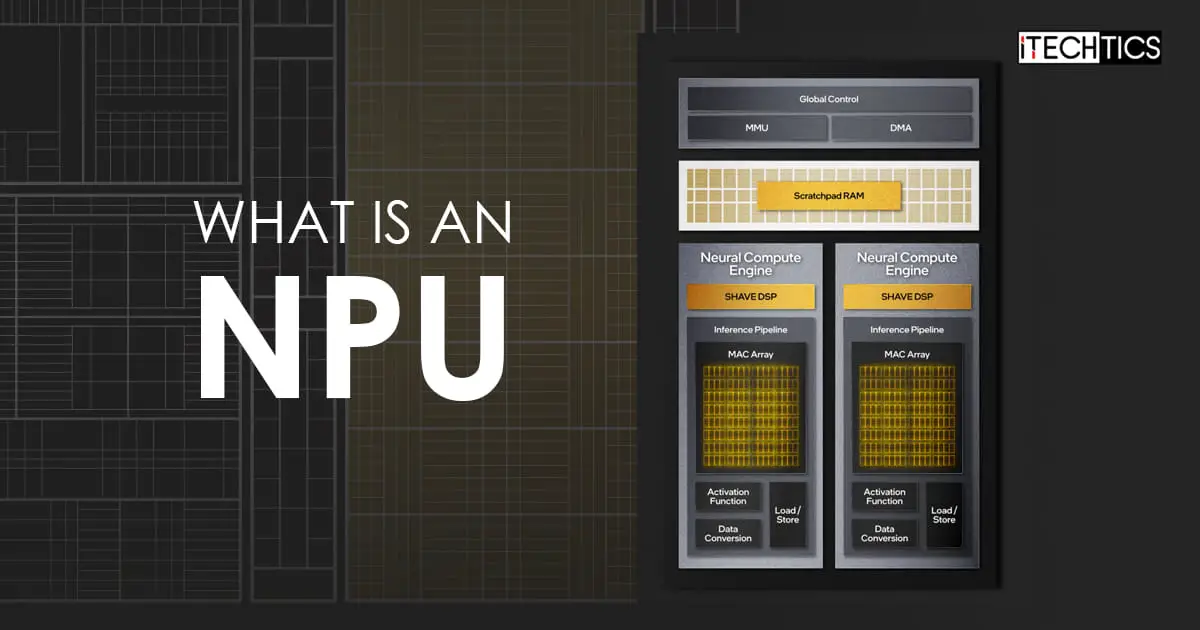

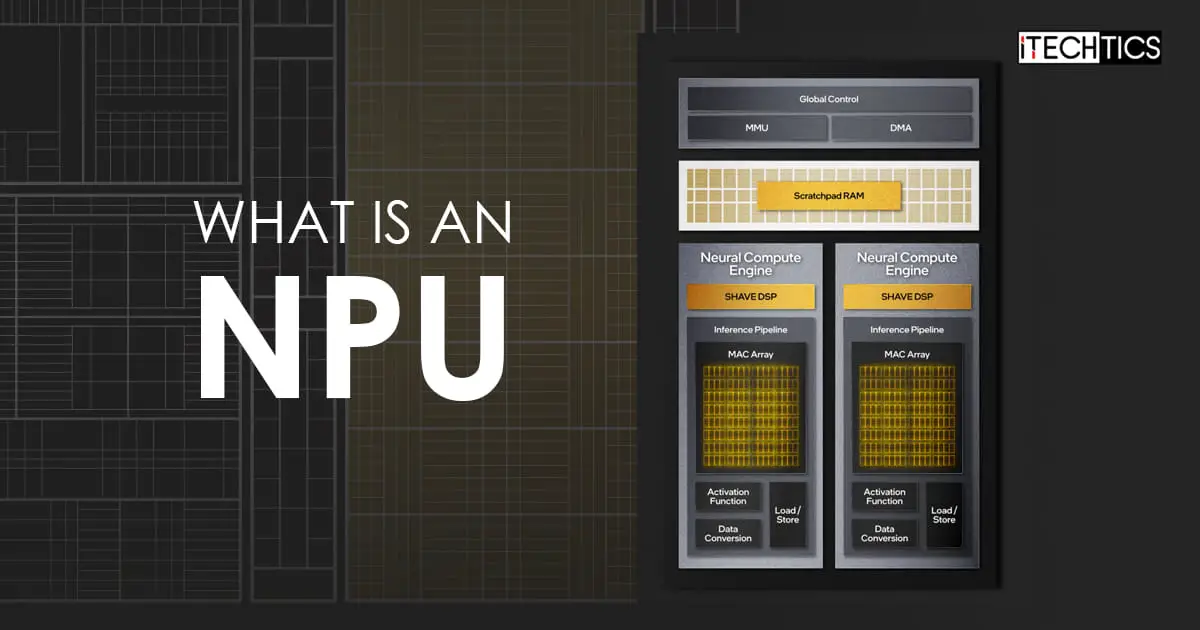


Benefits of an NPU:

Energy efficiency: The specialized design of NPUs makes them more energy-efficient than using CPUs or GPUs for AI tasks, which is important for battery-powered devices and data centers.

Cost-effectiveness: While individual NPUs may be expensive, their high performance and efficiency can translate to overall cost savings when compared to using large numbers of CPUs or GPUs for the same workload.

Performance boost: NPUs can significantly accelerate AI workloads, leading to faster processing and real-time results. This is crucial for applications like autonomous vehicles, image recognition, and natural language processing.

AI for games: NPUs could improve in-game AI and make NPCs more believable. For example, say you talk to an NPC and leave after the conversation. When you return to the area, the important conversation is no longer available, but instead of saying nothing while you walk or run around in front of them, the NPC could say something like "Hi again!" or "What are you doing here, after what we talked about?". NPUs would also be great for games that try to learn how you play (Drivatars, rankings, etc.).

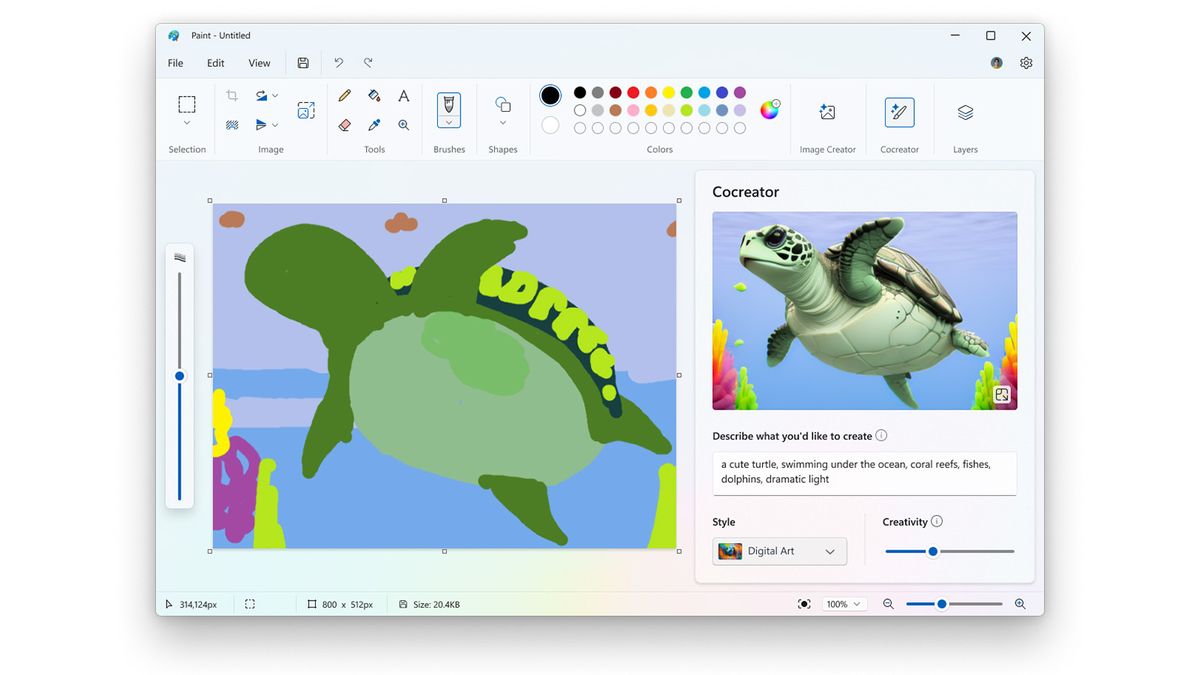

Use in apps: Some apps would benefit from using an NPU.


There are some articles explaining what an NPU is and what its benefits are:

What is an NPU, and does your PC need one? Neural Processing Unit explained - Pureinfotech

An NPU (Neural Processing Unit) is a piece of hardware that speeds up AI tasks better than a GPU or CPU. Currently not required.

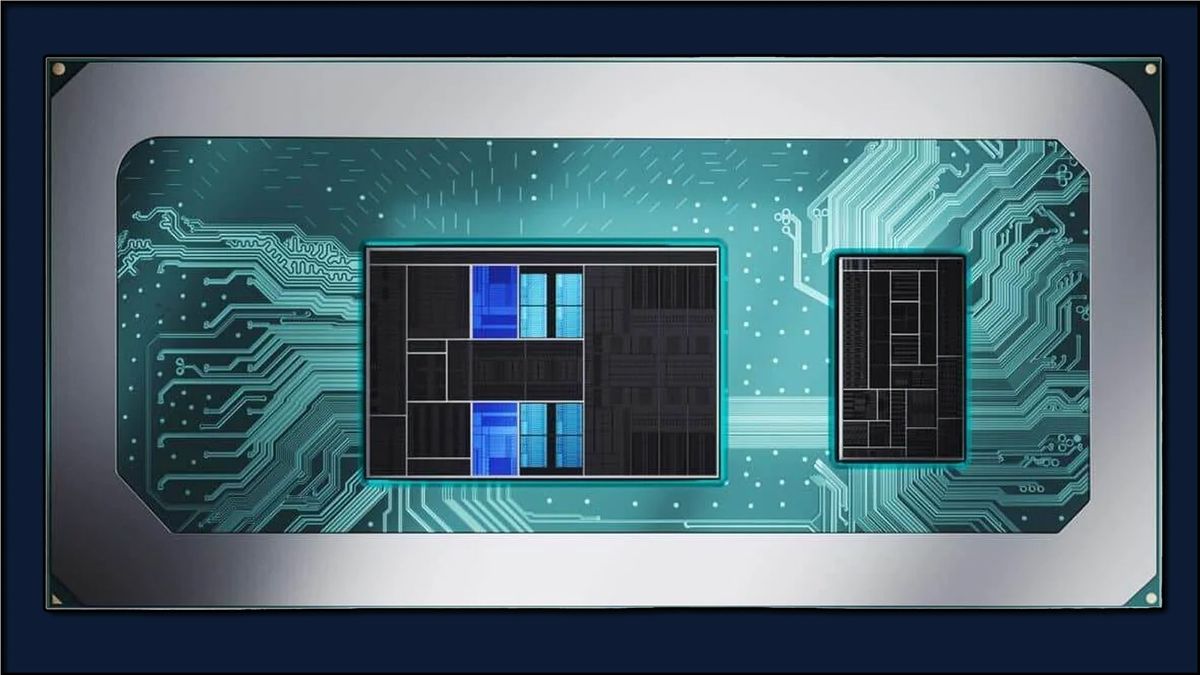

What is an NPU: the new AI chips explained

What is an NPU? Possibly the biggest advance in computing in a generation

What Is An NPU And Why Do You Need One - Everything Explained

If you use the latest artificial intelligence features on your devices, then you want to know what NPUs are and how they can benefit you.
