Is Valhalla designed to max out the consoles' IO, or was it designed to support PC IO and just uses the consoles for faster loading? We can look at Valhalla, for example: the min spec is quite wide.

PC games are extremely scalable these days. I don't think we need to have any concerns about PC versions holding back any console-specific features.

It's equivalent to PC GPUs being way, way more capable than consoles in shader abilities but games never being designed around that peak ability set, because devs target a lower spec, whether consoles or just a lower, more mainstream PC spec. It takes some time for a new feature to actually become a design consideration in a game, rather than devs just using the extra power to drive the existing older methods at higher resolutions.

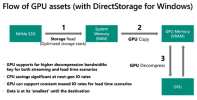

And again, I'm not specifically arguing about games being 'held back' - I'm wondering what tech level is required in PC to match consoles in functionality and when that's due. I'd rather learn about specifics, e.g. how important is GPU decompression? Which GPUs are already capable of supporting it, and/or will there need to be a period of upgrading before a significant portion of PCs can use that feature? Until then I'm sure games will be designed to support all platforms, but there'll also come a time, maybe 2035 say, when the minimum spec is 'PS5 level tech' and games won't scale down lower than that. So given that point of feature parity, what are the milestones PC needs to hit to reach it?