More food for thought: AMD's GPU development history

AMD's Top-End Single-GPU Video Cards

2012 - 7970 - 28nm - 3.79 TF

2013 - R9 290X - 28nm - 5.63 TF

2015 - Fury X - 28nm - 8.6 TF

2017 - Vega 64 (Air) @ Boost Clock - 14nm - 12.67 TF

2019 - Radeon VII @ Boost Clock - 7nm - 13.82 TF

Consoles based on AMD GPU

2013 - PS4 - 28nm - 1.8 TF

2016 - PS4 Pro - 14nm - 4.2 TF

2017 - Xbox One X - 14nm - 6 TF

No console built on an SoC with an AMD GPU has ever had more than half the total TF of AMD's top-end GPU available at the time. Keep that in mind when setting your expectations for next-gen console performance.
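A quick way to check the "never more than half" claim against the figures above is to pair each console with the newest top-end card released in or before its launch year and take the ratio. This is a rough sketch: it assumes that, within the same year, the desktop card shipped before the console (true for the R9 290X vs. the PS4 and for Vega 64 vs. the Xbox One X), and it only uses the TF numbers listed in this post.

```python
# Figures copied from the lists above: (year, name, FP32 TF)
TOP_END_GPUS = [
    (2012, "7970", 3.79),
    (2013, "R9 290X", 5.63),
    (2015, "Fury X", 8.6),
    (2017, "Vega 64", 12.67),
    (2019, "Radeon VII", 13.82),
]

CONSOLES = [
    (2013, "PS4", 1.8),
    (2016, "PS4 Pro", 4.2),
    (2017, "Xbox One X", 6.0),
]


def flagship_at(year):
    """Newest top-end card released in or before `year`.

    Assumption: a card from the same year as the console launched first.
    """
    candidates = [gpu for gpu in TOP_END_GPUS if gpu[0] <= year]
    return max(candidates, key=lambda gpu: gpu[0])


def console_ratios():
    """Return (console, flagship, console TF / flagship TF) for each console."""
    rows = []
    for year, console, console_tf in CONSOLES:
        _, gpu, gpu_tf = flagship_at(year)
        rows.append((console, gpu, console_tf / gpu_tf))
    return rows


if __name__ == "__main__":
    for console, gpu, ratio in console_ratios():
        print(f"{console}: {ratio:.2f} of {gpu}")
```

With these numbers the ratios come out around 0.32 (PS4 vs. R9 290X), 0.49 (PS4 Pro vs. Fury X) and 0.47 (Xbox One X vs. Vega 64), all below 0.5.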

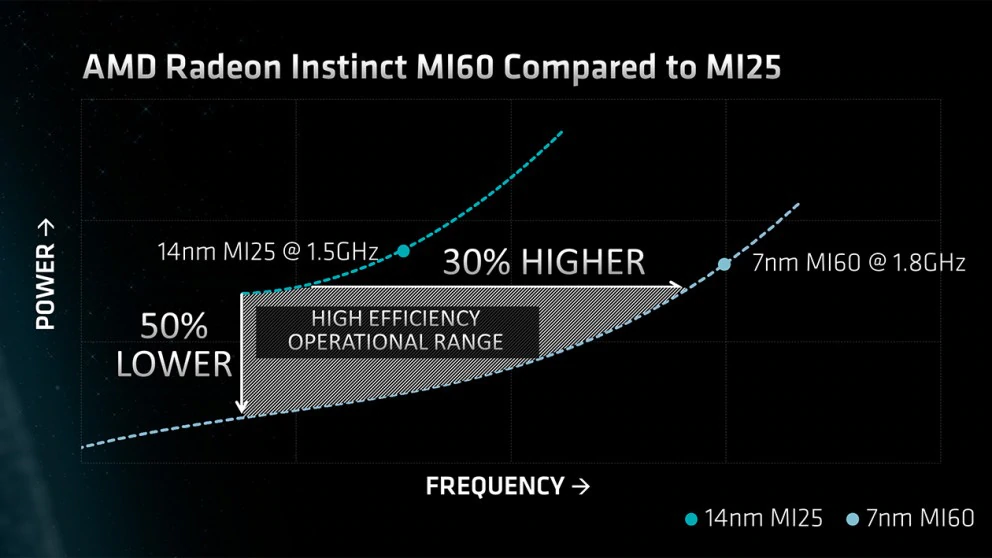

Perhaps we should also look at power consumption and die size to get a better sense of the limitations. It might also be worth comparing console clock speeds against the clocks desktop cards actually sustain under load.
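Clock speed matters because peak FP32 TF for these GCN-era chips is just shader count × clock × 2 (one fused multiply-add per shader per clock). The sketch below uses shader counts and clocks from public spec sheets, which are not given in this post and should be treated as assumptions; the 1400 MHz "sustained" Vega 64 clock is purely hypothetical, included only to show how a lower real-world clock scales the figure down.

```python
def fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Peak FP32 throughput: each shader does one FMA (2 FLOPs) per clock."""
    return 2 * shaders * clock_mhz * 1e6 / 1e12


# (name, shader count, clock in MHz); assumed from public spec sheets
CHIPS = [
    ("PS4", 1152, 800),
    ("PS4 Pro", 2304, 911),
    ("Xbox One X", 2560, 1172),
    ("Vega 64 (boost)", 4096, 1546),
]

if __name__ == "__main__":
    for name, shaders, clock in CHIPS:
        print(f"{name}: {fp32_tflops(shaders, clock):.2f} TF at {clock} MHz")

    # Hypothetical sustained-under-load clock, for illustration only
    print(f"Vega 64 @ 1400 MHz: {fp32_tflops(4096, 1400):.2f} TF")
```

Because the relationship is linear, a desktop card that holds, say, 10% below its rated boost clock under load delivers roughly 10% fewer TF than its headline number, which narrows the gap to a console running at a fixed clock.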