Intel XeSS upscaling

- Thread starter BRiT

Fell asleep last night while running the benchmark for Tomb Raider. Thank you to the people at Eidos Montreal for including a benchmark in their game; it makes it easier for me to do this. I have to start from scratch because the video is about 8 hours of looking at one benchmark result. Hoping to do COD but no promises.

Unfortunately you didn't make it into the game last night. I say that 'cos it's one of my favourite games. Very interested in your tests, because the game looks totally pristine (like a Disney movie at times; I had a hard time finding a jaggie), perhaps the best showcase for XeSS nowadays, and since you have an Iris Xe GPU.... Does Lara's hair disappear sometimes for you? This happened to me sometimes but I think it's more an Arc glitch than something related to XeSS.

Sorry, 1080p only. The benchmark takes a long time to load the different scenes on the laptop. In this video the FPS is a great deal easier to see. IMO Intel should really consider doing something like the FPS counter in Nvidia GeForce Experience if they are serious about the graphics market.

Enjoy the game! It's my favourite modern Tomb Raider game by far. I've watched the video and no setting gives a good framerate. XeSS Quality is usually the best compromise, along with Balanced, imho, though with the Iris Xe you might go further and decrease the game's resolution and apply XeSS from there. 20 fps with XeSS Performance is not much. You haven't tried XeSS Quality, unless I am missing something. Have fun, I love Shadow of the Tomb Raider.
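To put the Quality / Balanced / Performance trade-off in numbers, here is a rough sketch of the internal render resolution each preset would use at a given output resolution. The per-axis scale factors in the table are an assumption (the values commonly cited for the XeSS 1.0 to 1.2 presets; later XeSS versions remapped them), and `render_resolution` is a hypothetical helper, not part of any Intel API.

```python
# Per-axis upscale factors. ASSUMPTION: values commonly cited for the
# XeSS 1.0-1.2 presets; they are not taken from this thread.
XESS_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w, out_h, mode):
    """Return the (width, height) the game renders at before upscaling.

    Hypothetical helper for illustration only.
    """
    scale = XESS_SCALE[mode]
    return round(out_w / scale), round(out_h / scale)


if __name__ == "__main__":
    # Compare 1080p output with a lowered 900p output.
    for out_w, out_h in [(1920, 1080), (1600, 900)]:
        for mode in XESS_SCALE:
            w, h = render_resolution(out_w, out_h, mode)
            print(f"{out_w}x{out_h} {mode}: renders at {w}x{h}")
```

Under these assumed factors, Quality at 1080p renders internally at 1280x720, so dropping the output resolution first and then applying XeSS pushes the internal resolution lower still, which is why it can help on an iGPU at some cost in image quality.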

You are right about lowering the resolution; I just did not get around to making that video last night. But really my point is that even on an extremely limited GPU like the Iris Xe, you will see gains if you narrow XeSS down to using a dedicated driver rather than just Shader Model 6.4. Whereas in the case of GPUs that don't support DP4a, if I remember correctly, you lose performance. Based on my last few tests, I think something like the Series S and Steam Deck should see benefits if XeSS is implemented at the driver level like it is for Intel iGPUs, IMO.

> Hmm. Modern Warfare 2 goes against what I am seeing in other games. XeSS loses performance on the Xe, usually by 5 FPS. I'll see if there is any video that can be salvaged.

CoD MW2 works well with XeSS IQ-wise, but it's not super performant with XeSS on, even on Arc GPUs. The framerate has improved a bit compared to the launch version, but you can still see that the performance gains are not as good as in other games.

Native

XeSS Quality

Currently I am playing Ghostwire Tokyo. After playing this and Shadow of the Tomb Raider and trying some mods that add XeSS, my conclusion is that there is a big difference between games that were AI-trained for XeSS, like Ghostwire Tokyo and Shadow of the Tomb Raider, and games that get XeSS via a mod. The former are almost entirely without jaggies, and even XeSS Balanced looks good on them. The games that add XeSS via mod work very well for the most part, but they don't look as perfected and tweaked.

Ok, so now I'm confused. When I install the latest Intel driver on my Xe iGPU, it exposes XeSS to me. So if this article is correct, up until today am I not seeing XeSS working, or is that part of the article basically a re-announce for CES? I'm hoping the Arc Control software comes with a good Frame Rate counter. The Intel Graphics Command Center leaves much to be desired.

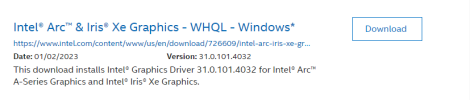

Edit: Installing the driver released on the 1/2/23 just to check things out.

Edit2: Overlay with everything but FPS

Edit3: Ultra Quality XeSS at 1080p is one frame faster than in the last video I made in Tomb Raider: 15 FPS compared to 14 FPS last time.

Edit4: Still has a great deal of ghosting at 900p
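For scale, the 14 FPS to 15 FPS change from Edit3 can be expressed as a percentage and as frame time. This is only a quick arithmetic sketch; `fps_change` is a hypothetical helper, and the only inputs taken from the post are the two FPS figures.

```python
def fps_change(old_fps, new_fps):
    """Return (percent change in FPS, milliseconds saved per frame).

    Hypothetical helper for illustration only.
    """
    pct = (new_fps - old_fps) / old_fps * 100.0
    ms_saved = 1000.0 / old_fps - 1000.0 / new_fps
    return pct, ms_saved


if __name__ == "__main__":
    # 14 FPS (previous video) vs 15 FPS (Ultra Quality XeSS at 1080p).
    pct, ms_saved = fps_change(14, 15)
    print(f"{pct:.1f}% higher FPS, {ms_saved:.2f} ms less per frame")
```

At frame rates this low a single frame per second is within what run-to-run variation could plausibly produce, so the difference is probably best read as "about the same" unless it repeats across runs.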

Have you been running beta or stable drivers?

TopSpoiler

Regular

> There isn't news about DirectSR from Microsoft, which aims to unify DLSS, FSR and XeSS upscaling technologies. Afaik, there was a presentation at GDC 2024 (March 21st), but I haven't read or heard a thing about it.

It was strangely quiet, but I finally found it.

TopSpoiler

Regular

> Is there any other feature of DirectX that includes a GPU implementation in the runtime?

GDeflate in DirectStorage could be one of those.

> Wow, I am really surprised DirectSR is coming with an included implementation. Is there any other feature of DirectX that includes a GPU implementation in the runtime? It will be even easier for developers to include upscaling in their games now.

AutoHDR, for example. Maybe we should look at it more as a Windows feature.
