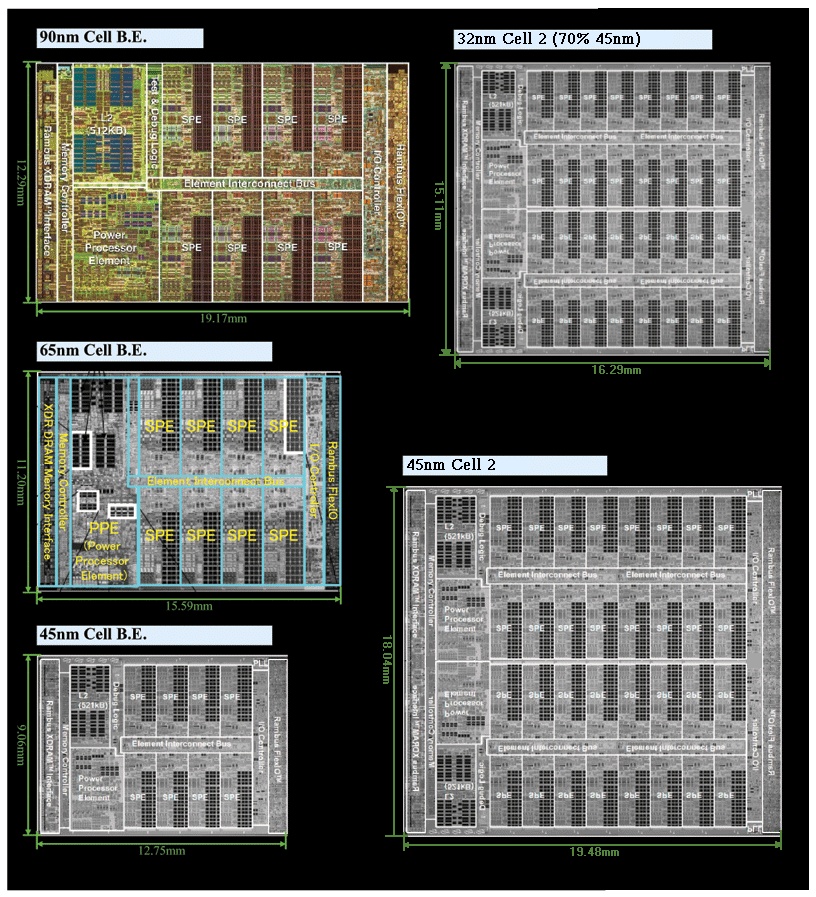

We describe the challenges of migrating the Cell Broadband Engine™ design from 65nm SOI to 45nm SOI using mostly an automated approach. The cycle-by-cycle machine behavior is preserved. The focus areas are migration effectiveness, power reduction, area reduction, and DFM improvements. The chip power consumption is reduced roughly by 40% and the chip area is reduced by 34%.

I wonder how they calculate those 34%. If they mean that the 45 nm Cell is 66% the area of the 65nm specimen, that's OK I guess. I wonder if DFM chews up some die area, and if so, how much.
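As a rough sanity check on the 34% figure, here is a minimal sketch comparing the reported area ratio with what an ideal shrink would give. It assumes that die area scales with the square of the node name, which is an idealization and not something the abstract claims; the function name `ideal_area_ratio` is purely illustrative.

```python
def ideal_area_ratio(old_nm: float, new_nm: float) -> float:
    """Area ratio if every feature shrank linearly with the node name (an idealization)."""
    return (new_nm / old_nm) ** 2

reported_reduction = 0.34                    # "chip area is reduced by 34%" (from the abstract)
reported_ratio = 1.0 - reported_reduction    # 45 nm area as a fraction of 65 nm area
ideal_ratio = ideal_area_ratio(65, 45)       # what a perfect 65 nm -> 45 nm shrink would give

print(f"reported 45nm/65nm area ratio: {reported_ratio:.2f}")
print(f"ideal 45nm/65nm area ratio:    {ideal_ratio:.2f}")
print(f"gap vs ideal shrink:           {reported_ratio - ideal_ratio:.2f} of the 65nm die")
```

Under that assumption the ideal ratio comes out near 0.48 against the reported 0.66, so the shrink recovers noticeably less area than a perfect one would. The sketch cannot say how much of the gap is I/O, analog, or DFM.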

IBM to manufacture Cell on 45nm SOI, Sony/Toshiba to move to 45nm bulk process

- Thread starter one

That kinda was the plan, but it sounds like Yutaka Nakagawa doesn't think Cell is suitable for that, without any explanation why it is unsuitable.

Because both Broadcom and Conexant have SOCs that work with standard interfaces, can decode two HD threads at a time (enough for PIP) and cost in the region of $30-$40.

Cheers

Charts from ISSCC 2008 compiled by H. Goto

http://pc.watch.impress.co.jp/docs/2008/0206/kaigai416.htm

The shrink speed of Cell is slower than that of EE. I/O and analog parts keep the 45nm Cell from further optimization in the same process without significant design changes.

Yeah it's interesting to see the I/O constrain the 'dumb shrinkage' of the chip to the extent that there are actual "dead" portions of die area on the perimeter of the digital logic. Brings questions up as to if and what directions they'll take with the chip once PS4 looms on the horizon. Obviously a redesign would alleviate the situation, as would be the case with a Cell 2, but it's an interesting situation to be faced with nonetheless.

So, basically the gains of what would normally be associated with a single node shift required two in terms of die area. But nice power savings at least... and Cell can truly reach some high clockspeeds as envisioned part of the original design criteria. Doesn't do PS3 any good, but it'd be interesting to see IBM putting out some higher-clocked blades based on the 45nm chips. And looking forward to the HPC versions debut as well, if only to see where interest lies.

Panajev2001a

Veteran

It is interesting yes, they seem to be hitting the limits a bit of process shrinks... they still did a quite nice job cutting the size in less than half of the original area with the 90 nm to 45 nm transition.

Maybe for the 32 nm node they will integrate a custom I/O chip (like they did with PS2's I/O CPU replaced by a PPC440 based CPU IIRC) instead of the current SSC related I/O CPU thus also taking away a nice portion of FlexIO traces off the main PCB (2x2.5 GB/s lanes).

Then, when CELL 2 comes they could integrate an appropriately spec'ed down-clocked version of it reducing the chip's size even further.

Still...

It depends on when we will be able to see their 32 nm process go into mass production and have lots of nice chips coming out of it: it is possible that CELL 2 for PS4 (if they stick with CELL, and I hope they do) might launch with 45 nm technology.

After all, it is the same way they proceeded with PS3: launched using a tried and true manufacturing process (90 nm for both CPU and GPU) already experimented with designing PSP's SoC and PS2's EE+GS@90 nm. 90 nm SOI was not a cutting edge manufacturing process even in November 2006 when PS3 first launched: Intel was shipping 65 nm processors and launched 45 nm processors in consumers' PC's in 2007.

If they do decide to push for CELL 2 on 32 nm technology after PS4 launches it might make more sense not to do a major re-design of CELL 1 on 45 nm and/or 32 nm technologies and branch away from CELL 2.

If they stick with CELL and nVIDIA or with a similar GPU provider that can match the quirks of RSX (SCE owns the IP of that design) like IMG Tech. (PVR) they might have even more options for PSThree...

Get a 1 PPE + 8 SPE's version of CELL 2 + a new GPU (maybe a notebook related part of that time-frame appropriately clocked to minimize heat production) licensed from the same GPU manufacturer which provides PS4's GPU. I have faith in the minds of STI and SCE Engineers and that they will do a great job.

Panajev2001a

Veteran

Carl, look again at this picture though... when chip designers warn you against having to raise Voltage to raise clock-speed (impact on Power Consumption which starts growing by the cube of Voltage):

Look at the difference in power output at 4 GHz between the 90 nm part and the 65 nm one, look at the power consumption growth (relative to the 90 nm reference point) of 90 nm, 65 nm, and 45 nm as you increase in clock speed!

CELL at 4 GHz@45 nm consumes less than at 3.2 GHz@65 nm and slightly more than at 2.8 GHz@65 nm, pretty impressive if you ask me.

Then, when CELL 2 comes they could integrate an appropriately spec'ed down-clocked version of it reducing the chip's size even further.

I was thinking the same thing; it would certainly get the most mileage out of the redesign.

If they stick with CELL and nVIDIA or with a similar GPU provider that can match the quirks of RSX (SCE owns the IP of that design) like IMG Tech. (PVR) they might have even more options for PSThree...

Get a 1 PPE + 8 SPE's version of CELL 2 + a new GPU (maybe a notebook related part of that time-frame appropriately clocked to minimize heat production) licensed from the same GPU manufacturer which provides PS4's GPU. I have faith in the minds of STI and SCE Engineers and that they will do a great job.

Well, I do have to say I have a bit of trepidation concerning Kutaragi's moving on and the lead hardware designers' re-assignment. All of the arguments for continuing with Cell in a re-designed form seem to be there, but with the power at SCE now residing in the hands of traditional businessmen rather than engineers, I worry whether they understand that the very same complaints of 'difficulty' they've been hearing wrt programming for PS3 will self-ameliorate as time goes on leading up to PS4. My concern is that the calls of today may lead them to consider a complete architecture shift to answer the 'voice' of the developers. A voice that itself is a morphing beast, and I just hope they realize that.

Carl, look again at this picture though... when chip designers warn you against having to raise Voltage to raise clock-speed (impact on Power Consumption which starts growing by the cube of Voltage)

I'm a mad overclocker; no need to explain the perils of voltage.

But since the 90nm chip debuted, there have been fundamental improvements to the power situation with Cell: the separate voltage delivery to the SRAM arrays in the 65nm version, and then the increased use of CMOS logic in the 45nm version (if I read the machine translation correctly).

Stable operation for the 45nm chip at 4GHz vs 3.2GHz requires a ~66% increase in power (and still comes in at almost half as much as the 90nm at 3.2), whereas the original 90nm required a full doubling in power delivery... so a smoother curve for sure since the refinements in voltage delivery were made.
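To put numbers on "a smoother curve", here is a minimal sketch that backs out the exponent n in power ∝ clock^n from the two figures quoted above. It leans on the usual rule of thumb that dynamic power goes roughly as C·V²·f, so if voltage has to rise about in step with frequency you end up near n = 3 (the "cube" mentioned earlier). The helper name `implied_exponent` is made up for illustration, and the inputs are only the approximate ratios read off the chart.

```python
import math

def implied_exponent(power_ratio: float, clock_ratio: float) -> float:
    """Solve power_ratio = clock_ratio ** n for n."""
    return math.log(power_ratio) / math.log(clock_ratio)

clock_ratio = 4.0 / 3.2                        # going from 3.2 GHz to 4 GHz

n_90nm = implied_exponent(2.0, clock_ratio)    # 90nm: "a full doubling" of power
n_45nm = implied_exponent(1.66, clock_ratio)   # 45nm: "~66% increase" in power

print(f"90nm implied exponent: {n_90nm:.2f}")
print(f"45nm implied exponent: {n_45nm:.2f}")
```

With those inputs the 90nm part lands at about 3.1, right around the cube rule, while the 45nm part comes in at about 2.3, which is consistent with needing smaller vcore bumps per clock step. Treat it as a back-of-the-envelope reading, since leakage and the exact chart values are ignored.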

This to me just seems like a very promising graph considering the much smaller vcore increments required to ramp clocks, and given the performance gains Cell could experience in HPC computing, and the already wattage friendly numbers the 90nm Cell puts up relative to other architectures Flop-wise, well... it's a little exciting.

(If Toshiba is able to achieve a good transition to 45nm on the RSX wattage-wise, and the Blu-ray array continues to improve along the lines of Sony's newly announced OPU, a slim/light PS3 redesign may be here very soon indeed.)

Panajev2001a

Veteran

This to me just seems like a very promising graph considering the much smaller vcore increments required to ramp clocks, and given the performance gains Cell could experience in HPC computing, and the already wattage friendly numbers the 90nm Cell puts up relative to other architectures Flop-wise, well... it's a little exciting.

Yes, very exciting... thanks to the massive improvements in power consumption of their 45 nm parts as well as what they have achieved with their 65 nm ones (plus other additions such as the enhanced DP FP capabilities of their DP enhanced SPE designs) they might finally let CELL flex its muscles in the HPC sector, raising the clock-speed and pushing for optimized dual core CELL BE solutions too (considering Rock and Itanium chips that tally at about 2x the area of the original CELL chip@90 nm... there is room to grow).

Such a move would increase even further the use of the CELL architecture and thus the developers' experience with it.

Think about the success of x86 and what it means not to destroy years of compilers+libraries R&D every 5 year period.

Besides, I do not think that developers would be THAT pleased about yet another architecture shift... those who could not get at all into CELL development by the time PS4 starts rearing its head will NOT be getting good results from x86 CPU's multi-core solutions.

Massively multi-core IS the future for the high performance industry (I do not think either Sony or Microsoft will go completely the Wii-way for their next-generation products), and best CELL coding practices seem to work awesomely on Xbox 360's CPU as well as on multi-core PCs, as well as or even better than what MS advises for Xbox 360 multi-threaded programming, according to a developer working on the next Tomb Raider game IIRC.

My hope is that a relatively conservative CELL 2 based CPU + say a G1xx-G200 based nVIDIA GPU (along the lines of what RSX is for PS3), instead of maybe a much more revolutionary console, works well with the people who sit in charge of Sony corp. now. Luckily, and ironically, the NIH illness can be of some help in this case, and most importantly IMHO it is the best business option out of the two. Evolving CELL and continuing their partnership with nVIDIA also provides the benefit of being able to start the next generation with much more mature tools and coding practices.

Pana I completely agree with you, and alluded to some of those same points/concerns.

BUT, you can understand why I'd be worried, given an organization where Jack Tretton might now have more of a say in what happens!

I do think the NVidia relationship is pretty set in stone (for the time being), and with IBM and Toshiba continuing to be Sony's primary semi partners going forward, it would be natural for the Cell evolution to be the most obvious path for Sony. But that in part will depend on those two remaining warm to the architecture themselves, as if they cool I wouldn't doubt they would try to pull at Sony for a shift.

Again, I agree with your thoughts; they mirror my own. But as Cell so uniquely bears the imprint of Kutaragi in its creation, his departure I think lets the logs of the raft drift apart a little. Now, do they become completely undone as the years go by, or is the value in continuation readily perceived by those at SCE that perhaps have a less fond memory of the project as a whole?

I'm hoping rationality wins the day.

Panajev2001a

Veteran

True, rationality plus investors asking "WHAT?!? WHY SPENDING MONEY TO REINVENT THE WHEEL AND MAKE FUN OF OUR MONEY YOU INVESTED IN CELL RELATED SOFTWARE AND HARDWARE?!?!?!".

You do not have to remind me that Tretton, Reeves, Hirai, and Stringer run Sony.

If it were purely a technological incentive to keep going with the road-map STI has traced for CELL I'd be a lot more worried, but there are also tangible business incentives and 3rd party relations/support incentives not to do a huge revolution architecture wise.

If by that time there will be a nice single core or dual core processor designed by Intel that comfortably exceeded CELL BE's performance (allowing for not too complex software emulation with the purpose of backwards compatibility with PS3 games on PS4) then I'd be almost sure that going back to mostly single threaded game engines would be a GREAT incentive to also make some of today's developers happy (especially the ones that think that such a thing can happen before Quantum computers in people's home PC's).

They are not going to spend tons of money on 45 nm and 32 nm fabs for CELL production anyway, they would be helping to design them with their work at STI, but then they would purchase the chips from IBM and Toshiba just like they would be buying them from Intel or AMD, so again another reason why investors should not be worried about staying with CELL and the STI's road-map IMHO.

If by that time there will be a nice single core or dual core processor designed by Intel that comfortably exceeded CELL BE's performance (allowing for not too complex software emulation with the purpose of backwards compatibility with PS3 games on PS4)

I'm going out on a limb and saying that this is just about impossible.

The first point is that full-speed emulation is not acceptably performant until there is more than a "comfortable" performance margin.

If a non-Cell chip is powerful enough to emulate it acceptably, we should check to make sure it doesn't also defy gravity.

Emulating 7 separate cores on a dual-core chip means somehow fitting 3.5 cores-worth of cache or local store activity per core per cycle, not including the emulation code getting in the way.

If the chip has some kind of monster cache capable of 5 accesses per cycle or it clocks at 10 GHz, it would still be too slow.
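The arithmetic behind that can be sketched as below. This is a deliberately generous lower bound, assuming one host cycle can do the work of one emulated core cycle with zero emulation overhead, and assuming the emulated cores run at the PS3's 3.2 GHz; the variable names are just for illustration.

```python
emulated_cores = 7       # separate cores to emulate, per the post
host_cores = 2           # the hypothetical dual-core replacement chip
cell_clock_ghz = 3.2     # clock of the emulated cores (assumed PS3 Cell clock)

# Each host core has to time-slice this many emulated cores.
cores_per_host_core = emulated_cores / host_cores

# Host clock needed just to keep pace, before any emulation overhead.
min_host_clock_ghz = cores_per_host_core * cell_clock_ghz

print(f"emulated cores per host core: {cores_per_host_core}")
print(f"minimum host clock: {min_host_clock_ghz:.1f} GHz")
```

Even with those kind assumptions the floor is above 10 GHz, and the 3.5 local-store accesses per cycle per host core would also have to be sustained before the emulation code adds its own traffic.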

Maybe for the 32 nm node they will integrate a custom I/O chip (like they did with PS2's I/O CPU replaced by a PPC440 based CPU IIRC) instead of the current SSC related I/O CPU thus also taking away a nice portion of FlexIO traces off the main PCB (2x2.5 GB/s lanes).

Sony's take was always that the big quantities of PS3s help produce Cells more cheaply, something that only holds true as long as there's a single version of it for both PS3 and HPC/whatever else.

I'm pretty sure a "PS3-only" Cell wouldn't need x noncoherent FlexIO lines (6 or 7?), as only one is actually used. To be blunt, it would probably be better off with a way simpler interconnect, given that Cell & RSX sit next to each other anyway and both are known quantities. No need for a power-hungry interconnect with deep buffers.

Panajev2001a

Veteran

I'm going out on a limb and saying that this is just about impossible.

I know, I was being sarcastic.

Didn't this tip you off?

today's developers happy (especially the ones that think that such a thing can happen before Quantum computers in people's home PC's)

Hehe

It was the same point brought up by Carmack at the latest Quakecon: if we could have faster and faster single threaded performance like we were used to in the past we would be crazy not to go for such CPU's, but that way of extracting higher and higher performance has already hit the wall... repeatedly.

I didn't interpret the smiley as applying to the full sentence, rather, just the fragment in the parenthesis.

Panajev2001a

Veteran

It's ok, I did not take it personally.

Gotta love technological progression
