FXAA On PS3....Why use this over MLAA?

- Thread starter Persistantthug

It depends. Higher pixel density makes each aliasing step look smaller. However, with each step being smaller, it could cause more noticeable shimmer until the density is past a certain point. The general idea, though, is that aliasing tends to look better with more pixel density. For example, it was much more noticeable with a PS2 on my 27 inch SDTV than with a PS3 on my 24 inch 1080p HDTV.
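To put rough numbers on that comparison, here is a small sketch that computes pixels per inch for the two displays. The 640x480 resolution for the SDTV is an assumption (the post only gives screen sizes), and the function name is made up for illustration.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixels per inch measured along the display diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in

# Assumption: a PS2 game at roughly 640x480 on a 27 inch SDTV.
sdtv_ppi = pixels_per_inch(640, 480, 27)
# A PS3 game at 1920x1080 on a 24 inch HDTV.
hdtv_ppi = pixels_per_inch(1920, 1080, 24)

print(f"SDTV: {sdtv_ppi:.1f} ppi, HDTV: {hdtv_ppi:.1f} ppi")
print(f"Each stair step is about {hdtv_ppi / sdtv_ppi:.1f}x smaller on the HDTV")
```

Under that assumption the HDTV packs roughly three times as many pixels into each inch, so every jagged step is about a third of the size at the same viewing distance.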

fxaa3_8 is slightly slower than the optimized (luma/ifAll/microcode) version of fxaa2, but it provides better quality. The new shader actually seems to be slightly ALU bound. Cutting one instruction before the branch should make it TEX bound again (and pretty much identical in performance to the optimized fxaa2, but with higher quality). I'll have to play around with it a bit.

I have to send Timothy big thanks for making antialiasing possible in 60 fps console games. GPU based MLAA was slightly too much (1.6ms = 10% of frame time).
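The "1.6ms = 10% of frame time" figure follows directly from the 60 fps frame budget. A quick sketch of the arithmetic (the helper names are illustrative):

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps

def share_of_frame(cost_ms, fps):
    """Fraction of the frame budget consumed by a pass costing cost_ms."""
    return cost_ms / frame_budget_ms(fps)

mlaa_ms = 1.6  # GPU MLAA cost quoted in the post
for fps in (60, 30):
    print(f"{fps} fps: budget {frame_budget_ms(fps):.2f} ms, "
          f"MLAA share {share_of_frame(mlaa_ms, fps):.1%}")
```

At 60 fps the budget is about 16.7 ms, so 1.6 ms is just under 10% of the frame. At 30 fps the same pass would cost about half that share.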

Assuming you want to go back to forward rendering... I am sure many developers would find it attractive to use their high-end optimized deferred renderers on Vita as well (assuming it has robust multiple render target support).

Vita doesn't need it as much due to PowerVR magic. 3DS is likely below the baseline for programmability, on the GPU at least.

Great work Sebbi! Hope you can show us some comparison pictures when it's all said and done.

Oh, by the way, is that an MLAA implementation you guys came up with, or the Iryoku one?

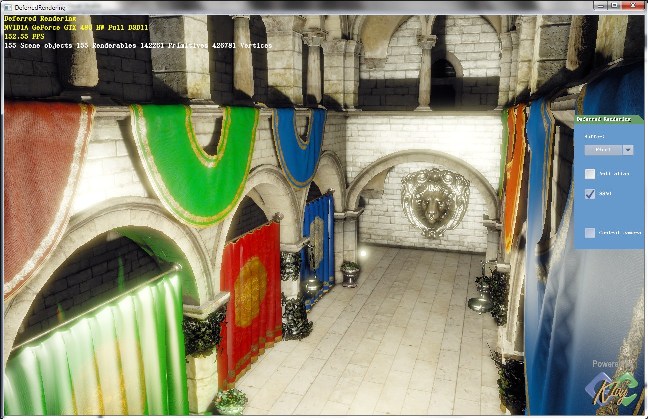

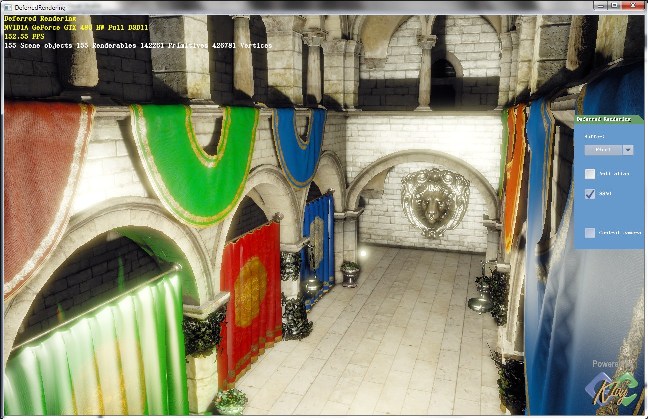

Screenshots and information about FXAA (in Chinese, so it's better to use Google Chrome here):

http://www.klayge.org/2011/06/22/klayge中的fxaa已经完成/

With FXAA

Perhaps this doesn't belong here... Voxel Cone Tracing and FXAA?

http://timothylottes.blogspot.com/2011/06/voxel-cone-tracing.html

http://blog.icare3d.org/2011/06/interactive-indirect-illumination-and.html

(same engine as the pictures in my previous post)

Do you think FXAA could technically be deemed as a more optimized version of QAA considering the slight blurring of textures?

Nah, FXAA is a post-process. QAA is MSAA that also takes samples from neighboring pixels.

But other than the slight blur, FXAA does a great job cleaning up the jaggies and like ultragpu said, it saves performance.

I thought it was just like MLAA, it could slightly blur textures, but it doesn't have to.

The differences between MLAA and FXAA:

http://timothylottes.blogspot.com/2011/03/nvidia-fxaa.html

Why FXAA?

Wanted something which handles sub-pixel aliasing better than MLAA, something which didn't require compute, something which runs on DX9 level hardware, and something which runs in a single full-screen pixel shader pass. Basically the simplest and easiest thing to integrate and use.

FXAA Limits

Johan Andersson had a great way of describing this: MLAA-like methods snap features to pixels. There is no sub-pixel information as input to these kinds of post filters. FXAA does, however, detect and reduce the contrast of sub-pixel features so the pixel snapping is less visibly distracting. FXAA is by default tuned to be mild. Optionally turning this sub-pixel contrast reduction filter all the way up can reduce the detail in the image, but results in a more "movie" like experience.

What about surface normal aliasing?

Typically rough and detailed normal textures filter into a flat surface, often keeping a strong amount of specular reflection. Combine this with a rapidly changing triangle surface normal of small pixel or sub-pixel sized features, and horrid shader normal aliasing will result! FXAA's sub-pixel contrast reduction filter can only help a little in this situation, normal aliasing tends to flicker temporally, which is the primary artifact seen by the viewer. NVIDIA's Endless City Demo (which uses an early version of FXAA) is a good example of this: combining a high amount of finely tessellated features and application of a simple environment map,

I think someone from Sony ATG claimed that the PS3 implementation of MLAA doesn't do that. So far the only game that does that on PS3 is Red Faction Armageddon, but that might just be due to the game's software upscaling implementation. The rest, like Shift2 and Alice, look just as sharp on the system with MLAA compared to the 360, if I remember correctly. All the FXAA samples we have so far seem to blur the overall IQ in all cases.

Add: a "movie" like experience, as in a CG movie look?

I just remember reading a reply or a quote regarding Joker's claim that MLAA can blur textures and it was stated that it could blur textures but it doesn't have to. I could be mistaken, running off of 2.5 hours of sleep and struggling to make it through the work day =p

In my understanding...

By design, MLAA will treat texture details as an integral part of the image. If the texture has "big color differences between neighboring pixels, this will result in blending of these pixels". The algorithm works by detecting color line features (as Z, U, or L-shaped pixel blocks) and blending them according to how exactly the lines "cut" into the pixels. The application will solve a system of equations to determine the line characteristics, and hence the weighted color value of the pixels. If computed correctly, the same Z, U and L-shaped line "features" should be preserved. The end result is that MLAA should smooth out all the perceived color edges without messing them up. Otherwise, regular AA'ed edges will appear smudged too.
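A much-simplified sketch of the "how the line cuts into the pixels" idea, for a single L-shaped feature. It assumes the reconstructed edge is a straight line running from the midpoint of the one-pixel step (height 0.5) down to the far end of the long edge (height 0), and that each pixel's blend weight is the area under that line inside the pixel. The function names and this geometry are illustrative assumptions, not the PS3 implementation or any shipping one.

```python
def l_shape_blend_weights(length):
    """Blend weights for the pixels along an L-shaped step `length` pixels long.

    The assumed edge line has height 0.5 at x = 0 and falls linearly to 0 at
    x = length. The weight of pixel i is the area under the line between
    x = i and x = i + 1, which is a trapezoid.
    """
    if length <= 0:
        raise ValueError("length must be positive")

    def height(x):
        return 0.5 * (1.0 - x / length)

    return [0.5 * (height(i) + height(i + 1)) for i in range(length)]

def blend(pixel, neighbor, weight):
    """Mix `weight` of the neighbor across the edge into the pixel."""
    return (1.0 - weight) * pixel + weight * neighbor

weights = l_shape_blend_weights(4)
# A run of dark pixels (0.0) sitting next to a white region (1.0).
row = [blend(0.0, 1.0, w) for w in weights]
print(weights)
print(row)
```

The weights fall off smoothly along the step, which is why a correctly detected shape ends up looking like a gradient instead of a smudge.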

In FXAA, the edge detection compares a pixel with its neighbors to estimate the final color values. There is no equation solving or feature preservation per se. The output should be reasonably "correct" nonetheless. You set thresholds for the local contrast and subpixel aliasing tests/heuristics. I guess that's why it's fast and can be done in one pixel shading pass. It works for subpixel aliasing because the contrast of the features is lowered (filtered/blurred?), making the correction less distracting. So the threshold values seem to be the key to FXAA applications. The developers probably have to experiment more to determine the ideal settings for different scenes?
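The two threshold tests described above can be sketched roughly as follows. This is a CPU-side illustration, not the actual shader: the function name, parameter names, and default values are assumptions chosen for the example, and the edge-direction search that a real FXAA pass performs afterwards is left out.

```python
def fxaa_like_blend_factor(m, n, s, e, w,
                           edge_threshold=1 / 8,
                           edge_threshold_min=1 / 16,
                           subpix_trim=1 / 4,
                           subpix_cap=3 / 4):
    """Return None if the pixel is skipped, else a sub-pixel blend factor.

    m is the luma of the center pixel; n, s, e, w are its four neighbors.
    """
    luma_min = min(m, n, s, e, w)
    luma_max = max(m, n, s, e, w)
    contrast = luma_max - luma_min

    # Local contrast test: low-contrast pixels are left untouched.
    if contrast < max(edge_threshold_min, luma_max * edge_threshold):
        return None

    # Sub-pixel test: how far the center deviates from its neighbor average,
    # relative to the local contrast.
    neighbor_avg = (n + s + e + w) / 4
    ratio = abs(neighbor_avg - m) / contrast

    # Trim small ratios to zero, rescale the rest, and cap the result.
    scale = 1.0 / (1.0 - subpix_trim)
    return min(subpix_cap, max(0.0, ratio - subpix_trim) * scale)

print(fxaa_like_blend_factor(0.5, 0.5, 0.5, 0.5, 0.5))  # flat area
print(fxaa_like_blend_factor(1.0, 0.0, 0.0, 0.0, 0.0))  # lone bright pixel
print(fxaa_like_blend_factor(1.0, 1.0, 1.0, 0.0, 1.0))  # straight edge
```

In this sketch a flat area is skipped, a lone bright pixel gets the maximum blend, and a clean straight edge gets no sub-pixel blur at all. Raising or lowering the thresholds shifts which pixels fall into each group, which is the tuning the post is talking about.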

Subpixel features can only be rendered as a single pixel mixing the intensities of the surfaces within it, meaning size is denoted by intensity. For example, consider a range of white dots, stars perhaps, on a dark background. If each of these dots is pure white, without any subpixel sampling resolution they'll either appear as white dots or not be rendered. With limitless supersampling, each point will appear as a pixel of brightness relative to its size.
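A small sketch of that point: one pixel containing a white square dot on black, sampled on a regular grid. The function name, the square dot shape, and the grid sampling pattern are assumptions made for the example.

```python
def pixel_brightness(dot_size, center_x, center_y, samples_per_axis):
    """Brightness of one pixel containing a white square dot on black.

    dot_size is the dot's side length as a fraction of the pixel width;
    center_x and center_y place the dot inside the unit pixel.
    """
    half = dot_size / 2
    hits = 0
    for iy in range(samples_per_axis):
        for ix in range(samples_per_axis):
            # Sample positions sit at the centers of a regular sub-pixel grid.
            x = (ix + 0.5) / samples_per_axis
            y = (iy + 0.5) / samples_per_axis
            if (center_x - half <= x < center_x + half
                    and center_y - half <= y < center_y + half):
                hits += 1
    return hits / samples_per_axis ** 2

# One sample per pixel: the dot is either fully white or missing.
print(pixel_brightness(0.25, 0.50, 0.50, 1))
print(pixel_brightness(0.25, 0.25, 0.25, 1))
# 16x16 supersampling: brightness tracks the covered area (0.25 * 0.25).
print(pixel_brightness(0.25, 0.25, 0.25, 16))
```

With a single sample the same dot flips between full white and nothing depending on where it lands, which is the flicker source. With many samples its brightness settles at its covered area.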

FXAA and other post effects that work on final colour data and reduce contrast will take a single white point of a star and reduce its contrast, making it conceptually smaller than the original object. This would mean you couldn't preserve pixel level features - if you deliberately wanted a full white pixel for a star, it'd get a little muted. There's also no solution for sub-pixel positioning of objects. The end result will be reduced contrast, reduced flicker, but also reduced fidelity.
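As a rough illustration of the muted star, the sketch below blends every pixel toward its 3x3 neighborhood average. This is a stand-in for a contrast-reducing post filter in general, not FXAA itself: the uniform blend strength of 0.75 and the function name are assumptions for the example.

```python
def soften(image, blend=0.75):
    """Blend each pixel toward the mean of its 3x3 neighborhood."""
    height, width = len(image), len(image[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp coordinates at the image border.
                    ny = min(max(y + dy, 0), height - 1)
                    nx = min(max(x + dx, 0), width - 1)
                    total += image[ny][nx]
            average = total / 9.0
            row.append((1.0 - blend) * image[y][x] + blend * average)
        out.append(row)
    return out

# A single full-white star on a black sky.
sky = [[0.0] * 5 for _ in range(5)]
sky[2][2] = 1.0

softened = soften(sky)
print(f"star before: {sky[2][2]:.2f}, after: {softened[2][2]:.2f}")
print(f"neighbor before: {sky[2][1]:.2f}, after: {softened[2][1]:.2f}")
```

The star drops to about a third of its original brightness and leaks a little light into its neighbors, so it flickers less but no longer reads as a crisp full-white pixel.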

I wonder if a surface couldn't have a property defined whether to apply FXAA to it or not? A mask could be rendered and the FXAA conditionally applied, so certain aspects like a skybox could be unfiltered.

forumaccount

Newcomer

Since the newer algorithm requires RGBL input (where the L is computed in a separate step) one assumes that masking could be accomplished by fabricating the L term across an area of the screen. I'm not sure how well it would work.
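A speculative sketch of that masking idea: overwrite the L term with a constant wherever the mask is set, so the local contrast test finds nothing to filter there. Everything here is hypothetical (the function names, the 0.125 threshold, and the tiny test image); it only mimics the contrast test on the CPU and is not based on the actual FXAA source.

```python
def local_contrast(luma, x, y):
    """Max minus min luma over the pixel and its four neighbors (clamped)."""
    height, width = len(luma), len(luma[0])
    taps = [luma[y][x]]
    for dx, dy in ((0, -1), (0, 1), (-1, 0), (1, 0)):
        nx = min(max(x + dx, 0), width - 1)
        ny = min(max(y + dy, 0), height - 1)
        taps.append(luma[ny][nx])
    return max(taps) - min(taps)

def fabricate_luma(luma, mask, fill=0.0):
    """Replace the L term with a constant wherever the mask is set."""
    return [[fill if mask[y][x] else luma[y][x]
             for x in range(len(luma[0]))]
            for y in range(len(luma))]

def filtered_pixels(luma, threshold=0.125):
    """Pixels that would pass the local contrast test and get filtered."""
    return {(x, y)
            for y in range(len(luma))
            for x in range(len(luma[0]))
            if local_contrast(luma, x, y) >= threshold}

# Columns 0-2 are sky with a star at (1, 1); columns 3-5 hold a geometry edge.
luma = [
    [0.0, 0.0, 0.0, 0.2, 0.2, 1.0],
    [0.0, 1.0, 0.0, 0.2, 0.2, 1.0],
    [0.0, 0.0, 0.0, 0.2, 0.2, 1.0],
]
mask = [[x < 3 for x in range(6)] for _ in range(3)]

before = filtered_pixels(luma)
after = filtered_pixels(fabricate_luma(luma, mask))
print(sorted(before - after))  # pixels the mask protected
print(sorted(after))           # pixels still filtered
```

In this toy case the star and most of its surroundings are left alone, while the geometry edge is still filtered. Pixels along the border of the mask can still see contrast against the unmasked side, which may be one reason it is unclear how well this would work in practice.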
