DirectX Ray-Tracing [DXR]

- Thread starter BRiT


- Tags

- the future is now

The nice part:

"Getting a “cinematic” 24fps with real-time raytracing still requires some serious hardware: it’s currently running on Nvidia’s ultra-high-end, four-GPU DGX-1 setup, which lists for $150,000. Even with that, some elements of the scene, like the walls, need to be rasterized rather than made fully reflective, Libreri told Ars. And the Volta technology that is key to powering this kind of performance isn't even available in consumer-grade GPUs below the $3,000+ Titan V, so don't expect this kind of scene to run on your home gaming rig in the near-term."

https://arstechnica.com/gaming/2018...just-how-great-real-time-raytracing-can-look/

Alessio1989

Regular

24fps is a crap experience...

Yeah, but still, imagine if ILM wanted to sell the experience? All you need is 1080p streaming to the home. Let the work be done server side and beam it to people's houses.

I wonder if such a business model could exist.. hmmm.

It's okay, just the same demo with a few extra features, imho. I'd like to see a different tech demo from 3DMark. I used those quite a bit, and my favourite is still Sky Diver.

In the new 3DMark demo and the Star Wars one there is a lot of the shiny-shiny effect (the so-called brilli brilli effect), like an excess of sparkling gems and chrome. Seed is nice because the ambient occlusion shading looks very natural, and so do the reflections.

However, the Star Wars video looks totally real; it looks like a movie.


You don't need a Volta for that.

God-damned assholes. Now I have the urge to throw a huge stack of money at a Volta card. Stupid Remedy and their stupid awesome games.

The new features are part of "RTX," a "highly scalable" solution that, according to the company, will "usher in a new era" of real-time ray tracing. Keeping with the acronyms, RTX is compatible with DXR, Microsoft's new ray tracing API for DirectX. To be clear, DXR and RTX will support older graphics cards; it's only the special GameWorks features that will be locked to "Volta and future generation GPU architectures."

DavidGraham

Veteran

Any info on the resolution? I mean, if they are rendering at 8K then this is mighty excessive, and it would warrant the use of such a system.

it's okay, just the same demo with a few extra features, imho.

It looks awful and janky, imo - a lot of what's going on there looks to me more like screen-space reflections and render-to-texture effects. It lacks that sense of scene consistency/persistence that you should get from tracing against the scene's full data structure.
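To make the distinction in the post above concrete, here is a minimal toy sketch, not how either demo actually works. It assumes a one-dimensional world, and every name in it (`Sphere`, `first_hit`, `screen_space_hit`, the `lamp` and `crate` objects, the view bounds) is hypothetical. It only illustrates why a screen-space lookup can miss things that a trace against the full scene would find.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Sphere:
    name: str
    x: float       # centre position along a single axis (toy 1D world)
    radius: float


def first_hit(origin: float, direction: int,
              spheres: Sequence[Sphere]) -> Optional[Sphere]:
    """Return the nearest sphere hit by a ray travelling along +x (1) or -x (-1)."""
    best, best_t = None, float("inf")
    for s in spheres:
        # Distance from the ray origin to the near surface of the sphere.
        t = (s.x - origin) * direction - s.radius
        if 0.0 <= t < best_t:
            best, best_t = s, t
    return best


def screen_space_hit(origin: float, direction: int, spheres: Sequence[Sphere],
                     view_min: float, view_max: float) -> Optional[Sphere]:
    """Same query, but limited to what the camera can currently see."""
    visible = [s for s in spheres if view_min <= s.x <= view_max]
    return first_hit(origin, direction, visible)


# Hypothetical scene: a mirror sits at x = 0 and the camera sees x in [-1, 5].
scene = [Sphere("lamp", 3.0, 0.5), Sphere("crate", -4.0, 1.0)]

# A reflection ray heading towards -x leaves the visible range.
full = first_hit(0.0, -1, scene)                      # finds the crate
ssr = screen_space_hit(0.0, -1, scene, -1.0, 5.0)     # finds nothing
print(full.name if full else None, ssr.name if ssr else None)
```

In this toy setup the two queries agree only while the reflected object stays on screen. Once it moves out of view, the screen-space version has nothing to reflect, which is one way the lack of persistence described above can show up.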

So that places real-time photorealistic graphics in consoles in about... 2030?? Bloody convincing though. Helps when your people are in armour!

But on the plus side, it may help make CG films and effects cheaper through quicker processes, or make lower-budget indie films easier to watch without wincing (there is some poor work out there, apparently due to constraints). Using the previous generation of Unity saved Oats Studio around 6 months of work on their ADAM CG short films.


Who knows. There have been claims of this kind before: back in 2011 it was allegedly already possible to run raytraced games in real time.

Why? Since they don't need to be rendered in real time? The merit is in the real-time stuff, WYSIWYG. That's where the authenticity is, imo.

I would think the bigger interest in this is for film projects.

Sure, but when you are on a budget or need flexibility, it will probably help?

This is what some film industry people said at Oats Studio for doing ADAM after the demo:

"In just five months, the Oats team produced in real-time what would normally take close to a year using traditional rendering. “This is the future of animated content,” declares CG supervisor Abhishek Joshi, who was CG lead on Divergent and Game of Thrones. “Coming from offline, ray-traced renders, the speed and interactivity has allowed us complete creative freedom and iteration speed unheard of with a non-RT workflow.”"

https://unity.com/madewith/adam#the-project

It is not the solution for blockbusters yet, but maybe it could open the door to better CGI effects for indie or cheaper films where resources are constrained *shrug*.


Why? Since they don't need to be rendered in real time? The merit is in the real-time stuff, WYSIWYG. That's where the authenticity is, imo.

Iteration times.

Yeah, that is what the quote I provided says as well.

That was CG supervisor Abhishek Joshi, who was CG lead on Divergent and Game of Thrones.

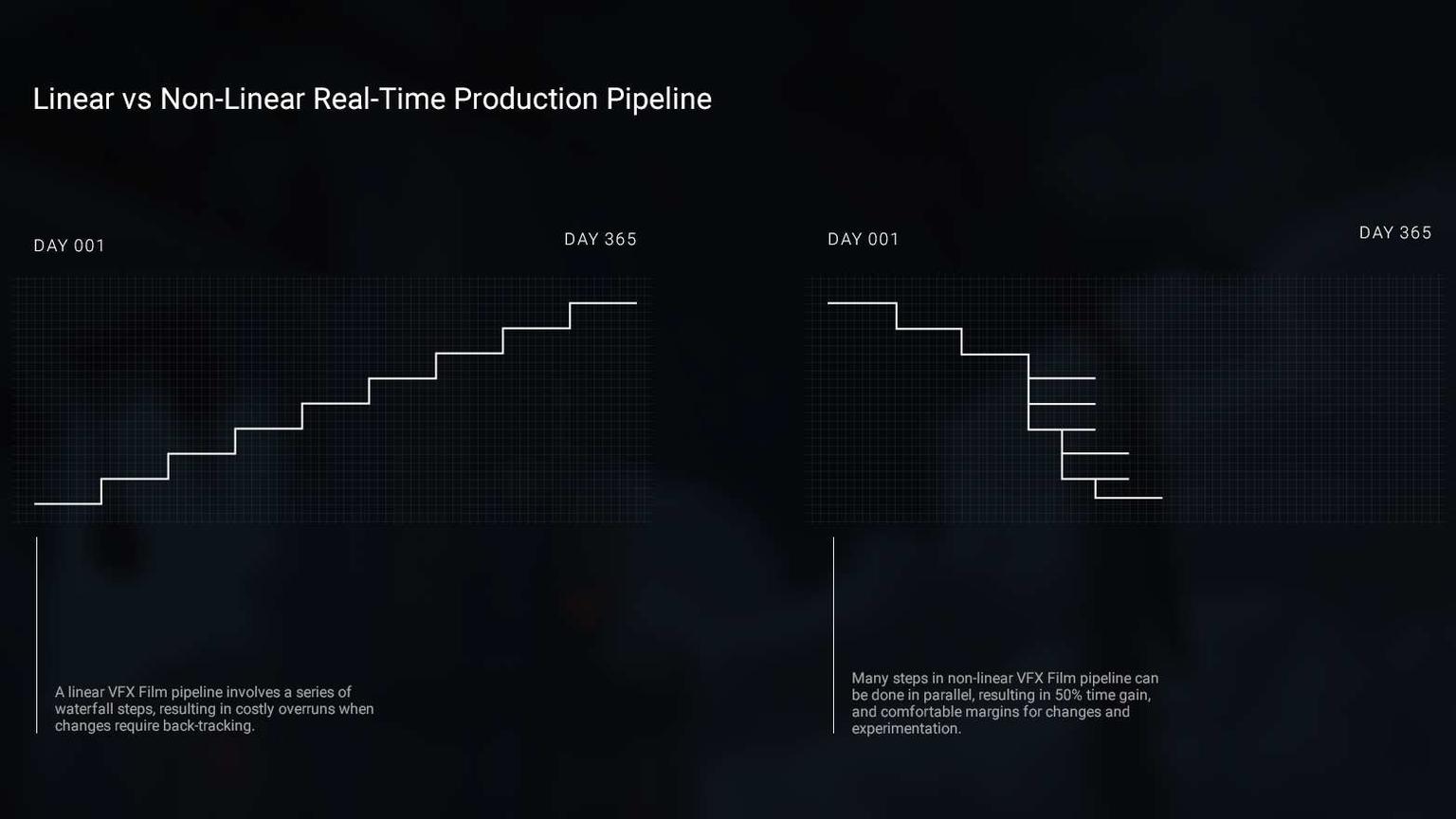

And also how they can break from a linear to a non-linear real-time production pipeline, which is part of the article linked in my earlier post.

Separately, I like their approach to facial animation, but overall, for the ADAM CG short films, they do rely heavily on Alembic support.
