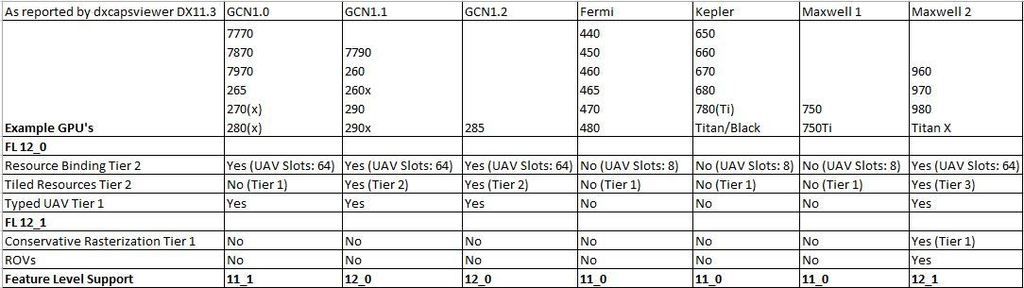

His reasoning is that because Mantle (which no one has seen and which is still under NDA) has a feature called bindless resources, AMD's DirectX 12 resource binding must immediately be Tier 3. I appreciate the correlation, but that doesn't change the fact that DxDiag reports only hardware-level features. If AMD considered 64 to be 'bindless', then he's flat out wrong, because 64 is a very specific hardware value. What if DxDiag shows a different value when Tier 3 shows up for the 390X? That's why I believe we should not jump to conclusions.

The best evidence I can find that all GCN parts support Tier 3 Resource Binding is this forum post:

http://forums.anandtech.com/showthread.php?p=37199507

It's just a post, but it does seem to come from a knowledgeable source, and later in the thread he posts links to the AMD source material (there is too much of it for me to go through right now).

Now, another reason I don't want to trust him as a source is that later on he mentions that OpenGL and Nvidia have been supporting bindless resources as an extension this whole time. Once again, API features and hardware features are not the same. DxDiag only shows the hardware features! We must not muddy the truth! We should not be sparking an Nvidia vs AMD war over an assumption. 64 is the value DxDiag reports, we need to report that, and that value is labelled as Tier 2. That very specific thread was locked because of what we are about to do (claiming one is Tier 3 and the other is Tier 2, versus Tier 2 for both MaxwellV2 and GCN). Except this time we have the proof sitting out in plain sight.
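To make the point about 64 concrete, here is a rough sketch (not anything DxDiag or Microsoft ships) that checks which resource binding tiers a fixed UAV cap is consistent with. It assumes the UAV limits from Microsoft's Direct3D 12 resource binding tier table: Tier 1 allows 8 UAVs on feature level 11.0 hardware and 64 on 11.1+, Tier 2 allows 64, and Tier 3 is bounded only by the descriptor heap. The names `FULL_HEAP`, `UAV_LIMITS_BY_TIER` and `max_tier_for_uav_limit` are made up for illustration.

```python
from typing import Optional

# Hypothetical sentinel: "no fixed cap, limited only by the descriptor heap".
FULL_HEAP: Optional[int] = None

# Assumed UAV limits per resource binding tier (from the D3D12 tier table).
UAV_LIMITS_BY_TIER = {
    1: {8, 64},      # 8 on feature level 11.0, 64 on 11.1+
    2: {64},         # fixed cap of 64
    3: {FULL_HEAP},  # full heap, no fixed cap
}


def max_tier_for_uav_limit(uav_limit: Optional[int]) -> int:
    """Return the highest tier whose assumed UAV limit matches uav_limit."""
    matching = [
        tier for tier, limits in UAV_LIMITS_BY_TIER.items() if uav_limit in limits
    ]
    if not matching:
        raise ValueError(f"UAV limit {uav_limit!r} does not match any tier")
    return max(matching)


print(max_tier_for_uav_limit(64))         # 2
print(max_tier_for_uav_limit(FULL_HEAP))  # 3
```

Under those assumed limits, a hard cap of 64 tops out at Tier 2; only a heap-bounded value maps to Tier 3. For the tier the driver actually reports, the released D3D12 API exposes `ResourceBindingTier` in `D3D12_FEATURE_DATA_D3D12_OPTIONS`, queried through `ID3D12Device::CheckFeatureSupport`.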
