I haven't kept up with all the talk about RDNA after the AMD 6800 launch, so I don't know if this is common knowledge already, but apparently RDNA 1.1 [0] exists and is very similar to RDNA 2. The author assumes it's what's used in the consoles, which would be interesting given all the RDNA 1.5 talk around the net even after RDNA 2 was mentioned at the console reveals.


[0] https://www.hardwaretimes.com/amd-r...rchitectural-deep-dive-a-focus-on-efficiency/

After reading the article, it seems more like speculation.

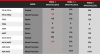

Below you can see the raw throughput of the RDNA 1, 1.1, and 2.0 CUs. Now, you’ll likely ask what’s RDNA 1.1? Well, AMD didn’t say but I reckon it’s what powers the PS5 and most likely the Xbox Series X. Regardless, there’s no difference between the two designs, and it’s the Render backend which has really undergone a makeover with RDNA 2.

RDNA 1.1 CUs add optional lower-precision int8/int4 support, while RDNA2 CUs have it by default (and, by extension, so does the PS5).

There are two key differences, however, between the 1.1 and 2.0 CUs: the latter adds ray accelerators and is redesigned/optimized to hit higher frequencies (1.3x) at the same power consumption. It's worth mentioning that in the Road to PS5 presentation, Cerny specifically mentioned RDNA2 CUs.

Now, the following 2 slides caught my attention in relation to the PS5:

Designed ground up for frequency, power and efficiency. All three goals are key to the PS5 design, so I think this is as good a confirmation as we're going to get that the PS5 supports the VRS hardware bits. I see no reason why Sony would forgo RB+ when it perfectly suits their high-frequency design. Time constraints don't seem likely, considering RB+ is part of the basic RDNA2 design. It's not an added feature; optimized VRS is just a nice side effect.

If anything, the delay MS mentioned could be related to them waiting to implement all RDNA2 API features on the dev kits that are certified to release launch games. It might just be a case of Sony not adding the API features to dev kits in time for launch games, but the hardware support is all there for future API/devkit iterations.

Seeing as how the PS5 is coming in hot, lacking features that the hardware otherwise supports (VRR, 8K, cold storage, internal expansion), it seems plausible that Sony prioritized basic API features for launch, with the missing features coming at a later date.

Variable Rate Shading and Sampler Feedback are primarily API-level features, rather than hardware ones, and as such work in a similar way across all GPU architectures.

XSX does have a unique customization, hence SFS: added hardware texture filters that fall back to lower LODs until the streamed assets arrive, so it's not obvious when there's a delay. Plain SF is something the PS5 should be able to support with an API update, if it doesn't already.
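To make the fallback idea above a bit more concrete, here is a rough sketch in Python of how I picture it: sample the wanted mip if it is in memory, otherwise drop to the nearest lower-detail mip that is, and record what was missing so the streamer can fetch it. Every name here (`StreamedTexture`, `sample_mip`, `stream_in`) is made up for illustration; this is not Microsoft's or AMD's API, and the real hardware filters also blend between levels, which this ignores. It assumes a higher mip index means lower detail.

```python
from dataclasses import dataclass, field


@dataclass
class StreamedTexture:
    """Hypothetical streamed texture: tracks which mip levels are in memory."""
    mip_count: int
    resident: set = field(default_factory=set)   # mip levels currently loaded
    feedback: set = field(default_factory=set)   # mips that were wanted but missing

    def sample_mip(self, wanted: int) -> int:
        """Return the mip level actually sampled for a wanted level."""
        # Clamp the request into the valid mip range.
        wanted = max(0, min(wanted, self.mip_count - 1))
        if wanted not in self.resident:
            # Record the miss so the streaming system knows what to load.
            self.feedback.add(wanted)
        # Walk toward lower detail (higher index) until a resident mip is found.
        for level in range(wanted, self.mip_count):
            if level in self.resident:
                return level
        raise LookupError("no resident mip at or below the wanted detail")

    def stream_in(self) -> None:
        """Pretend the streamer loaded everything recorded as missing."""
        self.resident |= self.feedback
        self.feedback.clear()


tex = StreamedTexture(mip_count=5, resident={4})
first = tex.sample_mip(1)    # mip 1 is not loaded yet, so a coarser mip is used
tex.stream_in()
second = tex.sample_mip(1)   # after streaming, the wanted mip is available
print(first, second)
```

The point is just that the frame never stalls on a missing mip; it shows a blurrier one for a moment and sharpens once the data lands.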

Infinity Cache seems to be designed to work exceptionally well with a high-frequency GPU design. I think this is the key to the PS5's high clocks and to it being neck and neck with the XSX.

I think there's a high possibility of anywhere from 32 to 64MB of IC on the PS5. They likely settled on a sweet spot of performance per die space. I'm thinking somewhere close to 50MB.

Other circumstantial evidence which gives more plausibility to this being the case:

Matt's comment on the DF face-off:

Imagine when people have more time to really optimize their code/data and hit these caches more often.

Cerny's talk about bringing data closer to where it needs to be

PS5's handling of alpha-heavy scenes in games

Finally, where I think XSX and PS5 diverge is mesh shading: MS went for the standard RDNA2 solution, which is simpler/easier to implement across DX12U devices, whereas Sony went for a custom-tailored solution that is harder/more complicated to implement but allows for greater control and flexibility, similar to NV's solution.