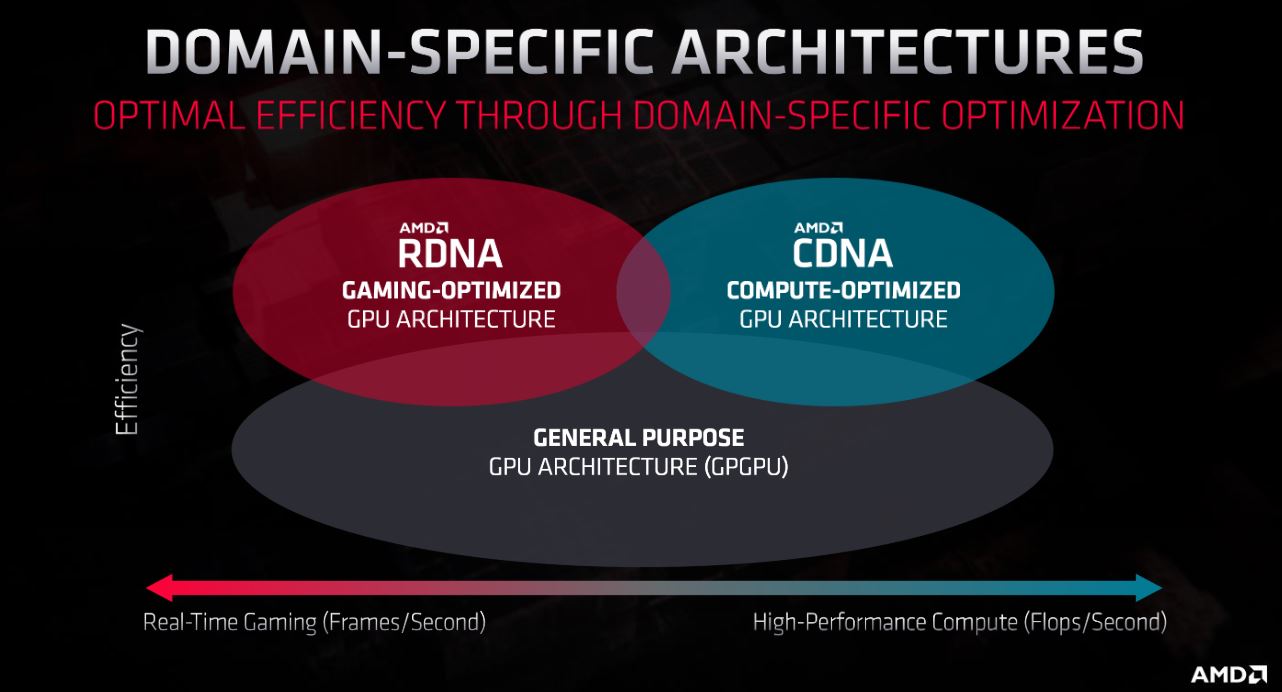

The conclusion makes sense to me too. We know that CDNA, in the form of Arcturus, is basically GCN (Vega, though I guess the G in the name would be a bit inappropriate here...) with some graphics bits stripped off. So CDNA2 could really be anything, and imho it would make a whole lot of sense if it turned out to be the same as some RDNA version (unless they actually stick with GCN). Despite the flashy diagram, I don't expect AMD to really develop two completely separate architectures. Separate chips, yes (although I have to say I'm still somewhat sceptical about the viability of even that approach, but apparently AMD is willing to go there), but there's no real evidence it's going to be a separate architecture in anything other than marketing name.

Some elements introduced with Navi that seem likely to benefit CDNA are the doubled L0 (RDNA) / L1 (GCN) bandwidth and an apparently more generous allocation for the scalar register file. The RDNA L1 cache is read-only, so compute loads with a lot of write traffic may fall outside its optimum; on the other hand, I'm not sure how Arcturus subdivides the GPU's broader resources. The larger number of CUs and the lack of a 3D graphics ring might point to it acting more like a set of semi-independent shader engines, each managed by a subset of the ACEs, and that sort of subdivision might still align with what Navi did with the hierarchy.

The longer cache lines could be a wrinkle in memory coherence, since RDNA's 128-byte cache granularity is now out of step with the CPU hierarchy's 64-byte lines. It can be handled with a little extra tracking, however.
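
To make the "extra tracking" concrete, here is a minimal sketch, assuming 128-byte GPU lines and 64-byte CPU lines, of a GPU line that keeps one dirty bit per 64-byte sector so a CPU snoop can be answered at CPU granularity. The class names (`SectoredLine`, `SectoredCache`) are hypothetical, and this is a toy model, not how AMD actually implements coherence.

```python
# Toy model (assumption): a 128-byte GPU cache line tracked as two 64-byte sectors,
# so coherence state can be kept at the CPU's 64-byte granularity.
GPU_LINE = 128  # assumed GPU cache-line size in bytes
CPU_LINE = 64   # assumed CPU cache-line size in bytes
SECTORS = GPU_LINE // CPU_LINE


class SectoredLine:
    """One GPU cache line with a dirty bit per CPU-sized sector."""

    def __init__(self, base: int) -> None:
        self.base = base                # line-aligned base address
        self.dirty = [False] * SECTORS  # one dirty bit per 64-byte sector

    def write(self, addr: int) -> None:
        self.dirty[(addr - self.base) // CPU_LINE] = True

    def dirty_cpu_lines(self) -> list[int]:
        """CPU-granularity addresses that would need write-back on a flush."""
        return [self.base + i * CPU_LINE for i, d in enumerate(self.dirty) if d]


class SectoredCache:
    def __init__(self) -> None:
        self.lines: dict[int, SectoredLine] = {}

    def write(self, addr: int) -> None:
        base = addr - addr % GPU_LINE
        self.lines.setdefault(base, SectoredLine(base)).write(addr)

    def on_cpu_snoop(self, cpu_addr: int) -> bool:
        """True only if the 64-byte CPU line targeted by the snoop is dirty here."""
        base = cpu_addr - cpu_addr % GPU_LINE
        line = self.lines.get(base)
        if line is None:
            return False
        return line.dirty[(cpu_addr - base) // CPU_LINE]
```

Without the per-sector bits, a snoop for `0x1000` would have to treat the whole 128-byte line as dirty after a write to `0x1040`, which is the kind of false sharing the extra tracking avoids.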

Whether the WGP arrangement helps or hinders (bugs aside) may need further vetting. WGP mode seems to help heavier shader types, but per the RDNA whitepaper there are tradeoffs, such as shared request queues, that might be less helpful for compute.

Some elements, such as the current formulation of Wave64, may not be full replacements for native 64-wide wavefronts, as there are restrictions in cases where the execution mask is all zero, which is a failure case for RDNA. RDNA loses some of GCN's skip modes, drops some of the cross-lane options, and drops some branching instructions. On the other hand, it does have some optimizations that automatically skip some instructions if they are predicated off.
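
A rough way to picture Wave64 on RDNA's 32-wide SIMDs is one instruction issued as two 32-lane halves. The sketch below is a toy model, assuming that a half whose slice of the EXEC mask is zero is simply skipped; `wave64_passes` is a hypothetical name, and this is not a description of the real issue logic.

```python
# Toy model (assumption): a Wave64 instruction runs on a 32-lane SIMD as two halves,
# and a half whose 32-bit slice of EXEC is all zero is not issued.
HALF_MASK = 0xFFFF_FFFF


def wave64_passes(exec_mask: int) -> list[str]:
    """Return which halves of a 64-bit EXEC mask would be issued in this model."""
    lo = exec_mask & HALF_MASK          # lanes 0..31
    hi = (exec_mask >> 32) & HALF_MASK  # lanes 32..63
    passes = []
    if lo:
        passes.append("lo")
    if hi:
        passes.append("hi")
    return passes
```

The toy returns an empty list for an all-zero mask and treats that as a harmless no-op. Per the point above, that all-zero corner is exactly where real RDNA Wave64 has restrictions, so a compiler cannot assume it behaves this benignly.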

CDNA's emphasis on compute, and Arcturus potentially having much more evolved matrix instructions and hardware, could make the case for a different kind of Wave64, or a shift in emphasis where keeping the prior architectural width is preferred.

Usually HPC is less concerned with backwards compatibility, but perhaps AMD's tools or existing code may still tend towards the old style?

Physically, the clock-speed emphasis may be partially blunted. Arcturus-related code commits seem to give up some of the opportunistic up-clocking that graphics products use, the argument being that the broader compute hardware would wind up throttling anyway. If the upper clock range is less likely to be used, perhaps the implementation choices would emphasize leakage and density rather than spending transistors and pipeline stages on the "multi-GHz" range AMD seems to be claiming for RDNA2.
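
The throttling argument can be put into a back-of-the-envelope model. This is a sketch under loudly stated assumptions: only dynamic power is counted (P ∝ C·V²·f), voltage is assumed to scale roughly linearly with frequency so per-CU power grows with f³, and the power cap and per-CU wattage are hypothetical inputs, not AMD numbers. Leakage, which the paragraph raises as a design priority, is deliberately left out.

```python
# Toy power model (assumptions): dynamic power only, V roughly proportional to f,
# so each CU draws w_per_cu_at_1ghz * f**3 watts with f in GHz. Inputs are hypothetical.


def sustained_clock_ghz(power_cap_w: float, n_cu: int, w_per_cu_at_1ghz: float) -> float:
    """Highest clock (GHz) the power cap allows under the f**3 model."""
    return (power_cap_w / (n_cu * w_per_cu_at_1ghz)) ** (1.0 / 3.0)


def headroom_used(target_ghz: float, power_cap_w: float, n_cu: int,
                  w_per_cu_at_1ghz: float) -> float:
    """Fraction of a design's target clock that the cap lets it sustain (max 1.0)."""
    sustained = sustained_clock_ghz(power_cap_w, n_cu, w_per_cu_at_1ghz)
    return min(1.0, sustained / target_ghz)
```

With a hypothetical 300 W cap and 2.5 W per CU at 1 GHz, 60 CUs could sustain about 1.26 GHz while 120 CUs sustain 1.0 GHz, so in this model doubling the CU count costs about 21% of the clock (a factor of 2^(-1/3)). A wide chip that never reaches its top clock bin has little use for pipeline stages spent chasing it.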

On top of all that, there seem to be errata for Navi that may be particularly noticeable for compute and might have delayed the introduction of anything RDNA-like into the HPC development pipeline.

I'm curious what AMD has managed to cull from Arcturus. The driver changes note that it has no 3D engine, for example, but there are still references to setting up values for the geometry front ends and primitive FIFOs. Also unclear is what this means for the command processor, since besides handling graphics it is usually the device the system uses to set up and manage the overall GPU. Removing it doesn't seem to gain much beyond a small rectangle in the middle of the chip. If it is gone or somehow re-engineered, perhaps that has more to do with some limitation in interfacing with a much larger number of CUs than with the area cost of a microcontroller.

Because, in case you're interested in a serious answer, Vega 20 has 43.2 GFLOPS of compute per mm² (edit: corrected from 43.4), while Navi 10 has 40.4, both in their fastest incarnations. And that is with Vega 20's insanely wide memory controllers and half-rate DP, neither of which is free in terms of die space.
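
The density comparison is simple arithmetic: peak FP32 throughput is CUs × 64 lanes × 2 FLOPs per FMA × clock, divided by die area. A minimal sketch follows; the Navi 10 inputs (40 CUs, 1980 MHz boost for its fastest card, 251 mm²) are my assumptions rather than figures given in the post, and I haven't tried to reproduce the Vega 20 number because the exact clock and die-area inputs behind it aren't stated.

```python
def fp32_gflops(n_cu: int, clock_mhz: float, lanes_per_cu: int = 64) -> float:
    """Peak FP32 GFLOPS, counting one FMA (2 FLOPs) per lane per clock."""
    return n_cu * lanes_per_cu * 2 * clock_mhz / 1000.0


def gflops_per_mm2(n_cu: int, clock_mhz: float, die_mm2: float) -> float:
    """Peak FP32 compute density over the whole die."""
    return fp32_gflops(n_cu, clock_mhz) / die_mm2


# Assumed Navi 10 inputs (not from the post): 40 CUs, 1980 MHz, 251 mm^2.
print(round(gflops_per_mm2(40, 1980, 251), 1))  # prints 40.4
```

Both GCN and RDNA CUs have 64 FP32 lanes, so `lanes_per_cu` stays at its default for either chip. The figure is over the whole die, which is why memory-controller and IO area weigh on it.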

And with Vega, compute applications work, whereas Navi still has issues. You don't want your next supercomputer installation with 100k cards to choke on the first day.

One thing to note about Vega 20's wide memory controllers: while they are wide, Navi 10's GDDR6 controllers are physically large. From rough pixel counting on Fritzchens Fritz's die shots of the two chips, Navi's memory sections have an area in the same range as Vega 20's, which would have a corresponding impact on FLOPS/mm². My initial measurements at least seem to indicate that Navi 10's are noticeably larger. Vega 20 is the larger chip, which usually means the overhead of miscellaneous blocks and IO is proportionally lower than what smaller dies must contend with.
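
The pixel-counting method amounts to scaling a block's share of the die shot by the known die area, then optionally backing that area out of the FLOPS/mm² figure. A minimal sketch, assuming the image is cropped to the die edge and uniformly scaled; the function names and the pixel counts in the usage line are hypothetical, not measurements from Fritzchens Fritz's shots.

```python
def block_area_mm2(block_px: float, die_px: float, die_mm2: float) -> float:
    """Convert a block's pixel area on a die shot to mm^2 via the die's known area."""
    return die_mm2 * block_px / die_px


def density_excluding(gflops: float, die_mm2: float, excluded_mm2: float) -> float:
    """GFLOPS per mm^2 after removing blocks such as memory PHYs and IO from the area."""
    return gflops / (die_mm2 - excluded_mm2)


# Hypothetical pixel counts: memory blocks cover 120,000 of 800,000 die pixels.
mem_mm2 = block_area_mm2(120_000, 800_000, 251.0)
print(round(mem_mm2, 2))  # prints 37.65
```

Comparing `density_excluding` for both chips gives an idea of how much of the gap comes from memory and IO rather than the shader arrays, with the caveat that block boundaries on a die shot are fuzzy.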

If the references to a new architectural register type and matrix hardware are what they seem to me, Arcturus is going to see a large rise in FLOPS/mm², with the size of the gain depending on the precision and granularity chosen. That wouldn't be an apples-to-apples comparison, though.

One thing I did find recently is a discussion in the documentation for Mesa's ACO compiler of certain issues that code generation faces on AMD GPUs, which includes some additional details about some of the bug flags for RDNA.

https://gitlab.freedesktop.org/mesa...18f4a3c8abc86814143bf/src/amd/compiler/README

It's not just hardware bugs (it includes some unflattering documentation issues) and not just RDNA, but RDNA does have a list of hardware problems. I think some of those would be more objectionable for compute, which may be one reason the consoles seem to have gone for a more fully baked RDNA2.

(edit: fixed some grammar)
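
Bug flags like these are typically handled by compiler passes that pad or reorder instructions around a hazardous pair. Below is a toy sketch of that idea in the abstract: it guarantees a minimum number of instructions between a producer that writes a register and a dependent consumer that reads it, padding with `s_nop`. The instruction kinds, the producer/consumer pair and the window size are hypothetical; this is not one of the actual rules listed in the Mesa README, nor ACO's implementation.

```python
from dataclasses import dataclass


@dataclass
class Inst:
    op: str                          # mnemonic, used here only as a label
    kind: str                        # hypothetical class: "VALU", "VMEM", "NOP", ...
    writes: frozenset = frozenset()  # registers written
    reads: frozenset = frozenset()   # registers read


def insert_nops(prog: list[Inst], producer: str, consumer: str, window: int) -> list[Inst]:
    """Ensure at least `window` instructions separate a `producer` that writes a
    register from a later `consumer` that reads it, padding with s_nop.
    The rule itself is hypothetical."""
    out: list[Inst] = []
    for inst in prog:
        if inst.kind == consumer:
            recent = out[max(0, len(out) - window):]
            # Nearest instruction first; dist == 1 means it sits right before `inst`.
            for dist, prev in enumerate(reversed(recent), start=1):
                if prev.kind == producer and prev.writes & inst.reads:
                    # dist - 1 instructions already separate them; pad up to `window`.
                    out.extend(Inst("s_nop", "NOP") for _ in range(window + 1 - dist))
                    break
        out.append(inst)
    return out
```

Checking the nearest producer first means the first match needs the most padding, and once it is satisfied any older producer in the window is automatically far enough away.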