Install the app

How to install the app on iOS

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

AMD: Pirate Islands (R* 3** series) Speculation/Rumor Thread

- Thread starter iMacmatician

- Start date

-

- Tags

- amd

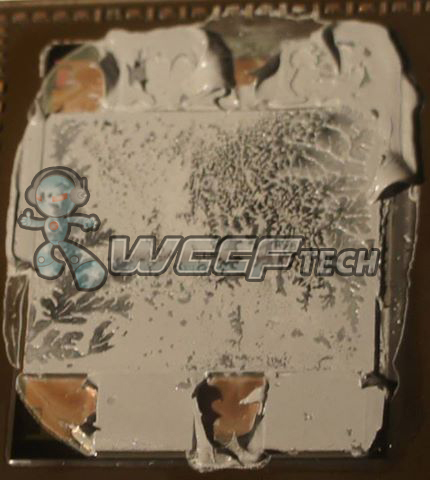

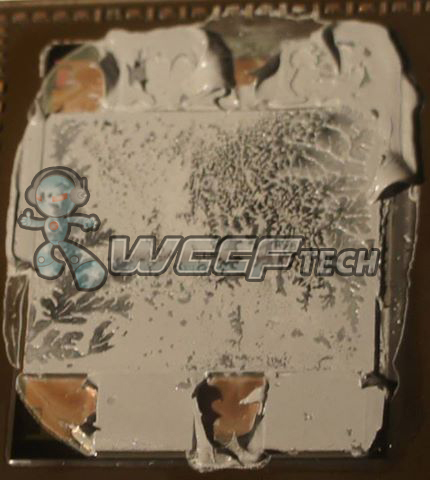

That the pictured die was visible after removing the water block, rather than being obscured by a heatspreader is interesting.

The memory stacks appear to be less tall than the GPU, but unless they are half the height or additional thinning is on the way, the picture may change once the double capacity stacks in later products come out.

Memory might be content with the thick dollop of interface material on top we see in the picture, but the GPU would be less so. Having a base plate's full pressure on the stacked die may also be iffy.

The water cooled edition might also be priced well enough and in reasonable quantity to justify the time put into more carefully mounting the cooler on the package, versus the variance the air cooled cards tolerate.

The memory stacks appear to be less tall than the GPU, but unless they are half the height or additional thinning is on the way, the picture may change once the double capacity stacks in later products come out.

Memory might be content with the thick dollop of interface material on top we see in the picture, but the GPU would be less so. Having a base plate's full pressure on the stacked die may also be iffy.

The water cooled edition might also be priced well enough and in reasonable quantity to justify the time put into more carefully mounting the cooler on the package, versus the variance the air cooled cards tolerate.

Quick and sloppy, I'm getting 23x25.5 = ~586mm2.

Which seems like the 576mm2 I heard from a few weeks ago was pretty accurate.

Edit- Was using AMD's 5x7mm for a HBM stack.

Hynix has 5.48x7.29mm in their HBM slides.

Which seems like the 576mm2 I heard from a few weeks ago was pretty accurate.

Edit- Was using AMD's 5x7mm for a HBM stack.

Hynix has 5.48x7.29mm in their HBM slides.

Last edited:

gamervivek

Regular

7.29 has it reaching massive proportions of ~700mm2,

http://forums.anandtech.com/showthread.php?t=2430478&page=71

http://forums.anandtech.com/showthread.php?t=2430478&page=71

Relooking at the HBM slides the 5.48x7.29mm is for the base die, not the exposed die ontop.7.29 has it reaching massive proportions of ~700mm2,

http://forums.anandtech.com/showthread.php?t=2430478&page=71

I think my number is closer, especially since reticle limit is somewhere just north of 600mm2.

I think there are some patents for tile-based compression, where the framebuffer is divided up into squares, deltas are generated from that data, the tile is subdivided, and those subdivisions are run through the process, those outputs are run through the process and so on, generating a hierarchy of compressed data and encoding information.Anyone knows how this color compression scheme works anyhow? As it has to be lossless, and you must surely not want it to be too cumbersome to extract the value of an individual pixel either so you don't end up having to constantly fetch more data than you would without compression...how would you go about implementing something like this?

The highly regular nature of the encoding allows for more parallel hardware, with a more balanced computational demand between encoding and decoding. ROPs in particular already operate in terms of tiles of pixels, and there are already hierarchical structures for handling depth that have some similarity to this.

Whether the output is always much smaller than the uncompressed format is unclear, particularly if the variability in compression means that memory allocations need to be conservative. That the higher levels of the hierarchy are loaded first, and stay on chip more, can allow bandwidth to be saved by skipping accesses.

3dilettante and a few other hardware oriented guys (sorry forgot who) were discussing the GCN tessellator and geometry shader design in another thread year or two ago. The conclusion of that discussion was that the load balancing needs improvements (hull, domain and vertex shader have load balancing issues because of the data passing between these shader stages). These guys could pop-in and give their in-depth knowledge. I have not programmed that much for DX10 Radeons (as consoles skipped DX10). GCN seems to share a lot of geometry processing design with the 5000/6000 series Radeons.

To requote this, is this the thread you are referencing?

https://forum.beyond3d.com/threads/...eries-speculation-rumour-thread.54117/page-88

I think people misunderstood my definition of "cache". I was not talking about spilling registers to L1 or implementing a caching scheme similar to the memory caches. Let me explain what I meant.Sorry, I don't think it's a good idea from a hardware designer point of view.

While registers and cache memory serve the same purpose, i.e. provide fast transistor-based memory to offset slow capacitor-based RAM, they belong to very different parts of the microarchitecture and use very different design techniques to implement.

Registers are part of the instruction set architecture, they are much closer to the ALU and are directly accessible from the microcode, so they are "hard-coded" as transistor logic. Caches are a part of the external memory controller, accessible through the memory address bus only, designed in blocks of

"cells", and they are transparent to the programmer and not really a part of the ISA.

The size of a register file (or any on-chip memory) determines how near to the execution unit it can be. A bigger register file would thus increase the pipeline latency (or reduce the clock ceiling, or both). It would also increase the power usage, as the data needs to transfer for a longer distance. In addition to this, each execution unit is not directly connected to all the registers by wires. In a general case N to M connection routing scales logarithmically (this adds latency and extra transistors = extra cost + power).

So it would definitely be a big improvement to have a smaller register file closer to the execution units. It would reduce power, reduce the routing logic size (additional reductiosn to power and complexity). GPU register files are huge, and the registers are statically allocated by the compiler. In shaders with coherent branches (the most common branch type in optimized production quality shaders), there are (by definition) at least some registers that are not used at all. You need to always allocate enough registers for the worst case execution path. Another easy example is lighting. You have 3 registers to accumulate diffuse.rgb and 3 registers to accumulate specular.rgb. These registers are accessed maybe once in 40 cycles. The shader compiler also likes to move memory->register loads as early as possible and the usage as late as possible to hide the latency of memory stalls. This means that at any point of the shader execution there is a huge amount of registers used just for storage (register is allocated to keep the memory load result until it is actually used, possibly 100+ instructions later).

Only a small part of the registers are actually used right now. There is no performance penalty in moving other registers further away from the execution units in a bigger register file. Automated caching is likely not as power efficient as compile time static register cache logic. Life time and usage points of each register are known at compile time, allowing the shader compiler to issue register moves to the small (fast) register file before the register is actually used. Memory loads should end up in the slow register file (unless immediately used). This kind of two-tier model allows a much bigger total register file size. It would definitely be a good idea for GPUs, since a big percentage of registers are used for storage (and some are not even used at all, since register allocation to branches is static).

A good paper about this topic. They claim big (54%) power savings:

http://dl.acm.org/citation.cfm?id=2155675

https://lh4.googleusercontent.com/-...AAALJs/62rqQEaLQ-g/w2178-h1225-no/desktop.jpg

1002-67C8, 1150MHz core freq, 8GB HBM.

source: http://www.chiphell.com/forum.php?mod=redirect&goto=findpost&ptid=1302682&pid=29101917

Isn't the device ID completely wrong for Fiji?

GPU register files are just big chunks of SRAM with special hardcoded (by the compiler) adressing. AFAICT, Sebbi is pondering a two level such structure, one small+fast chunk and one big+slow chunk.

An ALU bound kernel would live mostly in the small+fast (and more power efficient) register segment a kernel peppered with gather/scather would live primarily in the big+slow segment. Individual warps/wavefronts could migrate back and forth (by the compiler).

Makes sense to me, though it adds complexity to the compiler and scheduler.

BTW. Another advantage of HBM is significantly reduced latency. That'll go some way to relieve register pressure.

Cheers

An ALU bound kernel would live mostly in the small+fast (and more power efficient) register segment a kernel peppered with gather/scather would live primarily in the big+slow segment. Individual warps/wavefronts could migrate back and forth (by the compiler).

Makes sense to me, though it adds complexity to the compiler and scheduler.

BTW. Another advantage of HBM is significantly reduced latency. That'll go some way to relieve register pressure.

Cheers

D

Deleted member 2197

Guest

Latest rumour ...

http://www.tweaktown.com/news/45602...atercooled-hbm-based-flagship-card/index.html

Instead, the Radeon R9 Fury X will be the flagship video card, a watercooled part based on the Fiji XT GPU. Under that, we'll have the Radeon R9 Fury, which should be based on the Fiji PRO architecture, with an entire restack of current cards. Under these two new High Bandwidth Memory-powered video cards we'll have the Radeon R9 390X, Radeon R9 390, Radeon R9 390, R9 380, R7 370 and R7 360.

The Radeon R9 Fury X will be a reference card with AIBs not able to change the cooler, but TweakTown can confirm that it will be the short card that has been spotted in the leaked images. The Radeon R9 Fury will see aftermarket coolers placed onto it, so we should see some very interesting cards released under the Radeon R9 Fury family.

The Radeon R9 Fury X has a rumored MSRP of $849, making it $200 more than the NVIDIA GeForce GTX 980 Ti, but $150 cheaper than the Titan X. The Fury X branding is a nice change from AMD, but it does sound awfully close to the Titan X with that big, shiny, overpowering 'X' in its name, doesn't it

http://www.tweaktown.com/news/45602...atercooled-hbm-based-flagship-card/index.html

Someone went slightly overboard with the thermal grease there

http://wccftech.com/exclusive-amd-radeon-fury-fiji-gpu-die-pictured/

it does sound awfully close to the Titan X with that big, shiny, overpowering 'X' in its name, doesn't it

hm.... Better or worse than FurryZ ?

FurryR

FurryZX

Someone went slightly overboard with the thermal grease there

LDS is optimized for different purposes. LDS can do (fast) atomics by itself (accumulate, etc). LDS reads and writes have a separate index for every thread (64 threads in a wave). This is 64 way scatter/gather. Register load & store (64 lanes at a time guaranteed at sequential addresses) would be much simpler to implement in hardware. When scatter & gather are not needed, the register file could be even split by lanes (or groups of 16 lanes) if that increases the register locality. Emulating a bigger register file by LDS is both slower and costs much more power than having a native support.@sebbbi That cache is the LDS for a lot of variables, now already.

But you are right that with the current GCN hardware, it is sometimes a good idea to offload seldom used registers to LDS. However offloading just a single 32 bit register in a 256x1x1 threadgroup already costs 1024 bytes of LDS. You can't afford to offload many registers (or you lose occupancy). In some cases you only need to offload one 32 bit register per wave (64 thread). In this case offload to LDS is actually useful. However if the compiler was better, it would automatically offload this to a SGPR register (= much faster and no LDS needed).

Love_In_Rio

Veteran

AMD Radeon Fury X currently slower than Nvidia 980Ti?:

http://wccftech.com/amd-radeon-r9-fury-fiji-xt-gpu-slower-gtx-980-ti/

If so, a pity. They should have changed to GCN 2.0 time ago...You can´t be with the same architecture for more than four years. Maxwell 2 is so good/efficient compared to GCN...(GCN 1.1,1.2 or whatever, they are basically the same). This could be like R600 situation again (then however they introduced a new architecture and it served to improve it greatly in the new node) but without any major architecture changes (well, at least they are testing the new HBM memory...).

http://wccftech.com/amd-radeon-r9-fury-fiji-xt-gpu-slower-gtx-980-ti/

If so, a pity. They should have changed to GCN 2.0 time ago...You can´t be with the same architecture for more than four years. Maxwell 2 is so good/efficient compared to GCN...(GCN 1.1,1.2 or whatever, they are basically the same). This could be like R600 situation again (then however they introduced a new architecture and it served to improve it greatly in the new node) but without any major architecture changes (well, at least they are testing the new HBM memory...).

Last edited:

But you are right that with the current GCN hardware, it is sometimes a good idea to offload seldom used registers to LDS.

I was more thinking about the graphics-pipe where LDS is not exposed to us, but the compiler uses it as a "cache" and communication channel. We even got a second level of that, the GDS, but not on DirectX unfortunately.